Imagine delivering a new feature, only to realize later that it has unresolved bugs and lacks proper documentation. You risk delivering a poor user experience or even facing rejection from app stores. However, such scenarios are avoidable with DoD (Definition of Done) and Kanban. DoD and Kanban are some of the most useful tools in Agile software development.

These two concepts are incredibly effective for product management, helping teams create good products on time and within budget. Although different, both concepts play interrelated roles in guiding teams toward clear goals while improving processes and promoting transparency.

While the DoD dictates the goal of a task or feature being completed, Kanban helps map out the workflow so you can reach those goals efficiently. In this article, we’ll take a deep dive into both of these concepts. We’ll explore how to design an efficient DoD, visualize it with Kanban, and track success in between. This guide will also discuss the common problems you’ll likely face and how to scale DoD and Kanban policies in larger Agile projects.

What Is the Definition of Done (DoD)?

The DoD, or Definition of Done, is one of the most important ideas in project management and agile methodology. It’s an agreement across a team about when a product release or a user story is complete. An established DoD makes sure that everyone in the team understands the criteria that each piece of work must meet to be considered “done” and ready for review, release, or handoff.

The Definition of Done is typically developed by the entire team. This may include the product owners, developers, and testers. Combining efforts in this way means that the DoD considers all the quality and functionality requirements that each role is concerned with.

The development team usually sets the DoD in the early phases of a project but may revisit it at intervals to keep up with changes in the project. By engaging everyone in the process, the DoD becomes a baseline for quality. It gets everyone on the same page with regard to what counts as “done”. In fact, 93 percent of software development professionals consider a DoD valuable for assuring product quality.

Definition of Done Examples

The following are practical examples of the Definition of Done (DoD) in Agile environments:

1. Software Development Task

- Code reviewed, passed linting, and integrates with existing features.

- Automated tests written, executed, and passed.

- API documentation updated.

2. UI/UX Design Task

- Design approved by the product owner.

- Usability testing completed; key feedback addressed.

- Meets accessibility standards and is added to the design system.

3. QA/Testing Task

- All test cases executed successfully.

- Critical bugs resolved; regression testing completed.

4. Bug Fix Task

- Root cause documented; code fix peer-reviewed.

- Fix passes unit and regression tests.
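The examples above can also be captured as data, so a task is checked against the same list every time. The following is a minimal sketch, not a prescribed format: the dictionary keys, the item wording, and the helper names `missing_criteria` and `is_done` are all assumptions made for illustration.

```python
# Hypothetical DoD checklists keyed by task type, mirroring two of the examples above.
DEFINITION_OF_DONE = {
    "software_development": [
        "code reviewed",
        "linting passed",
        "integrates with existing features",
        "automated tests written and passed",
        "API documentation updated",
    ],
    "bug_fix": [
        "root cause documented",
        "fix peer-reviewed",
        "unit and regression tests passed",
    ],
}


def missing_criteria(task_type, completed):
    """Return the DoD items for task_type that are not yet in `completed`."""
    required = DEFINITION_OF_DONE[task_type]
    return [item for item in required if item not in completed]


def is_done(task_type, completed):
    """A task counts as done only when no DoD item is missing."""
    return not missing_criteria(task_type, completed)


done_so_far = {"root cause documented", "fix peer-reviewed"}
print(missing_criteria("bug_fix", done_so_far))  # ['unit and regression tests passed']
print(is_done("bug_fix", done_so_far))           # False
```

Keeping the checklist in one structure like this makes it easy to publish the same list in a wiki, a board template, or an automated check.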

Team Principles of Definition of Done

The Definition of Done (DoD) is built upon a set of principles that keep teams aligned on quality and maintain coherence across the workflow. These principles include:

1. Transparency

Transparency starts with setting realistic, measurable standards for each task type. This might be coding, testing, documentation, or deployment. The DoD is stored in an internal repository, like a team wiki or project management system, and it is the single point of truth to which all team members have access. With the detailed project requirements in a central repository, the DoD provides a stable, consistent foundation for the team’s activity and needs.

The team reinforces transparency by creating regular checkpoints within their workflows to determine if the work satisfies DoD standards before proceeding. It could be through peer reviews or testing phases, where the team members validate DoD compliance.

2. Quality Assurance

QA is also an integral part of DoD. Each task has to pass a quality requirement specified by the DoD to become marked done. These quality metrics are often mandatory code reviews, automated tests, and performance checks done by software development teams.

To manage these requirements, teams use automated testing frameworks (e.g., JUnit for Java, NUnit for .NET) and static code analysis tools (e.g., SonarQube) that analyze code continuously. Continuous integration and delivery (CI/CD) pipelines can also run these DoD checks automatically so that no task is marked complete until it meets the agreed quality requirements. Teams also have verification phases where specific people review the work to see if it meets all requirements.

3. Shared Understanding

Shared understanding is developed by creating the DoD together, with all team members—developers, testers, product owners, and sometimes stakeholders. About 30 percent of software development professionals agreed that this is mostly the standard practice. Team members can collaborate on the checklist in meetings where DoD standards are discussed and refined to meet team requirements and the demands of quality and completion of each role. For new team members, onboarding sessions show them the DoD so they’re clear on each requirement and how it can affect them.

To build more common ground, some teams also demand cross-role sign-offs for tasks. For instance, a completed feature might require sign-off from a developer, a tester, and a product owner who all ensure that the task passes DoD. This approach makes sure all roles agree that a job is really complete. Thus, it eliminates uncertainty and creates a collective responsibility for quality.

4. Adaptability and Continuous Improvement

It’s important to be flexible and keep on improving if you want the DoD to continue to work for you. Scrum teams understand that the DoD must grow with the team as they learn and develop the project. In retrospectives, the team goes through the DoD to find any weak points or improvements. If a pattern emerges — defects due to bad testing, for example — the team might wish to add a test requirement to the DoD.

Changes in the DoD can happen at different paces — rather than overhauling the DoD once and for all, teams may include or modify one criterion and see how it changes the way they work. So, for instance, if the team thinks security should be taken more seriously, they can put a security review checkpoint in place first for a few high-priority tasks, and ramp up when the initiative works. Some teams also apply DoD versions at different levels, with multiple tiers to allow for the different scenarios.

Top Challenges Teams Face with the Definition of Done

As good as a DoD can be, teams may struggle to use it effectively. Let’s look at some of the reasons why this happens:

1. Vague or Incomplete Criteria

When a DoD isn’t accurate, teams get confused about the meaning of “done” and this causes inconsistent quality in deliverables. Vague criteria leave room for subjective judgment, which means one team member might view a task as completed and another might not.

This confusion causes unfinished or sub-par work to be sent to the next stage, where it causes delays. Without clear guidelines, the team might miss critical steps, such as testing or documentation, and leave them incomplete until defects appear.

To address this issue, you must use specific, measurable terms. For example, instead of saying “Code must be tested,” specify, “Code must pass 100% of unit tests and meet 95% test coverage.”
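A measurable criterion like this can be turned into a simple yes/no check. Here is a small sketch, assuming the test counts and coverage percentage are already available from your test tooling; the function name and the `min_coverage` parameter are hypothetical.

```python
def meets_test_criteria(tests_passed, tests_total, coverage_percent,
                        min_coverage=95.0):
    """Check the example criterion: every unit test passes and coverage
    is at least `min_coverage` percent."""
    if tests_total == 0:
        # Assumption: a task with no tests cannot satisfy "code must be tested".
        return False
    all_passed = tests_passed == tests_total
    return all_passed and coverage_percent >= min_coverage


print(meets_test_criteria(120, 120, 96.2))  # True
print(meets_test_criteria(119, 120, 99.0))  # False: one failing test
print(meets_test_criteria(120, 120, 94.9))  # False: coverage below 95%
```

Because the rule returns a plain boolean, two team members looking at the same numbers cannot reach different conclusions about whether the task is done.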

2. Inconsistent Application Across Teams

In large, cross-functional development projects, there can be serious alignment problems when DoD is not consistent between teams. If one team has a tight definition of the DoD and another follows a loose interpretation, it’ll lead to quality and readiness gaps that will ultimately break the workflow and product integration.

Inconsistent usage will lead to delays because unfinished work needs to be refined before it moves onto the next stage. A single DoD model that is fully understood and adopted by all teams is crucial to keeping a seamless, coordinated process where every deliverable meets the same high standard.

To improve consistency, develop a global DoD template applicable to all teams. Include mandatory criteria (e.g., code reviews, unit testing) and optional fields for team-specific adaptations.
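One way to picture such a template is a shared mandatory list that each team extends but cannot shrink. This is only an illustrative sketch; the criteria strings and the `build_team_dod` helper are assumptions, not a standard.

```python
# Hypothetical organization-wide criteria that every team must include.
GLOBAL_MANDATORY = ["code review completed", "unit tests passed"]


def build_team_dod(team_specific=()):
    """Combine the mandatory criteria with a team's optional additions.

    Mandatory items always come first and cannot be removed;
    duplicate additions are ignored.
    """
    dod = list(GLOBAL_MANDATORY)
    for item in team_specific:
        if item not in dod:
            dod.append(item)
    return dod


mobile_dod = build_team_dod(["app store guidelines checked"])
print(mobile_dod)
# ['code review completed', 'unit tests passed', 'app store guidelines checked']
```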

3. Lack of Team Ownership and Involvement

A DoD that’s issued by management without team input is likely to be less persuasive to the people who have to follow it, which results in less motivation and accountability. Another consequence is inconsistent application of DoD standards, because team members might view the DoD as an outside mandate instead of a valuable resource.

Enlisting the team to contribute to the DoD helps build a shared attitude toward quality and makes it aligned with the team’s workflows and challenges.

You may establish a requirement that completed features require sign-offs from developers, testers, and product owners, ensuring everyone agrees on task completeness. Also, onboard new team members by walking them through the DoD and explaining its impact on their responsibilities.

4. Overlooking Technical Debt

A DoD that doesn’t address technical debt, such as outdated or problematic legacy code, will eventually cause long-term complications that slow development and weaken code quality. Neglecting technical debt usually gives short-term benefits, but it becomes a big expense in the future when problems accumulate and necessitate major updates.

If there are no technical debt standards built into the DoD, teams risk skipping maintenance that may not seem urgent but is critical for long-term stability and performance.

The best way to overcome the technical debt challenge is to include it in the DoD. Add explicit criteria, such as “Legacy code must be refactored,” or “All unused code must be removed.” Adding technical debt criteria to the DoD helps teams maintain a quality, scalable codebase, with less chance of problems stacking up and slowing development.

5. Misalignment with Quality Standards

A DoD that’s out of sync with quality standards risks launching products that don’t meet regulations or customer needs. This disconnect is usually the product of a DoD that’s too shortsighted, focusing mainly on short-term deliverables without consideration for the project or industry requirements.

Quality standards that aren’t plugged into the DoD can unintentionally fall through the cracks. And this can cost teams time, money, and reputational damage.

Identify all relevant standards, such as accessibility (e.g., WCAG), security (e.g., OWASP guidelines), or industry regulations (e.g., GDPR for data privacy). Make sure these standards are directly reflected in the DoD.

Understanding Kanban Policies

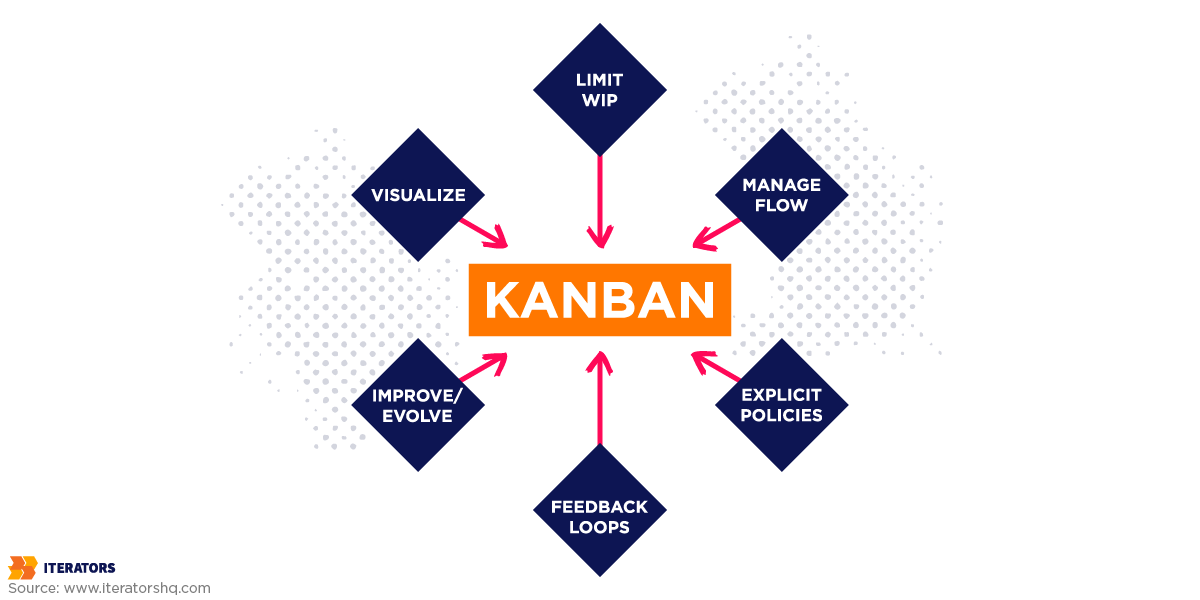

Kanban is a visual process management tool to track work as it progresses through a process. It helps teams visualize and refine their processes by scheduling tasks and limiting work in progress. Kanban was first introduced by Toyota in the 1940s as part of its lean manufacturing strategy. It was used to indicate stages of production to balance supply and demand. Eventually, it became a practice that could be applied to a lot of other fields too, particularly software development and knowledge work.

As teams adopt Kanban, they can progress through various stages of the Kanban maturity model, helping them enhance workflow efficiency and alignment as their processes mature.

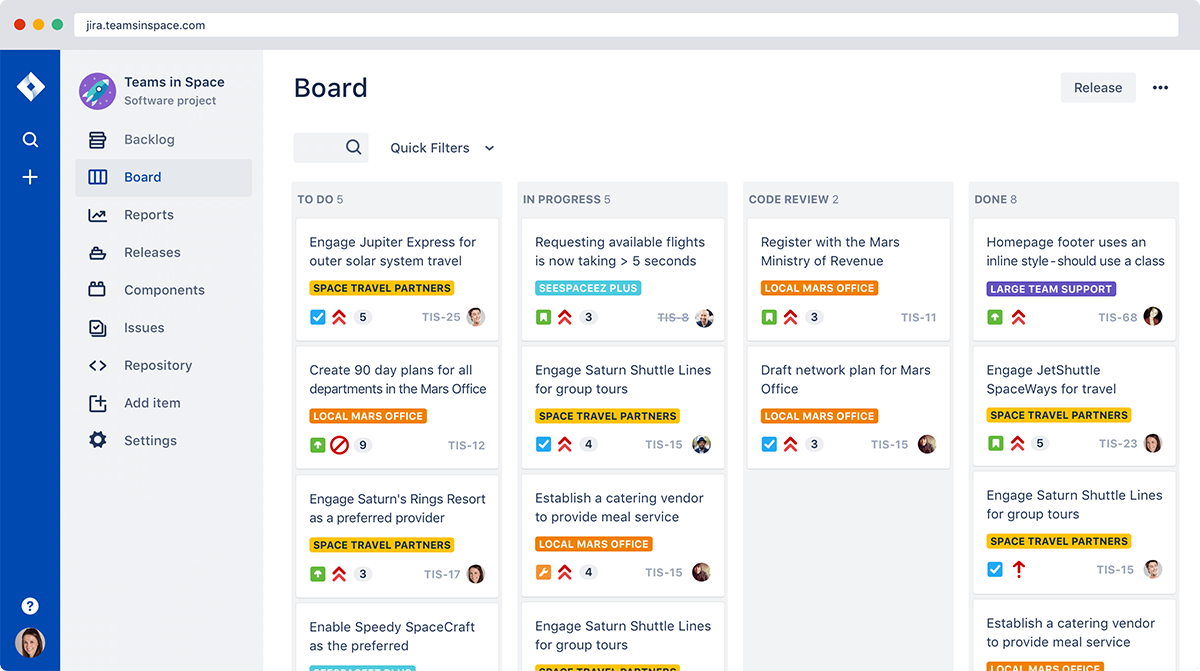

The Kanban system revolves around having a solid, common understanding of every step of the work process and seeing it visually so that teams can monitor progress and identify bottlenecks. A typical Kanban board is separated into columns for each workflow process (for example “To Do,” “In Progress,” and “Done”). Each work item has a card that moves from left to right as it progresses through the completion stages.

Kanban also shows the status of work in real time, so everyone can see where their tasks stand and where things are being delayed. This visualization element is a major strength of Kanban. Teams have fewer obstacles in organizing work when they focus on the flow of tasks rather than only on deadlines or timelines. In contrast to traditional project management, which plans and prioritizes for the long term, Kanban focuses on managing and optimizing the current workflow.

Using methods like STATIK (Systems Thinking Approach to Introducing Kanban), teams can design Kanban systems that reflect the specific needs of their project environments, aligning each step with overarching goals.

Typical Example of Kanban in Software Development

Imagine a software development team that is developing a mobile app. The team includes developers, designers, testers, and a product manager. They organize their work on a Kanban board with columns labeled “Backlog”, “In Progress”, “Code Review,” “Testing,” and “Done”.

Each column of the Kanban board has a WIP limit to keep the team focused and spread the workload evenly. For instance, the WIP limit of the “In Progress” column is 4 tasks, so there can be only four active tasks. If four tasks are already in progress, the team can’t start another until one of them moves to the next column.

The “Code Review” column has a smaller WIP limit of 2 tasks because only a few senior developers can do code reviews. This WIP limit also makes bottlenecks visible: if tasks frequently pile up here, the team may choose to train more developers on code review to lighten the load. This adjustment prevents work from getting stuck in “Code Review” and moves tasks more quickly into “Testing” and “Done”.

The team regularly runs retrospectives to see how things are working and where there is room for improvement. For example, if they find that tasks are often delayed in “Testing”, they might add an extra tester to the team or automate parts of their testing process to speed things up. Another option might be to revise policies if they find that vague acceptance criteria are causing problems.

As tasks move through the Kanban board, the team can measure metrics such as cycle time (length of time a task is taking from “In Progress” to “Done”) and lead time (“Backlog” to “Done”). A shorter cycle time represents good flow and a longer cycle time can reflect bottlenecks that need to be solved.
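As a rough illustration of these two metrics, the sketch below computes lead time and cycle time for a single task from the timestamps at which it entered each column. The dictionary keys and the sample dates are made up for the example; a real board tool would export its own field names.

```python
from datetime import datetime


def days_between(start, end):
    """Elapsed time between two timestamps, in days."""
    return (end - start).total_seconds() / 86400


# Hypothetical timestamps recorded when the card entered each column.
task = {
    "entered_backlog": datetime(2024, 3, 1, 9, 0),
    "entered_in_progress": datetime(2024, 3, 4, 9, 0),
    "entered_done": datetime(2024, 3, 8, 9, 0),
}

# Lead time: "Backlog" to "Done". Cycle time: "In Progress" to "Done".
lead_time = days_between(task["entered_backlog"], task["entered_done"])
cycle_time = days_between(task["entered_in_progress"], task["entered_done"])

print(lead_time)   # 7.0
print(cycle_time)  # 4.0
```

The three-day gap between the two numbers is the time the task spent waiting in the backlog before anyone started it.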

Core Principles of Kanban Policies

Just like the Definition of Done, Kanban has principles that govern workflow effectiveness, visibility, and team unity. The following principles guide how teams create, visualize, and implement Kanban policies to build a consistent workflow.

1. Workflow Visualization

A core principle of Kanban is mapping out the workflow so that teams can see where work is in progress, where it may be stuck, and what tasks come next. Teams often begin by creating a Kanban board with columns for each phase of their workflow: “To Do”, “In Progress”, “In Review,” and “Done.” Tasks are displayed as cards that move from one column to the next as the work progresses.

With workflow mapped out, employees can visualize the progress of each task, uncovering bottlenecks or delays that can negatively impact productivity. This transparency also allows everyone on the team to see the team’s current workload and performance so that everyone can identify where to make improvements.

2. Limiting Work in Progress (WIP)

Kanban systems often set clear Work in Progress (WIP) limits to prevent team members from being overloaded and to achieve timely results. Every column or workflow step on the Kanban board has a limit on the number of tasks it can hold at any given time. So, for instance, if you have a maximum WIP of five for the “In Progress” column, you can only have five tasks in active progress at that time.

WIP limits discourage team members from juggling multiple tasks at once, which minimizes context switching and helps people finish work before moving on to something new. Limiting WIP allows teams to control their own capacity and make sure that each task is given the time it needs to be completed. When a column reaches its WIP limit, team members must clear work from that column before pulling in another task.
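To make the rule concrete, here is a minimal sketch of a board that refuses to accept a card once a column is full. The `Board` class, the `WipLimitReached` exception, and the column names are hypothetical; real Kanban tools implement this in their own way, and many only warn rather than block.

```python
class WipLimitReached(Exception):
    """Raised when a column cannot accept more work."""


class Board:
    def __init__(self, wip_limits):
        # wip_limits maps column name -> max cards (None means no limit).
        self.wip_limits = dict(wip_limits)
        self.columns = {name: [] for name in wip_limits}

    def add(self, column, card):
        limit = self.wip_limits[column]
        if limit is not None and len(self.columns[column]) >= limit:
            raise WipLimitReached(f"{column!r} is at its WIP limit of {limit}")
        self.columns[column].append(card)

    def move(self, card, source, target):
        if card not in self.columns[source]:
            raise ValueError(f"{card!r} is not in {source!r}")
        self.add(target, card)  # enforces the target column's limit first
        self.columns[source].remove(card)


board = Board({"To Do": None, "In Progress": 5, "Done": None})
for n in range(6):
    board.add("To Do", f"task-{n}")
for n in range(5):
    board.move(f"task-{n}", "To Do", "In Progress")

try:
    board.move("task-5", "To Do", "In Progress")  # sixth task is rejected
except WipLimitReached as err:
    print(err)
```

The sixth task stays in “To Do” until one of the five active tasks moves on, which is exactly the pull behavior the limit is meant to encourage.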

3. Explicit Process Policies

Explicit process policies are guidelines on how to manage tasks in the Kanban system and the conditions each task needs to satisfy. These policies are usually co-created by the team. They specify what a task must meet before it can move from one column to the next. So, for instance, a policy could dictate that tasks cannot go from “In Progress” to “In Review” until all unit tests have been completed and code review comments are addressed.

Clear policies define the needs for each phase and help to maintain a high-quality level across tasks. Writing out these policies and sharing them on the Kanban board or a common document makes sure everyone in the team knows what they are expected to do. Such alignment eliminates uncertainty and makes it easier for the team to be efficient and consistent with deliverables.
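Written-out policies lend themselves to a simple lookup table. The sketch below encodes the example policy for moving from “In Progress” to “In Review”; the `POLICIES` table, the flag names, and the `can_move` helper are assumptions chosen for illustration.

```python
# Hypothetical policy table: (from_column, to_column) -> conditions a task must meet.
POLICIES = {
    ("In Progress", "In Review"): [
        "unit_tests_completed",
        "review_comments_addressed",
    ],
}


def can_move(task, source, target):
    """Return (allowed, unmet_conditions) for moving `task` between columns.

    Transitions without an entry in POLICIES are treated as unrestricted.
    """
    required = POLICIES.get((source, target), [])
    unmet = [flag for flag in required if not task.get(flag, False)]
    return (not unmet, unmet)


task = {"unit_tests_completed": True, "review_comments_addressed": False}
print(can_move(task, "In Progress", "In Review"))
# (False, ['review_comments_addressed'])
```

Returning the unmet conditions, and not just a yes or no, tells the person moving the card exactly what is still outstanding.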

4. Managing Flow

Kanban policies are also concerned with streamlining work by finding and eliminating bottlenecks that slow down the flow. By regularly reviewing the board and checking how long each task has spent at each stage, teams can spot delays and make adjustments accordingly.

For instance, if there is a lot of work that keeps getting stuck in the “In Review” phase, the team could add more resources to that phase or change the WIP cap so that reviews are conducted quickly. Flow management also teaches the team to fix workflow inefficiencies early and often for more throughput. By monitoring flow, teams can be more productive with less slack and more consistent workflow.

5. Continuous Improvement

One of Kanban’s guiding principles is to keep improving and for teams to periodically review and modify their workflow policy to increase productivity and team performance. Teams regularly hold retrospectives to see if the Kanban policies are assisting with their goals and if they should make any necessary changes. For instance, if the team has discovered that some tasks are not getting done on time, they might consider different WIP thresholds or change their process policies to align with workflow.

Kanban continuous improvement is usually about small incremental changes that are tested and validated continuously. With this approach, the team can modify policies gradually without destroying the entire process. This principle encourages a culture of learning and agility that will allow teams to constantly iterate as they figure out how to optimize work output and quality.

Integrating DoD and Kanban

While the DoD and Kanban policies have different use cases in Agile, they’re highly complementary. Together, they create an optimized workflow by combining the rigor of quality standards (DoD) with the flexibility and flow management of Kanban.

Together, DoD and Kanban rules make sure that work flows well and follows quality standards. In practice, a task has to fulfill DoD conditions to pass through to the next Kanban stage. This ensures that it meets quality standards while continuing on the flow.

Combining DoD and Kanban principles promotes a culture of quality and productivity for the team. DoD requirements reinforce an eye for detail, and Kanban gives you the elasticity and organization to make work move. They also build an environment in which employees invest not only in quality but also in process improvement.

Strategies for Meeting DoD Criteria in a Kanban Workflow

Here’s how teams can leverage DoD and Kanban to consistently deliver quality:

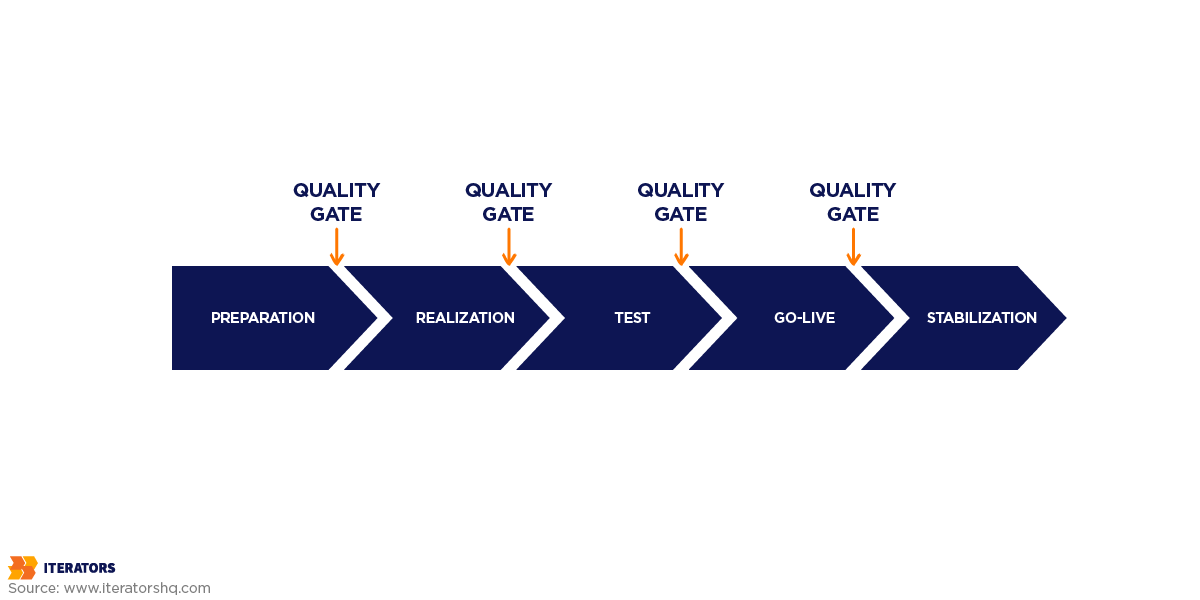

1. Implement Quality Gates for Critical DoD Requirements

Teams can set up quality gates at certain transition points on the Kanban board, where DoD criteria are checked directly. For example, to transition from “In Review” to “Done”, a task needs to have passed all tests and have its documentation updated.

Quality gates can be automated (for example, running automated tests before a task proceeds to the next stage) or manual, such as peer reviews of code or documentation quality. Such gates enforce the DoD at key steps of the process so that tasks will not proceed to the next stage without being checked for quality.

2. Assign DoD Champions for Accountability

Assigning DoD champions (people on your team who review specific DoD measures) can also help keep quality up all the time. One team member could be responsible for making sure code reviews are done, and another for keeping documentation up to date.

Champions can alternate to spread out the load and make the whole team familiar with various parts of the DoD. It helps ensure that everyone is accountable and that everyone has collective ownership of quality expectations.

3. Visualize DoD Progress on the Kanban Board

Visual indicators for DoD metrics on the Kanban board can be used to monitor DoD compliance in real-time. Checklists, or even coloured labels on task cards can indicate which DoD items have been met.

A checklist could include items like “code review done,” “unit tests passed,” or “documentation created.” It’s a quick visual reminder to team members of which criteria have been met. This ensures that missing criteria are addressed before the team passes a task on to the next stage.

4. Automate DoD Validation with CI/CD Pipelines

DevOps teams can automate a portion of the DoD, like testing or code review, in continuous integration/continuous deployment (CI/CD) pipelines. CI/CD tools, for instance, can be configured to run tests, inspect code quality, or check for updated documentation when a task reaches certain points on the Kanban board.

Checks in the pipeline can function as quality gates, where tasks move forward only if they comply with DoD criteria. Since this process is automated, it reduces manual effort on the team and keeps things consistent.
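The gate itself can be as small as a script that a pipeline step runs and that fails the build when any DoD check is unmet. The following sketch assumes the check results have already been collected by earlier steps; the check names and the `run_gate` function are hypothetical, and no particular CI/CD product is implied.

```python
def run_gate(checks):
    """Evaluate DoD checks and return a process exit code.

    `checks` maps a check name to True (passed) or False (failed).
    Exit code 0 lets the pipeline continue; 1 stops it.
    """
    failed = [name for name, ok in checks.items() if not ok]
    for name in failed:
        print(f"DoD check failed: {name}")
    return 1 if failed else 0


# Assumed inputs: in a real pipeline these would come from the test runner,
# a code quality tool, and a documentation check.
results = {"tests_passed": True, "code_quality_ok": True, "docs_updated": False}
exit_code = run_gate(results)
print(exit_code)  # 1

# A pipeline step would typically finish with sys.exit(exit_code),
# since most CI systems treat a nonzero exit code as a failed step.
```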

5. Regularly Review and Adjust DoD and Kanban Policies Together

Retrospective sessions are also a good opportunity for teams to review how the existing DoD and Kanban policies are working together. If any DoD standard is regularly violated, the team can agree to change the Kanban process, maybe by adding a new column or changing WIP limits to accommodate those standards.

Monitoring both policies regularly keeps the team on the same page and prepared for emerging issues.

Measuring Success with DoD and Kanban

DoD and Kanban provide a powerful framework to quantify success by highlighting key indicators of quality, consistency, throughput, and improvement. Here’s how teams can measure success by measuring DoD and Kanban-related metrics:

Key Metrics for Definition of Done

Common DoD metrics include:

1. Completion Rate vs. Rework Rate

The percentage of work that satisfies the DoD without rework can be an indicator of the DoD’s quality. High rework rates could be a sign that DoD criteria aren’t clear or adequate.

To calculate the completion rate, teams count the tasks or features marked as “done” that satisfied the DoD without needing modification, and divide by the total number of tasks marked as “done”. The rework rate is calculated the same way from the number of tasks that needed additional adjustment after being completed, usually because they did not meet the DoD standards.
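As a worked example, the sketch below computes both rates as percentages of all tasks marked “done”. That denominator is an assumption on our part (it makes the two rates add up to 100), and the `needed_rework` field name is hypothetical.

```python
def completion_and_rework_rate(tasks):
    """Return (completion_rate, rework_rate) as percentages of tasks marked done.

    Assumption: every task in `tasks` was marked "done", and each carries a
    boolean `needed_rework` flag set when it later failed the DoD.
    """
    total = len(tasks)
    if total == 0:
        return (0.0, 0.0)
    reworked = sum(1 for t in tasks if t["needed_rework"])
    clean = total - reworked
    return (clean * 100 / total, reworked * 100 / total)


# 20 tasks marked done in a period, 3 of which had to be reopened.
tasks = [{"needed_rework": i < 3} for i in range(20)]
print(completion_and_rework_rate(tasks))  # (85.0, 15.0)
```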

2. Defect Density

This represents the number of defects per unit of work (for example, per feature or sprint). A low defect density means that DoD is supporting the quality system. However, a higher defect density can indicate that the DoD criteria are not as tight, or teams are overlooking critical verifications.

The defect density is simply the sum of defects divided by the work item size (usually in lines of code or feature points). This information usually comes from tests or user surveys.
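Here is a small sketch of that calculation, using thousands of lines of code (KLOC) as the size unit. The choice of KLOC and the sample numbers are assumptions for illustration; teams that size work in features or points would divide by that figure instead.

```python
def defect_density(defect_count, lines_of_code):
    """Defects per thousand lines of code (KLOC)."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    return defect_count / (lines_of_code / 1000)


# Hypothetical release: 12 defects found in 8,000 lines of code.
print(defect_density(12, 8000))  # 1.5
```

Tracking the same unit from release to release matters more than which unit you pick, since the trend is what signals whether the DoD is tightening or slipping.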

3. Failed Acceptance Tests

A high number of failed acceptance tests implies that the DoD might not reflect end-user requirements or use cases. The team can use this data to refine the DoD so that it more accurately represents key functionality and performance requirements.

This metric is calculated by tracking the percentage of tasks that fail UAT/QA tests once they are marked as completed.

Key Metrics for Kanban

Unlocking the power of Kanban flow metrics allows teams to monitor performance effectively:

1. Lead time

Lead time tells you how long a task takes from when it is placed on the board to when it is done. Shorter, predictable cycles and lead times indicate that tasks are progressing well through the workflow, and vice versa.

To calculate lead time, record the time each task is added to the Kanban board and the time it reaches “Done”.

2. Cycle Time

Cycle time is the amount of time a task takes to move through the active part of the Kanban board, from “In Progress” to “Done.” Most project management tools track this information automatically so teams can figure out the average cycle time for tasks or feature types.

Fast cycle times generally mean that work is advancing quickly in the workflow while slow cycles imply that the process is delayed. By studying which stages contribute the most time in the cycle, teams can try to address those bottlenecks.

3. Throughput

This metric indicates how many tasks were completed over a given period. A steady or growing throughput is a good indication that Kanban is working and the team is finishing work without delays or bottlenecks.

Throughput is measured as the number of tasks or work items performed in a specified time frame. This value is automatically captured by most Kanban tools and teams can see throughput by day, week, or sprint.
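If your tool doesn’t report throughput, it can be derived from completion dates. The sketch below groups completed tasks by ISO calendar week; the function name and the sample dates are made up for the example.

```python
from collections import Counter
from datetime import date


def weekly_throughput(completion_dates):
    """Count completed tasks per (ISO year, ISO week)."""
    counts = Counter()
    for d in completion_dates:
        iso = d.isocalendar()
        counts[(iso[0], iso[1])] += 1
    return dict(sorted(counts.items()))


# Hypothetical dates on which tasks reached "Done".
completed = [
    date(2024, 3, 4), date(2024, 3, 6), date(2024, 3, 8),
    date(2024, 3, 11), date(2024, 3, 13),
]
print(weekly_throughput(completed))  # {(2024, 10): 3, (2024, 11): 2}
```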

4. Work In Progress (WIP)

Monitoring WIP helps teams maintain focus and avoid overloading any part of the workflow. Overly high WIP in a column could be a sign that tasks are being delayed in that stage.

With this insight, you can then reset WIP limits or allocate resources to ensure that work progresses smoothly across all stages.

Common Pitfalls in Measuring Success with DoD and Kanban, and How to Avoid Them

Here are some of the most common pitfalls that teams may encounter when trying to measure success:

1. Prioritizing Throughput over Quality

A common mistake some teams make when trying to measure Kanban success is sacrificing quality for throughput (total amount of work completed). Work may become so speed-oriented that teams skip or rush through DoD rules, which leads to defects, missing documentation, or poor testing.

To avoid this, try to balance quality and throughput. Stats like DoD adherence rate, defect density, and rework percentage can help you get a more integrated picture of the results. You can also emphasize the need for quality at the team meetings by rewarding quality and productivity successes.

2. Inconsistent or Ambiguous DoD Criteria

Sometimes teams establish DoD criteria that are too vague or inconsistent from one task to the next, making it unclear when work is actually done. Imprecise DoD standards lead to confusion, as individual members of the team interpret the quality criteria differently and deliverables get compromised.

Build specific, precise, and universal DoD requirements for each type of task or project. Plan a team meeting to share DoD standards and write them down. Revisiting DoD requirements regularly during retrospectives keeps them fresh, consistent, and clear to everyone on the team.

3. Ignoring Cycle Time and Lead Time Variability

In Kanban, you’ll have to monitor cycle time and lead time to get insight into flow effectiveness. But teams can forget about the variation of these metrics. Some tasks can take longer because of the complexity while some can be completed faster. Calculating cycle time as an average without considering variability leads to wrong findings and can conceal workflow issues.

Calculate and review both the average and the variance of cycle and lead times. Look for trends, like very high cycle times on tasks with certain DoD requirements, and change processes or Kanban steps as necessary. Data on the distribution of cycle times, rather than averages alone, gives you a more complete picture of the team’s workflow performance.
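To see why the average alone can mislead, consider the sketch below, which summarizes a set of cycle times with the mean, median, standard deviation, and a high percentile. The sample data is invented, and the 85th percentile with a nearest-rank calculation is just one common choice, not a requirement.

```python
import math
import statistics


def nearest_rank_percentile(values, percent):
    """Smallest value such that at least `percent` % of values are <= it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(percent / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize_cycle_times(days):
    """Summary statistics for a list of cycle times in days."""
    return {
        "mean": statistics.mean(days),
        "median": statistics.median(days),
        "stdev": statistics.stdev(days),
        "p85": nearest_rank_percentile(days, 85),
    }


# Hypothetical cycle times in days; one task took far longer than the rest.
cycle_times = [2, 3, 3, 4, 4, 5, 6, 15]
summary = summarize_cycle_times(cycle_times)
print(summary["mean"], summary["median"], summary["p85"])
```

In this sample a single 15-day task pulls the mean above the median, which is the kind of gap worth investigating in a retrospective.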

4. Misinterpreting WIP Limits and Overloading Stages

WIP limits help you avoid overloading and regulate flow, but sometimes teams set them too high or too low without considering task complexity. If you have WIP thresholds that are too high, your team members will multitask and lose focus and quality. On the other hand, if you have WIP limits that are too low, there will be bottlenecks that will delay the workflow.

Set WIP limits based on team size and the average time it takes to finish various tasks. Regularly review and adjust WIP limits as team size, skills, or workload evolves. Keeping track of the flow and looking for bottlenecks helps the team know if a WIP limit is too tight or too loose.

5. Focusing on Individual Metrics Rather than Team Metrics

Success with DoD and Kanban is best measured at the team level, not the individual level. When individual metrics (like the number of tasks each employee has completed) are put first, it can create unhealthy competition and a lack of collaboration, and team members can lose sight of common quality standards.

Focus on team-level indicators like overall DoD compliance, throughput, and cycle time to encourage cooperative behavior. Supporting the shared vision of the team and rewarding the success of a group over the performance of individual contributors helps align your strategy around quality and efficiency. This reorientation reinforces team culture and responsibility.

6. Over-relying on Automated Metrics without Context

Automated metrics monitoring tools can be helpful, but they can also lead teams to rely on the data without really understanding it. For example, an automated defect count or cycle time might not tell you why something went wrong or how it impacts the project’s goals.

Use automated metrics as a starting point but supplement them with qualitative data from team meetings, retrospectives, and feedback. Invite team members to share problems they faced, so that the metrics are tied to context. This balanced approach helps avoid overanalyzing and develops a better awareness of what works and what doesn’t.

Scaling DoD and Kanban in Agile Environments

Scaling DoD and Kanban in Agile environments such as Scrum of Scrums, SAFe (Scaled Agile Framework), or LeSS (Large-Scale Scrum) will demand consistency, flexibility, and planning. Here is how to scale DoD and Kanban across teams and projects:

1. Establish Consistent and Flexible DoD Across Teams

DoD scaling involves both standardization and adaptation. There should be a base DoD so that everyone can operate within a standard quality process. This ensures the fundamental elements of testing, code review, and documentation are applied uniformly across all teams.

However, individual teams should also be able to stretch this baseline based on their project requirements. Keep DoD standards up to date by having cross-team meetings to review and update these criteria. This is especially important as technical and product requirements evolve.

2. Use a Multi-Level Kanban Board for Cross-Team Visibility

In a scaled Agile environment, it’s especially important to keep track of work both on individual teams and across teams. Kanban boards with several levels give you this needed visibility. Each team has its own Kanban board where tasks are kept and they can set WIP limits and workflows.

At the program level, an upper-level Kanban board maps progress for teams, showing epics or critical tasks, interdependencies, or bottlenecks. For even more visibility, use visual cues to highlight dependencies. This allows teams to find and remove the blockers early and continue with a consistent workflow.

3. Implement Standardized WIP Limits with Flexibility for Team Needs

WIP limits are the foundation of workflow balance in Kanban. When scaling, you’ll need to standardize WIP limits across teams while still leaving room for variance. A standard WIP limit policy can help teams adopt Agile values that prioritize quality over quantity.

However, teams should be able to set their WIP limits within an agreed range, depending on their capacity and the complexity of their work. Regular reviews at the program level are a good way to check and refine these limits, keeping work flowing and spotting improvement opportunities across teams.
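The idea of a standard range with room for team-level variance can be sketched in a few lines of Python. The `WipPolicy` class, its default bounds of 2 and 6, and the `can_pull` helper are illustrative assumptions, not recommended values or part of any Kanban tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WipPolicy:
    """Program-level bounds for a team's WIP limit (values are illustrative)."""

    minimum: int = 2
    maximum: int = 6

    def clamp(self, requested: int) -> int:
        """Return the team's requested limit, kept inside the allowed range."""
        return max(self.minimum, min(self.maximum, requested))


def can_pull(in_progress: int, limit: int) -> bool:
    """A new card may be pulled only while the column is below its limit."""
    return in_progress < limit


policy = WipPolicy()
team_limit = policy.clamp(9)  # the team asked for 9, above the allowed maximum
print(team_limit, can_pull(5, team_limit), can_pull(6, team_limit))
```

A program-level review would adjust `minimum` and `maximum` over time, while each team picks its own value inside that range.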

4. Coordinate DoD and Kanban Policies Through Scrum of Scrums

Coordinating DoD and Kanban policies gets harder as the number of teams grows. Scrum of Scrums meetings, which are regular sync-ups with representatives from each team, give alignment and collaboration a structure. Teams use these meetings to review compliance with DoD standards. They also discuss challenges and review policies to keep them relevant and consistent across teams.

These meetings are also a time to discuss Kanban workflow optimizations, changes to WIP limits, and dependency management. By using this forum for problem-solving, teams can maintain DoD and Kanban consistency and facilitate cross-team coordination.

5. Use Agile Metrics to Monitor and Optimize Scaled Performance

It’s hard to measure quality and flow at a macro level without metrics that span a distributed set of teams. To measure quality, track DoD metrics such as adherence rate, defect rate, and rework rate at both team and program levels.

For flow, program-level metrics like cumulative flow diagrams, lead time, and throughput show efficiency patterns and bottlenecks. Tracking dependency impact helps teams identify the cross-team dependencies impacting cycle time. Then program managers can allocate resources or prioritize activities to minimize delays and ensure continuous delivery.
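To make these metrics concrete, here is a small Python sketch that computes average lead time, throughput, and DoD adherence from a list of finished work items. The record layout, team names, dates, and function names are all hypothetical; in practice the data would come from your board tool’s export.

```python
from datetime import date
from statistics import mean

# Hypothetical finished items: (team, requested, finished, met_dod)
ITEMS = [
    ("payments", date(2024, 3, 1), date(2024, 3, 5), True),
    ("payments", date(2024, 3, 2), date(2024, 3, 10), False),
    ("search", date(2024, 3, 4), date(2024, 3, 6), True),
    ("search", date(2024, 3, 5), date(2024, 3, 11), True),
]


def for_team(items, team):
    """Keep only the items that belong to one team."""
    return [item for item in items if item[0] == team]


def average_lead_time_days(items):
    """Mean number of days from request to completion."""
    return mean((finished - requested).days for _, requested, finished, _ in items)


def throughput(items, start, end):
    """Number of items finished between start and end, inclusive."""
    return sum(1 for _, _, finished, _ in items if start <= finished <= end)


def dod_adherence_rate(items):
    """Share of finished items that met every DoD criterion."""
    return sum(1 for _, _, _, met_dod in items if met_dod) / len(items)


# Program-level view, then a single team for comparison.
print(average_lead_time_days(ITEMS), dod_adherence_rate(ITEMS))
print(average_lead_time_days(for_team(ITEMS, "payments")))
print(throughput(ITEMS, date(2024, 3, 1), date(2024, 3, 7)))
```

Running the same functions on the full list and on each team’s subset gives the program-level and team-level views side by side.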

How Emerging Trends in Agile Methodologies Influence the Evolution of DoD and Kanban Practices

Emerging trends in Agile methodologies are shaping and refining the ways teams approach DoD and Kanban practices. Here are some of the key trends influencing the evolution of DoD and Kanban:

1. Increased Focus on Quality Assurance and DevOps Integration

As Agile teams increasingly embrace DevOps, quality assurance practices are becoming an integral part of both DoD and Kanban. This integration encourages teams to treat testing, deployment, and monitoring as ongoing, iterative processes rather than end-of-sprint activities.

By embedding DevOps principles into DoD, teams ensure that automation, security checks, and deployment standards are met at every stage. Similarly, Kanban boards are evolving to include DevOps stages, such as continuous integration and continuous deployment (CI/CD), allowing for real-time visibility into where a task is within the development pipeline.

2. Remote and Distributed Team Adaptations

With the rise of remote work, Agile methodologies now place a greater emphasis on collaboration tools and communication practices that support distributed teams. DoD criteria are becoming more explicit and standardized to ensure all team members have a clear understanding of quality expectations, regardless of location.

Additionally, Kanban boards are now more digital and collaborative, with tools like Jira and Trello allowing team members to access and update the board in real time. These digital Kanban boards often include enhanced visualization features to help distributed teams identify bottlenecks and task dependencies, improving coordination and alignment.

3. Emphasis on Continuous Feedback and Customer-Centricity

Emerging Agile practices increasingly prioritize continuous customer feedback, influencing both DoD and Kanban by adding customer-focused criteria. In the DoD, teams now frequently include criteria related to user experience (UX) testing and customer validation to ensure that work aligns with end-user needs before it’s considered complete.

On the Kanban side, boards may include feedback loops or separate stages for customer reviews, making the process of incorporating customer feedback an explicit part of the workflow. These changes help Agile teams remain responsive to customer demands, iterating quickly based on real-world feedback.

4. Adoption of Hybrid Agile Models

Hybrid Agile models, such as Scrumban (a combination of Scrum and Kanban), are increasingly popular as teams seek to tailor their workflows. In hybrid models, DoD may combine both Scrum-specific deliverables (such as incremental story completion) and Kanban flow criteria (like reducing cycle time or improving lead time).

This hybrid approach allows teams to adapt their DoD and Kanban boards to fit the unique needs of their project while maintaining clear and meaningful quality standards. Additionally, hybrid models enable teams to create more flexible DoD criteria, suited to both structured sprints and continuous Kanban workflows, allowing them to scale and shift priorities as needed.

5. AI and Automation in Workflow Management

Artificial intelligence (AI) and automation are changing Agile practices in many ways, especially in task management and quality assurance. Some tools may offer a DoD checklist generator, saving the time it would otherwise take to craft a checklist manually.

In Kanban, tools like workstreams.ai are already using generative AI features to fill out cards and create tasks quickly. Users can also add custom fields to cards, set up recurring tasks, or duplicate a card with just one click. These features make it easier for the development team to visualize progress on the board.

Get Started With Agile Excellence

Using DoD and Kanban policies in your team’s workflow helps ensure consistent quality and fast project delivery. Many development teams use a DoD to standardize tasks and establish quality benchmarks. At the same time, they leverage Kanban’s visual design, work-in-progress limits, and explicit policies to control the flow of work, eliminate bottlenecks, and collaborate efficiently.

DoD and Kanban support accountability, transparency, and continuous improvement, allowing teams to create value more reliably. By applying the best practices and strategies discussed in this guide, teams can adjust more easily to changing project requirements and build high-quality products. When applied properly, DoD and Kanban not only speed up processes but also foster a strong, effective team culture, which is crucial for long-term success.

At Iterators HQ, we specialize in helping teams adopt Agile practices like Kanban and develop robust DoD practices. Whether you want to optimize your processes, increase quality, or drive continuous improvement, we can support you with expert guidance and resources. Want to scale your project management game? Contact Iterators HQ today and let’s make your team’s journey to productivity and excellence a reality.