Have you ever wondered how businesses sift through large amounts of data while monitoring the pipelines behind their deployed machine learning models? Many business executives face exactly these issues, and that’s where ML Ops comes in.

According to Forbes, the ML Ops solutions market is expected to reach $4 billion by 2025.

Let’s dig into what it is, why it matters, and how you can implement it.

What Is ML Ops

Machine learning operations (ML Ops) is a crucial function of machine learning engineering that streamlines the production and deployment of machine learning models.

Because ML Ops spans data, models, and infrastructure, it demands the combined expertise of data scientists, IT experts, and Dev Ops engineers.

Importance of ML Ops

ML Ops is especially important if you want ML models that are fast, reliable, and accurate while relying on large amounts of data.

It applies continuous integration and continuous deployment (CI/CD) practices so models can be developed and moved to production quickly and effectively. It also provides monitoring, validation, and governance for ML models, which keeps data, code, and models in sync.

ML Ops also improves the quality of machine learning models, simplifies the model management process, and automates the model deployment process in large-scale production environments.

Moreover, this machine learning function makes it easier to align these models with your business needs and regulatory requirements.

Need help with ML Ops in your company? At Iterators we can help design, build, and maintain custom software solutions for both startups and enterprise businesses.

Schedule a free consultation with Iterators today. We’d be happy to help you find the right software solution to help your company.

Difference Between ML Ops and Dev Ops

Dev Ops and ML Ops both help teams build and deploy software. But even though they serve similar goals, they differ in some fundamental ways.

Let’s look at the main differences:

1. Development Processes

ML Ops and Dev Ops have different development processes. In Dev Ops, the code produces an application or interface that lets a company carry out a specific process for itself or for other companies.

In ML Ops, by contrast, the code lets a team build and train ML models, which can then be used in almost any process.

2. Version Control

Version control in Dev Ops mostly tracks changes to code and artifacts. For instance, a Dev Ops engineer could rewrite an application’s code to make it faster or add new features.

ML Ops pipelines, by contrast, have to track more factors. Building and training a machine learning model also requires you to track the data, metrics, and hyperparameters behind each version.

3. Operational Requirements

Even though Dev Ops and ML Ops both rely on cloud technology, they have distinct operational requirements. For instance, Dev Ops requires infrastructure-as-code (IaC), build servers, and CI/CD automation tools.

However, the operational requirements of ML Ops include cloud storage for large datasets, frameworks for deep learning and machine learning, and GPUs for deep learning and computationally intensive ML models.

4. Workflows

Dev Ops pipelines mix and match repeatable processes, and their teams don’t necessarily follow a specific workflow.

ML Ops pipelines, however, apply the same workflows repeatedly, which keeps results consistent and improves team efficiency.

5. Development and Deployment Operations

ML Ops requires constant monitoring and updating of ML models because they can degrade quickly. ML models generate predictions from real-world data, so when that data changes, model performance declines.

In contrast, Dev Ops works alongside site reliability engineering (SRE), which focuses on keeping conventional software reliable in production. That software doesn’t degrade the way an ML model does when its input data changes.

Benefits of Implementing ML Ops in Your Organization

It’s relatively easy to build a machine learning model that uses the data you feed it to predict what you want. However, if your organization wants a model that is not only fast and reliable but also accurate and able to serve a large number of users, you need ML Ops.

Let’s look at the benefits of implementing ML Ops in your organization:

1. Efficiency

ML Ops facilitates faster model development, deployment, and production. It also helps ensure that ML models are high quality and reliable.

2. Scalability

ML Ops lets you oversee thousands of models at once while applying continuous integration (CI), continuous delivery (CD), and continuous deployment to all of them.

It can also help you scale your business while efficiently managing all aspects of the growth process.

3. Reproducibility

ML Ops helps your data teams collaborate effectively and accelerate release velocity, while reducing conflict with Dev Ops and IT.

This close collaboration across data teams is possible because ML Ops makes pipelines reproducible.

4. Risk Reduction

ML Ops reduces the risks that come with regulatory scrutiny and model drift. It also offers greater transparency, so you can respond swiftly when regulators ask about your machine learning models.

Moreover, ML Ops can help you align the models with the regulatory policies of your organization for greater compliance.

Challenges in ML Ops

An ML model’s behavior depends on both the code you write and the data it receives from the real world, which changes constantly and unpredictably. This disconnect between data and code causes several challenges in the machine learning process.

Let’s look into them.

1. Lack of Reproducibility

Reproducibility means that an ML pipeline produces the same results in any environment when given identical input data, code, and configuration. This makes data the key component in building credible and accurate ML pipelines.

ML reproducibility suffers when input data changes over time. New training data and shifts in the data distribution make the data inconsistent, and if the data fed into the ML model is inconsistent, inaccurate, or unreliable, the model produces inaccurate results that are hard to reproduce.

Reproducibility also requires proper logging of parameters and hyperparameters. With a volatile input dataset, parameter and hyperparameter values keep changing, which makes results difficult to replicate and further undermines reproducibility.

2. Integration Issues

To make sure an ML model does what it’s supposed to do, teams have to pay attention to all of its downstream applications. That means understanding the technical requirements for compatibility with business systems and determining the level of accuracy those systems need.

For instance, if an ML model predicts how many people are likely to buy your product, you have to make sure your API can understand the predictions the model produces. Otherwise, the systems relying on the model won’t get the results they expect.

3. Model Decay

ML models rely on data from the real world, which keeps changing and can’t be controlled. Because of this volatility, ML models are prone to model decay, and teams have to detect and manage it on the fly.

Model decay refers to the drop in a machine learning model’s accuracy caused by non-static data and real-world events. Without automated monitoring, this decline in accuracy often goes unnoticed.
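To make automated monitoring more concrete, here is a minimal sketch of one widely used drift check, the Population Stability Index (PSI), which compares how a feature is distributed in training data and in live data. The function names, the bin edges, and the 0.2 alert threshold are illustrative assumptions; 0.2 is a common rule of thumb, not a standard.

```python
import math
from bisect import bisect_right
from typing import Sequence


def bin_fractions(values: Sequence[float], edges: Sequence[float]) -> list[float]:
    """Fraction of values falling into each bin defined by sorted inner edges."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]


def psi(expected: Sequence[float], actual: Sequence[float],
        edges: Sequence[float], eps: float = 1e-6) -> float:
    """Population Stability Index between a reference sample and a live sample."""
    score = 0.0
    for e, a in zip(bin_fractions(expected, edges), bin_fractions(actual, edges)):
        # Clamp empty bins to eps so the logarithm stays finite.
        e, a = max(e, eps), max(a, eps)
        score += (a - e) * math.log(a / e)
    return score


def drift_detected(expected: Sequence[float], actual: Sequence[float],
                   edges: Sequence[float], threshold: float = 0.2) -> bool:
    # 0.2 is an assumed rule-of-thumb cutoff; tune it per feature.
    return psi(expected, actual, edges) > threshold


if __name__ == "__main__":
    training = [i / 100 for i in range(100)]             # spread over [0, 1)
    live_similar = [i / 100 + 0.005 for i in range(100)]
    live_shifted = [0.5 + i / 200 for i in range(100)]   # squeezed into [0.5, 1)
    edges = [0.25, 0.5, 0.75]
    print(drift_detected(training, live_similar, edges))  # False
    print(drift_detected(training, live_shifted, edges))  # True
```

In a real pipeline, a check like this would run on a schedule for each important input feature, and an alert would prompt investigation or retraining.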

Addressing These Challenges with ML Ops

Now that we’ve discussed the challenges of managing machine learning models, let’s look at how ML Ops addresses them:

1. Lack of Reproducibility

ML Ops increases the reproducibility of ML pipelines by putting consistent version tracking in place for both data and models. This supports tightly coupled collaboration between all data teams. The following practices also help increase reproducibility in ML Ops:

- Checkpoint management

- Version control

- Data management and reporting

- Proper logging of parameters

- Training and validation
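As a rough illustration of how version control and parameter logging work together, the sketch below fingerprints the training data with SHA-256 and records the hash next to the hyperparameters and random seed in a run manifest. The manifest layout and the helper names are hypothetical; experiment trackers such as MLflow handle this more completely.

```python
import hashlib
import json
import random


def fingerprint_rows(rows: list[dict]) -> str:
    """SHA-256 over the dataset content: any change to the data changes the ID."""
    digest = hashlib.sha256()
    for row in rows:
        # sort_keys makes the hash independent of dict key order.
        digest.update(json.dumps(row, sort_keys=True).encode("utf-8") + b"\n")
    return digest.hexdigest()


def make_manifest(rows: list[dict], hyperparams: dict, seed: int) -> dict:
    """Hypothetical run manifest: what you need to rerun training exactly."""
    return {
        "data_sha256": fingerprint_rows(rows),
        "hyperparams": dict(sorted(hyperparams.items())),
        "seed": seed,
    }


def seeded_split(rows: list, seed: int, train_frac: float = 0.8) -> tuple[list, list]:
    """Shuffle with a dedicated RNG so the same seed always gives the same split."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * train_frac)
    return shuffled[:cut], shuffled[cut:]


if __name__ == "__main__":
    data = [{"x": i, "y": i % 2} for i in range(10)]
    manifest = make_manifest(data, {"learning_rate": 0.1, "max_depth": 3}, seed=42)
    train, val = seeded_split(data, manifest["seed"])
    # Store the manifest next to the trained model, e.g. as a JSON file.
    print(json.dumps(manifest, indent=2))
```

Using a dedicated `random.Random(seed)` instance, rather than the global generator, keeps the split reproducible even if other code draws random numbers in between.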

2. Integration Issues

ML Ops supports the iterative deployment of ML solutions by splitting them into separate modules. These modules can be updated in sprints, which saves time and effort and reduces friction in the deployment process.

3. Model Decay

ML Ops can handle disruptions and abrupt changes in data by keeping the data up to date, which matters most for time-sensitive solutions. It uses tools like automated crawlers to refresh data periodically so the model doesn’t fall behind.

Several ML Ops tools help avoid model decay by automating retraining, tracking input data, or simplifying redeployment:

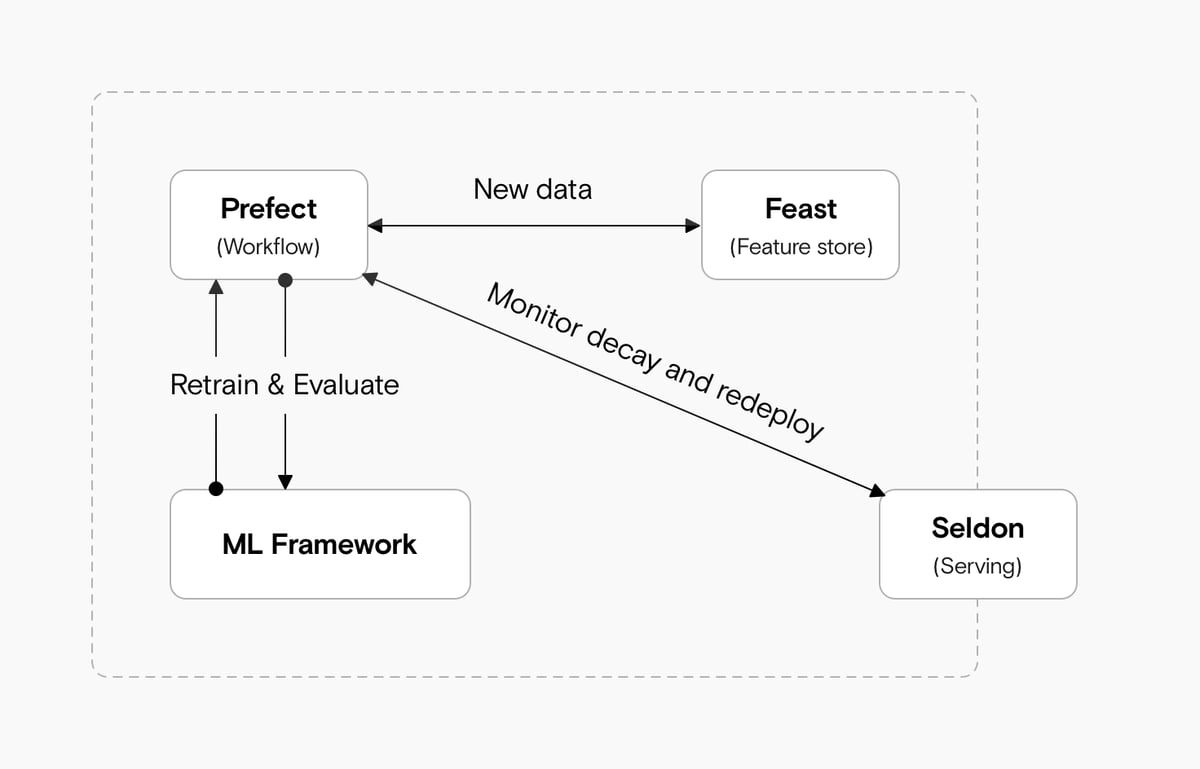

- Prefect: a workflow and dataflow tool that defines the tasks to be run, executes them, and monitors their execution. You can use it to schedule model retraining and monitoring jobs that detect potential decay.

- Feast: a feature store that helps track changes in input data and share features between different models.

- Seldon: helps turn models into APIs so you can serve them at scale. It saves time because you don’t need to write the serving code from scratch.
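Whichever tool schedules it, automated retraining usually hinges on a simple rule. This minimal sketch assumes that true outcomes eventually arrive for production predictions: it tracks accuracy over a sliding window and flags the model for retraining when accuracy falls below a threshold. The `RetrainMonitor` class, window size, and threshold are hypothetical and not part of Prefect, Feast, or Seldon.

```python
from collections import deque


class RetrainMonitor:
    """Hypothetical monitor: rolling accuracy over the last `window` outcomes."""

    def __init__(self, window: int = 100, min_accuracy: float = 0.9):
        self.results: deque = deque(maxlen=window)  # True = correct prediction
        self.min_accuracy = min_accuracy

    def record(self, prediction, actual) -> None:
        self.results.append(prediction == actual)

    @property
    def accuracy(self) -> float:
        if not self.results:
            return 1.0  # no evidence of decay yet
        return sum(self.results) / len(self.results)

    def needs_retraining(self) -> bool:
        # Judge only once the window is full, to avoid reacting to early noise.
        return (len(self.results) == self.results.maxlen
                and self.accuracy < self.min_accuracy)


if __name__ == "__main__":
    monitor = RetrainMonitor(window=10, min_accuracy=0.8)
    for _ in range(10):
        monitor.record(1, 1)            # ten correct predictions
    print(monitor.needs_retraining())   # False
    for _ in range(3):
        monitor.record(1, 0)            # three misses push out three hits
    print(monitor.accuracy, monitor.needs_retraining())  # 0.7 True
```

A scheduler such as Prefect could call `needs_retraining()` periodically and start a training job when it returns `True`.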

ML Ops Lifecycle

An ML Ops project has a lifecycle to efficiently model, produce, and deploy an ML model. Let’s dive into the stages of an ML Ops lifecycle and its management products.

Stage 1: Scoping

The first stage is defining the project. You need to determine whether the problem even requires an ML solution, which you can do by checking the availability of relevant data and performing requirements engineering.

Stage 2: Data Engineering

Data engineering requires data collection, baseline establishment, data cleaning, data formatting, labeling, and data organization. In other words, you have to collect the required data to input into your ML model while ensuring it’s accurate.

Stage 3: Modeling

This stage requires you to create code that supports your ML model. Once that’s done, you can use processed data collected in the previous stage to train the model.

After that’s done, perform an error analysis by defining error measurements. This will help you track the performance of the model.
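For a binary classifier, defining error measurements often means deriving accuracy, precision, recall, and F1 from the confusion matrix. Here is a standard-library sketch; which metric you track depends on your use case, so treat this selection as an assumption.

```python
def error_report(y_true: list[int], y_pred: list[int]) -> dict[str, float]:
    """Basic error measurements for binary labels (1 = positive class)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false alarms
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # misses
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


if __name__ == "__main__":
    print(error_report([1, 1, 1, 0, 0, 0, 0, 0], [1, 1, 0, 1, 0, 0, 0, 0]))
```

Tracking these numbers for every trained model gives you a baseline to compare against once the model is in production.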

Stage 4: Deployment

Once modeling is complete, the model is packaged and deployed in the cloud or on edge devices.

Packaging includes surrounding the model with the following:

- An API server with gRPC or REST endpoints

- A Docker container deployed on cloud infrastructure

- A serverless cloud platform deployment

- A mobile app in the case of edge-based models
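To show what surrounding a model with a REST endpoint can look like, here is a minimal sketch built only on Python’s standard library. The `predict` function is a hypothetical stand-in for a trained model, and the `/predict` path and JSON request shape are assumptions; a production service would more likely use a serving framework.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def predict(features: list[float]) -> float:
    """Hypothetical stand-in model: a fixed linear scoring rule."""
    weights = [0.5, -0.25, 1.0]
    return sum(w * x for w, x in zip(weights, features))


def handle_predict(body: bytes) -> tuple[int, bytes]:
    """Validate a JSON request body and return (HTTP status, JSON response)."""
    try:
        payload = json.loads(body)
        features = [float(x) for x in payload["features"]]
    except (ValueError, KeyError, TypeError):
        error = {"error": "expected a JSON object with a 'features' list"}
        return 400, json.dumps(error).encode("utf-8")
    return 200, json.dumps({"prediction": predict(features)}).encode("utf-8")


class PredictionHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        if self.path != "/predict":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        status, response = handle_predict(self.rfile.read(length))
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(response)))
        self.end_headers()
        self.wfile.write(response)
```

To try it locally, you could start it with `HTTPServer(("127.0.0.1", 8000), PredictionHandler).serve_forever()` and POST a body like `{"features": [1, 2, 3]}` to `/predict`. Keeping validation in `handle_predict`, separate from the HTTP handler, makes the request logic testable without a running server.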

Stage 5: Monitoring

ML Ops allows you to maintain and update your ML model through a monitoring infrastructure that requires the following:

- Monitoring the environment where the ML model is deployed for load, storage, usage, and health.

- Monitoring the performance of the model to ensure accuracy and account for any loss, data drift, and bias. This enables you to compare the current performance of the model with its expected performance.

The ML Ops life cycle also incorporates a feedback loop where any issue arising during monitoring can be resolved in the data engineering, modeling, or deployment stages.

Machine Learning Lifecycle Management Products

There are several cloud platforms and frameworks that can manage the machine learning lifecycle. Let’s look into some of them:

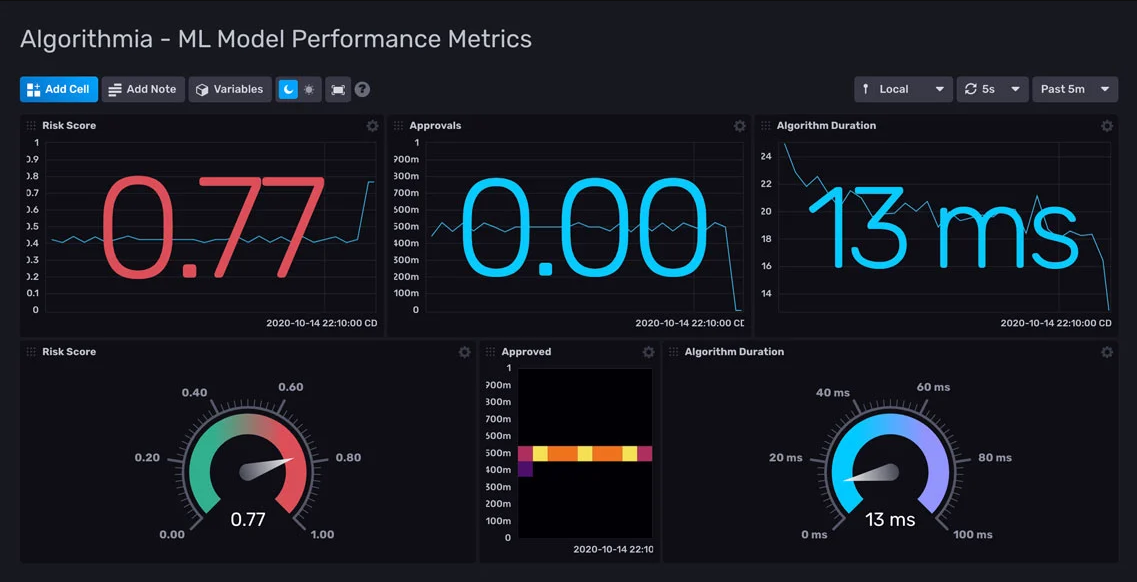

- Algorithmia: It facilitates the deployment, management, and scaling of your machine-learning portfolio.

- Amazon SageMaker: It supports both machine learning and deep learning, and it even includes Autopilot, which helps users without machine learning expertise build models.

- Azure Machine Learning: It’s a cloud-based environment used for all kinds of machine learning, including classical and deep learning and supervised and unsupervised learning.

- Domino Data Lab: It automates Dev Ops for data science, speeding up research and the testing of ideas.

- Google Cloud AI Platform: It includes a dashboard, notebooks, jobs, the AI Hub, data labeling, workflow orchestration, and models.

- HPE Ezmeral MLOps: It uses containers to offer operational machine learning at an enterprise scale. You can use it on-premises, on multiple public clouds, and even in a hybrid model.

- MLflow: An open-source platform from Databricks for managing the machine learning lifecycle. It helps you track experiments, package projects, and manage models.

Components of ML Ops

Now that we’ve looked at the major stages of the ML Ops lifecycle, let’s dive into the key components of ML Ops:

1. Data Collection

Data is a crucial part of ML models, and this stage entails collecting raw data (text, audio, and video) from multiple sources and consolidating it. Data collection is a key component of the second stage of the ML Ops Lifecycle – data engineering.

2. Data Verification

You need to verify the data you collect to ensure it’s valid, up-to-date, reliable, formatted, and reflects the real world. This is a crucial component of the second stage of the ML Ops Lifecycle – data engineering.

3. Feature Extraction

This is where you move on to the third stage of the ML Ops lifecycle: modeling. Select the features your model needs to make accurate predictions, rank them by importance, and keep the most important ones.
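One simple way to rank features by importance is to score each by the strength of its linear relationship with the target. The sketch below uses the absolute Pearson correlation as that score; this is an assumed, deliberately simple proxy that misses nonlinear effects, and the feature names in the example are made up.

```python
import math


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation; returns 0.0 for a constant column (no linear signal)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / math.sqrt(sxx * syy)


def rank_features(columns: dict[str, list[float]], target: list[float],
                  top_k: int) -> list[tuple[str, float]]:
    """Rank features by |correlation| with the target and keep the top_k."""
    scores = [(name, abs(pearson(values, target))) for name, values in columns.items()]
    scores.sort(key=lambda item: item[1], reverse=True)
    return scores[:top_k]


if __name__ == "__main__":
    target = [1, 2, 3, 4, 5]
    columns = {
        "size": [2, 4, 6, 8, 10],     # rises with the target
        "noise": [3, 1, 4, 1, 5],     # weakly related
        "constant": [7, 7, 7, 7, 7],  # carries no information
        "discount": [5, 4, 3, 2, 1],  # falls with the target
    }
    print(rank_features(columns, target, top_k=2))
```

Taking the absolute value keeps strongly negative relationships, like `discount` here, which are just as informative as positive ones.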

4. Configuration

Set up the protocols for communications, system integrations, and how various components in the pipeline can collaborate. Don’t forget to connect your data and ML model to the database and document all the configurations. It is an important component of the modeling stage of the ML Ops lifecycle.

5. ML Code

ML code is an essential component of the modeling stage as well. This is where you develop a baseline model that makes predictions from the input data. Libraries like TensorFlow and fastai can speed up this work. Once you have a model, tune its hyperparameters to improve its performance.

6. Machine Resource Management

This is where you plan the resources your ML model needs, such as CPU, memory, and storage. You may need GPUs or TPUs for a deep learning model, and more storage for a larger model.

7. Analysis Tool

Once the model is ready, use an analysis tool to compute loss, determine the error measurement, check drifting in the model, and ensure result predictions are accurate. An analysis tool is used for the fifth stage of the ML Ops Life Cycle – monitoring. Now that you’ve created a model and deployed it, it is time to collect data on its success.

8. Serving Infrastructure

It is a crucial component of the deployment stage of the ML Ops Life Cycle. The developed and tested model needs to be deployed where users can access it, such as the cloud (AWS, GCP, or Azure). For an edge-based model, you can deploy it through a mobile application, depending on the model’s usage and size.

9. Monitoring

You need to create a monitoring system to observe your deployed model by collecting model logs, user access logs, and prediction logs. Monitoring tools are used for the last stage of the ML Ops Life Cycle – monitoring.
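Prediction logs are easiest to analyze when every entry is structured. This sketch writes one JSON line per prediction with Python’s `logging` module; the field names, the logger name, and the `churn-model-v3` version tag are illustrative assumptions.

```python
import json
import logging
import time
import uuid

logger = logging.getLogger("predictions")


def log_prediction(model_version: str, features: dict, prediction) -> str:
    """Emit one JSON line per prediction and return its request ID."""
    request_id = str(uuid.uuid4())
    record = {
        "request_id": request_id,  # lets you join with user access logs later
        "timestamp": time.time(),
        "model_version": model_version,
        "features": features,
        "prediction": prediction,
    }
    logger.info(json.dumps(record, sort_keys=True))
    return request_id


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO, format="%(message)s")
    # Hypothetical model name and feature values, for illustration only.
    log_prediction("churn-model-v3", {"tenure_months": 14, "plan": "pro"}, 0.82)
```

Because each line is valid JSON, a monitoring job can later parse the logs to compute prediction distributions per model version.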

Role of Data Versioning, Model Monitoring, and Model Governance in ML Ops

Data versioning plays a crucial role in reproducibility. Traditional software only requires versioning code, but ML Ops also versions data and models, tracking model versions, the data used to train each model, and the metadata associated with its training hyperparameters.

Model monitoring and model governance, meanwhile, let you use ML Ops to tweak the developed and deployed model and adapt it to your organization’s needs and requirements.

The feedback loop in the ML Ops product lifecycle enables the data teams to use the monitoring data to make the necessary changes, especially with a volatile factor like data in the mix, which is constantly changing.

Tools and Technologies to Streamline ML Ops Processes

Let’s look at tools you can use to streamline ML Ops processes:

1. XGBoost

XGBoost is a library for gradient-boosted decision trees, an ensemble technique that often improves a model’s accuracy. If your problem is a good fit for decision tree-based models, consider XGBoost instead of a neural network.
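To show what gradient boosting means, here is a from-scratch sketch of the core idea for regression with squared-error loss: each new depth-1 tree (a stump) is fit to the residuals of the ensemble so far and added with a small learning rate. This is not XGBoost’s API or its exact algorithm, which adds regularization, second-order gradient information, and many performance optimizations; in practice you would use the library itself.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Stump:
    """A depth-1 regression tree on a single numeric feature."""
    threshold: float
    left_value: float   # output when x <= threshold
    right_value: float  # output when x > threshold

    def predict(self, x: float) -> float:
        return self.left_value if x <= self.threshold else self.right_value


def fit_stump(xs: list[float], residuals: list[float]) -> Stump:
    """Pick the split that minimizes squared error on the residuals."""
    mean_all = sum(residuals) / len(residuals)
    best_err, best = float("inf"), Stump(xs[0], mean_all, mean_all)
    for threshold in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        lv, rv = sum(left) / len(left), sum(right) / len(right)
        err = sum((r - lv) ** 2 for r in left) + sum((r - rv) ** 2 for r in right)
        if err < best_err:
            best_err, best = err, Stump(threshold, lv, rv)
    return best


def gradient_boost(xs: list[float], ys: list[float], n_rounds: int = 50,
                   learning_rate: float = 0.1) -> Callable[[float], float]:
    """Fit an additive ensemble of stumps and return its prediction function."""
    base = sum(ys) / len(ys)
    stumps: list[Stump] = []

    def predict(x: float) -> float:
        return base + learning_rate * sum(s.predict(x) for s in stumps)

    for _ in range(n_rounds):
        # With squared-error loss, the negative gradient is simply the residual.
        residuals = [y - predict(x) for x, y in zip(xs, ys)]
        stumps.append(fit_stump(xs, residuals))
    return predict
```

For example, `gradient_boost([1, 2, 3, 4, 5, 6], [1, 1, 1, 5, 5, 5])` returns a function whose outputs move close to 1 for small inputs and close to 5 for large ones as rounds accumulate. The small learning rate trades more rounds for a smoother, less overfit ensemble.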

2. TensorRT

TensorRT runs machine learning inference on Nvidia hardware and is highly optimized for Nvidia GPUs, which makes it one of the fastest options for serving deep learning models on that hardware.

3. FBProphet

FBProphet is a time series forecasting library that helps you add time series analysis to your model according to the needs and requirements of your model and organization.

Implementing ML Ops in Your Organization

Before you get started with your ML Ops implementation, define your business use case and establish your success criteria. Once that’s done, you can implement ML Ops at one of three levels of maturity. Let’s look at each of them:

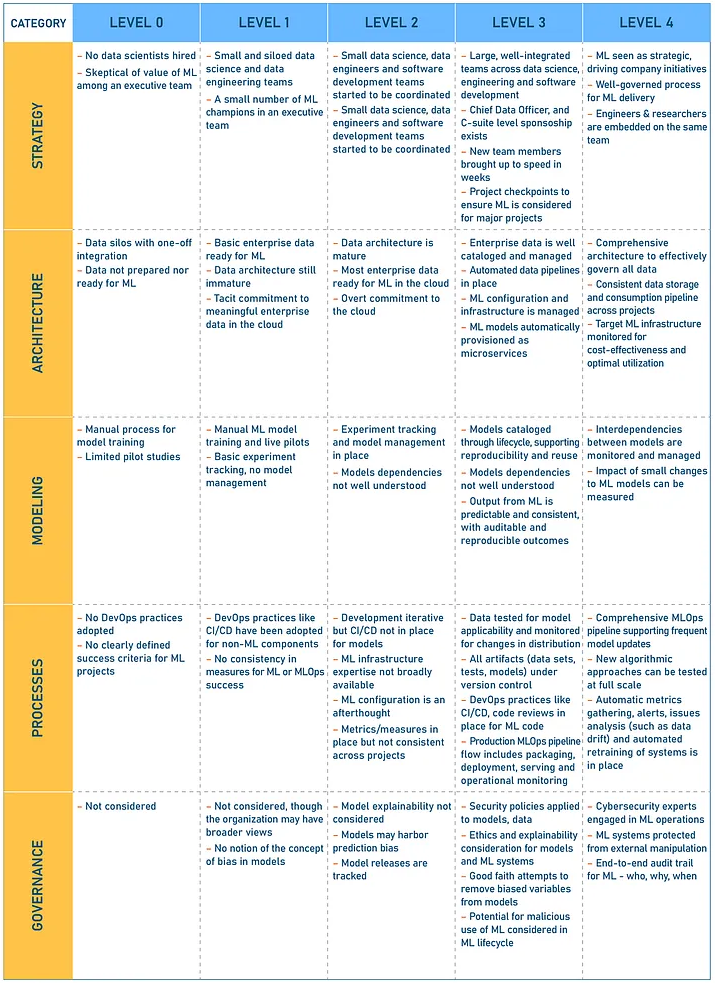

ML Ops Level 0

This level suits companies starting out with ML. It relies on a manual workflow, and models are rarely retrained or maintained once deployed. Here’s how to implement it:

- Use various data sources to select and integrate the relevant data.

- Perform exploratory data analysis (EDA) to understand the extracted data and prepare the data by splitting it into training, validation, and test sets.

- Implement various algorithms on the prepared data to train your ML models. For instance, tune the hyperparameters of the algorithms you implement to find the best-performing model.

- Use a holdout test set to evaluate the quality of your model and use the resulting set of metrics to assess the model.

- Iterate on the model and assess its predictive results to confirm that it’s adequate for deployment.
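The Level 0 steps above can be sketched end to end on a toy problem. This example assumes one numeric feature with binary labels and uses k-nearest neighbors as the algorithm, with k as the hyperparameter: it splits the data into training, validation, and test sets, tunes k on the validation set, and evaluates the chosen model once on the holdout test set. The data and candidate values of k are made up for illustration.

```python
import random
from collections import Counter


def split(rows: list, seed: int = 0, train: float = 0.6, val: float = 0.2):
    """Shuffle, then cut into training, validation, and holdout test sets."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    a = int(len(shuffled) * train)
    b = a + int(len(shuffled) * val)
    return shuffled[:a], shuffled[a:b], shuffled[b:]


def knn_predict(train_rows: list, x: float, k: int) -> int:
    """Majority label among the k training points closest to x."""
    nearest = sorted(train_rows, key=lambda row: abs(row[0] - x))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def accuracy(train_rows: list, eval_rows: list, k: int) -> float:
    hits = sum(knn_predict(train_rows, x, k) == y for x, y in eval_rows)
    return hits / len(eval_rows)


def level0_workflow(rows: list, candidate_ks=(1, 3, 5, 7), seed: int = 0):
    train_rows, val_rows, test_rows = split(rows, seed)
    # Tune the hyperparameter k using the validation set only.
    best_k = max(candidate_ks, key=lambda k: accuracy(train_rows, val_rows, k))
    # Evaluate once on the holdout test set for an unbiased quality estimate.
    return best_k, accuracy(train_rows, test_rows, best_k)


if __name__ == "__main__":
    # Toy data: (feature, label) pairs with two well-separated classes.
    data = [(x, 0) for x in range(50)] + [(x, 1) for x in range(100, 150)]
    best_k, test_acc = level0_workflow(data)
    print(f"chosen k={best_k}, holdout accuracy={test_acc:.2f}")
```

The key discipline is that the test set never influences the choice of k; otherwise the reported accuracy would be optimistic.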

Once you’re satisfied with the results of your model, you can finally deploy it by creating a server in the cloud, an application, or a website.

However, keep in mind that ML models often fail or break once deployed. Continuous integration, continuous delivery, and continuous training (CI/CD/CT) best practices can help prevent that, because they let you build, test, and deploy new implementations quickly.

ML Ops Level 1

The core function of this level is to automate the ML pipeline to perform continuous training of the model. It may be helpful for companies that work in a constantly changing environment or depend on customer-specific information.

Here’s how to implement this process:

- Automate data and model validation so the pipeline can continuously deliver (CD) the model prediction service as new data arrives.

- Orchestrate experiments so they can be iterated rapidly and the whole pipeline is ready to move to production.

- Modularize the source code for the ML components to ensure they’re reusable, shareable, and composable across the ML pipelines.

- In Level 0, you deploy a trained model. In Level 1, you deploy the whole training pipeline, which produces and serves the trained model.

- Additionally, this level includes online model validation, like an A/B testing setup. Test your model for its consistency with the prediction service API and the compatibility of the infrastructure.
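For the online validation step, one common way to set up an A/B test is to route each user deterministically to the current or candidate model by hashing their ID, so a given user always sees the same model. The sketch below assumes string user IDs and a 10% candidate share; the function and experiment names are hypothetical.

```python
import hashlib


def assign_variant(user_id: str, candidate_share: float = 0.1,
                   experiment: str = "model-ab-test") -> str:
    """Deterministically route a user to 'control' or 'candidate'."""
    # Salting with the experiment name reshuffles users across experiments.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Map the first 48 bits of the hash to a bucket in [0, 1).
    bucket = int.from_bytes(digest[:6], "big") / float(1 << 48)
    return "candidate" if bucket < candidate_share else "control"


if __name__ == "__main__":
    users = [f"user-{i}" for i in range(10_000)]
    share = sum(assign_variant(u) == "candidate" for u in users) / len(users)
    print(f"candidate share: {share:.3f}")  # close to 0.1
```

Hashing instead of random sampling means no assignment table has to be stored, and the split stays stable across requests and service restarts.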

The ML pipeline uses data from a Feature Store in batches and transforms it into features required by the model.

ML Ops Level 2

To keep your ML pipeline up to date, you need a robust, automated CI/CD system. This is especially crucial for technology companies that have to retrain their models hourly or daily.

The CI/CD automated ML pipeline includes the following stages:

- Development and Experimentation – You iteratively try out new ML algorithms and modeling approaches. The output is the source code for the ML pipeline steps.

- Pipeline CI – Build the source code and run various tests on it. The outputs are pipeline components such as packages, executables, and artifacts.

- Pipeline CD – Deploy the artifacts created in the CI stage to the target environment. The result is a deployed pipeline that automates retraining and deployment of new model versions.

- Automated Triggering – The pipeline deployed in the CD stage will be executed by a trigger or based on a schedule. The trained model will finally be pushed to the model registry.

- Model CD – This stage entails a deployed model prediction service.

- Monitoring – Use live data to collect statistics on model performance. These statistics can trigger a new pipeline run or a new experiment cycle.
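Automated triggering, the model registry, and monitoring fit together roughly as in this sketch. Everything in it is hypothetical and in-memory: `ModelRegistry` stands in for a real registry, `train_fn` for your training pipeline, and `drift_alert` for whatever signal your monitoring produces. The rule of promoting a new version only if it beats the production model’s validation score is an assumption, not a requirement of Level 2.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class ModelVersion:
    version: int
    score: float      # validation metric, higher is better
    artifact: object  # the trained model itself


@dataclass
class ModelRegistry:
    """Hypothetical in-memory registry; real ones persist versions durably."""
    versions: list[ModelVersion] = field(default_factory=list)
    production: Optional[ModelVersion] = None

    def register(self, score: float, artifact: object) -> ModelVersion:
        mv = ModelVersion(len(self.versions) + 1, score, artifact)
        self.versions.append(mv)
        return mv

    def promote_if_better(self, candidate: ModelVersion) -> bool:
        if self.production is None or candidate.score > self.production.score:
            self.production = candidate
            return True
        return False


def should_trigger(last_run: float, now: float, interval_s: float,
                   drift_alert: bool) -> bool:
    """Run the pipeline on a schedule, or immediately on a drift alert."""
    return drift_alert or now - last_run >= interval_s


def run_pipeline(registry: ModelRegistry,
                 train_fn: Callable[[], tuple[float, object]]) -> ModelVersion:
    score, artifact = train_fn()  # retrain on fresh data
    candidate = registry.register(score, artifact)
    registry.promote_if_better(candidate)
    return candidate
```

Every run is registered, even when it isn’t promoted, so the registry keeps a full history for audits and rollbacks.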

Common Pitfalls to Avoid During ML Ops Implementation

If you’ve decided to use an ML Ops pipeline to solve a problem, you have to do it right. Otherwise, you could run into a lot of implementation problems. Let’s look at a few you could run into:

1. Not Using the Right Stack

While Python can make building your ML Ops solution more efficient, it isn’t always the best language for every solution.

Python can do just about anything, but a more specialized language might give you, say, a 5% efficiency increase, and that can make the difference between getting the right results and falling behind.

So, choose the language, libraries, and stack that fit your ML model instead of defaulting to an all-in-one solution. For instance, you might use Scala for natural language processing tasks because it has excellent NLP libraries. At Iterators we can help you with that.

2. Knowledge Loss

As you start working on your ML program, you’ll most likely need people to build, deploy, and train your models. And while you can get away with a data scientist who moonlights as an ML Ops and Dev Ops engineer, they may not be able to maintain ML solutions that are too complex.

Plus, if your data scientist gets an offer with a 20% pay bump and benefits to boot, they’ll most likely be gone, taking all their knowledge with them. This organizational knowledge loss can cripple your entire ML program.

3. Lack of Retraining

Models often break when you deploy them in the real world if you don’t retrain them frequently or actively monitor their quality in production.

For example, if you developed an ML app that recommends fashion products, its data should be updated according to the latest trends and products. That’s the only way to get current and usable recommendations.

ML Ops in Action

ML Ops improves the efficiency, scalability, and performance of ML projects. It also helps them scale to meet growing demand on their models’ inputs and outputs. And that’s exactly what these companies did:

1. Constru

Constru used an ML solution to reduce the time spent on product experimentation by 50%. Once the solution was integrated into its system, the company could handle 2x as much ML work without needing to hire more staff.

They use ML Ops to gather engineering and business intelligence that helps them analyze and gauge project execution. AI algorithms extract relevant information from the comprehensive 360-degree visual data the company collects, and the results are automatically analyzed and presented through an analytics dashboard.

2. NetApp

NetApp reduced the time-to-deployment for its AI services by 6-12x on average. This led to a 50% reduction in operating costs and a higher ROI for the company.

They used ML Ops to centralize data science work and reduce regulatory risks. ML Ops streamlines the process of data science project development and production for companies with large teams of code-first data scientists.

3. AgroScout

AgroScout is an agriculture company that used ML solutions to increase experiment and data volume by 50x and 100x, ensuring they could predict market demand and trends. This led to a 50% decrease in time-to-production.

They used ML Ops to create an automated, AI-driven scouting platform to detect any outbreak of disease or pests in vast agricultural areas and prevent substantial loss of crops and capital.

Future Trends in ML Ops

ML Ops can increase the efficiency and speed of deploying ML models into production. However, with the increasing complexity of ML models, manual management is proving cumbersome.

That’s why automation is at the forefront of new ML Ops trends. It streamlines operations, slashes deployment time, and reduces the risk of human errors, ensuring accurate and efficient model deployment.

Collaboration and integration are also redefining ML Ops. Historically, data scientists, ML engineers, and IT operations worked in isolated silos, which created communication gaps and inefficiencies. ML Ops aims to bridge these gaps by fostering a culture of collaboration.

So, are you ready to elevate your machine-learning game? Transform your models into real-world impact by unlocking the full potential of ML Ops.