As businesses become more complex, their operations are increasingly made up of smaller services from many providers. To keep all these services working together, stakeholders must manage contracts for system integrations. These service contracts govern the terms of interaction between systems, outlining everything from the kind of data that will be exchanged, to how errors should be handled, to how well a service is expected to perform.

A clearly defined contract ensures that different components can exchange information, even if they’re built with different languages or frameworks. This interoperability makes it possible to plug new services into your existing systems or even update apps without disrupting the entire system.

This article describes strategies for designing and maintaining robust service contracts. We’ll look at what message formats to support and the role of data validation and error handling. You’ll also learn about strategies for versioning and content negotiation to keep things running smoothly when services inevitably change.

Communication Protocols and Data Exchange

Developing good standards for system integration starts with clear and reliable communication protocols. Here are some of the most popular communication protocols:

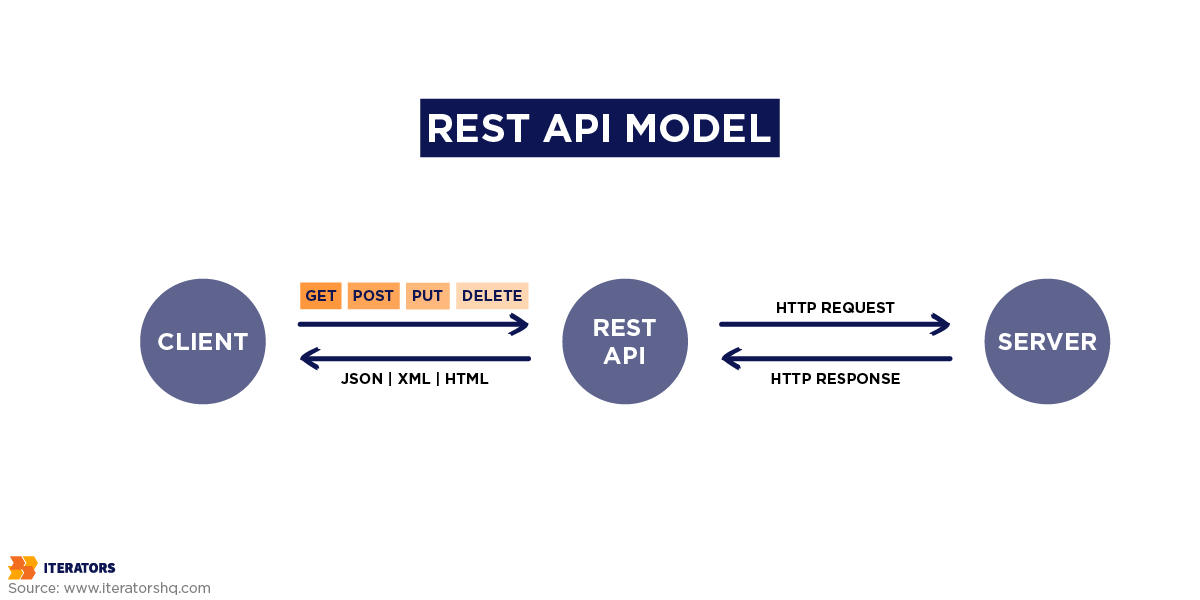

1. REST (Representational State Transfer)

REST is an architectural style for stateless communication, most often implemented over HTTP. It’s popular because it fits with many existing models, it’s simple to program, and you’ll find a lot of other people who understand it. In fact, over 90 percent of developers use REST APIs.

In development projects, REST APIs define clear endpoints, request-response formats, and data models. For example, an e-commerce platform may use a REST API to define a “GetProductList” service contract, specifying the URL structure, supported HTTP methods (e.g., GET or POST), and response schema.
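
As a rough illustration, a contract like “GetProductList” can be written down as data and checked at runtime. The sketch below is only one possible shape: the `/products` path, the field names, and the `check_request` and `check_response` helpers are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RestContract:
    """Hypothetical description of one REST operation."""
    name: str
    path: str
    methods: tuple
    response_fields: dict  # field name -> expected Python type


# Assumed contract for the "GetProductList" example; path and fields are illustrative.
GET_PRODUCT_LIST = RestContract(
    name="GetProductList",
    path="/products",
    methods=("GET",),
    response_fields={"id": str, "name": str, "price": float},
)


def check_request(contract, method, path):
    """Return True if the HTTP method and path are allowed by the contract."""
    return method in contract.methods and path == contract.path


def check_response(contract, items):
    """Return a list of readable violations for a list of product dicts."""
    problems = []
    for index, item in enumerate(items):
        for field, expected in contract.response_fields.items():
            if field not in item:
                problems.append(f"item {index}: missing '{field}'")
            elif not isinstance(item[field], expected):
                problems.append(f"item {index}: '{field}' should be {expected.__name__}")
    return problems
```

Keeping the contract as data means the same definition can drive documentation, tests, and runtime checks.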

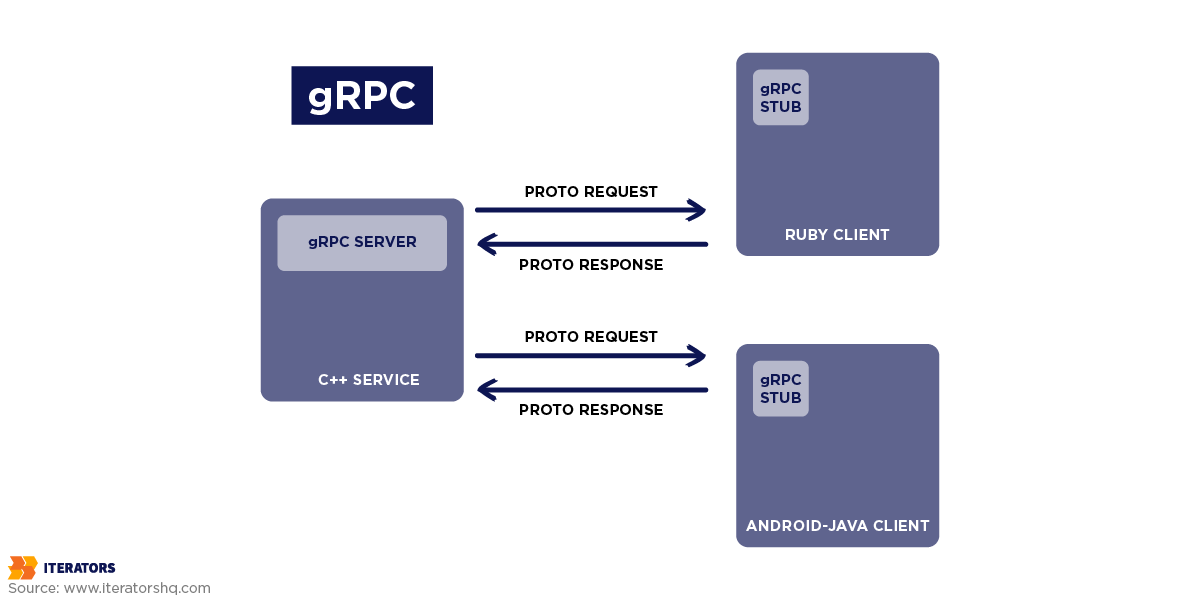

2. gRPC (gRPC Remote Procedure Calls)

gRPC is a remote procedure call framework that runs over HTTP/2 and transfers data in a compact binary format, which makes it well suited to fast, real-time interactions. Using Protocol Buffers, gRPC provides faster serialization and deserialization than JSON. Compared with a typical JSON-over-HTTP API, gRPC’s binary data transfer can offer significant speed and efficiency improvements.

gRPC is therefore a good tool for large-scale microservices architectures that exchange large volumes of data. For instance, a microservices architecture might use gRPC to define a “ProcessPayment” service contract, detailing the serialized input/output formats and procedures. This approach supports high performance and strict adherence to the contract while handling large-scale data operations.

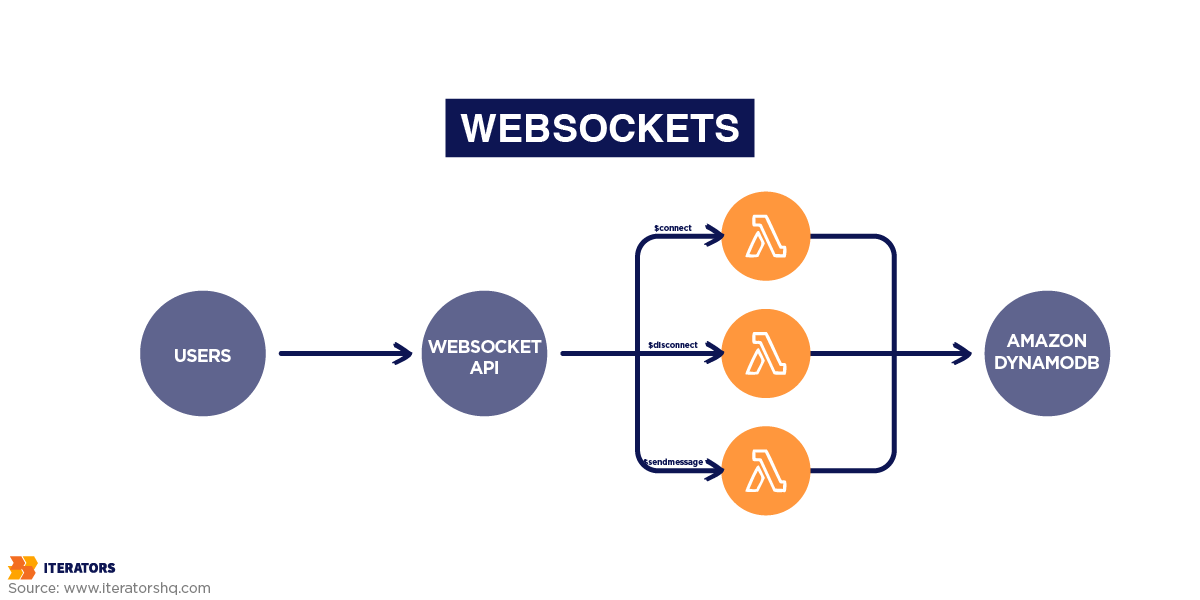

3. WebSockets

WebSockets enable bidirectional communication. A client can send and receive data in real time through a single open connection. WebSocket connections can also carry data in various formats (e.g., JSON or XML).

WebSockets are particularly useful for applications where real-time data streams are required, such as live stock market price charts. For instance, a stock trading platform might implement a “SubscribeToMarketUpdates” service contract, enabling real-time price updates. This eliminates the need for constant polling and ensures data is pushed to clients as soon as it’s available, aligning with the protocol’s strengths.

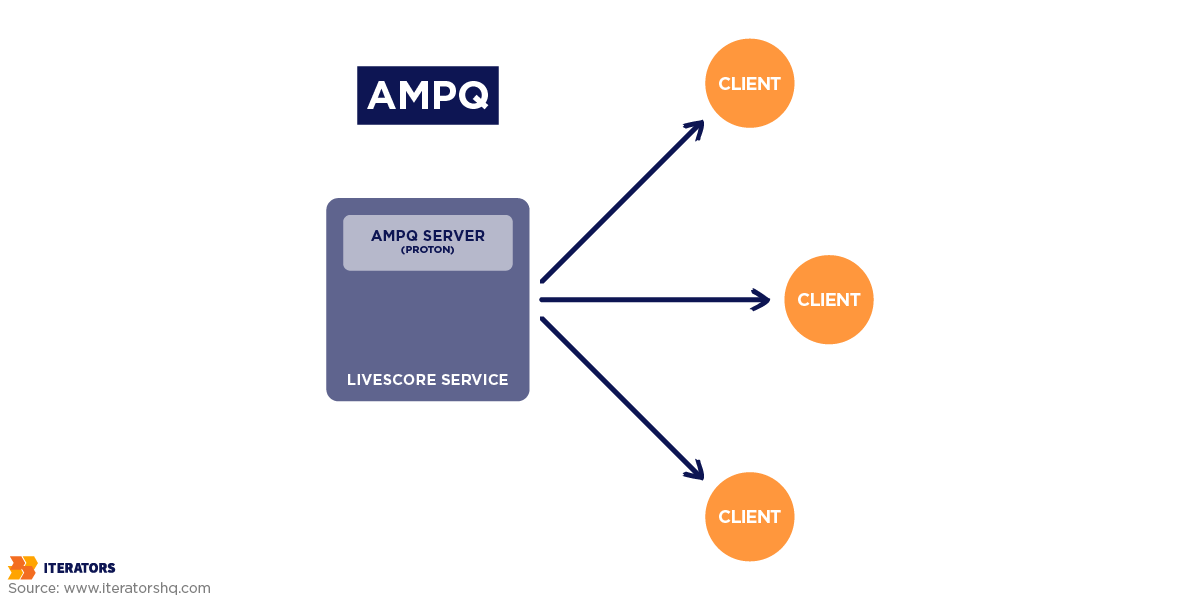

4. AMQP

AMQP is an open protocol for secure, message-oriented communication. It allows queueing, routing, and message delivery confirmation. It’s especially useful in complicated business environments like finance or logistics where you need reliable, secure messaging across disparate systems.

A logistics company, for example, may use AMQP to define a “TrackShipment” service contract, where messages are routed through brokers and confirmed upon delivery. This guarantees reliable communication even in cases of network disruptions, ensuring that critical data exchanges remain intact.

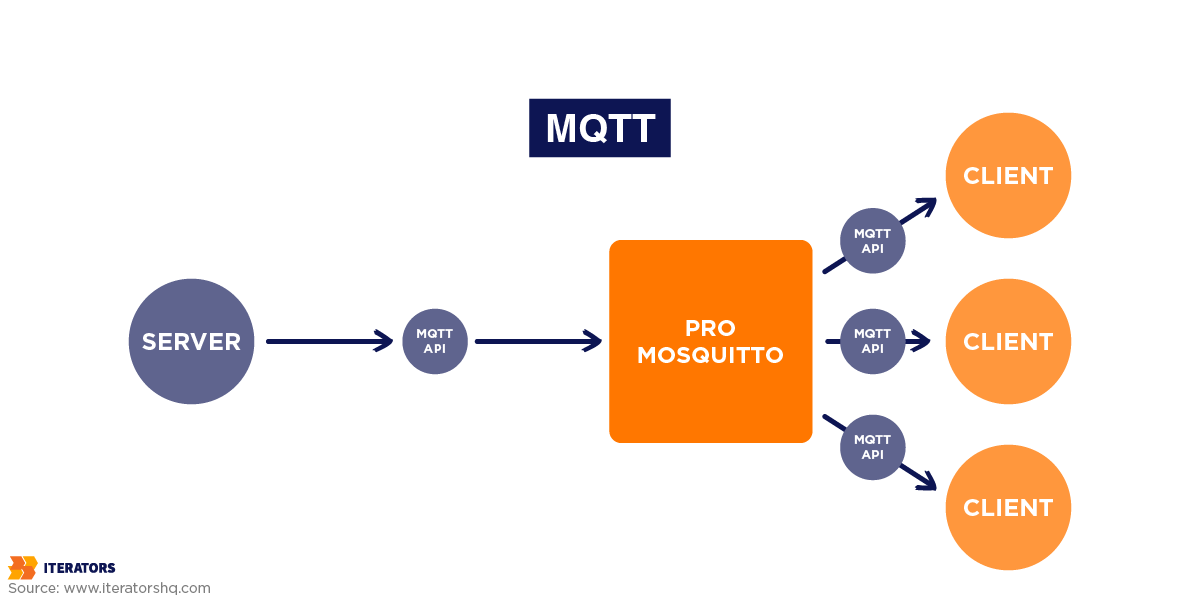

5. MQTT

MQTT is a lightweight messaging protocol designed for low-bandwidth, high-latency networks, and it is widely used for IoT. Its publish-subscribe architecture facilitates service contracts by defining topics and message structures. For example, an IoT network for smart homes may implement an “UpdateDeviceStatus” service contract, enabling devices to publish updates and subscribers to react in real time. This ensures efficient data sharing while conserving bandwidth and power, which is crucial for IoT applications.

MQTT’s small message overhead and low power consumption make it a good fit for applications that need to share data rapidly across very large numbers of devices.
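
To show how topics can form part of a contract, here is a minimal sketch of MQTT-style topic filter matching, where `+` stands for exactly one topic level and `#` stands for all remaining levels. The topic layout `home/<device>/status` is an assumption for the “UpdateDeviceStatus” example, and the sketch leaves out special cases such as topics that start with `$`.

```python
def topic_matches(topic_filter, topic):
    """Return True if an MQTT-style topic filter matches a concrete topic."""
    filter_levels = topic_filter.split("/")
    topic_levels = topic.split("/")
    for index, level in enumerate(filter_levels):
        if level == "#":
            # Multi-level wildcard: valid only as the last level of the filter,
            # where it matches the parent level and everything below it.
            return index == len(filter_levels) - 1
        if index >= len(topic_levels):
            return False
        if level != "+" and level != topic_levels[index]:
            return False
    return len(filter_levels) == len(topic_levels)


# Hypothetical topic filter a subscriber might register for the contract.
DEVICE_STATUS_FILTER = "home/+/status"
```

A subscriber using `home/+/status` would receive status updates from every device, while `home/#` would receive every message published under `home`.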

| Protocol | Purpose | Use Cases |
| --- | --- | --- |
| REST | Standard web communication model based on HTTP methods | Web applications, microservices, CRUD operations |
| gRPC | High-performance, low-latency remote procedure calls | Microservices, real-time systems, inter-service communication in cloud environments |
| AMQP | Reliable, message-oriented communication for high-throughput, fault-tolerant systems | Financial services, distributed systems, message queuing |
| MQTT | Lightweight, efficient protocol for constrained devices | IoT, real-time monitoring, wearables, low-resource or high-latency environments |
| WebSockets | Real-time, bi-directional communication for interactive applications | Live updates, collaborative applications, chat systems, gaming |

Data Validation in Service Contracts

Robust data validation is integral to building scalable and reliable systems. For any integration system with multiple connected services, it’s essential to make sure that only clean, structured data moves between them.

Data validation at entry points is the first line of defense in preventing GIGO (Garbage In, Garbage Out) errors. Poor data quality entering one service can ripple through the entire system and can create downstream failures affecting the operation of multiple services. To avoid such scenarios, validation should occur at every entry point to verify that incoming data follows the expected structure and type specifications imposed by the service contract.

Schema validation is one of the most useful ways to enforce the expected data quality. It checks incoming data against a predefined data model (the schema) at runtime. This check ensures that each new entry adheres to the expected structure and data types.

Structuring Data with Message Schemas

Defining message schemas is necessary to specify the structure and format of the data exchanged. If the format is clear, consistent, and universally known, the services will interpret the data accurately, and the risk of error and miscommunication will be minimal. The most common schema formats are:

1. JSON Schema

JSON Schema is a widely used standard for defining the structure of JSON data. It allows specification of required fields, data types, and value constraints, ensuring compliance with agreed-upon formats. In contract management, JSON Schema formalizes expectations for data exchange.

For example, a financial service API might define a “ProcessPayment” contract using JSON Schema, mandating that fields like “transaction_amount” are numeric and non-negative. This clarity reduces errors, ensuring that all parties conform to the same data structure.
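
The “ProcessPayment” rule above can be sketched in a few lines. Note that this is a hand-written stand-in for illustration, not a JSON Schema implementation; a real project would more likely use a JSON Schema validation library. The `currency` and `note` fields are assumptions added for the example.

```python
# Simplified, hand-rolled rules that mimic what a schema would express.
PROCESS_PAYMENT_RULES = {
    "transaction_amount": {"type": (int, float), "minimum": 0, "required": True},
    "currency": {"type": (str,), "required": True},   # assumed field
    "note": {"type": (str,), "required": False},      # assumed optional field
}


def validate(payload, rules):
    """Return a list of validation errors; an empty list means the payload passed."""
    errors = []
    for field, rule in rules.items():
        if field not in payload:
            if rule.get("required"):
                errors.append(f"{field}: required field is missing")
            continue
        value = payload[field]
        # bool is a subclass of int in Python, so booleans are rejected explicitly.
        if isinstance(value, bool) or not isinstance(value, rule["type"]):
            errors.append(f"{field}: wrong type")
            continue
        if "minimum" in rule and value < rule["minimum"]:
            errors.append(f"{field}: must be >= {rule['minimum']}")
    return errors
```

Returning every error at once, instead of stopping at the first, gives the calling service enough detail to fix its request in one pass.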

2. XML Schema (XSD)

XML Schema Definition (XSD) provides robust validation for XML data. It supports enforcing constraints like pattern matching and custom data structures. In contract management, XSD is often used for legacy systems where strict validation is critical.

For instance, an identity verification service could define a “ValidateUserProfile” contract, requiring fields like “email” to follow a specified format. Although less common in modern APIs, XSD remains valuable for high-stakes applications requiring rigorous data integrity.

3. Avro and Protocol Buffers (Protobuf)

These are binary serialization frameworks for fast, compact communication. Avro is often used in big data applications, whereas Protocol Buffers (used with gRPC) are a good fit for high-performance applications that have a rigid data structure and need low overhead.

When leveraging Avro or Protobuf schemas, you can explicitly specify data types, structures, and defaults for integrations that involve lots of validation and data transformation. For example, a “SyncUserActivity” contract in a gRPC-based system might use Protobuf to ensure that all nodes interpret the serialized data identically. Avro, on the other hand, might underpin contracts in big data environments, facilitating seamless integration and transformation of massive datasets.

Validating these schemas at run time prevents bad data from coursing through your system and breaking downstream services. Validation can thus be a key tool for maintaining data integrity across system integrations.

Beyond schema validation, you may apply more specialized filtering and transformation to standardize field formats and weed out inconsistencies. For example, you might filter out extra whitespace or inconsistent date formats. These steps make the processing and pooling of data easier in the long run.
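
A minimal sketch of that kind of normalization might look like the following. The list of accepted date formats is an assumption; in particular, reading `05/12/2024` as day/month/year is a choice the contract would need to state explicitly.

```python
from datetime import datetime

# Assumed set of date formats that upstream systems might send.
KNOWN_DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%Y/%m/%d")


def normalize_whitespace(text):
    """Trim both ends and collapse internal runs of whitespace to one space."""
    return " ".join(text.split())


def normalize_date(text):
    """Convert a date in any known format to ISO 8601 (YYYY-MM-DD)."""
    cleaned = normalize_whitespace(text)
    for fmt in KNOWN_DATE_FORMATS:
        try:
            return datetime.strptime(cleaned, fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date format: {text!r}")
```

Rejecting unknown formats with an error, instead of guessing, keeps ambiguous dates from slipping into downstream services.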

How to Enforce Data Integrity With Error Handling and Recovery Patterns

Error Handling Strategies

Error handling does more than make individual programs robust. In a service contract, it also defines how systems should behave when faced with data mismatches, network failures, or complete service downtime. Here are some effective strategies for handling errors:

1. Controlled Error Responses

Service contracts should define clear error messages and codes so that services can interpret and handle errors meaningfully. When creating error responses, a structured format such as a JSON error object gives clients rich, uniform error information, which keeps communication direct and makes debugging easier.

Error responses could include codes for validation errors, authentication failures, and others. Here’s an example of an error response for a validation failure:

{
  "errorCode": "400 Bad Request",
  "errorMessage": "Invalid email format. Please enter a valid email address.",
  "httpStatus": 400
}
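
One way a service could produce that response consistently is to build every error through a single helper. The sketch below assumes the three field names from the example above; the `validate_email` function and its deliberately simple pattern are hypothetical and far looser than real email validation.

```python
import json
import re

# Deliberately simple pattern for illustration; real email validation is more involved.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def error_response(http_status, error_code, message):
    """Build an error body with the same three fields every time."""
    return {"errorCode": error_code, "errorMessage": message, "httpStatus": http_status}


def validate_email(email):
    """Return None when the email looks valid, otherwise an error response."""
    if EMAIL_PATTERN.match(email):
        return None
    return error_response(
        400,
        "400 Bad Request",
        "Invalid email format. Please enter a valid email address.",
    )


def to_json(response):
    """Serialize the error body for the HTTP response."""
    return json.dumps(response)
```

Because every error goes through `error_response`, clients can rely on the same fields being present no matter which check failed.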

2. Retry and Timeout Policies

Retry policies determine how many times a service can attempt a failed request before giving up, while timeout policies dictate how long a service should wait for a given request to complete before treating it as failed. For example, a payment API might include this policy in its contract:

“If a payment request fails due to a network error, the client must retry up to 2 times with a minimum interval of 5 seconds between retries.”

These policies help clients handle network problems and transient errors responsibly, and they prevent services from waiting indefinitely for a response.
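
The sample policy (up to 2 retries, at least 5 seconds apart) could be sketched as below. `NetworkError` is a hypothetical exception type, and the sketch assumes the wrapped operation enforces its own timeout and raises `NetworkError` when it expires. The `sleep` parameter exists so the waiting behavior can be replaced in tests.

```python
import time


class NetworkError(Exception):
    """Hypothetical exception representing a transient network failure."""


def call_with_retries(operation, max_retries=2, min_interval=5.0, sleep=time.sleep):
    """Run operation, retrying on NetworkError up to max_retries times."""
    retries_used = 0
    while True:
        try:
            return operation()
        except NetworkError:
            if retries_used >= max_retries:
                raise  # retry budget exhausted: surface the failure to the caller
            retries_used += 1
            sleep(min_interval)  # wait at least the contract's minimum interval
```

Only `NetworkError` triggers a retry here; other failures, such as a declined card, pass straight through because retrying them would not help.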

3. Fallback Mechanisms

Fallback mechanisms are alternative workflows that kick in when a primary service fails. For example, when a payment provider goes down, a service might attempt a retry later, or try to use a backup payment method. A service contract for an e-commerce platform might specify:

“If the primary payment gateway is unavailable, the service must automatically attempt to process the transaction through a secondary gateway.”

Another example could be a content delivery API specifying that, in case of a failed request for high-resolution images, the system should serve cached low-resolution versions to maintain functionality.

Fallback mechanisms are an important part of keeping a product usable during failures. Adding these strategies to your service contracts helps your service continue to operate when primary capabilities are down. It also enables users to complete their tasks without undue interruption.

4. Idempotency for Safe Retries

In API design, idempotency ensures that executing an operation multiple times has the same effect as executing it once. This is particularly important for actions like payments or database modifications, where duplicate requests can lead to errors such as double charges. A service contract for a payment API might specify:

“Each payment request must carry a unique idempotency key. If the service receives a second request with the same key, it must return the result of the original request without processing the payment again.”
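
To make the idempotency idea concrete, here is a small in-memory sketch in which the client sends a unique key with each payment request and the service remembers the result for that key. The class and method names are hypothetical, and a real service would keep the keys in shared, persistent storage, not in a dictionary.

```python
class PaymentService:
    """In-memory sketch of idempotent payment handling."""

    def __init__(self):
        self._results = {}  # idempotency key -> stored result
        self.charges = []   # record of charges actually performed

    def process_payment(self, idempotency_key, amount):
        if idempotency_key in self._results:
            # Repeat of a request already handled: return the stored result
            # without charging again.
            return self._results[idempotency_key]
        self.charges.append(amount)
        result = {"status": "Completed", "amount": amount}
        self._results[idempotency_key] = result
        return result
```

With this in place, a client that retries after a timeout can safely resend the same request, because the second call does not create a second charge.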

Error Recovery Patterns

Integrated systems must also include patterns for error recovery, which allow services to recover gracefully from failures. These patterns can be integrated into service contracts to provide standard responses and avoid the propagation of errors. Here are a few examples of error recovery patterns:

1. Circuit Breaker Pattern

This pattern blocks subsequent requests to a service when it detects a series of failures. This way, the service has time to recover before regular traffic resumes. For example, a service contract for a payment processing API might specify:

“If the ProcessPayment endpoint returns a timeout error for more than 5 consecutive requests within a 1-minute window, the circuit breaker must trigger, halting further requests for 2 minutes and notifying the monitoring system.”

This prevents additional strain on the failing service while enabling prompt issue resolution.
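
A simplified sketch of that policy is shown below: more than 5 consecutive timeouts inside a 60-second window open the circuit for 120 seconds. It is an illustration under assumptions, not a full implementation. It has no half-open probing state, it leaves out the monitoring notification, and the `clock` parameter is there only so time can be simulated.

```python
import time
from collections import deque


class CircuitOpenError(Exception):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(self, max_failures=5, window=60.0, cooldown=120.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.window = window
        self.cooldown = cooldown
        self.clock = clock
        self._failures = deque()  # timestamps of consecutive timeouts
        self._opened_at = None

    def call(self, operation):
        now = self.clock()
        if self._opened_at is not None:
            if now - self._opened_at < self.cooldown:
                raise CircuitOpenError("circuit is open; request rejected")
            # Cooldown elapsed: close the circuit and start counting afresh.
            self._opened_at = None
            self._failures.clear()
        try:
            result = operation()
        except TimeoutError:
            self._failures.append(now)
            # Keep only the failures that fall inside the sliding window.
            while self._failures and now - self._failures[0] > self.window:
                self._failures.popleft()
            if len(self._failures) > self.max_failures:
                self._opened_at = now
            raise
        self._failures.clear()  # any success breaks the run of failures
        return result
```

While the circuit is open, callers fail fast with `CircuitOpenError` and the struggling service receives no traffic at all.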

2. Bulkhead Pattern

The bulkhead pattern isolates different components of an integration system so that errors in one part don’t propagate to other parts. This not only makes the logical structure of a system simpler but also limits the damage an error can cause to a bounded component. For instance, in a multi-tenant e-commerce platform, a service contract might state:

“Each tenant’s data processing must be isolated to ensure that errors affecting one tenant do not impact others. If one tenant’s data processing exceeds allocated resources, only that tenant’s requests will be throttled.”
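
The tenant isolation rule can be sketched as a per-tenant limit on in-flight requests. The names below are hypothetical, and the sketch is single-threaded; a concurrent service would need a lock or per-tenant semaphores around the counters.

```python
class BulkheadFullError(Exception):
    """Raised when a tenant has used up its own allocation."""


class TenantBulkhead:
    def __init__(self, max_concurrent_per_tenant):
        self.limit = max_concurrent_per_tenant
        self._in_flight = {}  # tenant id -> number of active requests

    def acquire(self, tenant_id):
        """Reserve a slot for the tenant, or throttle only that tenant."""
        active = self._in_flight.get(tenant_id, 0)
        if active >= self.limit:
            raise BulkheadFullError(f"tenant {tenant_id} is throttled")
        self._in_flight[tenant_id] = active + 1

    def release(self, tenant_id):
        """Free a slot once the tenant's request has finished."""
        self._in_flight[tenant_id] = max(0, self._in_flight.get(tenant_id, 0) - 1)
```

Because each tenant has its own counter, one tenant exhausting its allocation never blocks requests from another.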

3. Graceful Degradation

Graceful degradation allows an integration system to reduce its functionality in response to failures while maintaining core operations. For example, a weather data API’s service contract could specify:

“If real-time weather data is unavailable, the system must serve cached data from the last successful update within a 24-hour window.”
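
That clause could be sketched as follows. `fetch_live` is a hypothetical callable that raises `ConnectionError` when the live source is down, and the `stale` flag in the result is an assumption added so clients can tell cached data from fresh data.

```python
import time

MAX_CACHE_AGE = 24 * 60 * 60  # 24 hours, in seconds


class WeatherService:
    def __init__(self, fetch_live, clock=time.time):
        self.fetch_live = fetch_live  # hypothetical callable; may raise ConnectionError
        self.clock = clock
        self._cached = None           # (timestamp, data) of the last successful update

    def get_weather(self):
        try:
            data = self.fetch_live()
        except ConnectionError:
            # Degrade gracefully: serve cached data if it is recent enough.
            if self._cached and self.clock() - self._cached[0] <= MAX_CACHE_AGE:
                return {"data": self._cached[1], "stale": True}
            raise
        self._cached = (self.clock(), data)
        return {"data": data, "stale": False}
```

Once the cache is older than 24 hours the error is surfaced, since the contract no longer allows serving that data.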

The Robustness Principle: Designing for Resilience

One general guiding principle in designing service contracts is known as the Robustness Principle, often summarized as “Be conservative in what you send, be liberal in what you accept.” This means that services should be very strict on the data they send through the contract. All required fields should be included and properly formatted. On the other hand, when services receive data, they have to be more forgiving, accepting non-standard or unexpected data as much as possible.

Google Maps is an excellent example of the robustness principle in practice. The app is liberal in what it accepts, allowing users to input locations in a variety of ways, including misspelled words or vague descriptions.

Enforcing this principle reduces the possibility of small inconsistencies blowing up into system errors. It helps ensure a service can function continuously, even if a peer component is updated or misconfigured at some point.
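
In code, the principle often shows up as a tolerant receiver paired with a strict sender. The sketch below uses a hypothetical two-field contract; how much leniency is safe (here, ignoring unknown fields, coercing types, and trimming whitespace) is a judgment call for each contract.

```python
# Hypothetical contract: field name -> expected type.
CONTRACT_FIELDS = {"user_id": str, "email": str}


def accept(payload):
    """Liberal receiver: ignore unknown fields and tidy up harmless variations."""
    result = {}
    for field, expected_type in CONTRACT_FIELDS.items():
        if field not in payload:
            raise ValueError(f"missing required field: {field}")
        result[field] = expected_type(payload[field])  # e.g. accept 42 for "42"
    result["email"] = result["email"].strip()
    return result


def send(record):
    """Conservative sender: emit exactly the contract's fields, nothing else."""
    missing = [field for field in CONTRACT_FIELDS if field not in record]
    if missing:
        raise ValueError(f"refusing to send, missing: {missing}")
    return {field: record[field] for field in CONTRACT_FIELDS}
```

The receiver still rejects payloads that lack required fields; being liberal does not mean accepting data it cannot interpret.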

Handling Service Evolution with Content Negotiation and Versioning

Service contracts should also account for the new capabilities and updates that arrive as systems evolve. Content negotiation and versioning help services handle different data formats and accommodate changes without breaking existing clients. Defining these tactics in the service contract upfront helps keep system integrations from breaking down.

Adapt to Multiple Data Formats with Content Negotiation

Content negotiation is the ability of a service to serve the same resource in different representations depending on what the client asks for, without changing much in the service logic itself. This lets a service interoperate with many different systems.

With content negotiation, services can provide responses in a variety of formats, such as JSON, XML, or Protocol Buffers, according to the client’s needs. This flexibility is essential in systems where clients have different format requirements; for example, older systems may need XML while newer services expect JSON. So, how is it done?

For HTTP-based services, the Accept header lets clients state which response formats they can handle, such as application/json or application/xml. The service checks this header and responds in the requested format.

Custom media types give you more control over content negotiation by encoding the data format, API version, and other details in the type itself, for example application/vnd.myapi.v1+json. A media type like this tells clients exactly what they are getting, which is useful in scenarios requiring distinct formats or schema versions.
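
Here is a simplified sketch of how a service might choose a response format from the Accept header, including the `q` weights clients can attach to each type. It assumes the service supports only JSON and XML and ignores partial wildcards such as `application/*`, so treat it as an illustration, not a complete implementation of HTTP content negotiation.

```python
SUPPORTED = ("application/json", "application/xml")  # assumed server capabilities


def parse_accept(header):
    """Return (media_type, q) pairs sorted from most to least preferred."""
    choices = []
    for part in header.split(","):
        pieces = [piece.strip() for piece in part.split(";")]
        media_type = pieces[0].lower()
        if not media_type:
            continue
        quality = 1.0  # default weight when no q parameter is given
        for param in pieces[1:]:
            name, _, value = param.partition("=")
            if name.strip().lower() == "q":
                try:
                    quality = float(value)
                except ValueError:
                    quality = 0.0
        choices.append((media_type, quality))
    # sorted() is stable, so equal q-values keep the client's original order.
    return sorted(choices, key=lambda choice: choice[1], reverse=True)


def negotiate(header, supported=SUPPORTED):
    """Pick the response format, or None if nothing acceptable is supported."""
    for media_type, quality in parse_accept(header or "*/*"):
        if quality <= 0:
            continue
        if media_type == "*/*":
            return supported[0]
        if media_type in supported:
            return media_type
    return None
```

When `negotiate` returns `None`, an HTTP service would typically answer with 406 Not Acceptable.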

Versioning Service Contracts for Smooth Updates

With the right versioning policies, services can add new functionality or improvements without impacting existing clients. Service contracts can also be designed with transparent versioning rules to make updates roll out in a safe way, minimizing potential issues for dependent systems. Versioning can be done in several ways including:

1. URI Path Versioning

The most common approach is URI path versioning. It involves adding a version number to the API endpoint, such as /v1/resource or /v2/resource. This makes it easy for the clients to decide which version of the service they want to access. And it makes version control simple to manage.

2. Header Versioning

For header versioning, the version information is stored in the HTTP headers instead of the URL path. A client, for instance, might call for version 2 by adding a custom header like X-API-Version: 2. Header versioning keeps the URL structure clean. It can be handy for services that need flexible version control without changing endpoint paths.

3. Query Parameter Versioning

Query parameter versioning allows clients to specify the version as a query parameter, such as /resource?version=2. This method can be handy in systems where the URL path format must be static, or in cases where clients cannot easily set custom headers (e.g., plain links opened in a browser).
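
The three approaches above can be resolved by one small function. The precedence used here (path first, then header, then query parameter) and the default version are assumptions; a contract should support one scheme or state the order explicitly. The sketch also treats header names as case-sensitive dictionary keys, which real HTTP headers are not.

```python
import re
from urllib.parse import parse_qs, urlsplit


def requested_version(url, headers, default=1):
    """Resolve the API version from the path, then a header, then the query string."""
    parts = urlsplit(url)
    path_match = re.match(r"^/v(\d+)(/|$)", parts.path)
    if path_match:
        return int(path_match.group(1))  # e.g. /v2/resource
    header_value = headers.get("X-API-Version", "").strip()
    if header_value.isdigit():
        return int(header_value)         # e.g. X-API-Version: 2
    query_value = parse_qs(parts.query).get("version", [""])[0]
    if query_value.isdigit():
        return int(query_value)          # e.g. /resource?version=2
    return default
```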

4. Semantic Versioning and Change Communication

Semantic versioning uses a three-part version identifier: major.minor.patch, such as 2.3.1. Each segment represents a different level of change:

- Major: Introduces backward-incompatible changes (e.g., v2.0.0).

- Minor: Adds backward-compatible features (e.g., v2.1.0).

- Patch: Fixes bugs without changing functionality (e.g., v2.1.1).

Version headers or custom media types can be used with semantic versioning to show versions without modifying the URL path. This method provides fine-grained version control so clients can track feature releases and bug fixes.
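
The major.minor.patch rules translate directly into code. This sketch handles plain three-part versions only and ignores pre-release or build suffixes; the function names are hypothetical.

```python
def parse_version(text):
    """Parse 'v2.3.1' or '2.3.1' into a (major, minor, patch) tuple of ints."""
    major, minor, patch = text.lstrip("v").split(".")
    return int(major), int(minor), int(patch)


def classify_change(old, new):
    """Name the most significant segment that differs between two versions."""
    old_v, new_v = parse_version(old), parse_version(new)
    for index, label in enumerate(("major", "minor", "patch")):
        if old_v[index] != new_v[index]:
            return label
    return "none"


def is_compatible(client_built_against, server_version):
    """A client keeps working if the major matches and the server is not older."""
    client = parse_version(client_built_against)
    server = parse_version(server_version)
    return client[0] == server[0] and server >= client
```

For example, a client built against 2.1.0 can keep calling a 2.3.1 server, but it needs a migration before moving to 3.0.0.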

Implement Deprecation Policies

Services may have to be retired as they are updated and upgraded. Deprecation policies allow clients sufficient time and instructions to upgrade to the latest version so that service updates are less disruptive. Here’s how to go about it:

1. Advance Notices and Documentation

Notify clients well before you deprecate an older version. Provide clear API documentation detailing the migration process. This should cover code examples, migration guides, and timelines.

2. Gradual Phase-Out

Retire deprecated versions gradually and give customers time to adjust. For instance, start by denying access to non-critical functionality on legacy versions, and then set a firm expiration date after which the old version will no longer be available.

3. Monitoring and Support During Transition

Provide assistance and tracking solutions for clients during the transition period. Analytics and reporting can show which customers or systems are still using an old version. Then you can reach out to these clients directly to help them migrate.
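
One way to make a deprecation timeline visible to clients at runtime is to attach it to responses from the old version. The sketch below uses the standard HTTP `Sunset` header for this; the retirement date, the documentation URL, and the usage counter are all made-up values for illustration.

```python
from collections import Counter
from datetime import datetime, timezone
from email.utils import format_datetime

# Hypothetical retirement schedule: API version -> moment it stops being served.
SUNSET_DATES = {1: datetime(2025, 12, 31, 23, 59, 59, tzinfo=timezone.utc)}

# Counts calls per (client, version) so remaining users of old versions can be contacted.
usage = Counter()


def deprecation_headers(client_id, version, docs_url="https://example.com/migrate"):
    """Record the call and return extra headers for deprecated versions."""
    usage[(client_id, version)] += 1
    sunset = SUNSET_DATES.get(version)
    if sunset is None:
        return {}
    return {
        "Sunset": format_datetime(sunset, usegmt=True),  # HTTP date format
        "Link": f'<{docs_url}>; rel="sunset"',           # pointer to migration docs
    }
```

The `usage` counter gives the analytics described above: any client still appearing under a deprecated version is a candidate for direct outreach.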

Advanced Contract Specifications for Complex Integrations

When system integrations get more complex, basic REST API definitions alone may not be enough for modern, distributed systems. Advanced contract definitions extend service interactions by specifying workflows, security, and communication protocols in more detail. Here’s how to create advanced contracts to accommodate different integration models and data sources.

1. Establish Precise Request and Response Models

Advanced contracts have very detailed request/response models with predefined fields, so payloads carry no unnecessary information. This detail-oriented approach also clarifies which optional fields or metadata may differ between integrations. As a result, responses become more predictable. For example, in a financial API, a response model for transaction details might include:

{
  "transaction_id": "12345",
  "amount": 150.00,
  "currency": "USD",
  "status": "Completed",
  "timestamp": "2024-12-05T12:00:00Z",
  "trace_id": "abc-123-xyz"
}

Identify which fields are mandatory and which are optional. This makes expectations transparent to clients and services and simplifies data handling. Add metadata fields like timestamps or trace IDs so that downstream services can record and analyze requests.
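
A typed model is one way to record the mandatory/optional split in code. In this sketch the choice of mandatory fields is an assumption (the example response does not say which are required), with `trace_id` treated as optional metadata.

```python
from dataclasses import dataclass
from typing import Optional

# Assumed split: everything except trace_id is mandatory.
MANDATORY_FIELDS = ("transaction_id", "amount", "currency", "status", "timestamp")


@dataclass(frozen=True)
class TransactionDetails:
    transaction_id: str
    amount: float
    currency: str
    status: str
    timestamp: str
    trace_id: Optional[str] = None  # optional metadata for tracing

    @classmethod
    def from_dict(cls, data):
        """Build the model from a decoded JSON object, enforcing mandatory fields."""
        missing = [name for name in MANDATORY_FIELDS if name not in data]
        if missing:
            raise ValueError(f"missing mandatory fields: {missing}")
        values = {name: data[name] for name in MANDATORY_FIELDS}
        # Unknown keys are ignored; trace_id is copied only when present.
        return cls(trace_id=data.get("trace_id"), **values)
```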

2. Design Sequential Messaging and Workflow Management

Integrations often involve many dependent operations that must run in sequence. Clearly defined workflows let teams manage these dependencies without incomplete transactions or race conditions.

For multi-step operations, specify the exact sequence of message exchanges so you can avoid race conditions or incomplete steps. For example, when processing an order, the messages may follow this sequence:

Order Confirmation >> Payment Processing >> Inventory Update >> Shipping Initiation >> Order Completion
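
A small state machine can enforce that sequence by rejecting any message that arrives out of order. This is a minimal single-order sketch; the class name is hypothetical and persistence and concurrency are left out.

```python
ORDER_STEPS = (
    "Order Confirmation",
    "Payment Processing",
    "Inventory Update",
    "Shipping Initiation",
    "Order Completion",
)


class OrderWorkflow:
    """Tracks one order and accepts only the next expected step."""

    def __init__(self):
        self._next_index = 0

    def handle(self, step):
        expected = ORDER_STEPS[self._next_index] if not self.completed else None
        if step != expected:
            raise ValueError(f"out-of-order message: got {step!r}, expected {expected!r}")
        self._next_index += 1

    @property
    def completed(self):
        return self._next_index == len(ORDER_STEPS)
```

A rejected message leaves the workflow state unchanged, so the sender can retry once the missing step has been processed.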

3. Implement Event-Driven Behavior and Pub/Sub for Asynchronous Operations

Event-driven design is often employed for microservices. It allows systems to adapt in real-time. By setting up event types, triggers, and subscriptions in your contract, services can talk to each other asynchronously and react immediately to actions or changes.

For example, in an e-commerce platform, you could have:

- Event Type: ItemRestocked

- Trigger: Inventory level exceeds the threshold.

- Subscribers: Marketing services (to notify customers), inventory analytics tools, and order processing systems.

A service contract could define event payloads like:

{
  "event_type": "ItemRestocked",
  "item_id": "67890",
  "quantity": 100,
  "timestamp": "2024-12-05T12:30:00Z"
}
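
The event type, trigger, and subscribers can be sketched with a tiny in-process event bus. Real systems would normally deliver events asynchronously through a message broker; this synchronous version, with its hypothetical `EventBus` and `record_stock_level` names, only illustrates the contract’s moving parts.

```python
class EventBus:
    """Minimal in-process publish/subscribe registry."""

    def __init__(self):
        self._subscribers = {}  # event type -> list of handler callables

    def subscribe(self, event_type, handler):
        self._subscribers.setdefault(event_type, []).append(handler)

    def publish(self, event):
        for handler in self._subscribers.get(event["event_type"], []):
            handler(event)


def record_stock_level(bus, item_id, quantity, threshold, timestamp):
    """Publish ItemRestocked when the inventory level exceeds the threshold."""
    if quantity > threshold:
        bus.publish({
            "event_type": "ItemRestocked",
            "item_id": item_id,
            "quantity": quantity,
            "timestamp": timestamp,
        })
```

Each subscriber (marketing, analytics, order processing) registers its own handler, and the publisher does not need to know who is listening.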

4. Optimize Data with Compression and Encoding Standards

Data compression and encoding rules are essential for successful system integrations. This is especially true in environments with large data sets, bandwidth constraints, or real-time requirements.

Compression reduces the size of data being transferred, which speeds up communication and saves bandwidth. Choose a compression technique based on the type of data and on how much processing time you can afford to compress and decompress it.

Gzip and Deflate

Gzip and Deflate are widely used in systems and services. These multipurpose algorithms work well for compressing text files such as JSON and XML. Gzip compression, in particular, is widely used in web communication and reduces data size without much overhead. This makes it perfect for general-purpose applications.

Brotli

Brotli has better data compression ratios than Gzip, especially for static or structured data. Although not universally supported, Brotli is a good option when you are focused on having a small data size and the client can handle the decompression.
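
Python’s standard library covers Gzip and Deflate, so a round trip for a JSON payload can be sketched as below. Brotli is left out because it needs a third-party package. The function names and the sample payload are assumptions for illustration.

```python
import gzip
import json
import zlib


def compress_json(payload, method="gzip"):
    """Serialize payload to UTF-8 JSON and compress it."""
    raw = json.dumps(payload).encode("utf-8")
    if method == "gzip":
        return gzip.compress(raw)
    if method == "deflate":
        return zlib.compress(raw)  # zlib-wrapped deflate stream
    raise ValueError(f"unsupported compression method: {method}")


def decompress_json(body, method="gzip"):
    """Reverse compress_json and return the original object."""
    if method == "gzip":
        raw = gzip.decompress(body)
    elif method == "deflate":
        raw = zlib.decompress(body)
    else:
        raise ValueError(f"unsupported compression method: {method}")
    return json.loads(raw.decode("utf-8"))
```

Repetitive text such as a long JSON list of similar records compresses particularly well, which is why these algorithms suit API payloads.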

Encoding standards keep data intact and consistent across all systems. Agreeing on how text and binary data are encoded prevents misinterpretations that could halt data processing or cause problems in distributed systems. Here are the popular strategies:

UTF-8

UTF-8 is the most widely used encoding for text content, since it can represent every Unicode character and works well with web services. Using UTF-8 as the default text format ensures characters are correctly encoded when transmitting data in more than one language or with special characters.

Base64 Encoding

If binary content (such as images or file contents) has to be included in a text-based format (such as JSON or XML), Base64 encodes the binary data as ASCII text. This makes it suitable for text-based transmission. Base64 comes in handy for APIs that need to deliver binary data without the payload being corrupted in transit.
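
As a brief illustration, the sketch below embeds arbitrary bytes in a JSON document and recovers them unchanged. The `filename`, `encoding`, and `data` field names are assumptions for the example.

```python
import base64
import json


def embed_binary(filename, content):
    """Wrap raw bytes in a JSON document by Base64-encoding them."""
    return json.dumps({
        "filename": filename,
        "encoding": "base64",
        "data": base64.b64encode(content).decode("ascii"),
    })


def extract_binary(document):
    """Recover the original bytes from a document made by embed_binary."""
    return base64.b64decode(json.loads(document)["data"])
```

The trade-off is size: Base64 turns every 3 bytes into 4 characters, so the encoded data is about a third larger than the original.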

5. Build Security Protocols into the Contract

Higher levels of integration typically need to be more secure to guard against unauthorized access and data theft. Here are some security mechanisms to highlight within the service contract:

A. Token-Based Authentication

Use tokens (like JWT) to provide a secure, scalable way of verifying user identity across multiple services. Token-based authentication lets systems control access even in distributed environments.

B. Multi-Layered Authorization

For more sensitive integrations, OAuth offers finer control over access permissions, so clients are granted only the level of access they need. API keys are a simpler, faster option for less sensitive data, but should be combined with IP allowlisting or usage limits for additional security.

C. Encryption and Data Privacy Requirements

Encryption at rest and in transit is an integral part of modern service contracts. When dealing with personal information or money, be sure to specify the encryption requirements in the contract, for example AES-256 for data at rest and TLS for data in transit.

Key Factors to Consider When Selecting a Solution for Managing Service Contracts

When choosing a solution for managing service contracts, think about the following factors:

1. Robustness

The solution should be capable of handling heavy workflows and large volumes of transactions. This includes handling multiple contracts at once and delivering all functions as expected. Robustness reduces downtime and improves reliability, so users can be confident that the system will keep performing even under high load.

2. Scalability

The software you choose must also be able to scale with your organization’s growth and changes. Scalable software supports current integrations and keeps up as your team, tooling, and requirements change. It can support growth in data, users, and features without massive redesign. Such versatility means the solution can evolve with your startup without unplanned expense or downtime.

3. Data Integrity

Data integrity means maintaining accurate, consistent, and reliable data across the lifecycle of the contract. The solution should provide automated validation checks, full audit trails, and backups to avoid data loss. Such protections also assure stakeholders that information can be trusted, which is vital for compliance and decision-making.

4. Compatibility with Existing Systems

A solution that can easily integrate with your existing software environment is a must. When a system integrates with other tools (CRM, ERP, or a document management system), manual data entry and friction are reduced. Integration makes for smooth workflows, where data moves seamlessly from one system to the next.

5. Security

Given the sensitive nature of service contracts, robust security measures are paramount. The solution should offer features such as data encryption, multi-factor authentication, and user access controls to protect confidential information. Compliance with industry regulations, such as GDPR or HIPAA, further enhances security, helping organizations avoid legal repercussions and safeguard customer trust.

Challenges in Managing Service Contracts

Service contracts can be intimidating, especially when systems get more complex and integrated. Here are five common problems and how to deal with them effectively:

1. Versioning and Compatibility Issues

Since services are continuously changing and new features or updates keep coming out, you will often need to modify service contracts. This can create compatibility problems between existing clients and newer services. In the absence of a versioning framework, even small changes can break integrations and cause service crashes.

Use a clear versioning scheme that separates major, minor, and patch updates. Follow semantic versioning (e.g., v1.0.0, v1.1.0, v2.0.0) and send release notes with change notifications to clients. Minor and patch updates should be backward compatible, while major updates should come with migration guides. Also, allow clients to specify the version they wish to use in API calls so that updates do not cause unwanted interruptions.

2. Ensuring Data Consistency Across Systems

In distributed systems, inconsistent data across services can lead to errors, mismatched expectations, or even system breakdowns. For example, differences in how timestamps or unique identifiers are handled can create discrepancies in records.

Enforce strict data validation rules within the service contract. Use standardized formats (e.g., ISO 8601 for dates) and establish clear guidelines on required fields and acceptable values. Contracts should also include provisions for reconciliation processes, such as periodic data audits or automated correction workflows. Furthermore, adopting idempotent operations ensures that repeated actions do not introduce inconsistencies.

3. Security Vulnerabilities and Compliance Risks

Service contracts often involve sensitive data exchanges, making them a target for breaches. Furthermore, failing to adhere to compliance regulations (e.g., GDPR, HIPAA) can result in hefty fines and reputational damage.

Build comprehensive security protocols into the contract. Use strong encryption (e.g., TLS 1.3 for in-transit data) and secure authentication methods like OAuth2 or JWT. Define clear rules for data retention, deletion, and masking to align with compliance standards. Regularly review contracts for vulnerabilities and update them to reflect evolving security requirements. Integrating automated monitoring tools to detect and address potential threats can also mitigate risks.

4. Lack of Standardization Across Teams or Vendors

When working with multiple teams or vendors, inconsistent practices can result in misaligned expectations or poor integration. For example, one team might use XML schemas while another prefers JSON, leading to compatibility issues.

Establish a universal standard for service contracts across all teams and vendors. This could include using a common data format (e.g., JSON or Protocol Buffers) and adhering to widely accepted API standards like OpenAPI or GraphQL. Regular workshops and training sessions can help ensure everyone understands and follows these standards. Additionally, creating a central repository for service contracts makes it easier to maintain and access them across the organization.

5. Monitoring and Managing SLA Compliance

Service Level Agreements (SLAs) outlined in contracts often set performance benchmarks, such as uptime or response time guarantees. However, monitoring compliance with these SLAs can be challenging, especially in complex ecosystems.

Use automated monitoring tools to track SLA metrics in real-time. Contracts should specify acceptable performance thresholds and penalties for non-compliance, ensuring accountability. For example, a contract might define a minimum uptime of 99.9% and stipulate refunds or credits if this target is not met. Additionally, periodic reviews of SLA performance can identify patterns and areas for improvement, fostering better collaboration between parties.
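
The 99.9% example is easy to turn into numbers. A 30-day month has 43,200 minutes, so a 99.9% uptime target leaves room for about 43.2 minutes of downtime. The sketch below performs that arithmetic; the function names are hypothetical.

```python
def uptime_percentage(total_minutes, downtime_minutes):
    """Share of the period, in percent, during which the service was up."""
    return 100.0 * (total_minutes - downtime_minutes) / total_minutes


def allowed_downtime(total_minutes, target_percent=99.9):
    """Downtime budget, in minutes, that still meets the target."""
    return total_minutes * (100.0 - target_percent) / 100.0


def meets_sla(total_minutes, downtime_minutes, target_percent=99.9):
    """True if measured uptime reaches the contractual target."""
    return uptime_percentage(total_minutes, downtime_minutes) >= target_percent


MINUTES_IN_30_DAYS = 30 * 24 * 60  # 43,200
```

A monitoring job could run `meets_sla` at the end of each billing period and trigger the refund or credit process the contract defines when it returns `False`.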

Conclusion: Optimize Your System Integrations Today

Creating clear service contracts is necessary to build integrated systems that are stable, effective, and scalable. Whether it’s selecting the right communication protocol, performing data validation, handling errors, or optimizing through compression and encoding, every part of a service contract adds resilience and performance to your systems.

Service contracts are not just technical spec sheets. They are the roadmap to easy interaction, and they set expectations for all integration partners. By implementing thoughtful strategies like content negotiation, versioning, and security, your integrations can adapt without disrupting workflows.

Ready to build more reliable and adaptive service integrations? Contact us today! Our development team at IteratorsHQ specializes in optimizing system integrations that scale with your business. Let’s help create a solution tailored to your needs.