When seeking technology to give your company an edge, machine learning applications stand out. Machine learning powers everything from personalized content recommendations to self-driving cars, and it is reshaping our lives and industries by turning data into decisions.

Machine learning is remarkably versatile. It allows computers to learn from data, and it finds applications in diverse fields, offering customized solutions to complex problems.

Welcome to our exploration of the captivating world of Machine Learning Applications. This article will demystify machine learning fundamentals, delve into intricate algorithms, and unveil real-world industry examples. Join us on this journey into the realm of limitless possibilities.

What is Machine Learning

“With the availability and efficiency of large language models (LLMs), even smaller startups and businesses now have access to capabilities previously out of reach. Leveraging LLMs opens up new avenues for innovation and operational strength. When I think about it, many of the applications we’ve built tap into AI’s power, often seamlessly working behind the scenes to enhance user experiences and streamline processes.”

Sebastian Sztemberg

Machine learning drives today’s technological advancements, enabling computers to learn from data and make intelligent decisions. In this section, we’ll unravel the essence of machine learning and its pivotal role in shaping the digital landscape.

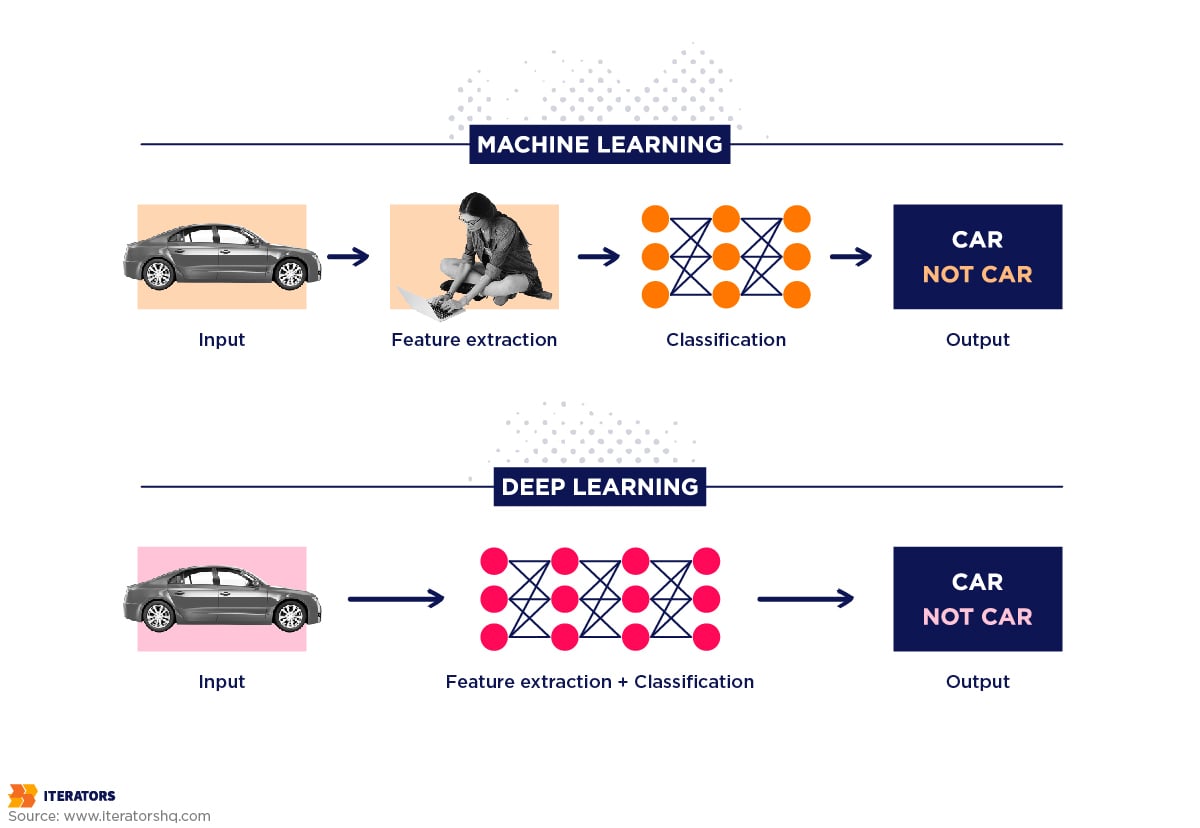

Machine learning, a subset of artificial intelligence (AI), is a transformative technology that empowers computers to learn from data without explicit programming. Unlike traditional software that relies on hard-coded rules and instructions, machine learning systems improve their performance over time through exposure to data.

At its core, machine learning involves the development of algorithms and models that can analyze and interpret data, allowing systems to make predictions, recognize patterns, and automate decision-making processes. This fundamental shift from rule-based programming to data-driven learning is what sets machine learning apart and makes it such a vital component of modern software development.

The relationship between machine learning and software development is symbiotic. Machine learning thrives within software applications, while software development provides the infrastructure for machine learning to function effectively. Let’s explore this connection.

Need help applying machine learning in your enterprise? The Iterators team can help design, build, and maintain custom software solutions for both startups and enterprise businesses.

Schedule a free consultation with Iterators today. We’d be happy to help you find the right software solution to help your company.

Machine Learning Software Development

Machine learning software development encompasses several key steps:

- Data Collection: The first step is to gather relevant data that the machine learning model will use for training. The quality and quantity of data are critical to the model’s success, such as in cases where you’re tracking customer shopping patterns or product preferences.

- Data Preprocessing: The collected data often requires cleaning, transformation, and preparation to make it suitable for training. It may involve handling missing values, normalizing data, or dealing with outliers. In an e-commerce setting, for example, preprocessing and cleaning help ensure that your system can tell the difference between adding products to a cart and abandoning the cart.

- Model Development: Developers select the appropriate machine learning algorithms and architectures based on the problem at hand. These models are designed to learn patterns and relationships from the data.

- Training: The machine learning model is trained to understand and predict outcomes using historical data. During training, the model adjusts its parameters to minimize prediction errors. When training an e-commerce ML model, you need to give it large volumes of customer data so that its predictions keep improving.

- Evaluation: The model’s performance is assessed on held-out validation data using metrics relevant to the task. This step checks that the model can generalize well to unseen data before it faces real-world scenarios.

- Deployment: Once a model is trained and evaluated, it’s integrated into production software to make real-time predictions or automate decision-making processes. At this point, in a healthcare setting for example, clinicians should notice improved diagnosis based on text inputs of observed values from patients.

- Monitoring: Continuous monitoring of the model’s performance in production is essential. Updates and improvements may be necessary as new data becomes available or the model’s accuracy changes over time.

Software Development and Machine Learning

On the other hand, software development provides the foundation for machine learning to thrive. It includes:

- Infrastructure: Creating the computing infrastructure and architecture necessary for machine learning tasks, such as scalable data storage, high-performance computing clusters, and efficient data pipelines.

- User Interface: Designing user-friendly interfaces for applications that incorporate machine learning. It ensures that end-users can interact with the machine learning capabilities seamlessly.

- Data Management: Developing data management systems to store, retrieve, and preprocess the data used for machine learning tasks.

- Scalability: Ensuring that software can scale to accommodate the growing demands of machine learning applications, especially in environments with large datasets.

- Integration: Integrating machine learning models with existing software systems enables data-driven insights and application automation.

In summary, machine learning and software development are interconnected, with machine learning enhancing software applications’ capabilities and software development providing the necessary infrastructure and integration for machine learning to flourish. This connection between the two fields has given rise to innovative solutions that have transformed industries, making machine learning a critical component of modern software development.

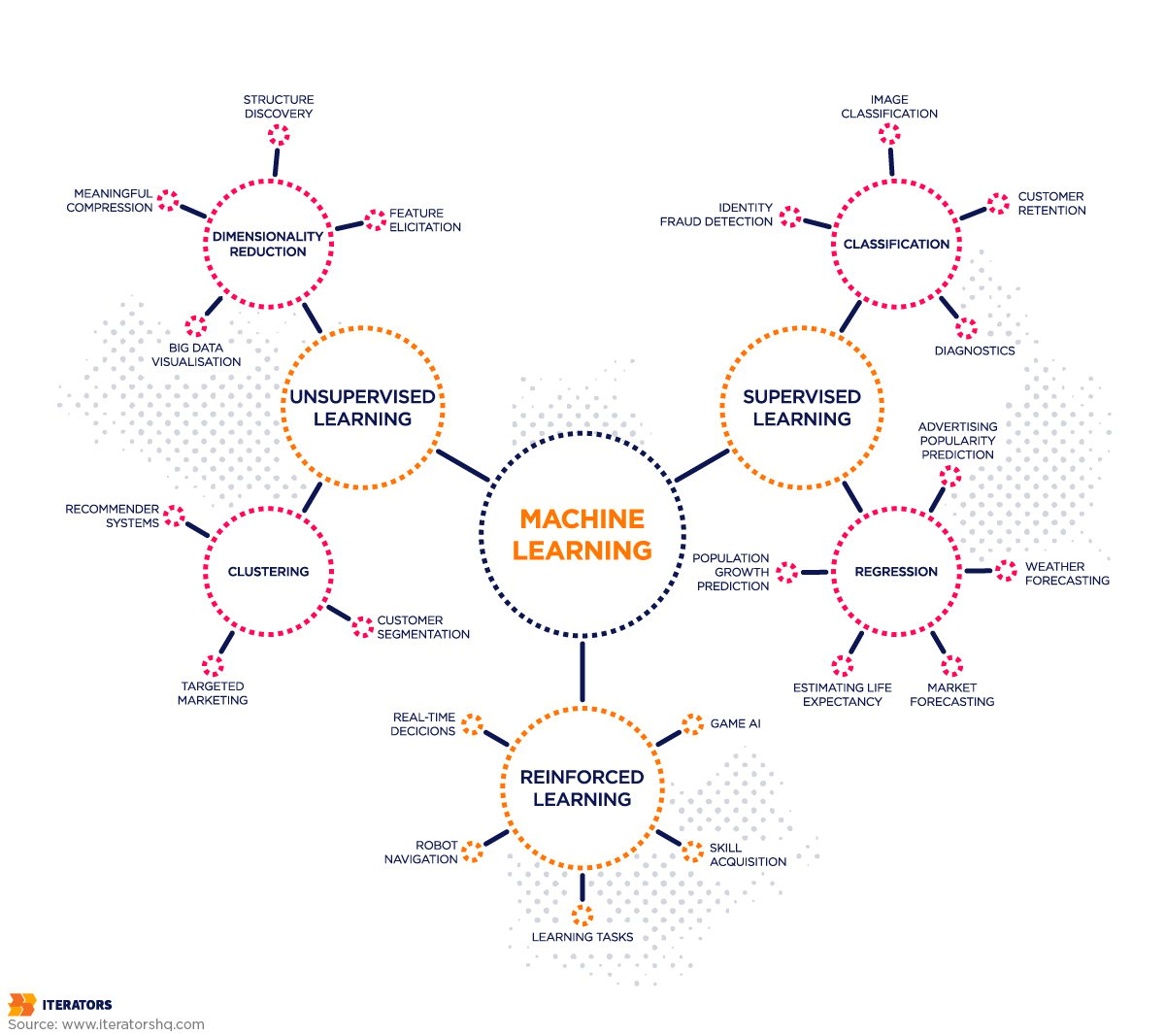

How Do Different Types of Machine Learning Algorithms Work

Machine learning encompasses various algorithmic approaches tailored to specific tasks and domains. This section will explore the fundamental categories of machine learning algorithms and their underlying principles.

Supervised Learning vs. Unsupervised Learning

Supervised Learning

Supervised learning is a type of machine learning where the model is trained using labeled data. Labeled data means that each input is associated with a corresponding desired output or target. The goal of supervised learning is for the model to learn a mapping from inputs to outputs to make accurate predictions or classifications on new, unseen data.

For example, in a supervised learning scenario, you might have a dataset of emails labeled as “spam” or “not spam.” The model learns to distinguish between spam and non-spam emails based on the email content’s features (such as words or phrases).

Examples of Supervised Learning Algorithms

Several supervised learning algorithms are used in various applications, including:

- Linear Regression: Used for predicting a continuous target variable (e.g., house prices based on features like square footage and number of bedrooms). It’s highly beneficial for evaluating trends and making sales forecasts.

- Logistic Regression: Employed for binary classification tasks, such as spam detection or customer churn prediction in e-commerce. In medicine, it’s extensively applied in calculating the Trauma and Injury Severity Score (TRISS).

- Decision Trees: Effective for classification and regression, decision trees create a tree-like structure to make decisions based on input features. They work well for analyzing customer data and making marketing decisions.

- Random Forests: An ensemble method that combines multiple decision trees to improve accuracy and reduce overfitting. They support decisions based on customer activity, patient history, and safety data.

- Support Vector Machines (SVM): Useful for classification tasks, SVMs find a hyperplane that best separates different classes in high-dimensional space. They are helpful in biological problems such as classifying patients based on gene data.

- Neural Networks: Deep learning neural networks, including convolutional neural networks (CNNs) for image tasks and recurrent neural networks (RNNs) for sequential data, have shown remarkable performance in various domains. Common applications include advertising, fraud detection, handwriting recognition, healthcare, and social media.
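To make the idea of learning a mapping from labeled inputs to outputs concrete, here is a minimal sketch of the simplest algorithm in the list above, linear regression with a single feature, fitted by ordinary least squares. The house data and the `fit_line` helper are made up for illustration and are not taken from any real dataset or library.

```python
from statistics import mean

def fit_line(xs, ys):
    """Return (slope, intercept) of the ordinary least-squares line through the points."""
    x_bar, y_bar = mean(xs), mean(ys)
    # Slope = covariance(x, y) / variance(x); the shared 1/n factor cancels out.
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return slope, intercept

# Hypothetical labeled data: square footage (input) and price in thousands (target).
square_feet = [1000, 1500, 2000, 2500]
price_k = [200, 300, 400, 500]

slope, intercept = fit_line(square_feet, price_k)
predicted = slope * 1800 + intercept  # estimate for an unseen 1,800 sq ft house
```

The model "learns" only two numbers here, but the pattern is the same for larger models: fit parameters on labeled examples, then apply them to inputs the model has not seen.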

Unsupervised Learning

Unsupervised learning, in contrast, involves training a model on unlabeled data. The objective is to find patterns or structures within the data without specific guidance on what to look for. Unsupervised learning can be used for tasks like clustering similar data points together or reducing the dimensionality of data.

For instance, in unsupervised learning, you might apply clustering algorithms to group customers based on their purchase behavior without knowing how many customer segments there should be.
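As a rough illustration of clustering, the sketch below implements plain k-means on a handful of invented customer records (orders per month, average basket value). Note the assumptions: k-means needs the number of clusters and starting centroids up front, so in practice you would try several values and compare the results; the data and the `k_means` helper are hypothetical.

```python
from math import dist

def k_means(points, centroids, iterations=10):
    """Plain k-means: alternate between assigning points and moving centroids."""
    centroids = list(centroids)
    labels = []
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        labels = [min(range(len(centroids)), key=lambda c: dist(p, centroids[c]))
                  for p in points]
        # Update step: move each centroid to the mean of its assigned points.
        for c in range(len(centroids)):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:  # keep the old centroid if a cluster ends up empty
                centroids[c] = tuple(sum(axis) / len(members) for axis in zip(*members))
    return labels, centroids

# Hypothetical customers: (orders per month, average basket value).
customers = [(1, 20), (2, 22), (1, 25), (10, 200), (11, 210), (12, 190)]

# Assumption: two segments, seeded with one customer from each end of the list.
labels, centroids = k_means(customers, [customers[0], customers[3]])
```

No labels are supplied anywhere; the two groups emerge purely from distances between the records.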

Reinforcement Learning

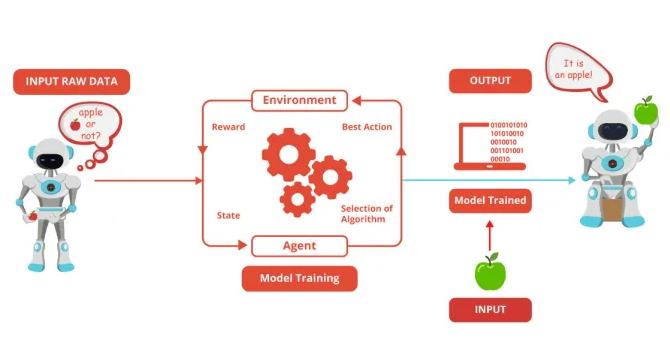

Reinforcement learning (RL) is a type of machine learning where an agent interacts with an environment to learn a sequence of actions that maximizes a cumulative reward. Unlike supervised learning, where the model is trained on labeled data, reinforcement learning operates in an environment where it must explore, take action, and learn from feedback.

The core components of reinforcement learning include:

- Agent: The learner or decision-maker that interacts with the environment.

- Environment: The external system with which the agent interacts and where actions occur.

- State: A representation of the environment’s current condition, providing information for decision-making.

- Action: The set of possible moves or decisions the agent can make.

- Reward: A numerical signal received by the agent after each action, indicating the immediate benefit or cost of that action.

The agent’s goal is to learn a policy—a strategy that maps states to actions—such that it maximizes the cumulative reward over time. It’s achieved through trial and error, where the agent explores different actions, observes the outcomes, and adjusts its policy accordingly.

Reinforcement learning has found applications in various fields, including game playing (e.g., AlphaGo), robotics (e.g., autonomous navigation), and recommendation systems (e.g., personalized content recommendations). It excels in scenarios where an agent must make a sequence of decisions to achieve a long-term objective and where the optimal strategy may not be initially known.
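The agent, environment, state, action, and reward components can be sketched with tabular Q-learning, one common reinforcement learning algorithm (the article does not prescribe a specific one). Everything below is an invented toy: a five-cell corridor where the agent starts in cell 0 and earns a reward of 1 for reaching cell 4. The hyperparameter values are arbitrary choices for the example.

```python
import random

N_STATES = 5          # corridor cells 0..4; cell 4 is the goal
ACTIONS = (0, 1)      # 0 = step left, 1 = step right

def step(state, action):
    """Environment: returns (next_state, reward, done)."""
    next_state = max(0, state - 1) if action == 0 else state + 1
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done

def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]   # Q-table: q[state][action]
    for _ in range(episodes):
        state = 0
        for _ in range(200):                     # cap on episode length
            # Epsilon-greedy: explore sometimes (and on ties), otherwise exploit.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.choice(ACTIONS)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = step(state, action)
            # Q-learning update toward reward + discounted best future value.
            target = reward + (0.0 if done else gamma * max(q[next_state]))
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q

q_table = train()
# Greedy policy: the best-valued action in each non-goal state.
policy = [max(ACTIONS, key=lambda a: q_table[s][a]) for s in range(N_STATES - 1)]
```

After training, the greedy policy steps right in every cell, which is the shortest path to the reward. The agent was never told this; it inferred it from trial, error, and the reward signal.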

Role of Data in Machine Learning Applications

Data serves as the lifeblood of machine learning, underpinning the ability of models to learn and make informed decisions. In this section, we’ll explore data’s indispensable role in machine learning.

The Crucial Role of Quality Data in Training ML Models

Quality data is the cornerstone of successful machine-learning endeavors. Data quality directly impacts the performance and reliability of machine learning models. Here are key reasons why quality data is paramount:

- Accuracy: High-quality data ensures that models learn accurate patterns and relationships. Inaccurate or noisy data can lead to erroneous predictions and decisions.

- Generalization: Machine learning models aim to make predictions on new, unseen data. Quality data enables models to generalize well, meaning they can make accurate predictions beyond the data they were trained on.

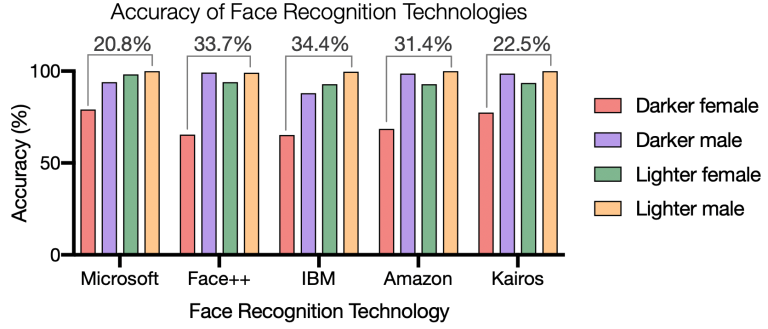

- Bias Mitigation: Biased data can lead to biased models, perpetuating unfair or discriminatory outcomes. Quality data helps reduce bias and promotes fairness in machine learning applications.

- Trust and Confidence: Reliable data instills trust and confidence in the machine learning model and its decisions. It’s essential, especially in critical applications like healthcare or finance.

To ensure data quality, companies must invest in data collection processes, validation, and error handling. Data cleansing techniques, such as removing outliers and handling missing values, play a significant role in data quality assurance.

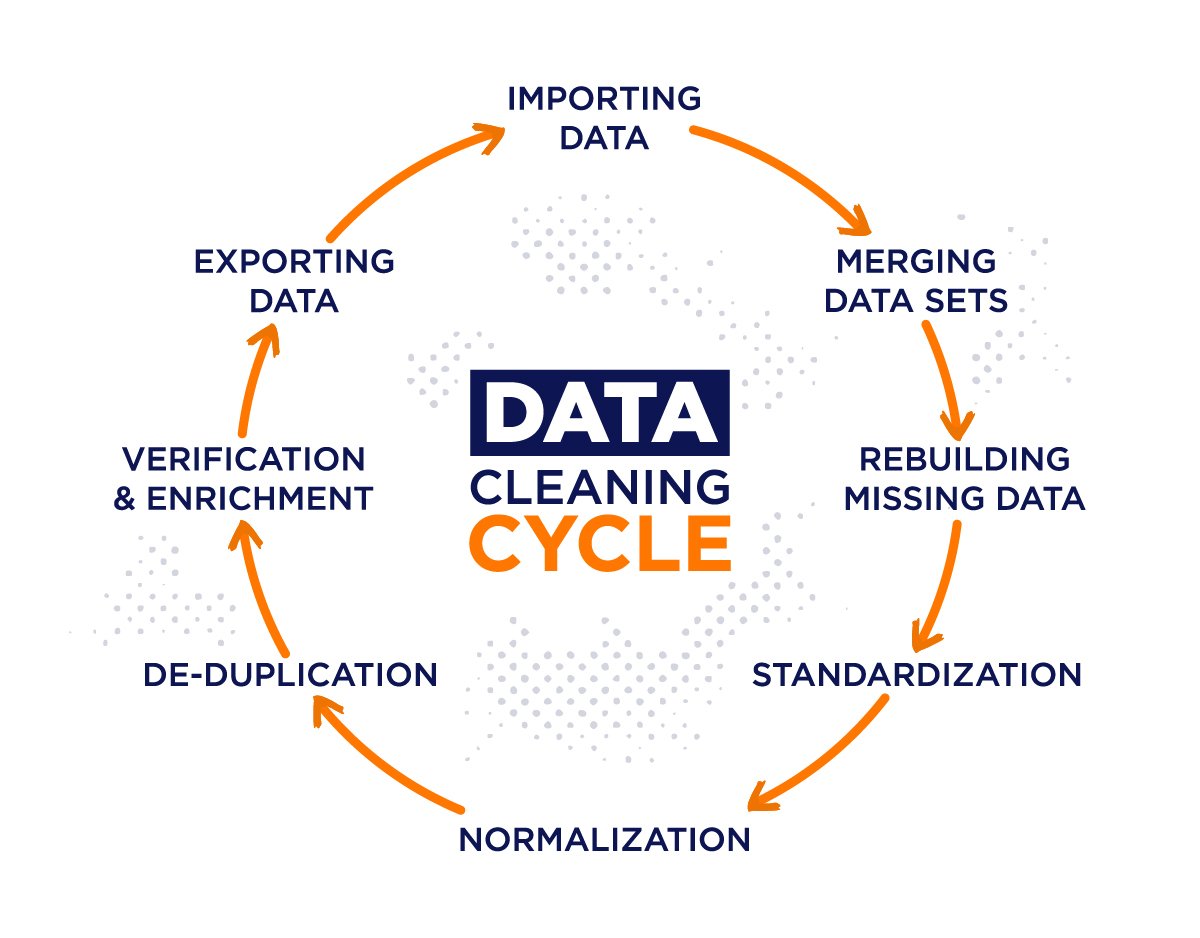

Collecting and Preprocessing Data for Machine Learning Projects

Effective data collection and preprocessing are essential to preparing data for machine learning. Here’s how companies can achieve this:

Data Collection

- Define Objectives: Clearly define the objectives of your machine learning project. Understand what data is required to achieve those objectives.

- Data Sources: Identify data sources, including databases, sensors, web scraping, or user-generated content. Consider both internal and external sources.

- Data Gathering: Collect data in a structured and consistent manner. Ensure data is labeled and annotated correctly, especially for supervised learning.

Data Preprocessing

- Cleaning: Remove or correct inaccuracies, outliers, and inconsistencies in the data. It ensures that the data is reliable for training.

- Normalization and Scaling: Normalize numerical features to a standard range to prevent some features from dominating others. Scaling ensures fair comparisons between features.

- Handling Missing Data: Decide on a strategy for dealing with missing data, such as imputation or removing rows/columns with missing values.

- Feature Engineering: Transform and create new features to improve the model’s ability to capture relevant patterns. Feature engineering can be a critical step in model performance.

- Encoding Categorical Data: Convert categorical data into numerical format, making it suitable for machine learning algorithms.
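Three of the preprocessing steps above (handling missing data, normalization, and encoding categorical data) can be sketched in a few lines of plain Python. The column values and helper names are hypothetical, and mean imputation is only one of several possible strategies.

```python
def impute_mean(values):
    """Replace missing entries (None) with the mean of the observed ones."""
    observed = [v for v in values if v is not None]
    fill = sum(observed) / len(observed)
    return [fill if v is None else v for v in values]

def min_max_scale(values):
    """Rescale values linearly to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:                      # constant column: avoid division by zero
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]

def one_hot(values):
    """Encode categories as 0/1 vectors, one position per distinct category."""
    categories = sorted(set(values))
    return categories, [[1 if v == c else 0 for c in categories] for v in values]

ages = [20, None, 40, 60]                       # hypothetical column with a gap
channels = ["web", "store", "web", "app"]       # hypothetical categorical column

ages_scaled = min_max_scale(impute_mean(ages))
categories, channels_encoded = one_hot(channels)
```

In a real project, the fill value, the minimum and maximum, and the category list should be computed on the training data only and then reused on new data, so that information from the test set does not leak into training.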

Possible Challenges Arising During the Data Preprocessing Phase

Data preprocessing is a crucial but often challenging phase of machine learning. Some common challenges include:

- Data Quality Issues: Dealing with data that is noisy, incomplete, or contains errors can be time-consuming and may require creative solutions.

- Imbalanced Data: In classification problems, imbalanced datasets, where one class significantly outnumbers the others, can lead to biased models. Techniques like resampling or synthetic data generation may be needed.

- Feature Selection: Choosing the most relevant features from a large pool can be challenging. Selecting irrelevant or redundant features can lead to overfitting.

- Handling Categorical Data: Converting categorical data into numerical format requires careful consideration, as the choice of encoding method can impact model performance.

- Scalability: For large datasets, preprocessing can be computationally intensive. Efficient data preprocessing pipelines are essential for scalability.

- Data Privacy and Ethics: Ensuring that data is handled in compliance with privacy regulations and ethical considerations is critical. Anonymization and data protection measures are important.
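For the imbalanced-data challenge listed above, random oversampling is the simplest form of resampling: minority-class rows are duplicated until the classes are the same size. The sketch below assumes rows and labels are plain lists; the `random_oversample` helper and the sample data are illustrative only.

```python
import random
from collections import Counter

def random_oversample(rows, labels, seed=0):
    """Duplicate minority-class rows at random until every class matches the largest one."""
    rng = random.Random(seed)
    counts = Counter(labels)
    target = max(counts.values())
    out_rows, out_labels = list(rows), list(labels)
    for cls, count in counts.items():
        pool = [r for r, l in zip(rows, labels) if l == cls]
        extra = rng.choices(pool, k=target - count)   # sample with replacement
        out_rows.extend(extra)
        out_labels.extend([cls] * len(extra))
    return out_rows, out_labels

# Hypothetical dataset: five "no churn" rows (0) and one "churn" row (1).
rows = [[1.0], [1.2], [0.9], [1.1], [1.3], [5.0]]
labels = [0, 0, 0, 0, 0, 1]

balanced_rows, balanced_labels = random_oversample(rows, labels)
balanced_counts = Counter(balanced_labels)
```

Oversampling should be applied to the training split only. Duplicating rows before splitting would let copies of the same record land in both training and test data and inflate the measured performance.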

In a nutshell, data is the foundation of machine learning, and its quality and preprocessing are pivotal to the success of any project. Companies must invest in robust data collection and preprocessing practices to harness the full potential of machine learning for informed decision-making and innovation.

Choosing the Right Machine Learning Model

Selecting the right machine learning model is a critical decision that significantly impacts the success of a project. In this section, we’ll explore the factors that influence model selection and the benefits of ensemble learning in enhancing model performance, using customer churn prediction as a running example.

Factors Influencing the Machine Learning Model Choice for Tasks

Choosing the most suitable machine learning model for a specific task isn’t a one-size-fits-all endeavor. Several factors come into play, including:

- Type of Problem: The nature of the problem at hand plays a crucial role. Is it a classification problem, regression problem, clustering problem, or something else? Different problems require different types of models. Our example problem, predicting customer churn for a telecom company, is a classification problem.

- Size of Dataset: The size of the dataset matters. Simpler models like linear regression or decision trees may work well for small datasets. In contrast, large datasets may benefit from deep learning models. The dataset might cover all the customers using the telecom company’s services, easily running into millions of records.

- Data Complexity: Consider the complexity of the data. Does it have non-linear relationships that require more complex models, or is it relatively simple? Customer churn relationships are typically complex because human behavior is far from linear.

- Interpretability: Some models, like decision trees and linear regression, are highly interpretable, making them suitable when transparency and understanding the model’s decisions are essential. Depending on the telecom company’s product offerings, we can use decision trees or logistic regression (the classification counterpart of linear regression) to keep the churn model both interpretable and transparent.

- Feature Dimensionality: High-dimensional data may require dimensionality reduction techniques or models designed for handling high-dimensional data, such as support vector machines (SVMs).

- Computational Resources: The availability of computational resources can influence model selection. Deep learning models, for example, require significant computing power.

- Bias-Variance Tradeoff: Consider the bias-variance tradeoff. Some models may have low bias but high variance (e.g., complex deep learning models). In contrast, others may have higher bias but lower variance (e.g., linear models).

- Time Constraints: Simpler models may be preferred if there are time constraints for model training and inference.

- Domain Expertise: Domain knowledge and expertise can guide model selection. In some cases, domain-specific models or adaptations may be necessary.

- Ensemble Methods: Ensemble methods combine multiple models and can improve performance in cases where single models struggle.

Enhancing Model Performance with Ensemble Learning

Ensemble learning is a technique that combines the predictions of multiple machine learning models to produce a more accurate and robust result. It’s preferred in several scenarios:

- High Variance Models: When individual models have high variance (i.e., prone to overfitting), ensemble methods can help by reducing variance and improving generalization.

- Diverse Models: Ensemble learning works best when the component models are diverse. This diversity can come from using different algorithms, feature sets, or subsets of the data.

- Improved Accuracy: In many cases, ensemble methods can significantly improve predictive accuracy compared to a single model. It’s especially beneficial in critical applications like healthcare and finance.

- Stability: Ensemble methods are more stable and less sensitive to outliers or noisy data than individual models.

There are several popular ensemble methods, including:

- Bagging (Bootstrap Aggregating): Involves training multiple instances of the same model on different bootstrap samples of the data (random subsets drawn with replacement) and averaging or voting on their predictions (e.g., Random Forests).

- Boosting: Iteratively trains models where each subsequent model focuses on the errors made by the previous ones (e.g., AdaBoost, Gradient Boosting).

- Stacking: Combines predictions from multiple models using another machine learning model (meta-learner) to make the final prediction.

Ensemble learning leverages the wisdom of the crowd, allowing models to compensate for each other’s weaknesses and make more accurate predictions. However, it’s important to note that ensemble methods can be computationally intensive and may require careful tuning.
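Bagging, the first ensemble method listed above, can be sketched with very weak base models. In this illustrative example each base model is a "decision stump" (a single threshold on one feature), trained on its own bootstrap sample, and the ensemble predicts by majority vote. The dataset, the helper names, and the choice of 25 stumps are assumptions made for the example.

```python
import random
from collections import Counter

def fit_stump(xs, ys):
    """Pick the threshold t minimizing training errors for the rule: predict 1 if x > t."""
    candidates = [min(xs) - 1] + sorted(set(xs))
    def errors(t):
        return sum((x > t) != bool(y) for x, y in zip(xs, ys))
    return min(candidates, key=errors)

def fit_bagging(xs, ys, n_models=25, seed=0):
    """Train each stump on a bootstrap sample (drawn with replacement)."""
    rng = random.Random(seed)
    thresholds = []
    for _ in range(n_models):
        idx = [rng.randrange(len(xs)) for _ in xs]
        thresholds.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return thresholds

def predict(thresholds, x):
    """Majority vote across all stumps."""
    votes = Counter(int(x > t) for t in thresholds)
    return votes.most_common(1)[0][0]

xs = list(range(10))                 # hypothetical one-feature dataset
ys = [0] * 5 + [1] * 5               # label is 1 when x >= 5
model = fit_bagging(xs, ys)
low, high = predict(model, 0), predict(model, 9)
```

Individual stumps can land on slightly different thresholds depending on which rows their bootstrap sample happened to contain. Voting smooths out those differences, which is the variance reduction the section describes.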

Choosing the right machine learning model involves carefully considering various factors, and ensemble learning can be a powerful tool to enhance model performance when dealing with complex, high-variance problems.

Feature Engineering in Machine Learning

Feature engineering is a crucial aspect of machine learning that involves creating and transforming features (input variables) in a dataset to improve model performance. This section will explore why feature engineering is essential and how it impacts machine learning models.

Impact of Feature Engineering on Machine Learning Model Performance

Feature engineering plays a pivotal role in machine learning for several reasons:

- Better Representation: Feature engineering helps create a more informative representation of the data, making it easier for models to capture underlying patterns and relationships. Well-engineered features can highlight relevant information and reduce noise.

- Improved Model Learning: Models perform better when the input features are carefully designed. Feature engineering allows models to learn more efficiently and accurately, leading to higher predictive performance.

- Dimensionality Reduction: Effective feature engineering can reduce the dimensionality of the data by selecting the most relevant features. It not only simplifies the model but also mitigates the risk of overfitting.

- Generalization: Feature engineering contributes to the generalization ability of a model, allowing it to make accurate predictions on new, unseen data by capturing essential information from the training data.

- Domain Knowledge Incorporation: Feature engineering enables domain experts to inject their knowledge into the modeling process, enhancing the model’s ability to understand and interpret data in context.

- Addressing Data Issues: Feature engineering can help address challenges such as missing data, outliers, and skewed distributions by transforming features to be more robust and suitable for modeling.

Techniques for Selecting and Transforming Features for Improved Results

Several techniques and strategies can be employed for feature selection and transformation:

Feature Selection

- Filter Methods: These methods evaluate the relevance of features based on statistical measures (e.g., correlation, mutual information) and select the most informative ones.

- Wrapper Methods: Wrapper methods involve evaluating feature subsets by training and testing models with different combinations of features. Techniques like forward selection and backward elimination help identify the best feature subset.

- Embedded Methods: Some machine learning algorithms, like L1-regularized models (Lasso regression), perform automatic feature selection by shrinking the coefficients of less important features toward zero, often to exactly zero.

Feature Transformation

- Scaling: Standardize numerical features to have zero mean and unit variance (z-score scaling) to ensure features contribute equally to the model.

- Normalization: Normalize numerical features to a specific range (e.g., [0, 1]) to prevent some features from dominating others.

- One-Hot Encoding: Convert categorical variables into binary (0/1) vectors to make them suitable for machine learning models that require numerical input.

- Binning or Discretization: Transform continuous variables into discrete intervals to capture non-linear relationships.

- Feature Engineering by Interaction: Create new features by combining existing ones. For example, creating interaction terms can capture synergistic effects between features.

- Feature Extraction: Use dimensionality reduction techniques like Principal Component Analysis (PCA) or t-distributed Stochastic Neighbor Embedding (t-SNE) to extract essential information from high-dimensional data.

- Text and NLP Techniques: In natural language processing (NLP) tasks, techniques like TF-IDF, word embeddings (Word2Vec, GloVe), and topic modeling can be applied for feature engineering.

Effective feature engineering often involves combining these techniques tailored to the specific dataset and problem. It requires a deep understanding of the data and domain knowledge to decide which features to create, select, or transform. Ultimately, well-executed feature engineering can significantly enhance the performance and interpretability of machine learning models.
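As an example of a filter method, the sketch below ranks features by the absolute value of their Pearson correlation with the target and leaves the model out of the loop entirely. The feature names and numbers are invented, and the helper assumes no column is constant (a constant column would cause a division by zero).

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length numeric lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)   # assumes neither column is constant

def rank_features(features, target):
    """Sort feature names by absolute correlation with the target, strongest first."""
    scores = {name: abs(pearson(col, target)) for name, col in features.items()}
    return sorted(scores, key=scores.get, reverse=True)

# Hypothetical columns; the target is monthly spend per customer.
features = {
    "monthly_visits": [10, 20, 30, 40, 50],
    "support_calls": [1, 3, 2, 5, 4],
    "signup_weekday": [3, 1, 4, 1, 5],
}
monthly_spend = [12, 19, 33, 41, 48]

ranking = rank_features(features, monthly_spend)
```

Correlation only captures linear, one-feature-at-a-time relationships, so a filter like this is best treated as a cheap first pass before wrapper or embedded methods.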

What You Need to Know to Train ML Models

Training and fine-tuning machine learning models are critical stages in the development process that ultimately determine a model’s performance and effectiveness. In this section, we’ll explore the fundamental steps involved in training a machine learning model, the role of cross-validation in assessing model performance, and the importance of hyperparameter tuning.

Fundamental Steps in Training a Machine Learning Model

Training a machine learning model involves a series of essential steps:

- Data Preparation: Begin by preparing your dataset. It includes data collection, cleaning, preprocessing, and splitting into training and testing subsets. The training data is used to train the model, while the testing data is reserved for evaluating its performance.

- Model Selection: Choose an appropriate machine learning algorithm or model based on the nature of your problem (classification, regression, clustering, etc.) and the characteristics of your data.

- Feature Engineering: As discussed earlier, feature engineering is crucial. Create, select, or transform features to suit the chosen model.

- Model Training: Use the training data to train the selected model. During training, the model learns to recognize patterns and relationships in the data.

- Model Evaluation: Assess the model’s performance using the testing data. Common evaluation metrics include accuracy, precision, recall, F1-score, and mean squared error, among others, depending on the problem type.

- Model Fine-Tuning: Fine-tuning is required if the model’s performance is unsatisfactory. It may involve adjusting hyperparameters, modifying the model architecture, or performing further feature engineering.

- Validation: To ensure that the model performs well on new, unseen data, validating it on a separate validation dataset is essential. It helps detect overfitting, where the model performs well on the training data but poorly on new data.

- Deployment: Once the model meets performance criteria, it can be deployed in a production environment to make predictions or assist in decision-making.
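The classification metrics named in the evaluation step above follow directly from the counts of true and false positives and negatives. Here is a small sketch for binary labels; the label lists are made up, and returning 0.0 when a denominator is zero is a convention chosen for this example.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1 for binary labels (1 = positive class)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)   # true positives
    fp = sum(t == 0 and p == 1 for t, p in pairs)   # false positives
    fn = sum(t == 1 and p == 0 for t, p in pairs)   # false negatives
    tn = sum(t == 0 and p == 0 for t, p in pairs)   # true negatives

    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Hypothetical test-set labels and model predictions.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 1, 0, 1, 0, 0, 1]

metrics = classification_metrics(y_true, y_pred)
```

Precision answers "of the items flagged positive, how many really were?", while recall answers "of the real positives, how many did the model find?". Which one matters more depends on the cost of each kind of mistake.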

Assessing Model Performance through Cross-Validation

Cross-validation is a crucial technique for assessing the performance of machine learning models, especially when you have limited data. It gives a more robust and reliable estimate of a model’s performance by training and evaluating the model on several different splits of the data. Here’s how it works:

- K-Fold Cross-Validation: The dataset is divided into K subsets (or “folds”). The model is trained and evaluated K times, with each fold serving as the test set once while the remaining K-1 folds are used for training.

- Performance Metrics: Performance metrics (e.g., accuracy) are computed for each fold. The final performance estimate is typically the average of these metrics across all folds.

- Benefits: Cross-validation provides a more accurate assessment of a model’s generalization performance than a single train-test split. It helps identify issues like overfitting, which may not be apparent when using a single split.
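The K-fold procedure can be sketched as a generator of train and test index lists plus a loop that averages a score over the folds. The helper names, the toy target values, and the "predict the training mean" baseline are all assumptions for illustration. This sketch uses contiguous folds; in practice the data is usually shuffled first.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, test_indices) for each of k contiguous folds."""
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, test
        start += size

def cross_validate(score_fold, n_samples, k=5):
    """Average a user-supplied per-fold score over all k folds."""
    scores = [score_fold(train, test) for train, test in k_fold_indices(n_samples, k)]
    return sum(scores) / len(scores), scores

# Hypothetical target values and a trivial baseline "model".
ys = [3.0, 5.0, 4.0, 6.0, 5.0, 7.0]

def mean_baseline_mse(train, test):
    """Predict the training mean for every test row; return mean squared error."""
    prediction = sum(ys[i] for i in train) / len(train)
    return sum((ys[i] - prediction) ** 2 for i in test) / len(test)

folds = list(k_fold_indices(10, 3))
avg_mse, fold_mse = cross_validate(mean_baseline_mse, len(ys), k=3)
```

Every row is used for testing exactly once and for training in the remaining folds, which is why the averaged score is less dependent on one lucky or unlucky split.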

Importance of Hyperparameter Tuning

Hyperparameter tuning is the process of optimizing the hyperparameters of a machine learning model to achieve the best possible performance. Hyperparameters are parameters that are not learned from the data but set before the training process. Examples include the learning rate, the number of hidden layers in a neural network, and the maximum depth of a decision tree.

The power of hyperparameter tuning lies in its ability to fine-tune a model for optimal performance. Here’s why it matters:

- Optimized Performance: Hyperparameter tuning helps find the combination of hyperparameters that leads to the best model performance. It can significantly improve a model’s accuracy and ability to generalize to new data.

- Avoiding Overfitting: Careful tuning can help prevent overfitting, a common issue in which a model performs well on the training data but poorly on unseen data. Proper hyperparameter settings make the model more robust.

- Efficient Resource Utilization: Tuning helps in optimizing computational resources. It allows you to find the right balance between model complexity and training time, making the most efficient use of available resources.

Hyperparameter tuning is typically performed using techniques like grid search, random search, or more advanced methods like Bayesian optimization. Automated tools and libraries are available to streamline the process.
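Grid search, the simplest of these techniques, just evaluates every combination of candidate values and keeps the best one. In the sketch below, `toy_validation_loss` is a purely hypothetical stand-in for "train a model with these settings and measure its validation loss"; the parameter names and candidate values are also invented.

```python
from itertools import product

def grid_search(param_grid, evaluate):
    """Try every combination in param_grid; return the one with the lowest loss."""
    names = list(param_grid)
    best_params, best_loss = None, float("inf")
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        loss = evaluate(params)
        if loss < best_loss:
            best_params, best_loss = params, loss
    return best_params, best_loss

# Stand-in for "train a model and measure validation loss" (purely hypothetical).
def toy_validation_loss(params):
    return (params["learning_rate"] - 0.1) ** 2 + 0.01 * (params["max_depth"] - 4) ** 2

grid = {"learning_rate": [0.01, 0.1, 1.0], "max_depth": [2, 4, 8]}
best_params, best_loss = grid_search(grid, toy_validation_loss)
```

The number of combinations grows multiplicatively with each added hyperparameter (here 3 × 3 = 9 evaluations), which is the main reason random search or Bayesian optimization is preferred for larger search spaces.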

While cross-validation is vital for obtaining a robust performance estimate, especially with limited data, hyperparameter tuning plays a pivotal role in optimizing a model’s settings to achieve peak performance. Together, these steps help ensure that a machine learning model performs well and aligns with the objectives and requirements of the task at hand.

Ethical Considerations In Machine Learning Applications

Machine learning applications have raised significant ethical concerns that demand attention and responsible handling. This section will explore the ethical challenges of biased training data and discuss how companies can address fairness and bias issues in building their machine learning models.

Ethical Challenges in Using Biased Training Data in ML

Biased training data can introduce a range of ethical challenges, including:

- Algorithmic Bias: If training data reflects historical biases or prejudices, machine learning models can perpetuate and amplify these biases. For instance, biased training data in a hiring algorithm may discriminate against certain demographic groups.

- Unfair Treatment: Biased models may lead to unfair or discriminatory treatment of individuals or groups, impacting decisions related to loans, hiring, criminal justice, and more.

- Reinforcing Stereotypes: Biased data can reinforce harmful stereotypes, leading to unfair judgments and perpetuating social inequalities.

- Lack of Inclusivity: Bias can result in the underrepresentation or misrepresentation of certain groups, affecting the inclusivity and diversity of the model’s outcomes.

- Trust and Accountability: Biased machine learning models erode trust in technology and organizations that deploy them, raising concerns about accountability for biased decisions.

How Your Company Can Address Fairness and Bias in ML Models

Addressing fairness and bias issues in machine learning models is a complex but essential endeavor. Based on our experience working with all kinds of companies, we recommend you take several steps to mitigate these concerns:

- Diverse Data Collection: Ensure training data is diverse and representative of the population or domain of interest. Collect data from a wide range of sources and perspectives.

- Bias Detection and Analysis: Implement techniques for detecting bias in data and model outcomes. Analyze model predictions to identify potential sources of bias.

- Fair Data Preprocessing: Apply preprocessing techniques to make data more balanced and reduce bias. Techniques like resampling, re-weighting, and data augmentation can help.

- Fair Model Design: Choose machine learning algorithms and model architectures known for their fairness and transparency. Some algorithms are less prone to bias than others.

- Algorithmic Fairness Tools: Use specialized tools and libraries for fairness assessment and mitigation. These tools can help quantify and address bias in models.

- Fairness Metrics: Define fairness metrics and evaluation criteria specific to your application. Metrics like equal opportunity, demographic parity, and disparate impact can be used to assess fairness.

- Regular Auditing: Periodically audit models for bias and fairness, especially in rapidly changing environments. Models may become biased over time as data distributions shift.

- Transparency and Explainability: Make model decisions more transparent and explainable. This helps identify and rectify biased outcomes and provides accountability.

- Diverse Teams: Promote diversity within your development team to ensure a variety of perspectives and insights are considered throughout the machine learning pipeline.

- Bias Mitigation Strategies: Develop and implement strategies to mitigate bias, such as adversarial training, de-biasing algorithms, or fairness-aware machine learning.

- Ethical Guidelines: Establish clear ethical guidelines and principles for using machine learning in your organization, emphasizing fairness and responsible AI.

- Stakeholder Engagement: Engage with stakeholders, including impacted communities and advocacy groups, to gather feedback and ensure that machine learning applications align with societal values.
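To make the fairness metrics named in the list above more concrete, here is a minimal sketch in plain Python. It assumes binary predictions (0 or 1) and a single sensitive attribute; the function names and the toy data are hypothetical and chosen only for illustration, not taken from any particular fairness library.

```python
from collections import defaultdict


def selection_rates(y_pred, groups):
    """Fraction of positive predictions within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(y_pred, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def true_positive_rates(y_true, y_pred, groups):
    """Per-group recall: share of truly positive cases predicted positive."""
    actual = defaultdict(int)
    hits = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1:
            actual[group] += 1
            hits[group] += pred
    return {g: hits[g] / actual[g] for g in actual}


def fairness_report(y_true, y_pred, groups):
    """Summarize three common group-fairness gaps."""
    rates = selection_rates(y_pred, groups)
    tprs = true_positive_rates(y_true, y_pred, groups)
    highest, lowest = max(rates.values()), min(rates.values())
    return {
        # Demographic parity: gap between group selection rates (0 is ideal).
        "demographic_parity_difference": highest - lowest,
        # Disparate impact: ratio of lowest to highest selection rate (1 is ideal).
        "disparate_impact_ratio": lowest / highest if highest > 0 else float("nan"),
        # Equal opportunity: gap between group true positive rates (0 is ideal).
        "equal_opportunity_difference": max(tprs.values()) - min(tprs.values()),
    }


# Hypothetical toy data: two groups with four individuals each.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]

report = fairness_report(y_true, y_pred, groups)
for name, value in report.items():
    print(f"{name}: {value:.3f}")
```

In this toy data, group A is selected three times as often as group B, so all three metrics flag a gap. A disparate impact ratio below 0.8 is often treated as a warning sign (the "four-fifths rule"), but the right thresholds depend on your application and legal context.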

Addressing ethical considerations related to fairness and bias in machine learning applications is imperative. If you decide to work with Iterators, we’ll help your company adopt a proactive, multi-faceted approach that encompasses data collection, model design, transparency, accountability, and ongoing monitoring, so that machine learning serves the broader goal of fairness and responsible AI deployment.
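As one concrete instance of the re-weighting technique mentioned under fair data preprocessing, the sketch below assigns each training sample a weight inversely proportional to the size of its group, so that every group contributes equally to training. The helper name `balanced_group_weights` and the sample data are hypothetical; this is one simple weighting scheme among several, not a complete de-biasing method.

```python
from collections import Counter


def balanced_group_weights(groups):
    """Weight each sample by n_samples / (n_groups * group_size).

    Samples from small groups get larger weights, so the total weight
    of every group ends up the same.
    """
    counts = Counter(groups)
    n_samples = len(groups)
    n_groups = len(counts)
    return [n_samples / (n_groups * counts[g]) for g in groups]


# Hypothetical imbalanced training set: six samples from A, two from B.
groups = ["A"] * 6 + ["B"] * 2
weights = balanced_group_weights(groups)

total_a = sum(w for w, g in zip(weights, groups) if g == "A")
total_b = sum(w for w, g in zip(weights, groups) if g == "B")
print(f"total weight A: {total_a:.2f}, total weight B: {total_b:.2f}")
```

Many training APIs accept per-sample weights like these, so a scheme of this kind can often be applied without changing the model itself.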

Deploying and Monitoring Machine Learning Applications

Deploying and monitoring machine learning applications is a critical phase in the development process, ensuring that models operate effectively and reliably in real-world scenarios. This section will focus on the steps required to deploy a trained machine learning model into production.

Steps to Deploy Trained ML Models in Apps

Deploying a machine learning model into production involves a series of well-defined steps to ensure its seamless integration and continued performance:

- Data Preparation for Deployment

  - Ensure that the data used for model training aligns with the data format and schema expected in the production environment.

  - Implement data pipelines or preprocessing scripts to handle incoming data and transform it to match the model’s input requirements.

- Model Serialization and Packaging

  - Serialize the trained model into a format that can be easily loaded and used in production. Common formats include pickle (for Python), ONNX, or TensorFlow’s SavedModel format.

  - Package any preprocessing steps or feature transformations used during training into the deployment pipeline.

- Integration with Production Systems

  - Integrate the machine learning model into the production system or application where it’ll be used. This may involve deploying it as part of a web service, microservice, or containerized application.

  - Ensure the model’s inputs and outputs are compatible with the application’s data flow and user interface.

- Scalability and Performance Optimization

  - Optimize the model’s performance and scalability to handle the expected production workload. This may involve parallelization, distributed computing, or specialized hardware (e.g., GPUs).

  - Implement monitoring and load balancing to ensure smooth operation under varying demand levels.

- Model Versioning and Management

  - Establish a versioning system for your machine learning models to track changes and updates over time.

  - Implement model management practices, including the ability to roll back to previous versions if issues arise.

- Security and Privacy Measures

  - Implement security measures to protect the model and data against unauthorized access and attacks.

  - Ensure compliance with data privacy regulations by anonymizing or encrypting sensitive data as necessary.

- Monitoring and Logging

  - Set up comprehensive monitoring and logging of model performance and usage in production. Monitor metrics like accuracy, response time, and resource utilization.

  - Implement alerting systems to notify stakeholders of anomalies or issues that require attention.

- Testing and Validation

  - Perform thorough testing of the deployed model to ensure it behaves as expected in the production environment.

  - Implement A/B testing or experimentation frameworks to assess the impact of model changes and optimizations.

- Documentation and Knowledge Sharing

  - Document the deployed model’s configuration, input/output specifications, and integration details.

  - Share knowledge about the model’s behavior, limitations, and troubleshooting procedures with relevant teams and stakeholders.

- Continuous Improvement

  - Establish a process for model retraining and updates to adapt to changing data patterns and requirements.

  - Continuously gather feedback from end-users and stakeholders to inform model improvements.

- Compliance and Governance

  - Ensure that the deployment complies with legal and regulatory requirements in your domain or industry.

  - Establish governance mechanisms to oversee the responsible use of machine learning models in production.

- User Training and Support

  - Train end-users and support teams on how to interact with and troubleshoot issues related to the deployed model.
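The serialization step in the list above can be sketched with Python’s built-in `pickle` module. This is a minimal illustration, assuming a tiny logistic-regression-style model whose parameters fit in a dictionary; the bundle layout, the `make_bundle` and `predict_proba` helpers, and all numeric values are hypothetical. The point it shows is that preprocessing parameters and a version tag travel together with the model weights.

```python
import math
import pickle


def make_bundle():
    """Hypothetical trained artifact: preprocessing parameters stored
    next to the model weights so production applies the same scaling."""
    return {
        "model_version": "1.0.0",
        "feature_means": [50.0, 3.0],
        "feature_scales": [10.0, 1.5],
        "weights": [0.8, -0.4],
        "bias": 0.1,
    }


def predict_proba(bundle, raw_features):
    """Scale the raw inputs, then apply a logistic model."""
    scaled = [
        (x - mean) / scale
        for x, mean, scale in zip(
            raw_features, bundle["feature_means"], bundle["feature_scales"]
        )
    ]
    z = sum(w * x for w, x in zip(bundle["weights"], scaled)) + bundle["bias"]
    return 1.0 / (1.0 + math.exp(-z))


bundle = make_bundle()

# Serialize to bytes; in practice these bytes would be written to a file
# or an artifact store.
payload = pickle.dumps(bundle)

# In the production service: load the artifact and serve predictions.
restored = pickle.loads(payload)
sample = [60.0, 3.0]
print(restored["model_version"], round(predict_proba(restored, sample), 4))
```

Only unpickle artifacts from sources you trust, because loading a pickle can execute arbitrary code. Formats such as ONNX avoid that risk and are portable across languages, at the cost of an extra export step.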

Deploying a machine learning model into production is an ongoing process that requires collaboration between your data scientists, engineers, DevOps teams, and domain experts. Effective deployment and monitoring are critical to realizing the value of machine learning applications and ensuring their reliability in real-world scenarios.
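As a rough illustration of the monitoring and alerting step, the sketch below tracks accuracy over a sliding window of recent predictions and signals when it drops below a threshold. The class name `RollingAccuracyMonitor`, the window size, and the threshold are assumptions made for this example. A real deployment would typically send the signal to an alerting system and would also track response time and resource utilization.

```python
from collections import deque


class RollingAccuracyMonitor:
    """Track accuracy over the most recent predictions (hypothetical helper)."""

    def __init__(self, window_size=100, alert_threshold=0.8):
        # deque with maxlen discards the oldest outcome automatically.
        self.outcomes = deque(maxlen=window_size)
        self.alert_threshold = alert_threshold

    def record(self, prediction, actual):
        """Store whether a prediction matched the label observed later."""
        self.outcomes.append(prediction == actual)

    def accuracy(self):
        """Accuracy over the current window, or None if nothing is recorded."""
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def should_alert(self):
        """Alert only once the window is full, to avoid noisy early readings."""
        window_full = len(self.outcomes) == self.outcomes.maxlen
        return window_full and self.accuracy() < self.alert_threshold


monitor = RollingAccuracyMonitor(window_size=5, alert_threshold=0.8)

# Five correct predictions fill the window.
for _ in range(5):
    monitor.record(prediction=1, actual=1)
alert_before = monitor.should_alert()

# Two wrong predictions push out two of the correct ones.
for _ in range(2):
    monitor.record(prediction=1, actual=0)
alert_after = monitor.should_alert()

print(f"accuracy={monitor.accuracy():.2f}, alert={alert_after}")
```

A sustained drop in windowed accuracy is one possible trigger for the retraining process described under continuous improvement.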

Examples of Machine Learning Applications

Machine learning applications have become increasingly common due to their ability to automate tasks, improve decision-making, and enhance processes. Here are some common examples, grouped by industry:

- Agriculture

  - Crop Monitoring: ML models analyze satellite imagery and sensor data to monitor crop health and predict yields.

  - Pest Detection: Computer vision identifies pest infestations in real time, enabling targeted interventions.

  - Precision Agriculture: ML optimizes resource use (water, fertilizer) based on crop needs, conserving resources.

- Automotive

  - Autonomous Vehicles: Machine learning powers self-driving cars, enabling them to navigate and make decisions based on sensor data.

  - Driver Assistance Systems: ML algorithms provide lane-keeping, adaptive cruise control, and collision avoidance features.

  - Predictive Maintenance: Predictive models help identify vehicle maintenance needs, reducing breakdowns.

- Education

  - Personalized Learning: ML adapts educational content to individual student needs, improving learning outcomes.

  - Predictive Analytics: Models identify students at risk of dropping out, allowing for early interventions.

  - Grading Automation: Natural language processing automates essay grading and assessment.

- Energy

  - Energy Consumption Forecasting: Predictive models help utilities optimize energy generation and distribution.

  - Grid Management: ML manages power grid stability and identifies faults for rapid response.

  - Energy Efficiency: Algorithms analyze building data to optimize energy use in commercial and residential properties.

- Entertainment

  - Content Recommendation: ML algorithms recommend movies, music, and articles tailored to individual preferences.

  - Content Creation: AI generates creative content, including art, music, and literature.

  - Real-time Analytics: ML analyzes user interactions in online games, enhancing gameplay.

- Finance

  - Fraud Detection: Machine learning detects fraudulent transactions by identifying unusual patterns and behaviors, reducing financial losses.

  - Credit Scoring: ML models assess creditworthiness by analyzing customer data, leading to more accurate loan approvals.

  - Algorithmic Trading: Algorithms use historical data and real-time market information to optimize portfolio trading decisions.

- Healthcare

  - Disease Diagnosis: Machine learning models can analyze medical images (e.g., X-rays, MRIs) to assist in diagnosing diseases such as cancer, retinopathy, and pneumonia.

  - Drug Discovery: ML algorithms are used to analyze chemical compounds, predict drug interactions, and identify potential candidates for new drug development.

  - Patient Risk Stratification: Predictive models help healthcare providers identify patients at risk of readmission, allowing for early intervention and cost savings.

- Manufacturing

  - Predictive Maintenance: Machine learning predicts equipment failures, allowing for timely maintenance and reduced downtime.

  - Quality Control: Computer vision systems identify product defects during manufacturing, improving quality.

  - Supply Chain Optimization: ML optimizes supply chain logistics, reducing costs and improving delivery times.

- Retail

  - Recommendation Systems: ML powers product recommendations based on user behavior, increasing sales and customer satisfaction.

  - Inventory Management: Demand forecasting models optimize inventory levels, reducing overstock and stockouts.

  - Price Optimization: ML algorithms analyze market dynamics and competitor pricing to optimize pricing strategies.

These examples demonstrate the versatility and transformative potential of machine learning across industries, improving efficiency, decision-making, and customer experiences. The application of machine learning continues to evolve and expand, driving innovation and reshaping traditional business processes.

The Takeaway

In summary, we’ve covered how machine learning’s growing presence across industries underscores its transformative potential. Its applications range from healthcare’s disease diagnosis and personalized treatment to finance’s credit scoring and fraud detection.

Education relies on personalized learning, while the entertainment sector offers content recommendations and creative content generation. Retail benefits from recommendation systems and inventory optimization, while manufacturing enjoys predictive maintenance and quality control improvements. Other areas that benefit from ML include the automotive and agriculture sectors, which are now implementing autonomous driving and precision agriculture, respectively.

Finally, the energy industry uses machine learning for consumption forecasting and grid management.

Machine learning is revolutionizing industries globally, reshaping how organizations operate and adapt. We have stepped into a future where data-driven insights are imperative and machine learning drives innovation and redefines possibilities.

Ready to harness the power of machine learning for your enterprise? Look no further. At Iterators, we’re your trusted partner in ML-focused enterprise software development. Our expertise in machine learning applications ensures that you stay at the forefront of technology, delivering exceptional value to your customers. Let us help you enhance your customer experience and shape the future of your industry—contact Iterators today and unlock the endless potential of data-driven solutions.