Data labeling is the process of identifying raw data and annotating it with tags based on its properties, so that a machine learning model can understand it and make accurate predictions. Done well, it cuts down on the frustration caused by inaccurate data and gives your model the best chance of performing well.

It’s a lot like labeling spice jars: when someone with absolutely no kitchen expertise has to cook, they can still pick out the right spices by reading the labels.

When supervised machine learning models are fed training data, this raw data needs to be ‘labeled’ according to its content for the model to comprehend and respond properly. This labeled data can be anything, from the caption of an Instagram post to the topic of an important conference or even a tweet!

The Importance of Accurate Data Labeling

Mislabeling or a lack of labeling can lead to a dysfunctional algorithm. For example, if an algorithm fails to generalize to new data, its predictions will be unreliable and its results ineffective.

Think about it as an essay with no title. Time and resources will be wasted trying to decode what the essay is truly about, and if the topic is not what you thought it was—you’ll have to start from scratch!

Skipping initial data labeling entirely can lead to a lack of trust in technology, especially in sensitive industries like healthcare, banking, or automotive.

For example, the current rate of customer trust in AI chatbots in healthcare stands at 72% in the U.S. Now imagine a chatbot trained on incorrect data that offers incorrect advice to a patient. If the effects are mild at first and go unrecognized, the issue can grow into something far more serious and lead to a loss of trust in chatbots altogether.

Accurately labeled data—or the ground truth—ensures the model is trained on high-quality data. The more accurately the data is labeled, the more reliable the decision-making process will be. Businesses use this efficient practice to visualize trends and patterns effectively.

Now, let’s look at what data labeling means in practice.

Consider a hospital where a radiologist labels X-rays of patients showing multiple diseases and conditions. They label the data showing arthritis, chest infections, and pneumonia against the X-rays that do not show these issues.

A machine learning model trained on these labels learns what X-rays look like for patients diagnosed with each condition. The radiologist continues to monitor the quality of the model’s output to keep it accurate.

Techniques and Practices of Data Labeling

First, let us understand the key aspects of labeling data.

1. Data Collection

You wouldn’t put chocolate on pizza, or so I hope. Similarly, it’s very important to collect only the raw data relevant to the desired outcome. For example, if your model is being trained to translate languages for users, there’s no need to gather data about the weather or whether the stock market is flourishing. In smaller ML projects, also avoid adding complexities that merely seem relevant at first. Do your research to find out whether the data is essential to the purpose.

Check for anomalies or errors:

Before labeling, check the raw data for inaccuracies or misinformation. This helps identify anomalies and narrow down the root causes of undesired labeled outputs later on. Think of this stage as sifting through the data to separate the important information from the erroneous and problematic.
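
As a minimal sketch of this pre-labeling check, assume the raw records are plain dictionaries with hypothetical `id` and `text` fields (these names are made up for illustration and do not come from any particular tool). The function below separates usable records from empty or duplicated ones before anything is sent for labeling:

```python
def screen_raw_records(records):
    """Split raw records into usable ones and rejected ones with a reason.

    The `id` and `text` field names are assumptions made for this example.
    """
    seen_texts = set()
    usable, rejected = [], []
    for record in records:
        text = (record.get("text") or "").strip()
        # Normalize case and whitespace so near-identical copies are caught.
        normalized = " ".join(text.lower().split())
        if not normalized:
            rejected.append((record.get("id"), "empty text"))
        elif normalized in seen_texts:
            rejected.append((record.get("id"), "duplicate text"))
        else:
            seen_texts.add(normalized)
            usable.append(record)
    return usable, rejected


raw = [
    {"id": 1, "text": "Bonjour le monde"},
    {"id": 2, "text": "  bonjour   le monde "},
    {"id": 3, "text": ""},
    {"id": 4, "text": "Guten Morgen"},
]
usable, rejected = screen_raw_records(raw)
```

Keeping the rejection reason next to each record ID makes it easier to trace where bad data came from, which is the point of this stage.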

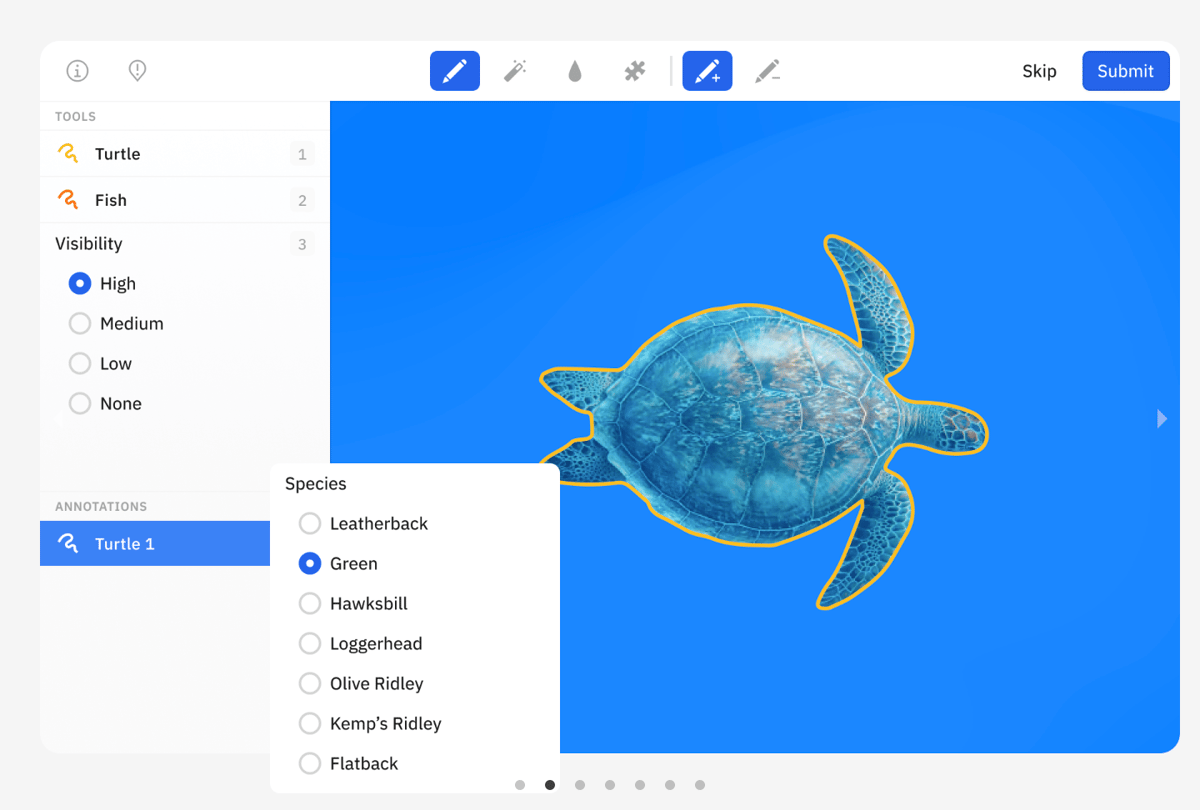

2. Labeling Data

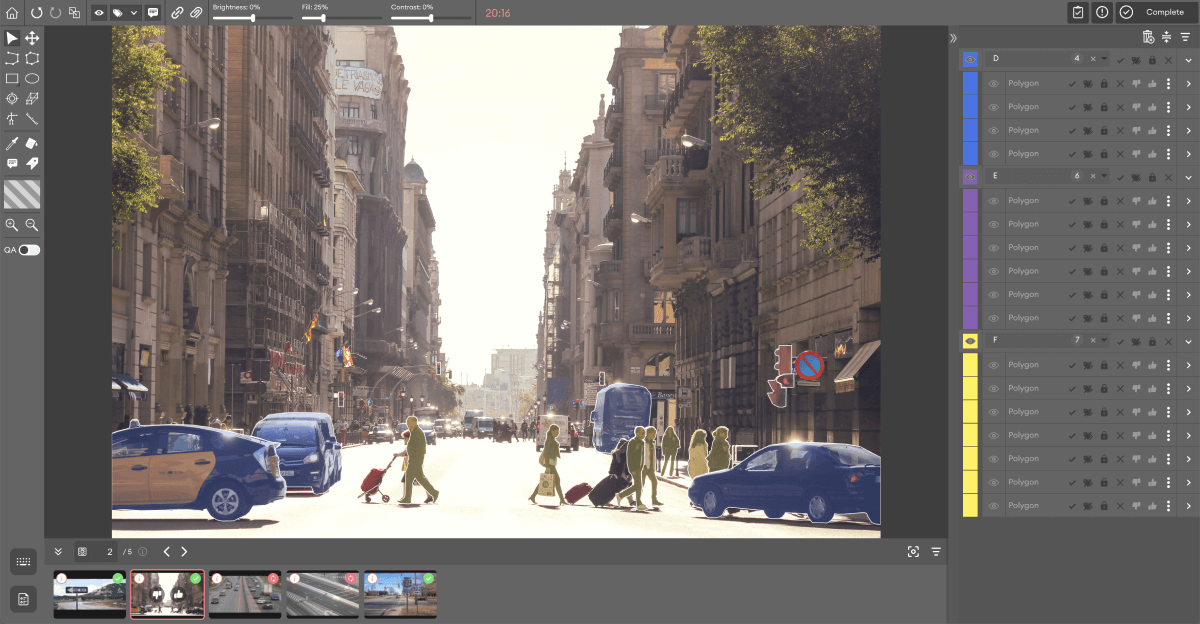

Label the data accordingly. For example, an engineer can label all pictures showing streetlights to help the model learn to identify that object. The way you label the data is the turning point that determines the success of your model. Base your labeling on research and sound reasoning, because the labels form the basis of your model’s intelligence and output.

3. QA testing

Consistent quality assurance is important for a smoothly running ML model. Don’t take breaks from running tests. Even the most successful ML models face some issues, and regular QA testing is the way to keep them in the ‘solvable’ range.

4. Training the model

Train the model on the tested data. At this stage, closely monitor the results for any unwanted changes, even seemingly negligible ones.

Methods of Data Labeling

1. Manual Labeling

Manual labeling is done by human annotators based on defined guidelines. It is most common in areas with no room for error, where human expertise is needed to understand the correct context.

This method is relatively costly and hard to scale because of its dependence on human resources. For example, labeling big data, such as the types of plants found in North American forests, requires a huge amount of labor. In such cases, other types of labeling are preferred.

2. Automated

This method uses automated or predefined algorithms to annotate data. Though time-effective, it requires validation before its output can be relied on. Its initial costs are higher, but its scalability and ability to categorize data in bulk give it the edge.

For example, automated labeling is used in image identification to distinguish between images containing a particular object and the ones without it.

3. Active Learning

Active learning is all about directing human intervention towards the uncertain data samples.

The model labels the samples it is confident about and flags the most informative, uncertain ones for human annotators, which keeps human resource needs to a minimum. Though costly to set up, this method scales very well and can make up for the initial costs in the long run.
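
One common way to decide which samples count as uncertain is uncertainty sampling. The sketch below assumes a binary task where the model returns a probability for the positive class. The input format, the item names, and the `margin` value are all assumptions for illustration:

```python
def route_by_uncertainty(predictions, margin=0.2):
    """Split model predictions into auto-labeled items and items for human review.

    `predictions` maps an item id to the model's probability for the positive
    class. Items whose probability lies within `margin` of 0.5 are treated as
    uncertain. Both the input shape and the margin are assumptions.
    """
    auto_labeled, needs_human = {}, []
    for item_id, prob in predictions.items():
        if abs(prob - 0.5) < margin:
            needs_human.append(item_id)
        else:
            auto_labeled[item_id] = "positive" if prob > 0.5 else "negative"
    # Most uncertain items (closest to 0.5) come first in the review queue.
    needs_human.sort(key=lambda i: abs(predictions[i] - 0.5))
    return auto_labeled, needs_human


scores = {"img_1": 0.97, "img_2": 0.55, "img_3": 0.08, "img_4": 0.38}
auto_labeled, needs_human = route_by_uncertainty(scores)
```

In a full active learning loop, the human labels for the queued items would be fed back into training and the routing repeated. That loop is left out here to keep the sketch short.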

4. Crowdsourcing

Crowdsourcing involves outsourcing data labeling to a large number of contributors. Drawing on a diverse, distributed workforce brings a range of perspectives to the labels. Crowdsourcing has applications in speech recognition, computer vision, and NLP (Natural Language Processing).

5. Programmatic and Synthetic Labeling

Programmatic labeling assigns labels using scripts or rules that follow predefined patterns. Synthetic labeling is a time-effective solution that creates new labeled data based on existing datasets.
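
To make the programmatic side concrete, here is a minimal sketch of rule-based labeling for a sentiment task. The keyword rules and the label names are hypothetical. A real project would derive them from its own labeling guidelines:

```python
import re

# Hypothetical keyword rules for a sentiment task; a real project would
# derive these from its own labeling guidelines.
RULES = [
    (re.compile(r"\b(refund|broken|terrible)\b", re.IGNORECASE), "negative"),
    (re.compile(r"\b(love|great|excellent)\b", re.IGNORECASE), "positive"),
]


def label_with_rules(text):
    """Return the label all matching rules agree on, or None to abstain."""
    votes = {label for pattern, label in RULES if pattern.search(text)}
    return votes.pop() if len(votes) == 1 else None


reviews = [
    "I love this jacket, great fit",
    "Arrived broken, I want a refund",
    "Great color but terrible stitching",
    "It is a shirt",
]
labels = [label_with_rules(r) for r in reviews]
```

The function abstains (returns `None`) when rules conflict or nothing matches, so those items can be passed to a human labeler instead of receiving a guessed label.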

Quick Question!

A company aims to create an ML model for its clothing business but has limited human resources. What method of data labeling would be best?

Types of Data Labeling

1. Supervised

Supervised data labeling relies solely on labeled data for training. Each training example includes both the input data and its corresponding label.

The end goal is to provide the model with as many examples as possible to ensure the model works correctly.

2. Semi-Supervised

Semi-supervised data labeling uses a dataset in which unlabeled data outnumbers labeled data.

As with supervised labeling, the model studies the labeled data, which then acts as a prototype for labeling the unlabeled data. Semi-supervised data labeling reduces labor costs and improves productivity.

For example, the model learns from 150 labeled pictures of “eagles” and “hawks” and applies the learned identification technique to 1500 pictures.

3. Unsupervised

This type of labeling leaves the interpretation and structuring of data labeling up to the learning model entirely.

The model is trained on diverse examples without definitive labels, leaving the data organization entirely up to the model. Unsupervised labeling uses techniques like dimensionality reduction, anomaly detection, and clustering.

Even though the applications of unsupervised data labeling aren’t especially wide, many areas of research can still benefit from it. Let’s look at a case scenario to understand this better:

Case Study

An e-commerce business wants to understand its customers better to design marketing strategies.

The process:

The company first collects customer data and extracts features like purchase frequency and average spend per customer. It then applies an unsupervised learning algorithm to identify patterns in the data.

The algorithm groups customers into clusters, which are given labels like frequent and occasional and then sent off to the marketing team.

The outcome:

This approach avoids manual labeling and helps save time and resources.
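
The case study does not say which algorithm the company uses. As one possible illustration, the sketch below runs a tiny two-cluster k-means on purchase frequency and average spend. The customer names and numbers are invented, and the features are left unscaled for brevity, whereas a real pipeline would normally normalize them first:

```python
from math import dist


def two_cluster_kmeans(points, iterations=10):
    """Tiny k-means for k=2, seeded with the lowest and highest points."""
    centroids = [min(points), max(points)]
    assignments = []
    for _ in range(iterations):
        # Assign each point to its nearest centroid.
        assignments = [
            min((0, 1), key=lambda c: dist(p, centroids[c])) for p in points
        ]
        # Move each centroid to the mean of its assigned points.
        for c in (0, 1):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:
                centroids[c] = tuple(sum(v) / len(members) for v in zip(*members))
    return assignments, centroids


# (purchases per month, average spend) per customer; invented sample data.
customers = {
    "ana": (12, 40.0),
    "ben": (1, 35.0),
    "cho": (10, 55.0),
    "dev": (2, 20.0),
}
assignments, centroids = two_cluster_kmeans(list(customers.values()))

# Name the cluster with the higher average purchase frequency "frequent".
frequent = max((0, 1), key=lambda c: centroids[c][0])
segments = {
    name: "frequent" if a == frequent else "occasional"
    for name, a in zip(customers, assignments)
}
```

Note that the algorithm only produces anonymous groups. Naming them "frequent" and "occasional" is a separate step applied afterwards, here by comparing the centroids.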

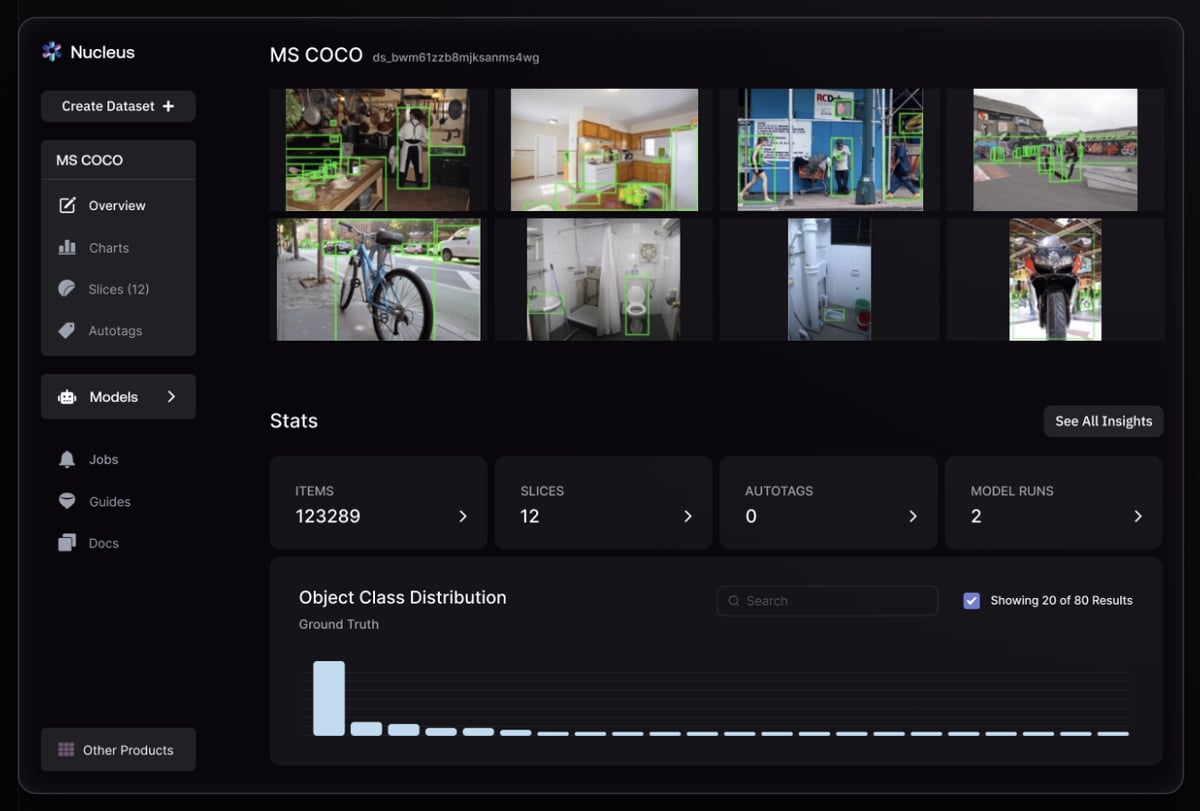

Tools and Technologies in Data Labeling

Most data labeling tools offer these key features:

1. Collaborative tools

These tools are structured to support collaborative work and project management. Google Sheets is a good example, allowing teams to track their progress, compile results, and assign tasks.

These tools have a user-friendly interface designed to manage less complex data handling.

2. Crowdsourcing platforms

Crowdsourcing platforms are shared computing platforms that handle tasks by utilizing human intelligence through a collaborative approach. Amazon Mechanical Turk is a popular tool for assigning tasks to humans.

It can handle sentiment analysis and image annotation with set quality control measures.

3. Automation and AI

AI-assisted tools can help streamline operations or carry out labeling with minimal human intervention.

Amazon’s SageMaker Ground Truth is a leading data labeling platform that uses machine learning models to label data automatically. It features built-in workflows and integrates smoothly with other AWS services.

Popular tools

1. SuperAnnotate

An annotation tool must support multiple annotation types. Annotation can be performed with a variety of software tools, such as VGG Image Annotator.

Advantages:

- SuperAnnotate is a popular AI-assisted annotation option with advanced 2D and 3D capabilities, multi-format support, and a range of project and collaboration options.

- This tool works exceptionally well for large-scale organizations. Its AI automation streamlines workflows and enhances productivity.

Use Cases:

- This tool is excellent for computer vision work. Object identification is one of its main strengths, and SuperAnnotate is designed for projects that require detailed graphic annotation.

- Developers can also adopt SuperAnnotate at the start of a large-scale project, where it supports automation in the labeling process.

2. Scale AI

Scale AI is an annotation tool that is particularly valuable for large datasets in the autonomous vehicle industry. However, it can be costly for small-scale organizations looking for a data volume above 200k or 2M.

Advantages:

- It can easily merge into existing machine-learning tools and channels.

- One-of-a-kind workflows that provide quick setup and execution.

- It features strong API integration tools, human intervention to reinforce quality and accuracy, and AI-assisted labeling. Scale Rapid, Scale Studio, and Scale Pro are industry-specific solutions for different organizations.

Use Cases:

- Scale AI is a popular choice when developing AI applications. It produces highly reliable data and has applications across many industries.

3. LabelBox

LabelBox supports various data types and is ideal for teams.

Advantages:

- It offers features such as workflow automation, advanced analytics, data management tools, vector and similarity searches, multi-format support, and collaboration between tools.

- It is highly scalable and has a user-friendly interface.

- It offers on-demand labeling services, which provide flexible resource management.

Use Cases:

- Very competent in labeling different data types like images, videos, etc.

- Can be used to pre-label data and assess the ML model.

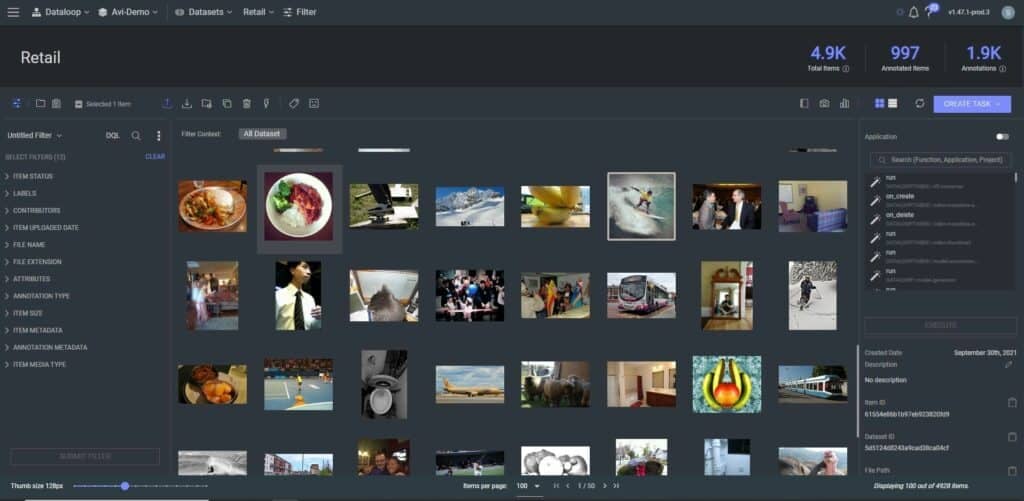

4. DataLoop

DataLoop provides an exceptional set of features with real-time collaboration tools. It offers a free trial and custom pricing but can be expensive for small-scale teams and individuals.

Advantages:

- It supports multiple formats, feedback loops, customizable workflows, and AI-powered assistance.

- Uses a human-in-the-loop approach to data labeling that adds a touch of authenticity and security for users.

Use Cases:

- If your model is in the field of agriculture or robotics, DataLoop is a great choice because it works remarkably well in computer vision applications.

Best Practices

To ensure that quality assurance remains top-notch in data labeling, here are some key practices you can follow.

1. Clear Labeling Guidelines

Develop a formal document that specifies the annotation and labeling criteria and terminologies.

All annotators, whichever labeling approach is used, should follow these guidelines strictly. Defining the objectives and requirements up front helps maintain uniformity.

The Open Annotation Data Model is a framework for interoperable annotations across multiple systems, and organizations can implement it to ensure smooth operations.

2. Collecting Data From Every Angle

Your model will only pick up on the data it was trained on. For example, if your model was only fed images of white, black, and orange cats, it would have problems identifying a calico.

For this reason, it is essential to cater to every possible variation that the model might have trouble recognizing.

3. Training

AI models are only as good as the data on which they are trained. The same goes for human annotators: whether supervising, intervening, or handling tasks top-to-bottom, they need comprehensive training to understand requirements and techniques.

Practice with worked examples ensures that all labelers, automated or human, are equipped with knowledge of data labeling terminology, best practices, and challenges.

4. Implementing cohesive labeling strategies and tools

It is important to research the tools most compatible with the data labeling you have planned. Using advanced tools can help reduce variability in the process.

These tools have user-friendly interfaces that can make the data labeling process a breeze.

Work out which labeling type and strategy would best reduce anomalies and confusion. For example, think back to the early days of COVID-19. A specific test was taken and showed the presence or absence of the disease. The process is pretty straightforward, isn’t it?

However, we saw a wide range of misinterpreted data that showed false test results. This is because, at that time, the data available to confirm the disease was insufficient for the process to be left entirely to automation.

For cases like this, it is essential to implement active learning or supervised data labeling rather than unsupervised.

5. Strict Quality Control Measures

After selecting your tools and strategy, it is imperative to work on quality control. Regularly check labeled data for errors or inconsistencies to avoid data bias or inaccuracies.

- Double Annotating: Assign the same task to two labelers to check that the output is consistent. If any inconsistency is found, resolve it through consensus before finalizing. Though double annotating can be costly, it makes the datasets far more robust.

- Regular Auditing: Create subsets of the labeled data and send them off for auditing. Labelers should receive the audit feedback promptly to help improve accuracy. You can use metrics like precision and F1 score to track annotation quality over time.

- Feedback Loops: Implement inclusive systems to get real-time feedback from users.

- Incentives: Wherever human labelers are involved, reward your most reliable labelers to keep them motivated. Showcase their work as an example, and regularly hold workshops and training sessions.
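
For double annotating, a widely used way to quantify how consistent two labelers are is Cohen's kappa, which corrects raw agreement for agreement expected by chance. The sketch below is a plain-Python illustration with invented labels. It also lists the items the two annotators disagree on, so they can go to a consensus step:

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa: agreement between two annotators, corrected for chance."""
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    expected = sum(
        (counts_a[label] / n) * (counts_b[label] / n)
        for label in set(labels_a) | set(labels_b)
    )
    if expected == 1:
        return 1.0  # both annotators used a single identical label throughout
    return (observed - expected) / (1 - expected)


annotator_a = ["cat", "cat", "dog", "dog", "cat", "dog"]
annotator_b = ["cat", "dog", "dog", "dog", "cat", "dog"]

kappa = cohens_kappa(annotator_a, annotator_b)
# Indices where the two annotators disagree and a consensus step is needed.
to_resolve = [i for i, (a, b) in enumerate(zip(annotator_a, annotator_b)) if a != b]
```

A kappa near 1 indicates strong agreement and a value near 0 indicates agreement no better than chance. What counts as acceptable depends on the task, so any cutoff should be set in your own guidelines.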

6. Using modern tools

Avoid skepticism when it comes to integrating modern technologies like AI. These tools are here to assist us and do what we tell them to do. In data labeling, their help can include:

- Pre-labeling: AI can shorten turnaround times by pre-labeling the data for human labelers to recheck. For example, Google uses AI to help organizations automatically label data.

- Active Learning: This data labeling technique is very effective in producing accurate results. The AI automatically flags uncertain data to be assigned to human labelers.

- Assistance in QA: AI can serve as a helping hand in maintaining quality control. AI tools can identify inconsistencies promptly.

Hear from industry experts:

Domain experts can provide detailed dos and don’ts on how to go about data labeling.

They can provide specific guidelines and invaluable insights in review and validation sessions. Due to their experience in error analysis, especially in highly complex tasks, experts can single out inconsistencies from the start, saving time and resources.

Data Privacy and Data Labeling

Imagine your ML model is at the top of its game but follows weak data privacy standards. If an unauthorized person, like a hacker or cybercriminal, gains access to it, they can manipulate the labeling and corrupt the entire model.

Your model and your clients’ information will be compromised, which can become a source of legal issues and penalties.

1. Protection Measures

Data protection is one of the most crucial elements of data labeling. To protect sensitive information, taking preventive measures is the way to go:

2. Data Cleaning

Data cleaning removes anomalies, duplications, and inconsistent and inaccurate data within a dataset.

3. Manage access control

Limiting access to authorized personnel reduces the risk of data mishandling. Choose the most compatible access control model to ensure labelers can view only the data relevant to their work.

4. Encrypt data

Data is most likely to be intercepted during transfer and storage. Sensitive data should be encrypted both at rest and in transit to mitigate this risk.

5. Data security training

Provide appropriate training and workshops on how to handle data correctly. Most of the time, labelers are unaware of how to deal with sensitive example data.

Proper training can emphasize best practices and show how to implement labeling techniques securely.

6. Audit trails

Know where and when specific data was accessed. Audit trails record all modifications made to data and ensure accountability.

Anonymization techniques: Data masking and perturbation are anonymization techniques that limit data privacy leaks.

- Data masking: swaps personal information for fictional placeholders.

- Perturbation: alters original values or adds random noise to preserve privacy.
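
Both techniques can be illustrated in a few lines. This is a simplified sketch, not a complete anonymization scheme: the email pattern is deliberately loose, the placeholder text and noise scale are arbitrary choices, and real projects should assess re-identification risk separately:

```python
import random
import re

# Loose email pattern, good enough for illustration only.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def mask_emails(text, placeholder="[EMAIL]"):
    """Data masking: swap each email address for a fixed placeholder."""
    return EMAIL_PATTERN.sub(placeholder, text)


def perturb(values, scale, seed=0):
    """Perturbation: add small uniform noise in [-scale, scale] to each value."""
    rng = random.Random(seed)  # seeded only to make the example repeatable
    return [v + rng.uniform(-scale, scale) for v in values]


masked = mask_emails("Contact jane.doe@example.com about the scan")
ages = [34, 51, 29]
noisy_ages = perturb(ages, scale=2)
```

Masking removes the identifier entirely, while perturbation keeps values roughly useful for analysis but no longer exact. Which one fits depends on whether the labeler needs the field at all.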

7. Regular validation

Large labeled datasets can be derived from anonymized data. Consistently run validation processes to confirm that this data meets quality assurance and ethical standards.

8. Industry Standards

Industry standards are subject to change, and different standards apply to different elements of data labeling.

ISO STANDARDS:

- For example, stay compliant with standards like ISO 25012 to assess the data quality of the organization.

- ISO 27001 is an international standard for information security management, and organizations can be certified against it.

Each organization can form its specific data annotation guidelines.

Comply with HIPAA:

The Health Insurance Portability and Accountability Act (HIPAA) governs how protected health information is handled in the U.S., so only authorized data labelers should interact with healthcare data.

CCPA:

Data privacy acts like the CCPA can assure clients their data is not mishandled. When using client data as examples, set definitive guidelines on where and how the data is being utilized.

DPIAs:

Organizations might need to conduct DPIAs (Data Protection Impact Assessments) to evaluate data processing risks.

The more compliant the data labeling company is, the more likely clients and users will interact with them.

Data handling is a sensitive domain, and labeling can collect huge amounts of data as examples. Always prioritize laws and regulations over shortcuts to limit disastrous outcomes.

Challenges in Data Labeling

1. Financial constraints

Data labeling primarily requires human intervention for accurate results. This means the larger the dataset, the more the workforce is needed.

This workforce would need to be highly skilled in specialized fields like healthcare and banking, further increasing operational costs.

Take the example of a healthcare startup that wants to build a medical imaging model to detect different types of cancer. The startup is facing financial issues, yet it needs to employ radiologists to label the data accurately. As a result, it will eventually compromise on dataset size, which lowers the model’s accuracy.

2. Contextual misunderstandings

AI and automation are based on human input. Now, consider what happens if that human-inputted data is erroneous. All collected data is susceptible to mistakes and biases, which can have even more detrimental consequences in big data and sensitive industries.

Ambiguity: Ambiguous data points can lead to variations in interpretation. This results in inconsistent and faulty labeling, especially in complex cases that have inadequate categorization or examples to learn from.

Subjectivity: Crowdsourcing is about including different backgrounds and experiences in labeling data. However, this can also have the opposite effect, as every labeler may unconsciously bring in personal bias and preconceived notions.

For example, a retail store wants to record the fabric’s material before designing the clothes to ensure it remains 100% cotton. If the ML model fails to correctly estimate this percentage, it can lead to customers buying deficient products. The business can face backlash and legal consequences for this.

3. Accuracy

Accuracy is possibly the most impactful challenge of data labeling.

A machine learning model trained on low-quality data will produce inconsistent results.

Proper reviewing, double annotating, and pilot testing are key to consistent results, but they can be resource-intensive and harder to manage for a startup.

Consider an educational mathematics-based ML model trusted by students to provide them with solutions. If the data it was fed contains inaccuracies, students can be given incorrect solutions.

4. Complexity of Data and Time Constraints

Different data types need to be handled accordingly with unique annotation techniques.

Specialized skills are necessary for properly decoding these data types, especially in cases of nuanced meanings where misunderstandings are common.

Applying these steps under a tight deadline requires next-level time management skills. Annotators can feel stressed, making their labeling error-prone.

Case Study:

Scenario: Consider a financial company looking to develop an ML model for fraud detection. This model needs to have appropriate features to support extensive labeled datasets.

Challenges it would face:

Data privacy: The company can face hurdles in complying with regulatory standards. However, they should remain dedicated to ensuring data integrity and continue pursuing governance policies for this challenge.

Volume of data: Traditional labeling practices can make it complicated to manage the large number and diversity of transactions.

For this reason, maintaining the quality of labeled data is quite a task.

Scalability Issues: As the company expands, the amount of information to be labeled increases significantly.

To solve these problems, the company should implement a cloud-based platform that allows better collaboration and resources to scale.

Lesson to learn from:

To sum things up, here are some points we can learn from the case study:

- Always invest in training your team.

- Use modern data labeling technology and tools after adequate research.

- Pay attention to governance standards from the get-go to avoid data privacy issues in the long run.

- Receive guidance from field experts on how to label data.

Do’s of Overcoming Challenges

1. Create Guidelines

Create easy-to-follow guidelines that labelers can adapt to. These should include every step of the labeling process, from understanding big data applications to handling them, data privacy regulations to follow, and how to minimize ambiguity and subjectivity. For example, to make sure every labeler is aware of the guidelines, they can review sample data produced by the ML model and dissect where the guidelines were applied. This helps reveal whether any changes need to be made and adds to the labelers’ understanding.

Strategies to follow:

- Regular auditing and Feedback loops

- Updating guidelines based on changing scenarios.
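
For regular auditing, one way to turn an audit into a number you can track over time is to score a labeler's work against auditor-approved labels using precision, recall, and F1. The sketch below uses invented fraud labels. The `gold` and `labeler` lists are hypothetical audit samples:

```python
def precision_recall_f1(gold, predicted, positive):
    """Score a labeler's work on an audited subset against reviewed gold labels."""
    tp = sum(g == positive and p == positive for g, p in zip(gold, predicted))
    fp = sum(g != positive and p == positive for g, p in zip(gold, predicted))
    fn = sum(g == positive and p != positive for g, p in zip(gold, predicted))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Hypothetical audit sample: auditor-approved labels vs. one labeler's output.
gold = ["fraud", "ok", "fraud", "ok", "fraud", "ok", "ok", "fraud"]
labeler = ["fraud", "ok", "ok", "ok", "fraud", "fraud", "ok", "fraud"]

precision, recall, f1 = precision_recall_f1(gold, labeler, positive="fraud")
```

Here the labeler marked four items as fraud and three of them were correct, while one real fraud case was missed, so precision, recall, and F1 all come out at 0.75. Recomputing these after each audit shows whether feedback and guideline updates are actually improving quality.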

2. Focus on QA

Quality assurance is a major factor contributing to the success of machine learning models. As the quality of the ML model improves, the results it generates improve with it. For example, if a small bug appears in a healthcare-based ML model when diagnosing a skin issue, regular QA testing can single it out quickly and nip the problem in the bud.

Strategies to adopt:

- Double annotating and calibration meetings.

- Automated flag checking for inconsistencies.

3. Stay compliant with standards and legal regulations

Data privacy compliance is mandatory for the model to stay in operation. Think about how important security is in your day-to-day life and activities, then apply the same logic to an ML model that operates in financial accounting. For each activity the model performs, its security needs to be top-notch because of the nature of the industry. You can adopt these strategies to ensure compliance:

- Manage data governance by drawing a compliance framework.

- Use this framework to monitor data during the entire labeling process.

4. Balance inclusive diversity with supervision for biases

Diverse viewpoints are essential in data labeling. However, proper monitoring should be performed to remove any unintended biases that may have seeped into the final product. Sometimes, the model’s entire data may not be at fault, but it might have undertones of biases that the model picks up.

Strategies:

- Detect bias through statistical analysis.

- Encourage collaboration between labelers with conflicting viewpoints to lower bias rates.
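
As a simple starting point for detecting bias through statistical analysis, you can compare how often each annotator assigns a given label. The sketch below uses invented annotations and an arbitrary `tolerance`. It assumes annotators saw comparable items, and a real analysis would follow up with a proper statistical test:

```python
from collections import defaultdict


def positive_rate_by_annotator(annotations, positive):
    """Share of items each annotator marked with the `positive` label."""
    totals, positives = defaultdict(int), defaultdict(int)
    for annotator, label in annotations:
        totals[annotator] += 1
        positives[annotator] += label == positive
    return {a: positives[a] / totals[a] for a in totals}


def flag_outliers(rates, tolerance=0.25):
    """Flag annotators whose rate differs from the group mean by more than `tolerance`."""
    mean_rate = sum(rates.values()) / len(rates)
    return sorted(a for a, r in rates.items() if abs(r - mean_rate) > tolerance)


# Invented (annotator, label) pairs for a toxicity labeling task.
annotations = [
    ("ann", "toxic"), ("ann", "ok"), ("ann", "ok"), ("ann", "ok"),
    ("bob", "toxic"), ("bob", "ok"), ("bob", "ok"), ("bob", "ok"),
    ("cy", "toxic"), ("cy", "toxic"), ("cy", "toxic"), ("cy", "ok"),
]
rates = positive_rate_by_annotator(annotations, positive="toxic")
flagged = flag_outliers(rates)
```

A flagged annotator is a prompt for review and discussion, not proof of bias. The difference may also come from the items they happened to receive.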

Don’ts

1. Rush the process

Rushing the process is a disaster waiting to happen. The labelers will stress out and hastily label the data without paying attention to context or data type. Feeding such labels to an ML model can be detrimental; you can end up with a failed project and possible legal repercussions.

Always provide ample time for annotators to label data correctly.

2. Ignore ambiguities and biases

The guidelines should mention how to handle ambiguities. If biases and ambiguity persist, don’t overlook them; instead, hold sessions where annotators can reach a conclusion. Make sure labeling is done with proper contextual understanding.

3. Skip out on tool selection

Outdated or inadequate tools must not be used. Though costly, investing in top industry tools can yield better results. All labelers should know how to select the best tools and adjust them based on user feedback.

4. Ignore feedback

Turning a blind eye to user or annotator feedback is like shooting yourself in the foot. By not listening to complaints, you are only delaying inevitable issues. Always take concerns seriously, set up a system to collect feedback, and make changes accordingly.

What The Future Looks Like

1. Automated data labeling

Automated data labeling is on the rise and will continue to grow. As machine learning algorithms are implemented more effectively, techniques like self-supervised learning will reduce manual labeling.

Impact to Expect:

Automated data labeling has transformed AI and ML model development. It has become a go-to solution because it saves time. For larger datasets, it is the natural choice, as it reduces both the workload of current employees and the need for a large workforce.

This isn’t to say there are no downsides. As the use of automated data labeling increases, so does the amount of human oversight required.

2. Synthetic Data Generation

Synthetic data generation is all about creating artificial data that mimics the statistical properties of actual data. It is created through a combination of computational techniques and simulations.

Impact to Expect:

Synthetic data will allow faster data generation and labeled datasets to augment real-world data. It will continue to provide quality and diversity in datasets while safeguarding against data privacy issues.

3. Crowdsourcing and Active Learning

These data labeling techniques are expected to gain traction for their balanced approach of human annotating and ML models. Crowdsourcing will provide platforms with better quality control measures and real-time feedback.

On the other hand, active learning will continue optimizing resources by assigning complex data labeling to human annotators.

Impact to Expect:

Crowdsourcing keeps the data fresh and up to date by gaining inputs from labelers of all backgrounds. It’s a great way to diversify data and put it through automatic validation. It’s also a quick way to label data, especially for larger, more complex datasets. However, we can expect the issue of multiple clashing insights to persist.

Now, active learning is expected to become the most popular choice, especially in healthcare and finance. This is because of the human supervision element it requires. It’s not too costly, and there’s no need to employ a larger team as only the areas of confusion will be handled by the humans.

4. AR and VR

Augmented and virtual reality technologies can help label complex environments and 3D objects by aiding in visualization. This trend will only increase as data types continue to become more complex.

Impact to Expect:

AR and VR will continue to bring an interactive approach to data labeling. Their use in simulations and training can be expected to rise, especially in complex environments. For example, in the automotive industry, VR and AR can be used for practice on racetracks with life-like interactions with objects and traffic.

Impact of Blockchain on Data Labeling

Blockchain technology is already being incorporated in major industries, and data labeling is no exception.

Transparency

Labelers can track the origin of their labels and whether any modifications have occurred because blockchain provides a transparent ledger and clear documentation.

Decentralized nature

Blockchain can decentralize the labeling process, helping create a distributed network of annotators and increasing the diversity and reliability of the labels generated.

Data Integrity

Blockchain records the data’s history, ensuring the integrity of labeled data.

Labor Incentives:

Blockchain offers smart contracts, agreements that can automate incentives and help ensure annotators are fairly compensated for their work.
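
The transparency and integrity properties described in this section rest on hash-linked records. The sketch below is not a blockchain (there is no distributed network or consensus), only a minimal illustration of the hash-linking idea applied to label records. The field names and sample entries are hypothetical:

```python
import hashlib
import json


def append_entry(ledger, item_id, label, annotator):
    """Append a label record whose hash covers the previous entry's hash."""
    previous_hash = ledger[-1]["hash"] if ledger else "0" * 64
    record = {"item_id": item_id, "label": label,
              "annotator": annotator, "previous_hash": previous_hash}
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    record["hash"] = hashlib.sha256(payload).hexdigest()
    ledger.append(record)


def is_intact(ledger):
    """Recompute every hash and check that each entry links to the one before it."""
    previous_hash = "0" * 64
    for record in ledger:
        body = {k: v for k, v in record.items() if k != "hash"}
        payload = json.dumps(body, sort_keys=True).encode("utf-8")
        if (record["previous_hash"] != previous_hash
                or hashlib.sha256(payload).hexdigest() != record["hash"]):
            return False
        previous_hash = record["hash"]
    return True


ledger = []
append_entry(ledger, "xray_001", "pneumonia", "radiologist_a")
append_entry(ledger, "xray_002", "clear", "radiologist_b")
intact_before = is_intact(ledger)

ledger[0]["label"] = "clear"  # simulate someone silently editing a label
intact_after = is_intact(ledger)
```

Because each record's hash depends on the record before it, a silent change to an earlier label makes the verification fail, which is what lets labelers track whether modifications have occurred.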

AI and Data Labeling

AI systems are bound to become more complex, and comprehensive labeling will help mitigate bias in AI models and create fair systems, particularly in sensitive fields like healthcare and criminal justice.

Supervised learning

Labeled datasets are the foundation for supervised learning. Training supervised models requires high-quality labeled data from which AI models learn patterns. Labeled data can also be used as a standard against which model performance is measured.

Unstructured Data

Organizations find raw, unstructured data frustrating. Data labeling structures this data by annotating it for AI models to use. These models then make predictions based on this data.

Ethical and reputable AI

Labeled datasets can help define accountability and provide transparency in how AI models make their decisions. This is especially helpful in maintaining trust in AI.

These datasets can also help detect algorithmic bias that AI models may have.

Collaboration between humans and AI

With human-in-the-loop data labeling, AI’s capabilities can blend well with human supervision. Human annotators can define the context that AI systems could miss.

In the future, we can expect tools to assist human annotators as they work. This includes pop-up suggestions or automated preliminary labels, enhancing productivity and inspiration while retaining the human element.

The Bottom Line

Data labeling is a key step in the machine learning pipeline. ML models depend on accurate and consistent data labeling to function. In this guide, we’ve explored what data labeling is, its methods and types, as well as some deeper insights into how you should go about it.

Remember, data labeling isn’t just about tagging; it’s about unlocking the potential of machine learning models.
