Got data?

Make sure it’s clean!

If you make your decisions based on incorrect or inconsistent data, you can be sure the business results will not be good.

How does losing time and money sound?

How about lost clients and business opportunities?

Pretty bad, right?

Using unverified or wrongly interpreted data can have dire consequences.

And with many data cleaning solutions available, choosing the right one can be quite a challenge too.

So how do you do it?

Fortunately, there are a couple of things you can do to ensure the high quality of your data cleaning, and we’ll tell you all about them.

In this article, you’ll find the necessary information on:

- What is data cleaning.

- Why data cleaning matters.

- Benefits of data cleansing.

- Five steps to clean your data.

- How to choose a data cleaning solution.

Already know you need a custom data cleaning solution for your business? We can help! At Iterators, we design, build, and maintain custom software solutions for your business.

Schedule a free consultation with Iterators today! We’d be happy to help you design and build your data cleaning solution so you don’t have to worry about it.

Data Cleaning – What you need to know first.

“In data processing, ‘Garbage in, garbage out’ isn’t just a catchphrase; it’s a reality. When you’re working with small datasets, poor-quality data skews every insight, every model. Sure, with larger datasets and deep learning models, some noise might be absorbed, but in a competitive landscape, quality data still makes the difference. If you want reliable models, don’t overlook data cleaning.”

Łukasz Sowa

In the world of data processing, there is a wise saying:

“Garbage in, garbage out.”

It means your insights are only as good as the data you’re using to get them.

So how do you make sure that you’ve got the juiciest, quality data and not garbage?

Yep, you guessed it.

Data cleaning.

What is data cleaning?

Data cleaning is the process of identifying inconsistent or incorrect information in a database and then deleting or replacing it. This technique helps ensure the high quality of processed data and minimizes the risk of wrong or inaccurate conclusions. As such, it is a foundational part of data science.

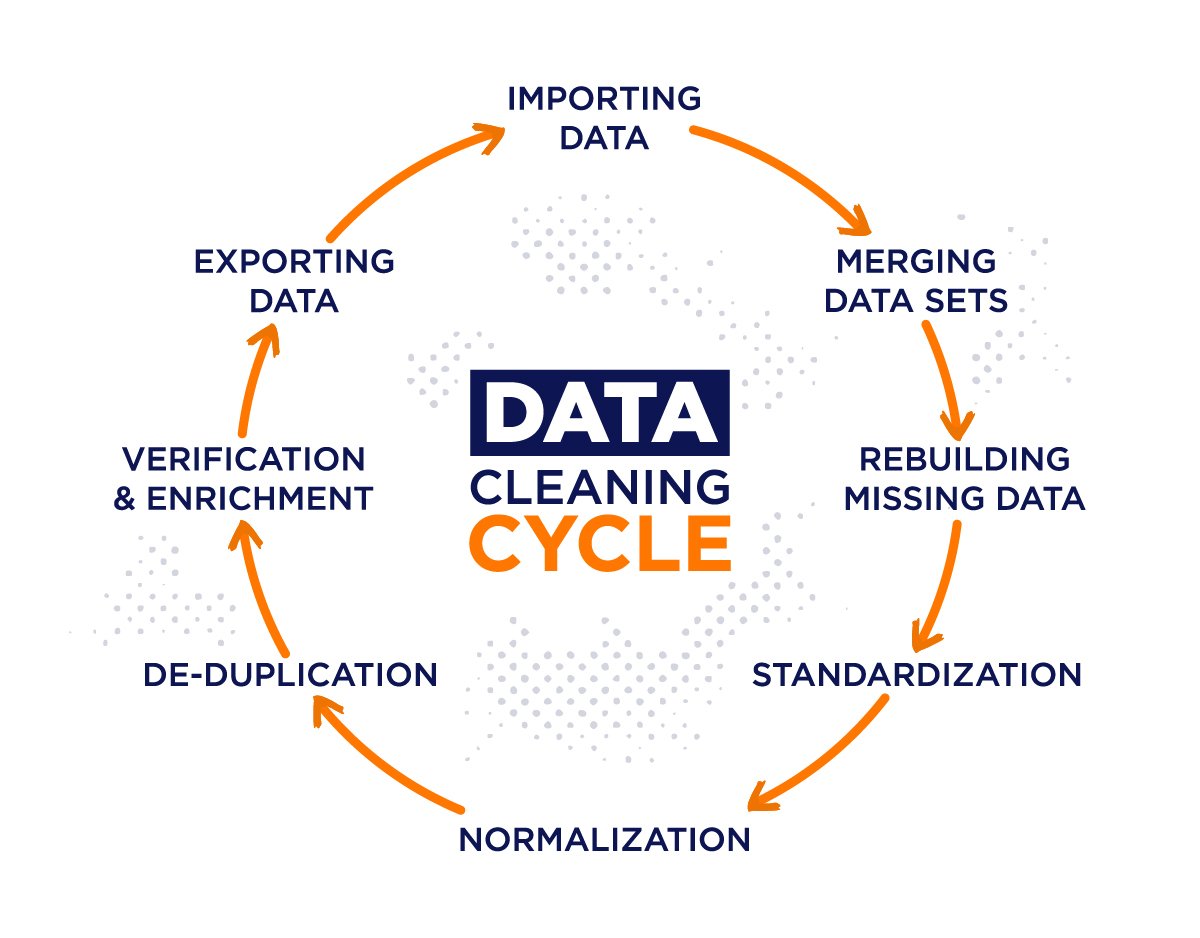

The standard data cleaning process consists of the following stages:

- Importing data

- Merging data sets

- Rebuilding missing data

- Standardization

- Normalization

- Deduplication

- Verification & enrichment

- Exporting data

And it can be easily visualized as a cycle.

Everyone intuitively understands the premise of data cleaning.

It’s a pretty straightforward idea: in order to get the best possible results out of your data, you need to make sure it’s clean.

But what is “clean” data?

Clean data is data that meets the requirements of quality data and can contribute to uncovering valuable business insights.

Fortunately, there is a consensus as to what can be considered “clean” quality data.

Let’s take a look at the properties that define quality data:

- Accuracy. The degree to which the data in question agrees with a trusted outside data source, which serves as our “true value” or “gold standard.”

- Completeness. The degree to which all required measures are known.

- Consistency. The degree to which the same data matches across the different databases in the whole system.

- Relevancy. The degree of “usefulness” of data, determining how closely a piece of information is related to an issue you’re researching.

- Validity. The degree to which the data in question conforms to defined rules or constraints, e.g., dates should fall within a specific range.

- Timeliness. Most data loses relevance pretty quickly, so this parameter is related to how “fresh” and up-to-date a piece of information is.

- Uniformity. The degree to which units of measure are consistent across all systems, e.g., data sets coming from the US and Germany might use different units of weight (pounds vs. kilograms).
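Some of these dimensions can be turned into simple numbers. Here is a minimal sketch that scores completeness and validity for one field of a small list of records. It assumes rows are plain Python dictionaries with `None` marking a missing value; the field names, the sample records, and the 0 to 120 valid age range are hypothetical.

```python
# Hypothetical sample records; None marks a missing value.
records = [
    {"name": "Ann", "age": 34, "country": "US"},
    {"name": "Bob", "age": None, "country": "DE"},
    {"name": "Cy", "age": 420, "country": None},
]


def completeness(rows, field):
    """Share of rows where `field` is present (not None)."""
    present = sum(1 for row in rows if row.get(field) is not None)
    return present / len(rows)


def validity(rows, field, is_valid):
    """Share of non-missing values of `field` that pass the `is_valid` rule."""
    values = [row[field] for row in rows if row.get(field) is not None]
    if not values:
        return 1.0
    return sum(1 for v in values if is_valid(v)) / len(values)


def age_rule(age):
    # Assumed validity rule: an age must fall between 0 and 120.
    return 0 <= age <= 120


print(f"age completeness: {completeness(records, 'age'):.2f}")  # 0.67
print(f"age validity: {validity(records, 'age', age_rule):.2f}")  # 0.50
```

Validity is computed only over values that are present, so a missing age lowers completeness without also counting as invalid.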

Why does data cleaning matter?

Data analytics is a complex, time-consuming, and expensive effort.

If you’re working with large sets of data, chances are there are significant business consequences involved, such as deciding how best to allocate your funds or how to reach peak productivity.

That’s why it’s imperative to minimize the risk of a fiasco.

How do you do that?

Data cleaning.

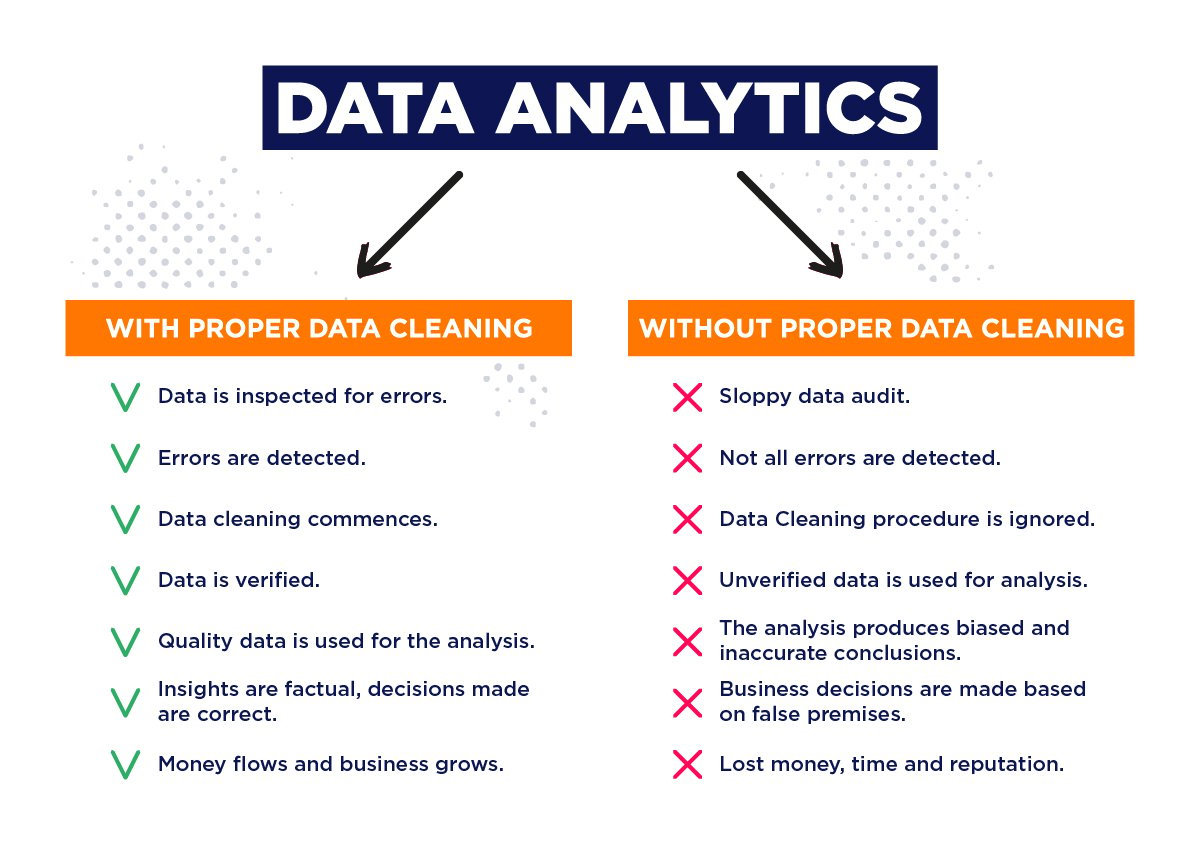

Without proper data cleaning procedures, you can’t be sure your data analytics results will provide real insights. IBM has estimated that poor data quality costs the U.S. economy 3.1 trillion dollars every year.

To demonstrate the gravity of the situation, let’s analyze two data analysis scenarios — one with data cleaning and the other without it.

Benefits of Data Cleaning.

So you know what happens when you neglect the data cleaning stage.

But what are the upsides of cleaning your data?

- Saved time and money – Inaccurate data leads to business strategies based on false assumptions. Data cleaning keeps your company from wasting time and money on developing an ineffective strategy.

- Increased productivity – Effective data cleansing leads to consistent and highly functional databases. Fewer errors mean faster, more effective workflows, which directly improves productivity.

- Improved business results – Data cleaning is the key to a properly functioning data analytics solution. When you have both clean data and a working analytics solution, you can expect better business results.

- Better decision-making – Clean, quality data is the basis for reliable business insights: the cleaner your data, the more you can trust the insights drawn from it.

- Maintained reputation – Bad business decisions cost more than money. If you make a decision based on inaccurate data, it makes you look bad and unprofessional. But when your insights are useful, people will notice, and your reputation will grow.

Interested in other benefits that big data analytics can generate for your business? Make sure you check out our article: Big Data and Its Business Impacts (+8 Examples)

Data Cleaning Process – 5 Steps To Ensure Clean Data

The following process is a set of standard data cleaning practices, and it will help you keep your data in check.

Let’s break it down into the following stages.

1. Data Audit

Any data cleaning process starts with taking a close look at your data. You have to determine what kind of errors your data set contains and where they’re located.

How do you do that?

Through the use of statistical and database methods that help you detect anomalies and contradictions.

What are some of those methods?

Let’s take a look:

Some software packages let you define a particular type of constraint and then generate code that checks the data set for violations of those constraints. They can also report which constraints were violated and how many times, and visualize those findings.
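Dedicated packages do this at scale, but the underlying idea fits in a few lines of plain Python: declare constraints as named rules, run every row through them, and count the violations per rule. This is a minimal sketch, not the API of any particular package; the constraint names, the sample rows, and the list of allowed countries are hypothetical.

```python
from collections import Counter

# Hypothetical constraints: each name maps to a rule that a valid row satisfies.
CONSTRAINTS = {
    "age_in_range": lambda row: row["age"] is not None and 0 <= row["age"] <= 120,
    "email_has_at": lambda row: "@" in (row["email"] or ""),
    "country_known": lambda row: row["country"] in {"US", "DE", "PL"},
}


def check_constraints(rows, constraints):
    """Return a Counter mapping each constraint name to its violation count."""
    violations = Counter()
    for row in rows:
        for name, rule in constraints.items():
            if not rule(row):
                violations[name] += 1
    return violations


rows = [
    {"age": 34, "email": "ann@example.com", "country": "US"},
    {"age": -1, "email": "bob.example.com", "country": "DE"},
    {"age": 51, "email": None, "country": "Atlantis"},
]

report = check_constraints(rows, CONSTRAINTS)
for name in CONSTRAINTS:
    # A Counter returns 0 for constraints that were never violated.
    print(f"{name}: {report[name]} violation(s)")
```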

Data profiling is a technique that helps to examine the data and create general, informative reports about what’s in the data set. This method might not be very in-depth but gives you a good initial idea of the types of data you’re dealing with.

For instance, you can find out whether a data column conforms to a particular standard, whether there are any missing values, or whether the data set is linked to another one.
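A bare-bones profile might record, for each column, which value types appear, how many values are missing, and how many distinct values there are. This is a minimal sketch over a hypothetical list of records; real profiling tools report far more (ranges, patterns, relationships between tables).

```python
def profile(rows):
    """Build a simple per-column profile: value types, missing count, distinct values."""
    columns = sorted({key for row in rows for key in row})
    summary = {}
    for col in columns:
        # A key absent from a row is treated the same as an explicit None.
        values = [row.get(col) for row in rows]
        present = [v for v in values if v is not None]
        summary[col] = {
            "types": sorted({type(v).__name__ for v in present}),
            "missing": len(values) - len(present),
            "distinct": len(set(present)),
        }
    return summary


rows = [
    {"id": 1, "city": "New York", "price": 25.0},
    {"id": 2, "city": "new york", "price": "25"},
    {"id": 3, "city": None, "price": 40.0},
]

for col, stats in profile(rows).items():
    print(col, stats)
```

Even on three rows, the profile surfaces two typical problems: `price` mixes floats and strings, and the same city appears under two spellings.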

2. Workflow Specification

This stage is where you specify what operations are a part of the sequence that cleans the data sets. That sequence is called the workflow.

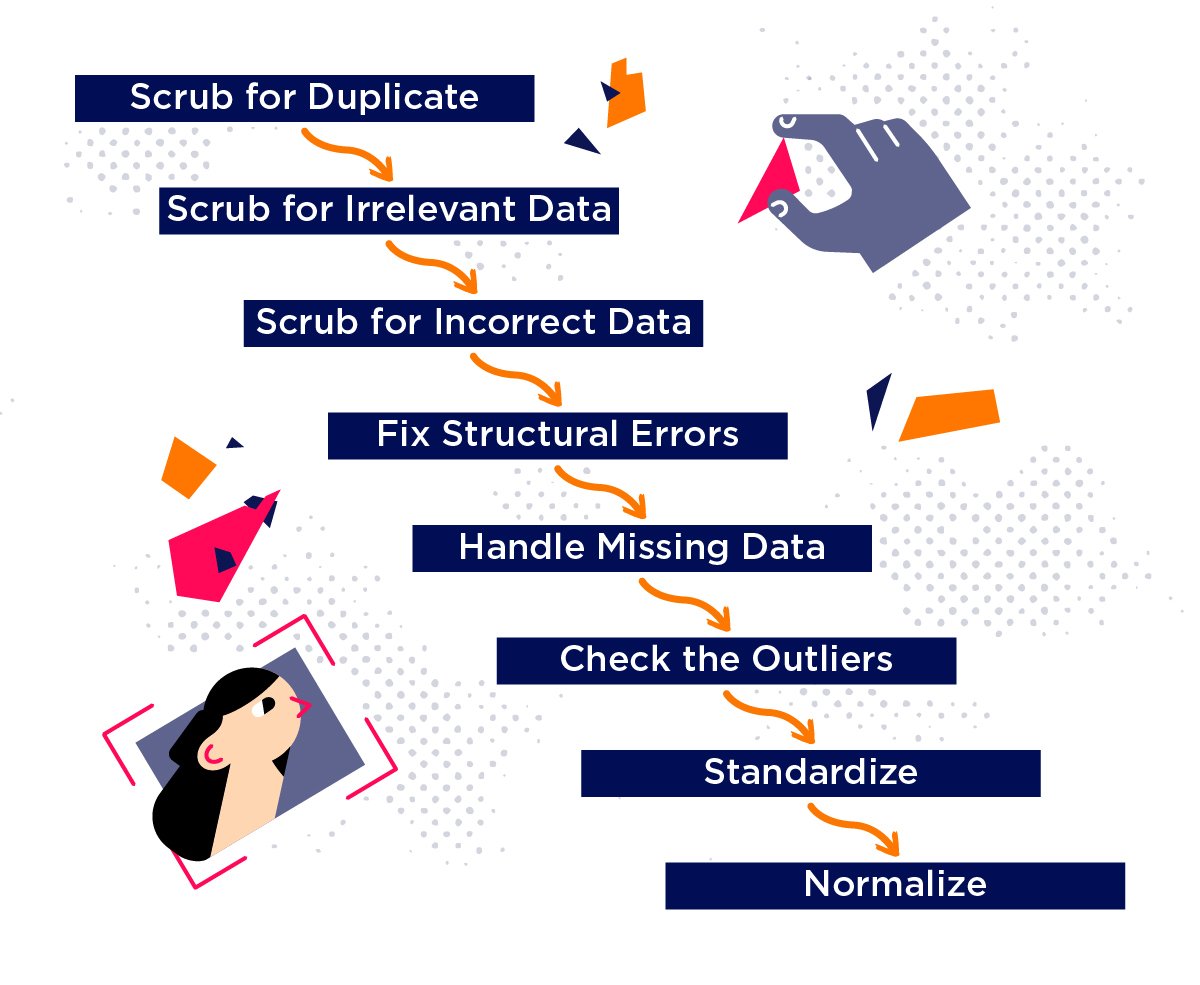

Here’s an example of a typical data cleaning workflow, featuring a series of operations performed on the data repeatedly until reaching sufficient data quality.
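In code, a workflow like this can be modeled as an ordered list of cleaning functions that is re-applied until a quality check passes. A minimal sketch; the step functions, the quality metric, and the `max_rounds` safety limit are hypothetical choices rather than part of any specific tool.

```python
def run_workflow(rows, steps, quality, target=1.0, max_rounds=5):
    """Apply every step in order, repeating the whole sequence until
    quality(rows) reaches `target` or `max_rounds` passes have run."""
    for _ in range(max_rounds):
        for step in steps:
            rows = step(rows)
        if quality(rows) >= target:
            break
    return rows


def strip_names(rows):
    # Trim stray whitespace around each name.
    return [{**row, "name": row["name"].strip()} for row in rows]


def drop_missing_age(rows):
    # Remove rows that have no age at all.
    return [row for row in rows if row["age"] is not None]


def share_with_age(rows):
    # Quality metric: fraction of rows that have an age (1.0 for an empty list).
    if not rows:
        return 1.0
    return sum(row["age"] is not None for row in rows) / len(rows)


raw = [{"name": " Ann ", "age": 34}, {"name": "Bob", "age": None}]
cleaned = run_workflow(raw, [strip_names, drop_missing_age], share_with_age)
print(cleaned)  # [{'name': 'Ann', 'age': 34}]
```

The `max_rounds` cap keeps the loop from running forever if the steps can never reach the target quality.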

3. Data Cleaning (Workflow Execution)

The cleaning stage is the execution of operations specified in the workflow.

The data cleaning process might feature different techniques depending on the project’s nature and the data type. But the final objective is always the same: removing or correcting bad data.

So let’s take the aforementioned exemplary workflow and elaborate a little bit on what each step entails.

Scrub for Duplicates

One type of mistake that you’re going to encounter when performing data cleaning is repeated data entries.

It happens when data comes from different sources, or when users, for whatever reason, submit their entry more than once.

Action: Remove.
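A common way to remove duplicates is to build a key from the fields that identify a record and keep only the first row per key. This sketch assumes that an email address identifies a user, which is a hypothetical choice; pick the key fields that make sense for your own data. Normalizing the key catches near-duplicates that differ only in case or surrounding spaces.

```python
def deduplicate(rows, key_fields):
    """Keep the first row for each combination of `key_fields`, preserving order."""
    seen = set()
    unique = []
    for row in rows:
        # Normalize case and whitespace so "Ann@Example.com " matches "ann@example.com".
        key = tuple(str(row[f]).strip().lower() for f in key_fields)
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique


signups = [
    {"email": "ann@example.com", "name": "Ann"},
    {"email": "Ann@Example.com ", "name": "Ann"},
    {"email": "bob@example.com", "name": "Bob"},
]

print(deduplicate(signups, ["email"]))
```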

Scrub for Irrelevant Data

Irrelevant data is the type of information that doesn’t have any formal errors but is just not useful for your project.

It might be because it just doesn’t fit under a particular angle you’re analyzing, or it’s just not relevant at all.

For example, if you were analyzing data about the number of electronic music events organized in New York annually, you wouldn’t necessarily need to know the venue’s phone number.

The same goes for genre: if you’re only looking at country music events, you don’t need to include all the techno events in your analysis.

Action: Remove.
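Using the electronic music example above, removing irrelevant data means dropping columns you don’t need (the venue’s phone number) and rows outside the scope of the question (events of other genres). A minimal sketch; the sample events and the set of genres counted as electronic are hypothetical.

```python
events = [
    {"name": "Warehouse Night", "genre": "techno", "year": 2019, "venue_phone": "555-0101"},
    {"name": "Bass Drop", "genre": "house", "year": 2019, "venue_phone": "555-0102"},
    {"name": "Honky Tonk Live", "genre": "country", "year": 2019, "venue_phone": "555-0103"},
]

# Assumed definition of "electronic music" for this analysis.
ELECTRONIC = {"techno", "house", "trance"}


def keep_relevant(rows, columns, predicate):
    """Keep only the listed columns, and only rows for which `predicate` is true."""
    return [{col: row[col] for col in columns} for row in rows if predicate(row)]


relevant = keep_relevant(
    events,
    ["name", "genre", "year"],
    lambda row: row["genre"] in ELECTRONIC,
)
print(relevant)
```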

Scrub for Incorrect Data

Incorrect data is often easy to spot, as it’s just illogical.

For example, you’re preparing a report about the app users’ average age, and you see entries like -1 or 420.

Incorrect data often originates in the processing stage, be it preparation or cleaning. It is usually caused by imprecisely defined functions and transformations the data went through.

Action: Amend the functions that caused the wrong calculations. If not possible, then remove the data.
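Before amending anything, it helps to separate implausible values from valid ones instead of silently discarding them, so the rejected rows can be traced back to the function that produced them. A minimal sketch for the age example; the 0 to 120 plausible range is an assumption.

```python
MIN_AGE, MAX_AGE = 0, 120  # assumed plausible range for a user's age


def split_by_validity(rows, field, low, high):
    """Separate rows whose `field` lies in [low, high] from those that don't."""
    valid, invalid = [], []
    for row in rows:
        if low <= row[field] <= high:
            valid.append(row)
        else:
            invalid.append(row)
    return valid, invalid


users = [{"id": 1, "age": 29}, {"id": 2, "age": -1}, {"id": 3, "age": 420}, {"id": 4, "age": 41}]
valid, invalid = split_by_validity(users, "age", MIN_AGE, MAX_AGE)

ages = [u["age"] for u in valid]
print(sum(ages) / len(ages))  # 35.0
print(invalid)  # rows to investigate, not just delete
```

Computing the average on all four rows would give 122.25, which shows how a couple of impossible entries can wreck a simple report.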

Fix Structural Errors

Another issue you might encounter when performing data cleaning is structural errors.

These types of errors occur during measurement, data transfer, or maintenance activities. They include odd naming, typos, and inconsistent capitalization.

Structural errors lead to inconsistent data, duplicates, and mislabeled categories.

Action: Correct the existing errors, then review your data collection and data transformation processes to prevent them from recurring.
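Structural errors that are already in the data, such as stray whitespace, inconsistent capitalization, and variant spellings, can often be normalized in code. A minimal sketch; the `CANONICAL` mapping of variants to a single label is a hypothetical example you would build from your own data.

```python
import re

# Hypothetical mapping of known variants to one canonical label.
CANONICAL = {"nyc": "new york", "new york city": "new york"}


def clean_label(value):
    """Collapse internal whitespace, trim, lower-case, then map known variants."""
    label = re.sub(r"\s+", " ", value).strip().lower()
    return CANONICAL.get(label, label)


raw = ["New York", " new  york", "NYC", "New York City", "Boston "]
print(sorted({clean_label(v) for v in raw}))  # ['boston', 'new york']
```

Five raw spellings collapse into two categories, which also prevents the mislabeled categories and hidden duplicates mentioned above.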

Handle Missing Data

Missing data is just unavoidable. You’re likely to find even whole rows and columns of missing values in your data sets during the data cleaning process.

This situation is tricky because filling in the missing fields with “probable” values may produce biased results. On the other hand, ignoring it is suboptimal because missing data can be telling us something.

So what do you do?

Action: There are three main methods of dealing with missing data.

- Drop. When the missing values in a column are few and far between, the easiest way to handle them is to drop the missing data rows.

- Impute. This method involves calculating the missing values based on other observations. Statistical techniques like median, mean, or linear regression are helpful if there aren’t many missing values. You can also fill a missing value with a value taken from a similar record: when that record comes from the same data set, this is called hot-deck imputation; when it comes from another, similar data set, it’s called cold-deck imputation.

- Flag. Missing data can be informative, especially if there is a pattern in play. For example, you conduct a survey, and most women refuse to answer a particular question. That’s why sometimes just flagging the data can help you with those subtle insights:

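- For numeric data – fill in 0 and add an indicator column marking which values were missing, so filled-in zeros can be told apart from real ones.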

- For categorical data – introduce the ‘missing’ category.
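The three strategies can be compared side by side on a toy data set. A minimal sketch in plain Python; the fields and values are hypothetical, median imputation stands in for the statistical techniques mentioned, and the numeric flag pairs the 0 fill with an indicator column.

```python
from statistics import median

rows = [
    {"income": 52000, "gender": "f"},
    {"income": None, "gender": None},
    {"income": 61000, "gender": "m"},
    {"income": 47000, "gender": None},
]

# Drop: discard rows where the numeric field is missing.
dropped = [r for r in rows if r["income"] is not None]

# Impute: replace missing values with the median of the observed ones.
observed_median = median(r["income"] for r in rows if r["income"] is not None)
imputed = [
    {**r, "income": observed_median if r["income"] is None else r["income"]}
    for r in rows
]

# Flag: numeric gaps become 0 plus an indicator column;
# categorical gaps get an explicit 'missing' category.
flagged = [
    {
        "income": 0 if r["income"] is None else r["income"],
        "income_missing": r["income"] is None,
        "gender": "missing" if r["gender"] is None else r["gender"],
    }
    for r in rows
]

print(len(dropped), observed_median)  # 3 52000
print(flagged[1])  # {'income': 0, 'income_missing': True, 'gender': 'missing'}
```

Dropping loses a quarter of the rows here, imputing keeps them but invents values, and flagging keeps the fact that something was missing, which is what you need to spot patterns like the survey example.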

Check the Outliers

Another thing to watch for during the data cleaning process is outliers.

Outliers are values that stand out and are significantly different from the others. They are not necessarily mistakes, but they can be.

So how do you differentiate?

What you need to watch out for is the context.

For example, you’re researching your app users’ age, and you find entries like 72 and 2.

The former might be a senior citizen who is up to date with technology. But the latter is most likely an error, since toddlers are unlikely to be registered app users.

Action: Don’t remove an outlier unless you know for a fact that it’s a mistake.
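A statistical rule can help you find candidates, but it can’t supply the context. This minimal sketch uses Tukey’s fences (values more than 1.5 interquartile ranges beyond the quartiles), a common technique chosen here for illustration; it flags values for review and removes nothing. The ages are hypothetical, and the quartiles come from Python’s `statistics.quantiles` with its default method.

```python
from statistics import quantiles


def flag_outliers(values, k=1.5):
    """Return values outside the Tukey fences [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


ages = [23, 27, 31, 35, 38, 41, 44, 2, 72]
print(flag_outliers(ages))  # [72]
```

On this sample the rule flags 72, the plausible senior user, and misses 2, the likely error. That is exactly why outliers should be judged in context rather than deleted because a formula flagged them.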

Standardize + Normalize

Standardization and normalization are crucial to the effectiveness of the data cleaning process.

Why?

Because they make data ripe for statistical analysis and easy to compare and analyze.

Standardization is a process during which you make sure all your values adhere to a specific standard, such as deciding whether to use kilograms or grams, or uppercase or lowercase letters.

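Normalization is the process of adjusting the values to a common scale. For example, you can rescale values into the 0-1 range, which makes features with very different ranges comparable. Note that rescaling changes only the scale, not the shape of the distribution: if you want to use statistical methods that require normally distributed data, you need a transformation such as a log or Box-Cox transform, which can bring skewed data closer to normal.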
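The two steps are easy to tell apart in code: standardization converts every value to one unit, and min-max normalization then rescales the converted values into the 0-1 range. A minimal sketch; the sample shipments are hypothetical, and only kilograms and pounds are handled.

```python
LB_TO_KG = 0.45359237  # exact definition of the international pound in kilograms


def to_kilograms(value, unit):
    """Standardize a weight to kilograms; only 'kg' and 'lb' are supported here."""
    if unit == "kg":
        return value
    if unit == "lb":
        return value * LB_TO_KG
    raise ValueError(f"unknown unit: {unit}")


def min_max(values):
    """Rescale values linearly into [0, 1]; a constant column maps to all zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


shipments = [(10.0, "kg"), (44.0, "lb"), (30.0, "kg")]
weights = [to_kilograms(v, u) for v, u in shipments]  # all in kilograms now
scaled = min_max(weights)
print([round(s, 3) for s in scaled])
```

Rescaling before converting units would be meaningless, because 44 pounds and 44 kilograms would land on the same point of the scale.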

4. Validation

The next critical stage of the data cleaning process is quality assurance.

When you’ve finished the workflow execution, you should audit the data again and make sure all the rules were applied and all the constraints are now satisfied.
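Re-auditing can be as simple as running the same checks against the cleaned data and refusing to continue if any of them fails. A minimal sketch; the two checks and the sample rows are hypothetical, and each check takes the whole data set so it can express rules such as uniqueness.

```python
def ages_in_range(rows):
    # Every row must have an age between 0 and 120 (assumed plausible range).
    return all(r.get("age") is not None and 0 <= r["age"] <= 120 for r in rows)


def ids_unique(rows):
    # No two rows may share an id.
    ids = [r["id"] for r in rows]
    return len(ids) == len(set(ids))


def audit(rows, checks):
    """Re-run every check on the data; return the names of the checks that fail."""
    return [check.__name__ for check in checks if not check(rows)]


cleaned = [{"id": 1, "age": 29}, {"id": 2, "age": 41}]
failures = audit(cleaned, [ages_in_range, ids_unique])
assert not failures, f"validation failed: {failures}"
print("all checks passed")
```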

To be sure that your data cleansing process is correct and effective, you should consider the following questions:

- What conclusions can you draw from the dataset?

- Does it prove or disprove your hypothesis?

- Are there any insights that help you form the next idea?

Validating the data before actually presenting it to a client or your boss is a must. False conclusions can be a source of embarrassment at best and a reason for the wrong business strategy at worst.

5. Reporting

Last, but not to be overlooked, there’s the reporting stage.

Creating reports and summaries of the data cleaning is essential for streamlining and efficiency, especially if you’re processing a lot of data and working with many people.

Reports allow you and your co-workers to compare findings and access the insights quickly and effortlessly.
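One lightweight way to produce such a report is to record row counts before and after each cleaning step as the workflow runs. A minimal sketch; the two steps and the sample data are hypothetical, and a real report would usually be saved to a file or dashboard rather than printed.

```python
def run_with_report(rows, steps):
    """Apply cleaning steps in order and record row counts before and after each one."""
    report = []
    for step in steps:
        before = len(rows)
        rows = step(rows)
        report.append({"step": step.__name__, "rows_before": before, "rows_after": len(rows)})
    return rows, report


def drop_duplicates(rows):
    # Keep the first row for each id.
    seen, out = set(), []
    for r in rows:
        if r["id"] not in seen:
            seen.add(r["id"])
            out.append(r)
    return out


def drop_invalid_ages(rows):
    # Assumed plausible age range: 0 to 120.
    return [r for r in rows if 0 <= r["age"] <= 120]


data = [{"id": 1, "age": 29}, {"id": 1, "age": 29}, {"id": 2, "age": 420}, {"id": 3, "age": 41}]
cleaned, report = run_with_report(data, [drop_duplicates, drop_invalid_ages])

for entry in report:
    removed = entry["rows_before"] - entry["rows_after"]
    print(f"{entry['step']}: {entry['rows_before']} -> {entry['rows_after']} rows ({removed} removed)")
```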

How to choose a data cleaning solution?

As you might suspect, there are many data cleansing tools and techniques out there.

Choosing the right one can be difficult, especially since the effectiveness of particular tools can vary based on:

- Quality of input data

- Types of data

- Size of the database

- Your budget

- Your business goal

- Your data management strategy

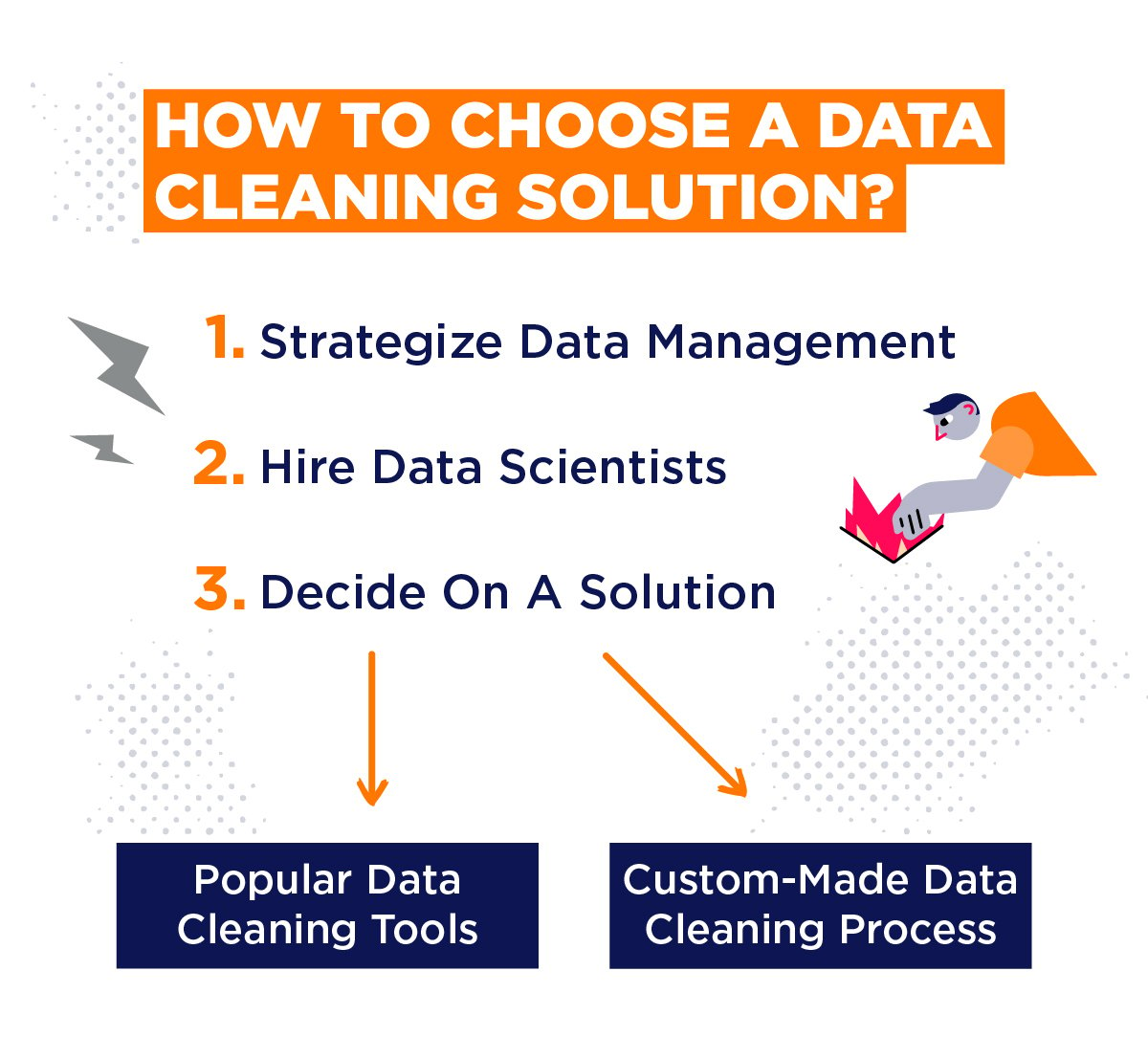

That said, let’s look at what steps you should take when you’re first deciding on a data cleaning solution.

1. Strategize Data Management

Make sure you’ve got a data management strategy laid out. Data cleaning is only a part of a bigger process of data analytics. To choose the right solution, you have to know where the pieces fit.

2. Hire Data Scientists

Chances are, if you’re processing large amounts of data, you’re already hiring data scientists. But remember that regardless of the type of data cleansing solution you choose, you’re going to need skilled employees supervising the whole process. And hiring them isn’t an easy task if you’re a non-technical person.

Find out how to hire programmers for your project! Check out our article: How to Hire a Programmer for a Startup in 6 Easy Steps

3. Decide On a Solution

Once you hit this stage, you will know how complex a solution you need. In most cases, a popular data cleaning tool will suffice. However, if you need more robust technology to deal with your data, you should look into building a custom data cleaning process.

Popular Data Cleaning Tools

There are plenty of ready-made data cleansing tools and solutions to pick from. Here are a few industry standards:

- Google Cloud AI Platform Data Labeling Service

- Data Ladder

- Talend Data Quality

- Validity DemandTools

- TIBCO Clarity

- Cloudingo

- OpenRefine

What are the benefits of going forward with standard data cleaning tools?

- Proven and reliable ways of cleaning data.

- Coverage of the most common data cleaning needs.

- A wide range of products.

- Customer support.

- Regular updates.

Custom Made Data Cleaning Process

Outsourcing the data cleaning process can be an interesting option, especially when dealing with big data. Vast amounts of information, coupled with various data types, can challenge traditional data cleaning methods.

So what are the benefits of a custom-made data cleaning solution?

- Suitable for non-standard data cleaning.

- Effective in handling big data.

- No “fat” in the process; it’s tailor-made for your needs.

- A dedicated team of professionals working on your project.

That said, you have to be extremely careful when choosing this option, especially when you’re handling sensitive data belonging to your clients.

Conclusion

Working with huge data sets has become the bread and butter of modern enterprises. And you don’t have to be Facebook or Google to take advantage of big data technologies.

But there are still many obstacles awaiting those who want to benefit from data analytics. And there is no denying the importance of data cleaning in that process.

That’s why good data hygiene is paramount if you care about building a proper data quality culture in your company. And you should care, because ‘Quality data in – quality result out.’