Conventional wisdom has it that using too many functional abstractions in Scala is detrimental to overall program performance.

Yet, these abstractions are an immense help if you want to write clean and abstract code. So, should practitioners of FP drown in guilt for writing inefficient code? Should they give way to less functional code?

Let’s find out!

The question I’ve been hearing a lot recently is:

I have used EitherT all through my code base because it helps with concise error handling. But I heard it is very slow. So, should I abandon it and write error handling myself? But if I do that, isn’t the pattern-matching slow? Meaning the best solution would be to simply throw exceptions?

It’s not so easy to answer that…

Yes, the gut feeling every Scala developer has is that all the fancy monad transformers add a lot of non-optimizable indirection (at the bytecode level) that throws the JIT off, making the code slower than what your Java colleagues might have written. But how bad is it?

On the other hand, if you stop using the benefits of functional abstractions made possible by Scala’s powerful type system, then you’re left with just a “better Java” kind of language. You may as well throw in the towel and rewrite everything in Kotlin.

Another gut feeling kicks in once your code starts calling other systems over the network: at that point, whatever you do in your own code is mostly irrelevant, because communication costs dwarf any gains or losses.

So let's go beyond these hunches and measure the impact of being uncompromising functional programmers. I'll use JMH to do that.

Devising an Experiment

The first step is to create a piece of code that's representative of the problem you want to measure: in this case, typical business logic that deals with error handling. Such code usually takes some data (an input) and validates it. Once the input is validated, the code transforms it, fetches additional "data," calls the outside world, and waits for the result.

If the result is correct, the code performs some additional processing and returns the final result. If it isn’t, the code performs some bookkeeping and propagates the error back to the caller.

This pattern is generic enough to apply in a wide variety of circumstances (e.g., authentication or calling external services) and lets you measure the impact of various techniques (e.g., EitherT and exceptions) without being too restrictive.

So, let’s start with:

import org.openjdk.jmh.annotations.{Param, Scope, State}
import org.openjdk.jmh.infra.Blackhole

import scala.concurrent.{ExecutionContext, Future}
import scala.util.Random

case class Input(i: Int)
case class ValidInput(i: Int)
case class Data(i: Int)
case class Output(i: Int)
case class Result(i: Int)

// Product with Serializable keeps inferred LUBs of the error types tidy
sealed trait ThisIsError extends Product with Serializable
case class Invalid(input: Int) extends ThisIsError
case class UhOh(reason: String) extends ThisIsError

@State(Scope.Benchmark)
class BenchmarkState {
  @Param(Array("80"))
  var validInvalidThreshold: Int = _

  val max: Int = 100

  @Param(Array("0.1"))
  var failureThreshold: Double = _

  @Param(Array("5"))
  var timeFactor: Int = _

  @Param(Array("10"))
  var baseTimeTokens: Int = _

  // A random input in [0, max)
  def getSampleInput: Input = Input(Random.nextInt(max))
}

trait BenchmarkFunctions {
  // Inputs below the threshold are valid
  def validateEitherStyle(threshold: Int)(
      input: Input): Either[Invalid, ValidInput] =
    if (input.i < threshold) Right(ValidInput(input.i))
    else Left(Invalid(input.i))

  // Local, synchronous work: burns baseTokens
  def transform(baseTokens: Int)(validInput: ValidInput): ValidInput = {
    Blackhole.consumeCPU(baseTokens)
    validInput.copy(i = Random.nextInt())
  }

  def fetchData(baseTokens: Int, timeFactor: Int)(input: ValidInput)(
      implicit ec: ExecutionContext): Future[Data] = Future {
    Blackhole.consumeCPU(timeFactor * baseTokens)
    Data(input.i)
  }

  // "The Dark Side": fails with probability `threshold`
  def outsideWorldEither(threshold: Double, baseTokens: Int, timeFactor: Int)(
      input: Data)(
      implicit ec: ExecutionContext): Future[Either[UhOh, Output]] = Future {
    Blackhole.consumeCPU(timeFactor * baseTokens)
    if (Random.nextDouble() > threshold) Right(Output(input.i))
    else Left(UhOh(Random.nextString(10)))
  }

  // Bookkeeping on failure
  def doSomethingWithFailure(baseTokens: Int, timeFactor: Int)(error: UhOh)(
      implicit ec: ExecutionContext): Future[Unit] = Future {
    Blackhole.consumeCPU(timeFactor * baseTokens)
    ()
  }

  // Additional processing on success
  def doSomethingWithOutput(baseTokens: Int, timeFactor: Int)(output: Output)(
      implicit ec: ExecutionContext): Future[Result] = Future {
    Blackhole.consumeCPU(timeFactor * baseTokens)
    Result(output.i)
  }
}

The function parameters correspond to the benchmark parameters you'd like to control. You start with a random Input holding a number in [0, 100); validInvalidThreshold controls how often the validation function returns Right (initially, 80% of inputs pass).

You also simulate, via failureThreshold, how often the interaction with the outside world (The Dark Side) ends with an error: initially, 10% of calls fail. You'll use these parameters to check whether the performance of the error-handling techniques depends on the error distribution.

Last but not least, the code uses JMH's Blackhole.consumeCPU, which simulates long-running code by burning an arbitrary amount of CPU time (measured in tokens) in a way the JIT cannot optimize away.

Two additional state parameters, baseTimeTokens and timeFactor, control the timings. baseTimeTokens sets an arbitrary delay inside the transform function, to account for a transformation that is a bit more complex than just copying the input. timeFactor specifies how many times slower the other functions are: each of them burns timeFactor × baseTimeTokens tokens. Initially, you assume that interacting with the outside world, AKA ‘The Dark Side,’ is 5 times slower than what you're doing within your system. You'll use these parameters to simulate more complex code.
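The parameters above can be turned into a quick back-of-the-envelope estimate of the simulated work per benchmark operation. The sketch below is only a model: it assumes that Blackhole.consumeCPU time is roughly proportional to the token count and ignores allocation, Random, and thread-pool costs. TokenBudget and Params are hypothetical names introduced for this illustration.

```scala
// Back-of-the-envelope model of the Blackhole tokens burned per benchmark operation.
// Assumption: consumeCPU time is roughly proportional to its token count.
object TokenBudget {
  final case class Params(
      validInvalidThreshold: Int, // inputs below this value pass validation
      max: Int,                   // inputs are drawn uniformly from [0, max)
      timeFactor: Int,
      baseTimeTokens: Int
  )

  // A valid input pays for transform (b), fetchData (tf * b), the outside-world
  // call (tf * b), and then exactly one of doSomethingWithOutput or
  // doSomethingWithFailure (tf * b). Both branches cost the same, so the
  // failure rate does not change the token count.
  def tokensForValidInput(p: Params): Long =
    p.baseTimeTokens.toLong * (1L + 3L * p.timeFactor)

  // Invalid inputs short-circuit after validation and burn no tokens.
  def expectedTokensPerOp(p: Params): Double =
    p.validInvalidThreshold.toDouble / p.max * tokensForValidInput(p)

  val defaults: Params =
    Params(validInvalidThreshold = 80, max = 100, timeFactor = 5, baseTimeTokens = 10)
}
```

With the defaults, a valid input burns 160 tokens and an average operation about 128. At timeFactor = 200, a valid input burns 6,010 tokens, which helps explain why the error-handling machinery matters less and less in the high-timeFactor columns of the results below.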

Let’s start with Scala Future – while I’m sure you’re aware that it is rarely the recommended effect these days, it’s still very popular.

Future

EitherT vs Either

Let's measure the impact of EitherT compared to handling Either inside Future by hand:

import cats.data.EitherT
import java.util.concurrent.TimeUnit
import org.openjdk.jmh.annotations._
import org.openjdk.jmh.infra.Blackhole

import scala.concurrent.{ExecutionContext, Future}

object FutBenchmark {
  implicit val executionContext: ExecutionContext = ExecutionContext.global

  // Busy-wait until the future completes (see "Quirks" for why not Await)
  def await[A](fut: Future[A], bh: Blackhole) = {
    while (fut.value.isEmpty) {}
    bh.consume(fut.value)
    fut.value.get
  }
}

@BenchmarkMode(Array(Mode.AverageTime))
@Warmup(iterations = 10, time = 1, timeUnit = TimeUnit.SECONDS)
@Measurement(iterations = 30, time = 5, timeUnit = TimeUnit.SECONDS)
class FutBenchmark extends BenchmarkFunctions {
  import FutBenchmark._
  import cats.instances.future._

  @Benchmark
  @Fork(1)
  def eitherT(benchmarkState: BenchmarkState, blackhole: Blackhole) = {
    val baseTokens = benchmarkState.baseTimeTokens
    val timeFactor = benchmarkState.timeFactor
    val failureThreshold = benchmarkState.failureThreshold
    val validInvalidThreshold = benchmarkState.validInvalidThreshold

    val fut = EitherT
      .pure[Future, Invalid](benchmarkState.getSampleInput)
      .subflatMap(validateEitherStyle(validInvalidThreshold))
      .map(transform(baseTokens))
      .semiflatMap(fetchData(baseTokens, timeFactor))
      .flatMapF(outsideWorldEither(failureThreshold, baseTokens, timeFactor))
      .biSemiflatMap(
        {
          case err: UhOh =>
            doSomethingWithFailure(baseTokens, timeFactor)(err).map(_ => err)
          case otherwise => Future.successful(otherwise)
        },
        doSomethingWithOutput(baseTokens, timeFactor)
      )
      .value

    await(fut, blackhole)
  }

  @Benchmark
  @Fork(1)
  def either(benchmarkState: BenchmarkState, blackhole: Blackhole) = {
    val baseTokens = benchmarkState.baseTimeTokens
    val timeFactor = benchmarkState.timeFactor
    val failureThreshold = benchmarkState.failureThreshold
    val validInvalidThreshold = benchmarkState.validInvalidThreshold

    val fut = Future
      .successful(benchmarkState.getSampleInput)
      .map(input =>
        validateEitherStyle(validInvalidThreshold)(input).map(
          transform(baseTokens)))
      .flatMap {
        case Right(data) =>
          fetchData(baseTokens, timeFactor)(data)
            .flatMap(
              outsideWorldEither(failureThreshold, baseTokens, timeFactor))
            .flatMap {
              case Right(output) =>
                doSomethingWithOutput(baseTokens, timeFactor)(output)
                  .map(Right(_))
              case l @ Left(err) =>
                doSomethingWithFailure(baseTokens, timeFactor)(err).map(_ =>
                  l.asInstanceOf[Either[ThisIsError, Result]])
            }
        case left =>
          Future.successful(left.asInstanceOf[Either[ThisIsError, Result]])
      }

    await(fut, blackhole)
  }
}

The two benchmarks above perform the same routine we devised earlier. The latter is what you would typically write by hand without EitherT.

Quirks

You may be wondering why you need await at the end of each benchmark and why await is implemented as a busy loop instead of handy Scala Await.

First, if you do not await a future, that future will still run when the next benchmark is performed, occupying the thread pool (execution context) and affecting the results. You’ll no longer be measuring the average time each method takes to execute independently.

Second, Scala’s Await tends to put your threads to sleep – which will skew the results, as you’ll be adding random (and potentially long) times of thread scheduling “tax” to each run.

The Use of Inliner

Benchmarks are compiled with -opt:l:inline and -opt-inline-from:**. These flags make a lot of higher-order methods disappear from the call stack. For instance, this code:

biSemiflatMap(
  err => doSomethingWithFailure(baseTokens, timeFactor)(err).map(_ => err),
  doSomethingWithOutput(baseTokens, timeFactor))

becomes the following in the generated bytecode:

new EitherT(
  catsStdInstancesForFuture(executionContext)
    .flatMap(eitherT.value) { f }
)

Compare this with the definition of biSemiflatMap in Cats (with its function body abbreviated to f, as above):

def biSemiflatMap[C, D](fa: A => F[C], fb: B => F[D])(implicit F: Monad[F]): EitherT[F, C, D] =
  EitherT(F.flatMap(value) { f })

The biSemiflatMap call itself is gone: only the underlying Monad[Future].flatMap call remains.

You can read more about these optimizations here. I believe they're beneficial for FP-heavy code because they eliminate megamorphic call sites, so I recommend turning them on unless you're building a library.
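For reference, this is roughly how the flags above could be enabled in an sbt build. It is a sketch that assumes Scala 2.12 or 2.13 (the Scala 3 compiler does not ship this optimizer); newer compiler versions may spell the options differently, so check the optimizer documentation for your version.

```scala
// build.sbt (sketch): the optimizer flags used for these benchmarks.
scalacOptions ++= Seq(
  "-opt:l:inline",      // enable the inliner (together with intra-method optimizations)
  "-opt-inline-from:**" // allow inlining from any class, including dependencies
)
```

Inlining from ** copies code from dependencies into your own bytecode, which is why it is risky for libraries: their users may run them against a different, binary-compatible version of a dependency. A library could instead restrict inlining to its own code with "-opt-inline-from:<sources>".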

Results

| Method | ns/op (tf = 2) | ns/op (tf = 5) | ns/op (tf = 100) | ns/op (tf = 200) |
|---|---|---|---|---|
| EitherT | 9681 (± 14) | 9871 (± 8) | 29288 (± 41) | 48674 (± 99) |
| Future[Either[…]] | 6443 (± 11) | 6775 (± 21) | 26657 (± 42) | 45970 (± 66) |

Observations:

- Yay! The hand-coded version is 1.5x faster than EitherT for short tasks.
- For long tasks, the differences (roughly 6 to 10%) are probably too small to matter in practice, unless performance is your main concern; in that case, stay away from this combination.
- As the timeFactor parameter increases, the relative speedup of not using EitherT becomes negligible.

Once your computations start hitting a database or an external service, your real worry should not be EitherT. You are simulating this by setting timeFactor to, say, 200, which makes some functions 200x more costly to call; that is not an unreasonable setting if you pretend they call an HTTP service.

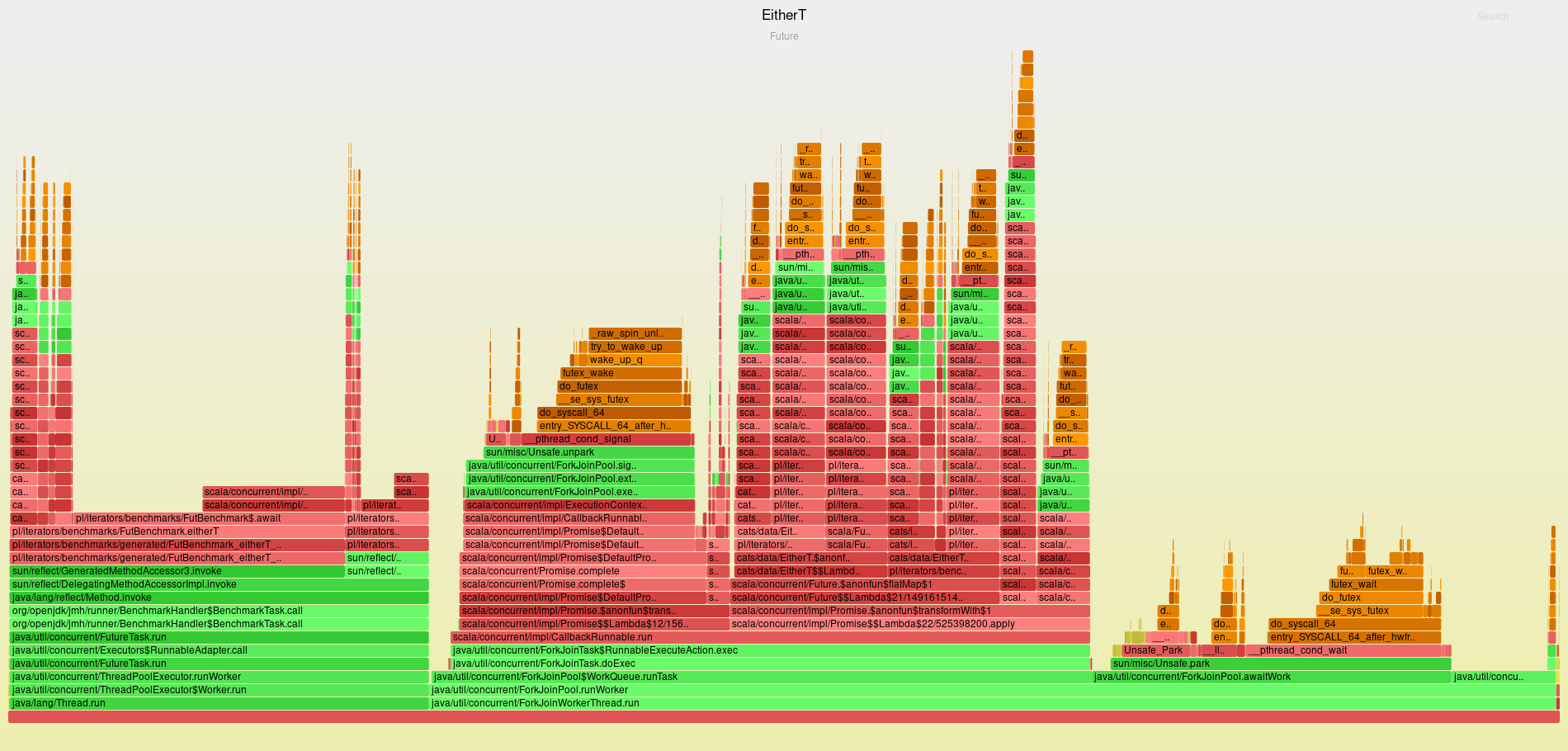

Analysis

Insights:

- There is a considerable price to be paid for creating EitherT instances via right and pure, and for the extra map calls.
- EitherT code compiles to a lot of extra invokedynamic and invokeinterface instructions compared to the plain Future version, but this does not seem to be much of a problem. Note that the JIT may well have performed aggressive monomorphization because there is only one instance of Monad, Functor, etc. On the other hand, I wasn't able to obtain different results even when I experimented with force-loading other Monad implementations.
- The inliner is helpful: it can inline all the EitherT.{subflatMap, biSemiflatMap, flatMapF, map} calls, removing one level of indirection.
- The biggest factor is the cost of submitting tasks to the thread pool.

If your tasks are short, you'll gain substantial performance by using the thread pool sparingly, e.g., by coalescing long chains of Future calls into a single call. If, on the other hand, your tasks are long, the cost of thread-pool management is amortized over the time it takes to run them.

The performance problems of EitherT wrapped around Future seem to stem from a mismatch between the two. Future favors a small number of bigger chunks of work, while EitherT, being effect-agnostic, interacts with its effect through generic abstractions like Functor or Monad, which tend to break a program down into a larger number of smaller steps, i.e., chains of map and flatMap calls. As you observed, Future makes these calls expensive for short computations. The effect largely disappears when tasks perform a lot of work, so there, just use EitherT: it leads to clean and concise code (again, unless performance is your main concern).
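To make the thread-pool cost more tangible, here is a small stdlib-only sketch (no cats, no JMH) that counts how many tasks a chain of Future transformations submits to an ExecutionContext. CountingEC and Coalescing are hypothetical helpers written for this illustration. The point is only that, with Future, every map is a separate submission, so a pipeline split into many small steps pays that cost many times, while coalescing the steps pays it once.

```scala
import java.util.concurrent.atomic.AtomicInteger
import scala.concurrent.{Await, ExecutionContext, Future}
import scala.concurrent.duration._

// Wraps an ExecutionContext and counts every task submitted to it.
final class CountingEC(underlying: ExecutionContext) extends ExecutionContext {
  val submitted = new AtomicInteger(0)

  override def execute(runnable: Runnable): Unit = {
    submitted.incrementAndGet()
    underlying.execute(runnable)
  }

  override def reportFailure(cause: Throwable): Unit = underlying.reportFailure(cause)
}

object Coalescing {
  // Runs `build` on a fresh counting EC; returns (result, number of submissions).
  def countSubmissions[A](build: ExecutionContext => Future[A]): (A, Int) = {
    val ec = new CountingEC(ExecutionContext.global)
    val result = Await.result(build(ec), 10.seconds)
    (result, ec.submitted.get())
  }

  // Many small steps: each map is submitted to the pool separately.
  def chained(implicit ec: ExecutionContext): Future[Int] =
    Future.successful(1).map(_ + 1).map(_ * 2).map(_ - 3)

  // The same computation coalesced into a single step.
  def coalesced(implicit ec: ExecutionContext): Future[Int] =
    Future.successful(1).map(x => (x + 1) * 2 - 3)
}
```

Both versions compute 1, but the chained one submits three tasks and the coalesced one submits a single task. In the benchmark, each of those submissions also carries the scheduling overhead of the global pool.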

Either vs Exceptions

The source of doubts for almost everyone:

Is it better to forgo Either and go with exceptions?

After all, both Future and IO catch (non-fatal) exceptions by default, which makes them effectively isomorphic to Either[Throwable, A]. As a consequence, you can handle errors without an explicit Either, at the expense of some precision: the error type is an unrestricted Throwable rather than a more specific type.
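The correspondence between a failed Future and Either[Throwable, A] can be made explicit in a couple of lines of standard-library code. attempt and rethrow are hypothetical helper names (borrowed from the similarly named cats combinators, not taken from their implementation). Like Future itself, this only covers non-fatal exceptions, since fatal ones never end up inside a Future.

```scala
import scala.concurrent.{Await, ExecutionContext, Future}
import scala.concurrent.duration._
import scala.util.Success

object FutureEither {
  // Move a failure from the Future's error channel into an explicit Either.
  def attempt[A](fa: Future[A])(implicit ec: ExecutionContext): Future[Either[Throwable, A]] =
    fa.transform(result => Success(result.toEither))

  // The inverse: turn a Left back into a failed Future.
  def rethrow[A](fea: Future[Either[Throwable, A]])(implicit ec: ExecutionContext): Future[A] =
    fea.flatMap(either => Future.fromTry(either.toTry))
}
```

Round-tripping through these two functions loses nothing, which is the sense in which the two encodings are interchangeable; what Either[ThisIsError, A] adds on top is a more precise error type.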

Let’s then create a set of functions that, instead of signaling an error by constructing a Left instance of Either, throws an exception.

case class InvalidException(input: Int) extends RuntimeException("Invalid")
case class UhOhException(uhOh: UhOh) extends RuntimeException(uhOh.reason)

trait BenchmarkFunctions {
  // ...

  def validateExceptionStyle(threshold: Int)(input: Input): ValidInput =
    if (input.i < threshold) ValidInput(input.i)
    else throw InvalidException(input.i)

  def outsideWorldException(threshold: Double,
                            baseTokens: Int,
                            timeFactor: Int)(input: Data)(
      implicit ec: ExecutionContext): Future[Output] =
    Future {
      Blackhole.consumeCPU(timeFactor * baseTokens)
      if (Random.nextDouble() > threshold) Output(input.i)
      else throw UhOhException(UhOh(Random.nextString(10)))
    }

  // ...
}

@BenchmarkMode(Array(Mode.AverageTime))
@Warmup(iterations = 10, time = 1, timeUnit = TimeUnit.SECONDS)
@Measurement(iterations = 30, time = 5, timeUnit = TimeUnit.SECONDS)
class FutBenchmark extends BenchmarkFunctions {
  //...

  @Benchmark
  @Fork(1)
  def exceptions(benchmarkState: BenchmarkState, blackhole: Blackhole) = {
    val baseTokens = benchmarkState.baseTimeTokens
    val timeFactor = benchmarkState.timeFactor
    val failureThreshold = benchmarkState.failureThreshold
    val validInvalidThreshold = benchmarkState.validInvalidThreshold

    val fut = Future {
      validateExceptionStyle(validInvalidThreshold)(
        benchmarkState.getSampleInput)
    }.map(transform(baseTokens))
      .flatMap(fetchData(baseTokens, timeFactor))
      .flatMap(data =>
        outsideWorldException(failureThreshold, baseTokens, timeFactor)(data)
          .recoverWith {
            case err: UhOhException =>
              doSomethingWithFailure(baseTokens, timeFactor)(err.uhOh).flatMap(
                _ => Future.failed(err))
          })
      .flatMap(doSomethingWithOutput(baseTokens, timeFactor))

    await(fut, blackhole)
  }
}

Since functions that throw exceptions do not compose the way Either-returning functions do, you need to rewrite things a bit.
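Going in the other direction, one way to make a throwing function composable again is to catch the specific exception at the boundary and turn it into an Either. This sketch uses scala.util.control.Exception.catching from the standard library; SafeValidation.validateSafe is a hypothetical helper, and the types are simplified copies of the ones above so the snippet compiles on its own (here, Invalid does not extend ThisIsError).

```scala
import scala.util.control.Exception.catching

// Simplified copies of the article's types so this sketch compiles on its own.
final case class Input(i: Int)
final case class ValidInput(i: Int)
final case class Invalid(input: Int)
final case class InvalidException(input: Int) extends RuntimeException("Invalid")

object SafeValidation {
  // The throwing validator, as in the benchmark code.
  def validateExceptionStyle(threshold: Int)(input: Input): ValidInput =
    if (input.i < threshold) ValidInput(input.i)
    else throw InvalidException(input.i)

  // Hypothetical adapter: catch only InvalidException and turn it into a Left,
  // so the result composes like validateEitherStyle. Other exceptions propagate.
  def validateSafe(threshold: Int)(input: Input): Either[Invalid, ValidInput] =
    catching(classOf[InvalidException])
      .either(validateExceptionStyle(threshold)(input))
      .left
      .map {
        case InvalidException(i) => Invalid(i)
        case other               => throw other // unreachable: only InvalidException is caught
      }
}
```

Note that this still pays for constructing the exception (and its stack trace) on every invalid input; it only restores composability, not performance.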

Results

| Method | ns/op (tf = 2) | ns/op (tf = 5) | ns/op (tf = 100) | ns/op (tf = 200) |
|---|---|---|---|---|
| Future (exceptions) | 8482 (± 14) | 8778 (± 8) | 28039 (± 33) | 47604 (± 63) |
| Future[Either[…]] | 6443 (± 11) | 6775 (± 21) | 26657 (± 42) | 45970 (± 66) |

| Method (ns/op) | 10% failures, 20% invalid | 25% failures, 30% invalid | 45% failures, 30% invalid | 45% failures, 50% invalid |
|---|---|---|---|---|
| Future (exceptions) (tf = 5) | 8778 (± 8) | 8775 (± 8) | 9775 (± 7) | 8275 (± 9) |
| Future (Either) (tf = 5) | 6775 (± 21) | 6075 (± 16) | 6385 (± 16) | 5246 (± 20) |
| Future (exceptions) (tf = 100) | 28039 (± 33) | 25729 (± 38) | 26365 (± 41) | 20736 (± 34) |
| Future (Either) (tf = 100) | 26657 (± 42) | 23489 (± 43) | 23769 (± 38) | 17514 (± 30) |

Observations:

- All else being equal, exceptions aren't faster than their Either-based counterparts. In the worst case measured here, they are more than 50% slower.
- As failures become more frequent, both variants tend to get faster in absolute terms (failures short-circuit the remaining work), but the Either-based code benefits more, so the relative gap widens: at tf = 5 it grows from about 30% to about 58%, and at tf = 100 from about 5% to about 18%. Even at high failure rates, exception-based methods never catch up with Either, so don't bother.

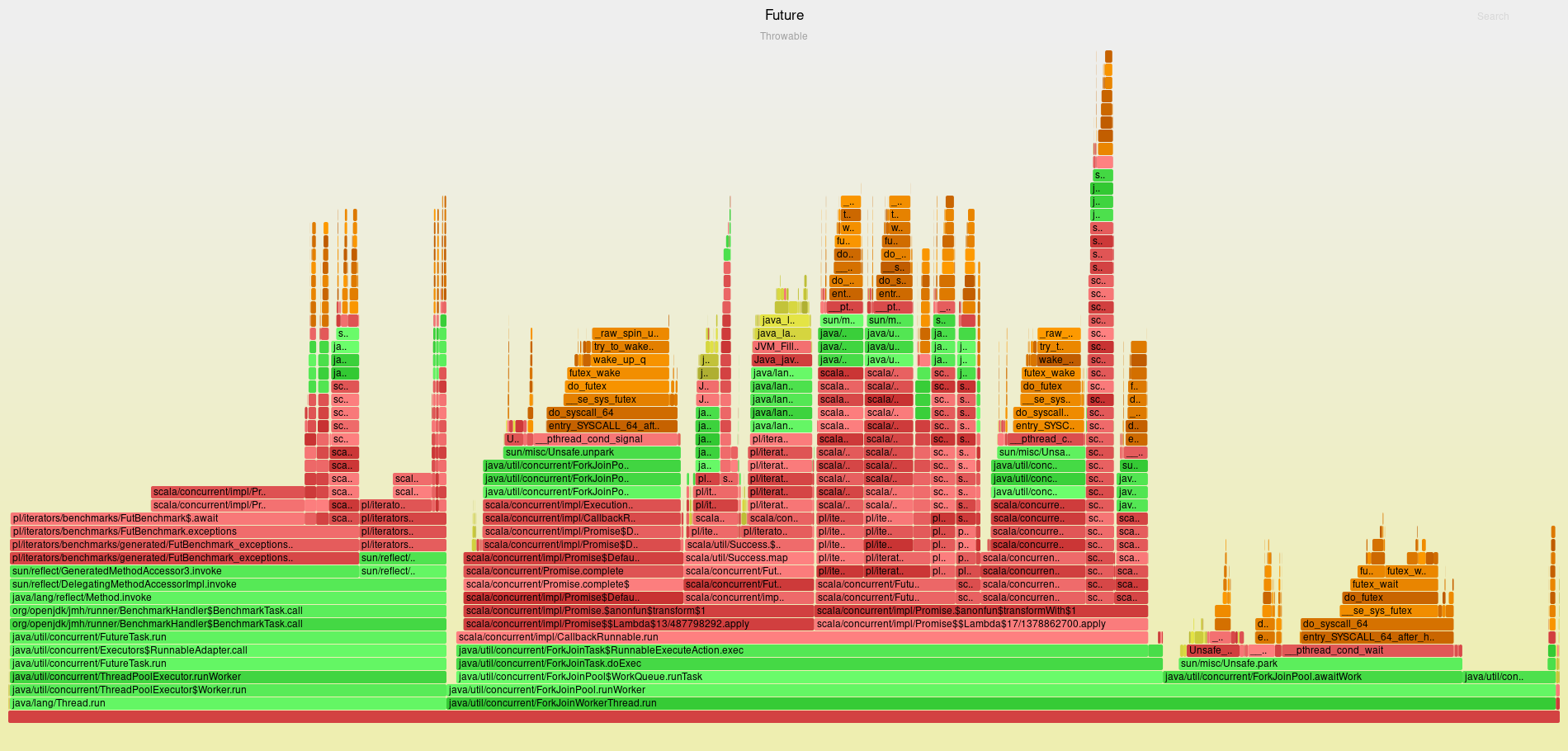

Analysis

Insights:

- Filling in stack traces can cost a lot: the more you throw, the more you pay.
- Stack traces are filled in by the Throwable constructor, so you pay even if you never throw.
- So there is a trade-off between the cost of creating an exception and the benefits of short-circuiting and recovery; in this case, more than 5% of samples are devoted to filling in stack traces.
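Because the cost is paid in the constructor, a common mitigation is to create exceptions that skip stack-trace capture. The sketch below is not part of the benchmarks and was not measured here; it mixes in the standard library's scala.util.control.NoStackTrace, and UhOhLightException is a hypothetical name. The trade-off is that you lose the stack trace when debugging.

```scala
import scala.util.control.NoStackTrace

final case class UhOh(reason: String)

// As in the benchmarks: the Throwable constructor captures the stack trace.
final case class UhOhException(uhOh: UhOh) extends RuntimeException(uhOh.reason)

// Hypothetical variant: NoStackTrace overrides fillInStackTrace, so construction
// skips the stack walk (unless suppression is disabled via the
// scala.control.noTraceSuppression system property).
final case class UhOhLightException(uhOh: UhOh)
    extends RuntimeException(uhOh.reason)
    with NoStackTrace
```

Both classes can be thrown and caught in exactly the same way; only the second one avoids the stack walk when it is created.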

Verdict

| Method | relative time (tf = 2) | relative time (tf = 5) | relative time (tf = 100) | relative time (tf = 200) |
|---|---|---|---|---|
| Future[Either[…]] | 1 | 1 | 1 | 1 |
| EitherT | 1.5 | 1.45 | 1.09 | 1.05 |
| Future (exceptions) | 1.31 | 1.29 | 1.05 | 1.04 |

- EitherT: only use it for long-running tasks.
- Exceptions: don't bother.

IO

You've seen that, under some circumstances, EitherT does not perform well when the underlying effect is expensive to transform.

Let's see how it fares with an effect where that is not the case: the IO monad.

EitherT vs Either

import cats.data.EitherT
import cats.effect.IO
import java.util.concurrent.TimeUnit
import org.openjdk.jmh.annotations._
import org.openjdk.jmh.infra.Blackhole

import scala.concurrent.ExecutionContext
import scala.util.Random

trait IoBenchmarkFunctions {
  def outsideWorldEitherIo(threshold: Double, baseTokens: Int, timeFactor: Int)(
      input: Data): IO[Either[UhOh, Output]] = IO {
    Blackhole.consumeCPU(timeFactor * baseTokens)
    if (Random.nextDouble() > threshold) Right(Output(input.i))
    else Left(UhOh(Random.nextString(10)))
  }

  def fetchDataIo(baseTokens: Int, timeFactor: Int)(input: ValidInput): IO[Data] = IO {
    Blackhole.consumeCPU(timeFactor * baseTokens)
    Data(input.i)
  }

  def doIoWithFailure(baseTokens: Int, timeFactor: Int)(error: UhOh): IO[Unit] =
    IO {
      Blackhole.consumeCPU(timeFactor * baseTokens)
      ()
    }

  def doIoWithOutput(baseTokens: Int, timeFactor: Int)(
      output: Output): IO[Result] = IO {
    Blackhole.consumeCPU(timeFactor * baseTokens)
    Result(output.i)
  }
}

object IoBenchmark {
  implicit val executionContext: ExecutionContext = ExecutionContext.global

  // Introduce an async boundary: continue `io` on `ec` (cats-effect 2 API)
  def shift[A](io: IO[A])(implicit ec: ExecutionContext): IO[A] =
    IO.shift(ec).flatMap(_ => io)
}

@BenchmarkMode(Array(Mode.AverageTime))
@Warmup(iterations = 10, time = 1, timeUnit = TimeUnit.SECONDS)
@Measurement(iterations = 30, time = 5, timeUnit = TimeUnit.SECONDS)
class IoBenchmark extends BenchmarkFunctions with IoBenchmarkFunctions {
  import IoBenchmark._

  @Benchmark
  @Fork(1)
  def eitherT(benchmarkState: BenchmarkState) = {
    val baseTokens = benchmarkState.baseTimeTokens
    val timeFactor = benchmarkState.timeFactor
    val failureThreshold = benchmarkState.failureThreshold
    val validInvalidThreshold = benchmarkState.validInvalidThreshold

    val io = EitherT
      .pure[IO, Invalid](benchmarkState.getSampleInput)
      .subflatMap(validateEitherStyle(validInvalidThreshold))
      .map(transform(baseTokens))
      .flatMapF(input =>
        shift {
          EitherT
            .right(fetchDataIo(baseTokens, timeFactor)(input))
            .flatMapF(
              outsideWorldEitherIo(failureThreshold, baseTokens, timeFactor))
            .biSemiflatMap(
              err => doIoWithFailure(baseTokens, timeFactor)(err).map(_ => err),
              doIoWithOutput(baseTokens, timeFactor)
            )
            .value
        })
      .value

    // IO is lazy: force evaluation so the work is actually measured
    io.unsafeRunSync()
  }

  @Benchmark
  @Fork(1)
  def either(benchmarkState: BenchmarkState) = {
    val baseTokens = benchmarkState.baseTimeTokens
    val timeFactor = benchmarkState.timeFactor
    val failureThreshold = benchmarkState.failureThreshold
    val validInvalidThreshold = benchmarkState.validInvalidThreshold

    val io = IO
      .pure(benchmarkState.getSampleInput)
      .map(input =>
        validateEitherStyle(validInvalidThreshold)(input).map(
          transform(baseTokens)))
      .flatMap {
        case Right(validInput) =>
          shift {
            fetchDataIo(baseTokens, timeFactor)(validInput)
              .flatMap(
                outsideWorldEitherIo(failureThreshold, baseTokens, timeFactor))
              .flatMap {
                case Right(output) =>
                  doIoWithOutput(baseTokens, timeFactor)(output).map(Right(_))
                case l @ Left(err) =>
                  doIoWithFailure(baseTokens, timeFactor)(err).map(_ =>
                    l.asInstanceOf[Either[ThisIsError, Result]])
              }
          }
        case left => IO.pure(left.asInstanceOf[Either[ThisIsError, Result]])
      }

    io.unsafeRunSync()
  }
}

These benchmarks correspond to the ones where you tested Future: an EitherT version and a version where Either is handled manually.

Quirks

Since IO is lazy, stopping the benchmark after an instance of IO is produced is going to measure only construction costs. To be comparable with Future benchmarks, you need to force an evaluation (via unsafeRunSync) of every IO at the end of each benchmark.

This generally “pollutes” the results with the cost of running the IO run loop, which would be much less prominent in a real setting, where users are encouraged to run the computation as late as possible. This means you should not cross-compare absolute timings between effect systems (e.g., IO and ZIO), because this kind of benchmark favors effect systems optimized toward short-running computations.
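The laziness that makes unsafeRunSync necessary can be illustrated without cats-effect. Lazy below is a hypothetical, deliberately minimal stand-in for an IO-like type (not stack-safe, no error handling, not how cats-effect implements IO): building a program only creates a description, and no work happens until it is run.

```scala
import java.util.concurrent.atomic.AtomicInteger

// A hypothetical, minimal lazy effect: a Lazy[A] only describes a computation.
final class Lazy[A](thunk: () => A) {
  def map[B](f: A => B): Lazy[B] = new Lazy(() => f(thunk()))
  def flatMap[B](f: A => Lazy[B]): Lazy[B] = new Lazy(() => f(thunk()).unsafeRunSync())
  // Nothing happens until this is called; each call re-runs the whole description.
  def unsafeRunSync(): A = thunk()
}

object Lazy {
  def apply[A](a: => A): Lazy[A] = new Lazy(() => a)
}

object LazyDemo {
  val counter = new AtomicInteger(0)
  // Building the program only composes thunks; the counter is not touched yet.
  val program: Lazy[Int] =
    Lazy(counter.incrementAndGet()).map(_ * 10).flatMap(x => Lazy(x + 1))
}
```

A benchmark that returned LazyDemo.program without running it would measure only the allocation of a few closures, which is exactly the trap the IO benchmarks avoid by calling unsafeRunSync.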

Results

| Method | ns/op (tf = 2) | ns/op (tf = 5) | ns/op (tf = 100) | ns/op (tf = 200) |
|---|---|---|---|---|
| EitherT[IO[…]] | 4974 (± 13) | 5531 (± 14) | 23988 (± 27) | 43247 (± 53) |
| IO[Either[…]] | 4791 (± 13) | 5360 (± 15) | 23805 (± 20) | 43064 (± 47) |

Observations:

- There is almost no difference (at most about 4%) between using EitherT and coding by hand, which confirms the earlier diagnosis: EitherT is well suited to IO, with none of the 1.5x slowdown seen with Future.

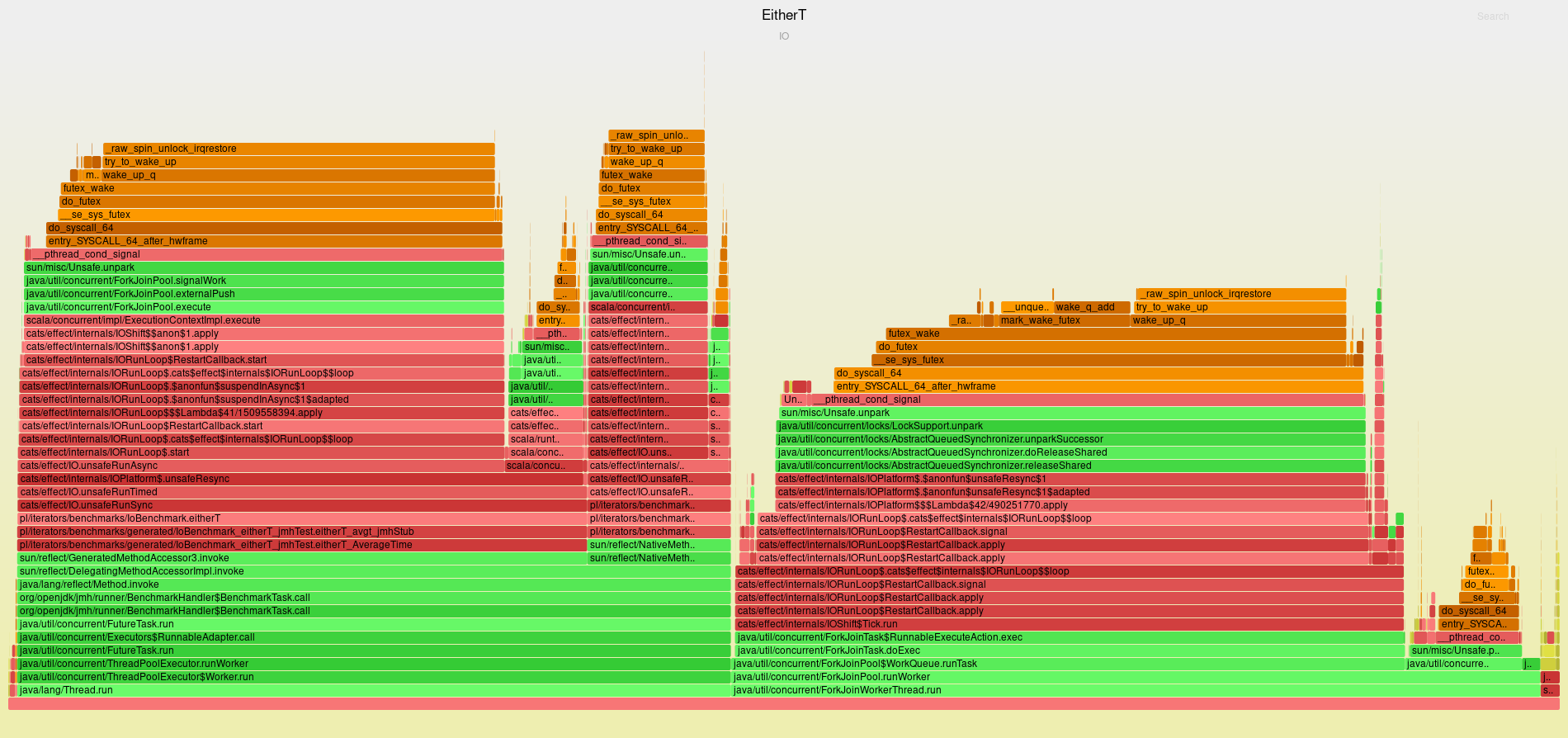

Analysis

Insights:

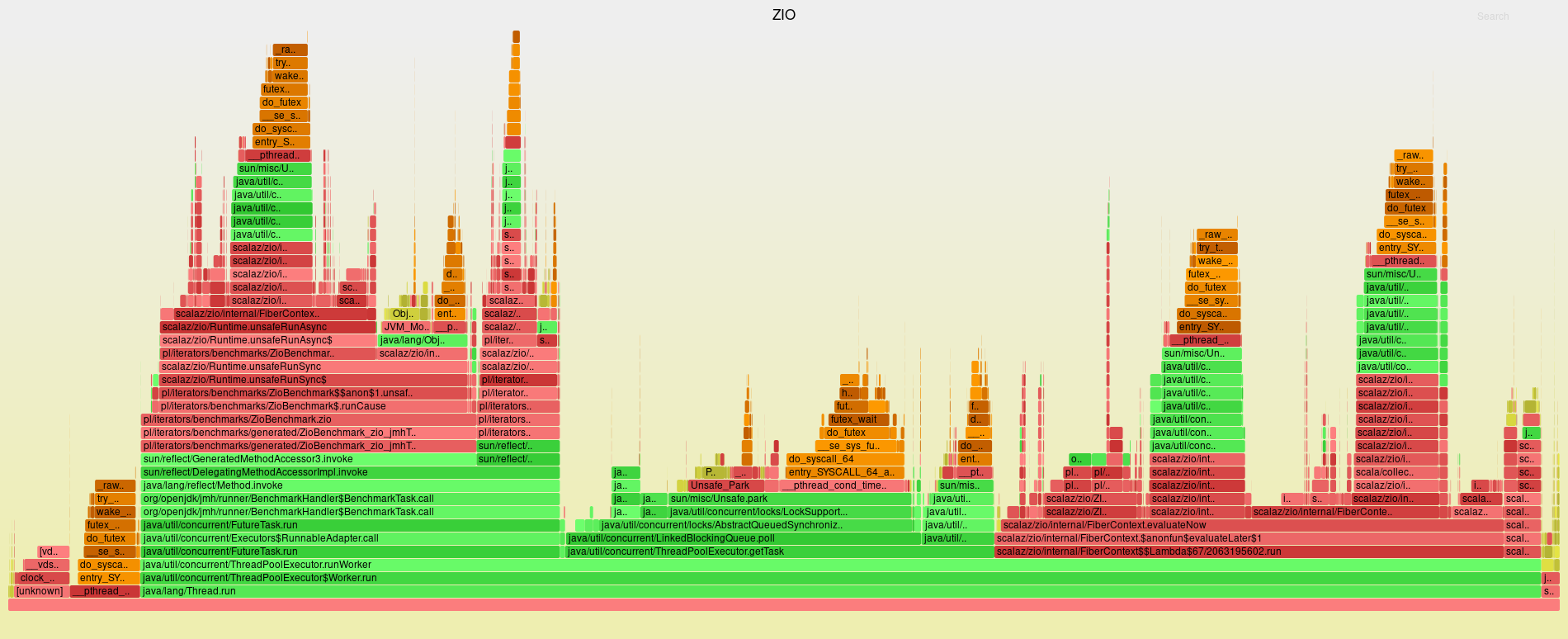

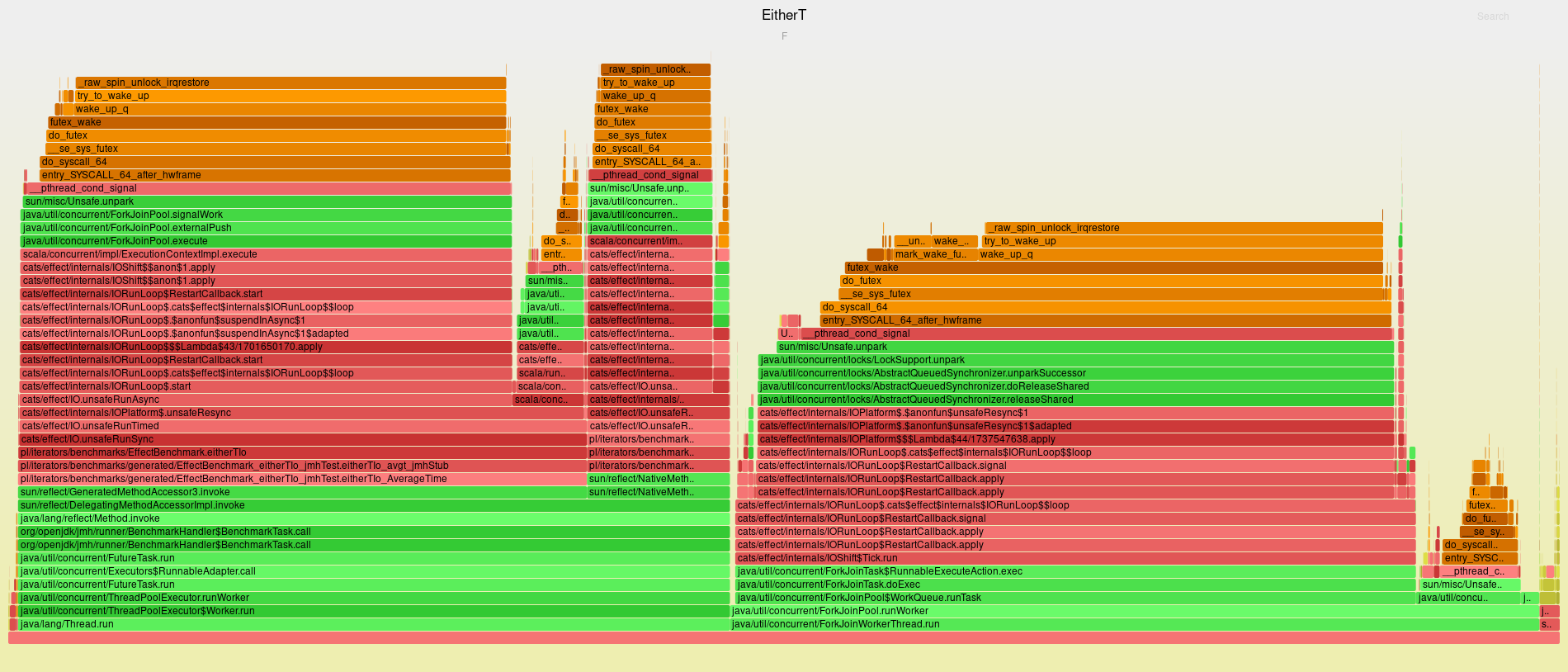

- unsafeRunSync takes a significant share of time. I guess this is expected: this is the IO interpreter running.
- EitherT methods do not even show up on the flame graph, so you can conclude that it does not matter how an IO instance has been constructed.
- Async boundaries are costly. Make sure you introduce them in the right places, i.e., before long-running, potentially blocking operations; otherwise, pointless context shifts can seriously degrade performance.
- As a corollary, fine-tuning execution aspects (context shifts) seems to be far more important than obsessing over monad transformers in this kind of code.

Either vs Exceptions

trait IoBenchmarkFunctions {
  // ...

  def outsideWorldIo(threshold: Double, baseTokens: Int, timeFactor: Int)(
      input: Data): IO[Output] =
    IO {
      Blackhole.consumeCPU(timeFactor * baseTokens)
      if (Random.nextDouble() > threshold) Output(input.i)
      else throw UhOhException(UhOh(Random.nextString(10)))
    }
}

@BenchmarkMode(Array(Mode.AverageTime))
@Warmup(iterations = 10, time = 1, timeUnit = TimeUnit.SECONDS)
@Measurement(iterations = 30, time = 5, timeUnit = TimeUnit.SECONDS)
class IoBenchmark extends BenchmarkFunctions with IoBenchmarkFunctions {
  // ...

  @Benchmark
  @Fork(1)
  def exceptions(benchmarkState: BenchmarkState) = {
    val baseTokens = benchmarkState.baseTimeTokens
    val timeFactor = benchmarkState.timeFactor
    val failureThreshold = benchmarkState.failureThreshold
    val validInvalidThreshold = benchmarkState.validInvalidThreshold

    val io = IO {
      validateExceptionStyle(validInvalidThreshold)(
        benchmarkState.getSampleInput)
    }.map(transform(baseTokens))
      .flatMap(input =>
        shift {
          fetchDataIo(baseTokens, timeFactor)(input)
            .flatMap(outsideWorldIo(failureThreshold, baseTokens, timeFactor))
            .redeemWith(
              {
                case err: UhOhException =>
                  doIoWithFailure(baseTokens, timeFactor)(err.uhOh).flatMap(_ =>
                    IO.raiseError(err))
                case otherThrowable => IO.raiseError(otherThrowable)
              },
              doIoWithOutput(baseTokens, timeFactor)
            )
        })

    // attempt turns a failure into a value, so running the benchmark doesn't throw
    io.attempt.unsafeRunSync()
  }
}

Note that IO offers specialized methods for dealing with exceptions: redeemWith handles both outcomes in one step, and attempt surfaces a failure as an Either.

Results

| Method | ns/op (tf = 2) | ns/op (tf = 5) | ns/op (tf = 100) | ns/op (tf = 200) |
|---|---|---|---|---|
| EitherT[IO[…]] | 4974 (± 13) | 5531 (± 14) | 23988 (± 27) | 43247 (± 53) |
| IO[Either[…]] | 4791 (± 13) | 5360 (± 15) | 23805 (± 20) | 43064 (± 47) |
| IO (exceptions) | 5257 (± 13) | 5795 (± 15) | 24233 (± 28) | 43567 (± 52) |

| Method (ns/op) | 10% failures, 20% invalid | 25% failures, 30% invalid | 45% failures, 30% invalid | 45% failures, 50% invalid |
|---|---|---|---|---|
| IO (exceptions) (tf = 5) | 5795 (± 15) | 5398 (± 13) | 5629 (± 17) | 4374 (± 12) |
| IO[Either[…]] (tf = 5) | 5360 (± 15) | 4729 (± 13) | 4991 (± 119) | 3681 (± 223) |
| IO (exceptions) (tf = 100) | 24233 (± 28) | 21462 (± 23) | 21939 (± 36) | 15871 (± 22) |
| IO[Either[…]] (tf = 100) | 23805 (± 20) | 20806 (± 27) | 20940 (± 19) | 14966 (± 20) |

Observations:

- As before, exceptions are not faster than Either. The relative differences are much smaller than with Future, though (at most about 19% here, versus more than 50% with Future), which makes exceptions a less painful choice if you really have to deal with functions that throw.

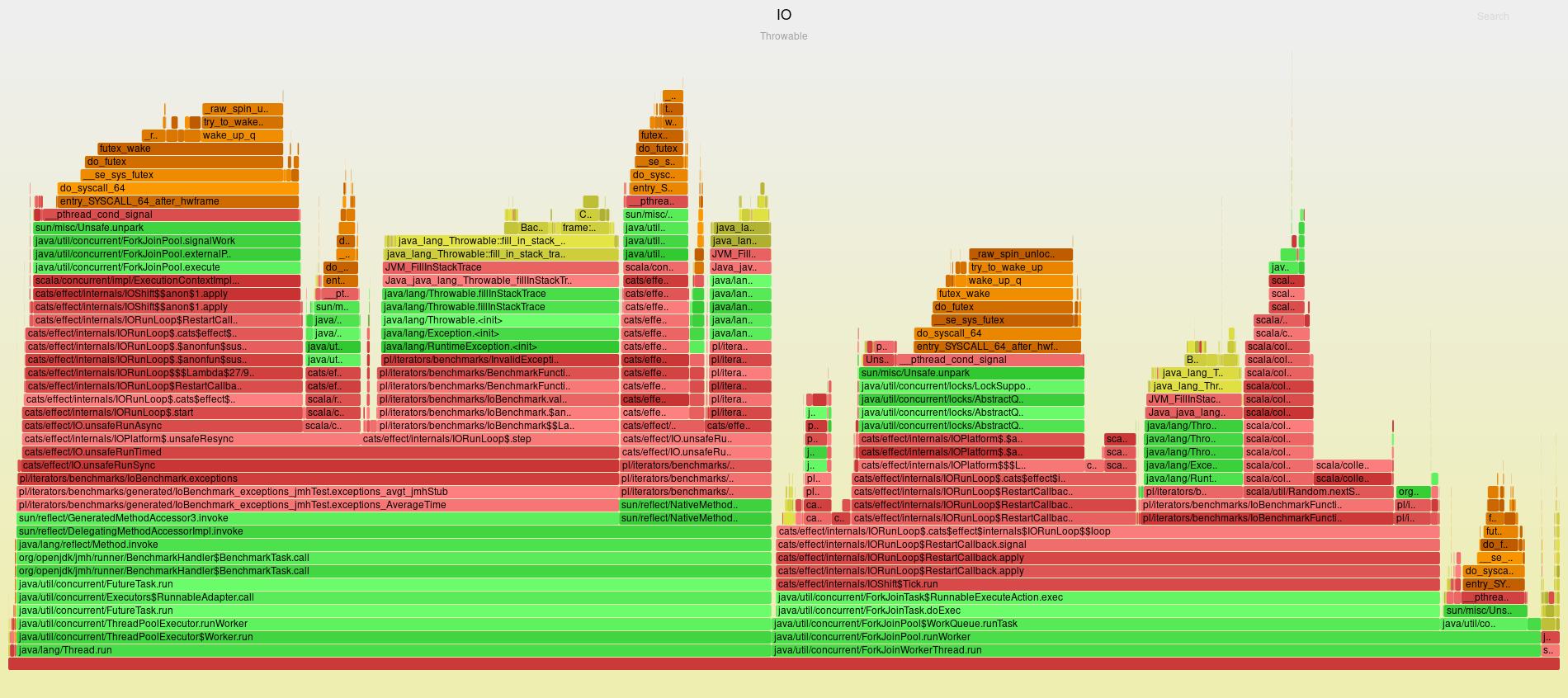

Analysis

Insights:

- A whopping 25% of samples are spent filling in stack traces. Not only does that mean that exceptions are costly, but also that IO is much better optimized than Future, where the dominating cost is thread-pool management.

Verdict

| Method | relative time (tf = 2) | relative time (tf = 5) | relative time (tf = 100) | relative time (tf = 200) |
|---|---|---|---|---|
| IO[Either[…]] | 1 | 1 | 1 | 1 |
| EitherT | 1.03 | 1.03 | 1.00 | 1.00 |
| IO (exceptions) | 1.09 | 1.08 | 1.01 | 1.01 |

- EitherT: yes, by all means; don't waste your time coding Either by hand.
- Exceptions: don't bother. But if you have to deal with code that throws exceptions, use IO rather than Future.

ZIO

Measuring the performance of ZIO, as was outlined to me by John De Goes, is tricky. That’s because, as opposed to IO, ZIO is more optimized towards long-running or even infinite processes.

That means that such short-lived benchmarks are polluted by the high costs of setup/teardown times for the interpreter. As a corollary, you should not use this benchmark to conclude which effect system is faster. Instead, given the effect system, check which programming style is the most effective to use.

EitherT vs Either

trait ZioBenchmarkFunctions {
  def outsideWorldEitherZio(
      threshold: Double,
      baseTokens: Int,
      timeFactor: Int)(input: Data): UIO[Either[UhOh, Output]] = UIO {
    Blackhole.consumeCPU(timeFactor * baseTokens)
    if (Random.nextDouble() > threshold) Right(Output(input.i))
    else Left(UhOh(Random.nextString(10)))
  }

  def doZioWithFailure(baseTokens: Int, timeFactor: Int)(
      error: UhOh): UIO[Unit] = UIO {
    Blackhole.consumeCPU(timeFactor * baseTokens)
    ()
  }

  def doZioWithOutput(baseTokens: Int, timeFactor: Int)(
      output: Output): UIO[Result] = UIO {
    Blackhole.consumeCPU(timeFactor * baseTokens)
    Result(output.i)
  }

  def fetchDataZio(baseTokens: Int, timeFactor: Int)(input: ValidInput) = UIO {
    Blackhole.consumeCPU(timeFactor * baseTokens)
    Data(input.i)
  }
}

object ZioBenchmark extends CatsInstances {
  import blocking._

  // Runtime whose failure reporting is a no-op
  val runtime = new DefaultRuntime {
    override val Platform = PlatformLive.Default.withReportFailure(const(()))
  }

  def run[R1 >: runtime.Environment, A1](zio: ZIO[R1, _, A1]): A1 =
    runtime.unsafeRun(zio)

  // Returns the Exit value instead of throwing on failure
  def runCause[R1 >: runtime.Environment, E1, A1](
      zio: ZIO[R1, E1, A1]): Exit[E1, A1] = runtime.unsafeRunSync(zio)

  // Run `zio` on the blocking thread pool
  def block[R1, E1, A1](zio: ZIO[R1, E1, A1]) = blocking(zio)
}

@BenchmarkMode(Array(Mode.AverageTime))
@OutputTimeUnit(TimeUnit.MICROSECONDS)
@Warmup(iterations = 10, time = 1, timeUnit = TimeUnit.SECONDS)
@Measurement(iterations = 30, time = 5, timeUnit = TimeUnit.SECONDS)
class ZioBenchmark extends BenchmarkFunctions with ZioBenchmarkFunctions {
  import ZioBenchmark._

  @Benchmark
  @Fork(1)
  def eitherT(benchmarkState: BenchmarkState) = {
    val baseTokens = benchmarkState.baseTimeTokens
    val timeFactor = benchmarkState.timeFactor
    val failureThreshold = benchmarkState.failureThreshold
    val validInvalidThreshold = benchmarkState.validInvalidThreshold

    val zio = EitherT
      .pure[ZIO[Blocking, Nothing, ?], Invalid](benchmarkState.getSampleInput)
      .subflatMap(validateEitherStyle(validInvalidThreshold))
      .map(transform(baseTokens))
      .flatMapF(
        input =>
          block(
            EitherT
              .right(fetchDataZio(baseTokens, timeFactor)(input))
              .flatMapF(
                outsideWorldEitherZio(failureThreshold, baseTokens, timeFactor))
              .biSemiflatMap(
                err =>
                  doZioWithFailure(baseTokens, timeFactor)(err).andThen(
                    ZIO.succeed(err)),
                doZioWithOutput(baseTokens, timeFactor)
              )
              .value))
      .value

    run(zio)
  }

  @Benchmark
  @Fork(1)
  def either(benchmarkState: BenchmarkState) = {
    val baseTokens = benchmarkState.baseTimeTokens
    val timeFactor = benchmarkState.timeFactor
    val failureThreshold = benchmarkState.failureThreshold
    val validInvalidThreshold = benchmarkState.validInvalidThreshold

    val zio = ZIO
      .succeed(benchmarkState.getSampleInput)
      .map(input =>
        validateEitherStyle(validInvalidThreshold)(input).map(
          transform(baseTokens)))
      .flatMap {
        case Right(validInput) =>
          block {
            fetchDataZio(baseTokens, timeFactor)(validInput)
              .flatMap(
                outsideWorldEitherZio(failureThreshold, baseTokens, timeFactor))
              .flatMap {
                case Right(output) =>
                  doZioWithOutput(baseTokens, timeFactor)(output).map(Right(_))
                case l @ Left(err) =>
                  doZioWithFailure(baseTokens, timeFactor)(err).andThen(
                    ZIO.succeed(l.asInstanceOf[Either[ThisIsError, Result]]))
              }
          }
        case left => ZIO.succeed(left.asInstanceOf[Either[ThisIsError, Result]])
      }

    run(zio)
  }
}

Results

| Method | ns/op (tf = 2) | ns/op (tf = 5) | ns/op (tf = 100) | ns/op (tf = 200) |
|---|---|---|---|---|
| EitherT[ZIO[…]] | 10694 (± 33) | 11224 (± 14) | 29673 (± 64) | 49393 (± 68) |
| ZIO[Either[…]] | 10420 (± 28) | 11046 (± 20) | 29625 (± 15) | 49046 (± 75) |

Observations:

- You can repeat everything that was written for IO: there is almost no difference (at most about 3%) between using EitherT and coding by hand. EitherT is well suited to ZIO.

Either vs Exceptions vs Bifunctor

ZIO comes with a bifunctor-based approach to error handling: it can encode error values of an arbitrary type alongside the result type and retain the precise type of the error.

It makes sense to include this mechanism in the comparison, as it can avoid "expensive" Throwables while keeping all the benefits of optimized error-handling paths.
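To see why a typed error channel does not need Throwables at all, here is a toy, synchronous model of a bifunctor effect. TypedIO is a hypothetical type written for this illustration and is not how ZIO is implemented; its catchAll and absolve only mimic the semantics of the ZIO methods of the same names used in the benchmark that follows.

```scala
// A toy, synchronous effect with a typed error channel E, in the spirit of ZIO[Any, E, A].
// Failures are plain values in a Left: no Throwable is created, so no stack trace is filled in.
final case class TypedIO[+E, +A](run: () => Either[E, A]) {
  def map[B](f: A => B): TypedIO[E, B] = TypedIO(() => run().map(f))

  def flatMap[E1 >: E, B](f: A => TypedIO[E1, B]): TypedIO[E1, B] =
    TypedIO(() =>
      run() match {
        case Left(e)  => Left(e) // short-circuit on the first error
        case Right(a) => f(a).run()
      })

  // Recover from any typed error; the error type may change (here to E2).
  def catchAll[E2, A1 >: A](h: E => TypedIO[E2, A1]): TypedIO[E2, A1] =
    TypedIO(() =>
      run() match {
        case Left(e)  => h(e).run()
        case Right(a) => Right(a)
      })
}

object TypedIO {
  def succeed[A](a: => A): TypedIO[Nothing, A] = TypedIO(() => Right(a))
  def fail[E](e: => E): TypedIO[E, Nothing] = TypedIO(() => Left(e))

  // Move an Either produced inside the effect into the error channel, like ZIO's absolve.
  def absolve[E, A](io: TypedIO[E, Either[E, A]]): TypedIO[E, A] =
    io.flatMap[E, A](either => TypedIO(() => either))
}
```

Because the error is an ordinary value, its type (UhOh, Invalid, or any other) is preserved all the way through the program, and failing costs no more than succeeding.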

trait ZioBenchmarkFunctions {
  //...

  def outsideWorldZio(threshold: Double, baseTokens: Int, timeFactor: Int)(
      input: Data): Task[Output] =
    Task {
      Blackhole.consumeCPU(timeFactor * baseTokens)
      if (Random.nextDouble() > threshold) Output(input.i)
      else throw UhOhException(UhOh(Random.nextString(10)))
    }

  //...
}

object ZioBenchmark extends CatsInstances {
  //...

  def runCause[R1 >: runtime.Environment, E1, A1](
      zio: ZIO[R1, E1, A1]): Exit[E1, A1] = runtime.unsafeRunSync(zio)
}

@BenchmarkMode(Array(Mode.AverageTime))
@OutputTimeUnit(TimeUnit.MICROSECONDS)
@Warmup(iterations = 10, time = 1, timeUnit = TimeUnit.SECONDS)
@Measurement(iterations = 30, time = 5, timeUnit = TimeUnit.SECONDS)
class ZioBenchmark extends BenchmarkFunctions with ZioBenchmarkFunctions {
  import ZioBenchmark._

  //...

  @Benchmark
  @Fork(1)
  def exceptions(benchmarkState: BenchmarkState) = {
    val baseTokens = benchmarkState.baseTimeTokens
    val timeFactor = benchmarkState.timeFactor
    val failureThreshold = benchmarkState.failureThreshold
    val validInvalidThreshold = benchmarkState.validInvalidThreshold

    val zio = ZIO {
      validateExceptionStyle(validInvalidThreshold)(
        benchmarkState.getSampleInput)
    }.map(transform(baseTokens))
      .flatMap(input =>
        block {
          fetchDataZio(baseTokens, timeFactor)(input)
            .flatMap(data =>
              outsideWorldZio(failureThreshold, baseTokens, timeFactor)(data)
                .catchSome {
                  case err: UhOhException =>
                    doZioWithFailure(baseTokens, timeFactor)(err.uhOh).andThen(
                      ZIO.fail(err))
                })
            .flatMap(doZioWithOutput(baseTokens, timeFactor))
        })

    runCause(zio)
  }

  @Benchmark
  @Fork(1)
  def zio(benchmarkState: BenchmarkState) = {
    val baseTokens = benchmarkState.baseTimeTokens
    val timeFactor = benchmarkState.timeFactor
    val failureThreshold = benchmarkState.failureThreshold
    val validInvalidThreshold = benchmarkState.validInvalidThreshold

    val zio = ZIO
      .succeed(benchmarkState.getSampleInput)
      .map(input =>
        validateEitherStyle(validInvalidThreshold)(input).map(
          transform(baseTokens)))
      // absolve moves the Left of the Either into ZIO's typed error channel
      .absolve
      .flatMap(validInput =>
        block {
          fetchDataZio(baseTokens, timeFactor)(validInput)
            .flatMap(
              data =>
                outsideWorldEitherZio(failureThreshold, baseTokens, timeFactor)(
                  data).absolve.catchAll(err =>
                  doZioWithFailure(baseTokens, timeFactor)(err).andThen(
                    ZIO.fail(err))))
            .flatMap(doZioWithOutput(baseTokens, timeFactor))
        })

    runCause(zio)
  }

  //...
}

Results

| Method | ns/op (tf = 2) | ns/op (tf = 5) | ns/op (tf = 100) | ns/op (tf = 200) |
|---|---|---|---|---|
| EitherT[UIO[…]] | 10694 (+- 33) | 11224 (+- 14) | 29673 (+- 64) | 49393 (+- 68) |
| UIO[Either[…]] | 10420 (+- 28) | 11046 (+- 20) | 29625 (+- 15) | 49046 (+- 75) |
| ZIO (exceptions) | 11170 (+- 14) | 11739 (+- 19) | 30510 (+- 175) | 49864 (+- 156) |
| ZIO (bifunctor) | 10547 (+- 15) | 11156 (+- 14) | 29596 (+- 39) | 49257 (+- 72) |

| Method | ns/op (10% failures, 20% invalid) | ns/op (25% failures, 30% invalid) | ns/op (45% failures, 30% invalid) | ns/op (45% failures, 50% invalid) |
|---|---|---|---|---|
| ZIO (exceptions) (tf = 5) | 11739 (+- 19) | 10944 (+- 17) | 11230 (+- 16) | 8795 (+- 23) |
| UIO[Either[…]] (tf = 5) | 11046 (+- 20) | 9723 (+- 16) | 9906 (+- 15) | 7277 (+- 21) |
| ZIO (bifunctor) (tf = 5) | 11156 (+- 14) | 9970 (+- 16) | 10203 (+- 18) | 7475 (+- 12) |
| ZIO (exceptions) (tf = 100) | 30510 (+- 175) | 27332 (+- 93) | 27769 (+- 51) | 20565 (+- 54) |
| UIO[Either[…]] (tf = 100) | 29625 (+- 15) | 25940 (+- 50) | 26151 (+- 45) | 18874 (+- 29) |
| ZIO (bifunctor) (tf = 100) | 29596 (+- 39) | 26164 (+- 84) | 26226 (+- 67) | 19058 (+- 42) |

Observations:

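- The bifunctor mechanism offers excellent performance and principled error handling.

- It is consistently faster than the throwable-based variant: by roughly 1% to 6% in the first table, with the gap widening as failures and invalid inputs become more frequent (up to about 18%: 8795 vs 7475 ns/op at tf = 5 with 45% failures and 50% invalid). So I’d favor it over exceptions as much as possible.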
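The typed-error versus throwable distinction is not specific to ZIO. Below is a stdlib-only sketch (no ZIO involved: `Either` stands in for a typed error channel such as ZIO’s `E`, and `throw` plus `Try` for the exceptions variant; `ValidationError`, `ValidationException` and the pipeline functions are hypothetical names, not the benchmark code) showing the same two-step validation written in both styles:

```scala
import scala.util.{Failure, Success, Try}

// Hypothetical domain types, for illustration only.
final case class ValidationError(msg: String)
final case class ValidationException(err: ValidationError)
    extends Exception(err.msg)

object ErrorStyles {
  // Typed style: the failure type is visible in the signature.
  def validateTyped(n: Int): Either[ValidationError, Int] =
    if (n >= 0) Right(n) else Left(ValidationError(s"negative: $n"))

  // Throwable style: the failure is invisible in the signature.
  def validateThrowing(n: Int): Int =
    if (n >= 0) n
    else throw ValidationException(ValidationError(s"negative: $n"))

  // Composition with Either short-circuits on the first Left.
  def pipelineTyped(n: Int): Either[ValidationError, Int] =
    validateTyped(n).map(_ * 2).flatMap(m => validateTyped(m - 10))

  // The same pipeline with exceptions needs an explicit catch at the edge
  // to turn the thrown error back into a value; anything else is rethrown.
  def pipelineThrowing(n: Int): Either[ValidationError, Int] =
    Try(validateThrowing(validateThrowing(n) * 2 - 10)) match {
      case Success(v)                        => Right(v)
      case Failure(ValidationException(err)) => Left(err)
      case Failure(other)                    => throw other
    }
}
```

With `Either`, every step advertises how it can fail and the compiler makes the caller deal with it; with exceptions, the caller has to know what to catch. The sketch says nothing about speed; that is what the tables measure.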

Analysis

Insights:

- You’re seeing implementation internals almost exclusively, which means that you’re not utilizing ZIO to its full potential. In that case, it’s best not to draw conclusions from the absolute numbers.

- Again, as was the case with IO, construction details hardly matter, so ZIO seems locally well suited to any programming style.

- Because of its richer (and heavier) interpreter, ZIO should not be used for one-shot or short-lived methods in isolation.

Verdict

Time relative to UIO[Either[…]] (lower is better):

| Method | tf = 2 | tf = 5 | tf = 100 | tf = 200 |
|---|---|---|---|---|
| UIO[Either[…]] | 1 | 1 | 1 | 1 |
| EitherT | 1.02 | 1.01 | 1.00 | 1.00 |
| ZIO (exceptions) | 1.07 | 1.06 | 1.02 | 1.01 |
| ZIO (bifunctor) | 1.01 | 1.00 | 0.99 | 1.00 |
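The relative figures appear to be each method’s ns/op from the ZIO Results table divided by the UIO[Either[…]] value in the same column, then truncated (not rounded) to two decimals; for example, 30510 / 29625 ≈ 1.0299 is listed as 1.02. A small sketch reproducing that arithmetic, with the ns/op values copied from the table (the object and method names are made up for illustration):

```scala
object VerdictRatios {
  // ns/op for tf = 2, 5, 100, 200, copied from the Results table.
  val baseline = Vector(10420.0, 11046.0, 29625.0, 49046.0) // UIO[Either[…]]
  val eitherT  = Vector(10694.0, 11224.0, 29673.0, 49393.0) // EitherT[UIO[…]]
  val zioExc   = Vector(11170.0, 11739.0, 30510.0, 49864.0) // ZIO (exceptions)
  val zioBif   = Vector(10547.0, 11156.0, 29596.0, 49257.0) // ZIO (bifunctor)

  // Truncate (floor) to two decimals rather than rounding.
  def truncate2(x: Double): Double = math.floor(x * 100) / 100

  // Per-column ratio of a method's time to the baseline's time.
  def relative(method: Vector[Double]): Vector[Double] =
    method.zip(baseline).map { case (m, b) => truncate2(m / b) }
}
```

Rounding instead of truncating would turn a few cells into 1.03 or 1.01, which does not change the verdict.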

- EitherT: No problem, but ZIO has its own unique mechanism, which offers a slightly more ergonomic model.

- Exceptions: If you have to, but ZIO has its own unique mechanism…

- Bifunctor: Yes!!

Tagless final

As a bonus, let’s measure the impact of having an abstract effect wrapper. This technique, sometimes called tagless final, lets you write your logic in terms of an abstract higher-kinded type accompanied by a set of known capabilities used for operating the wrapper without knowing its exact implementation.
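To make the idea concrete, here is a stdlib-only sketch. The hand-rolled `Monad` trait stands in for cats’ `Monad`, and `Thunk`, `Id`, `Instances` and `validateAndDouble` are hypothetical names for illustration, not the benchmark code. The program is written once against an abstract `F[_]` and then run with two different concrete effects:

```scala
// Minimal stand-in for cats.Monad, so the sketch has no dependencies.
trait Monad[F[_]] {
  def pure[A](a: A): F[A]
  def flatMap[A, B](fa: F[A])(f: A => F[B]): F[B]
  // map derived from flatMap and pure
  def map[A, B](fa: F[A])(f: A => B): F[B] = flatMap(fa)(a => pure(f(a)))
}

object Monad {
  // Summoner, so call sites can write Monad[F] as the benchmark code does.
  def apply[F[_]](implicit F: Monad[F]): Monad[F] = F
}

// A toy lazy effect: nothing runs until run() is called.
final case class Thunk[A](run: () => A)

object Instances {
  // The identity "effect": values are used directly.
  type Id[A] = A

  implicit val idMonad: Monad[Id] = new Monad[Id] {
    def pure[A](a: A): Id[A] = a
    def flatMap[A, B](fa: Id[A])(f: A => Id[B]): Id[B] = f(fa)
  }

  implicit val thunkMonad: Monad[Thunk] = new Monad[Thunk] {
    def pure[A](a: A): Thunk[A] = Thunk(() => a)
    def flatMap[A, B](fa: Thunk[A])(f: A => Thunk[B]): Thunk[B] =
      Thunk(() => f(fa.run()).run())
  }
}

object Program {
  // Pure validation logic, independent of any effect.
  def validate(n: Int): Either[String, Int] =
    if (n >= 0) Right(n) else Left(s"invalid: $n")

  // Written once against an abstract F[_]; it only knows that F is a Monad.
  def validateAndDouble[F[_]: Monad](input: F[Int]): F[Either[String, Int]] = {
    val F = Monad[F]
    F.map(input)(n => validate(n).map(_ * 2))
  }
}
```

With `import Instances._` in scope, `Program.validateAndDouble[Id](21)` evaluates immediately, while `Program.validateAndDouble[Thunk](Thunk(() => 21))` only builds a description that runs on `.run()`. The question the benchmark asks is whether going through the `Monad[F]` dictionary like this costs anything measurable.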

It’s wildly popular these days, and it would be interesting to know if this abstraction boost adds any significant performance penalty.

Rewritten code used to benchmark the abstract effect:

@BenchmarkMode(Array(Mode.AverageTime))
@OutputTimeUnit(TimeUnit.MICROSECONDS)
@Warmup(iterations = 10, time = 1, timeUnit = TimeUnit.SECONDS)
@Measurement(iterations = 30, time = 5, timeUnit = TimeUnit.SECONDS)
class EffectBenchmark
    extends BenchmarkFunctions
    with IoBenchmarkFunctions
    with ZioBenchmarkFunctions {
  import EffectBenchmark._

  @noinline
  private def eitherTF[F[_]: Monad: ContextShift](
      benchmarkState: BenchmarkState)(
      fetch: ValidInput => F[Data],
      outsideWorld: Data => F[Either[UhOh, Output]],
      onFailure: UhOh => F[Unit],
      onOutput: Output => F[Result]) = {
    val baseTokens = benchmarkState.baseTimeTokens
    val validInvalidThreshold = benchmarkState.validInvalidThreshold
    val F = Monad[F]
    EitherT
      .pure[F, Invalid](benchmarkState.getSampleInput)
      .subflatMap(validateEitherStyle(validInvalidThreshold))
      .map(transform(baseTokens))
      .flatMapF(
        input =>
          F.productR(ContextShift[F].shift)(
            EitherT
              .right(fetch(input))
              .flatMapF(outsideWorld)
              .biSemiflatMap(
                err => F.as(onFailure(err), err.asInstanceOf[ThisIsError]),
                onOutput
              )
              .value))
      .value
  }

  @noinline
  private def feitherNoSyntax[F[_]: Monad: ContextShift](
      benchmarkState: BenchmarkState)(
      fetch: ValidInput => F[Data],
      outsideWorld: Data => F[Either[UhOh, Output]],
      onFailure: UhOh => F[Unit],
      onOutput: Output => F[Result]) = {
    val baseTokens = benchmarkState.baseTimeTokens
    val validInvalidThreshold = benchmarkState.validInvalidThreshold
    val F = Monad[F]
    F.flatMap(
      F.map(F.pure(benchmarkState.getSampleInput))(input =>
        validateEitherStyle(validInvalidThreshold)(input)
          .map(transform(baseTokens)))) {
      case Right(validInput) =>
        F.productR(ContextShift[F].shift)(
          F.flatMap(F.flatMap(fetch(validInput))(outsideWorld)) {
            case Right(output) =>
              F.map(onOutput(output))(Right(_): Either[ThisIsError, Result])
            case l @ Left(err) =>
              F.as(onFailure(err), l.asInstanceOf[Either[ThisIsError, Result]])
          })
      case left => F.pure(left.asInstanceOf[Either[ThisIsError, Result]])
    }
  }

  @noinline
  private def feitherSyntax[F[_]: Monad: ContextShift](
      benchmarkState: BenchmarkState)(
      fetch: ValidInput => F[Data],
      outsideWorld: Data => F[Either[UhOh, Output]],
      onFailure: UhOh => F[Unit],
      onOutput: Output => F[Result]) = {
    import cats.syntax.apply._
    import cats.syntax.flatMap._
    import cats.syntax.functor._

    val baseTokens = benchmarkState.baseTimeTokens
    val validInvalidThreshold = benchmarkState.validInvalidThreshold
    val F = Monad[F]
    F.pure(benchmarkState.getSampleInput)
      .map(input =>
        validateEitherStyle(validInvalidThreshold)(input).map(
          transform(baseTokens)))
      .flatMap {
        case Right(validInput) =>
          ContextShift[F].shift *> {
            fetch(validInput).flatMap(outsideWorld).flatMap {
              case Right(output) =>
                onOutput(output).map(Right(_): Either[ThisIsError, Result])
              case l @ Left(err) =>
                onFailure(err).as(l.asInstanceOf[Either[ThisIsError, Result]])
            }
          }
        case left => F.pure(left.asInstanceOf[Either[ThisIsError, Result]])
      }
  }
}

As you can see, I rewrote the measured functionality to operate on the abstract effect. Additionally, I created two versions of the non-EitherT function, one using syntax extensions (so you can write f.map(..)) and one without them, to quantify the impact of Scala’s way of enriching existing classes.

As you probably know, unless the extension is a value class, the compiler creates a new instance of a wrapper class implementing the “pimped” method under the hood, which can have a negative impact on overall performance.
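A stdlib-only sketch of what that means at the use site (the `Box` type and the `DoubleOps`/`TripleOps` classes are hypothetical and unrelated to cats’ actual syntax classes). The first extension is an ordinary implicit class; the second extends `AnyVal`, which lets scalac call a static extension method instead of allocating a wrapper:

```scala
object SyntaxSketch {
  // Hypothetical container to enrich.
  final case class Box[A](value: A)

  // Ordinary implicit class: `box.double` compiles to
  // `new DoubleOps(box).double`, so each call allocates a short-lived,
  // stateless wrapper (the JIT's escape analysis may remove it).
  implicit class DoubleOps(private val box: Box[Int]) {
    def double: Box[Int] = Box(box.value * 2)
  }

  // Value-class variant: extending AnyVal lets scalac call a static
  // extension method directly, so a simple call like `box.triple`
  // does not allocate a wrapper.
  implicit class TripleOps(private val box: Box[Int]) extends AnyVal {
    def triple: Box[Int] = Box(box.value * 3)
  }
}
```

Both read the same to the caller (`Box(4).double`, `Box(4).triple` after `import SyntaxSketch._`); only the generated bytecode differs, and whether the extra allocation matters is exactly what the syntax and no-syntax benchmarks try to find out.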

Armed with these, you can write benchmarks that call effect-oblivious functions with concrete effect types and compare them with non-tagless measurements from previous benchmarks.

object EffectBenchmark extends AllInstances {
  implicit val executionContext: ExecutionContext = ExecutionContext.global
  implicit val cs: ContextShift[IO] = IO.contextShift(executionContext)
}

@BenchmarkMode(Array(Mode.AverageTime))
@OutputTimeUnit(TimeUnit.MICROSECONDS)
@Warmup(iterations = 10, time = 1, timeUnit = TimeUnit.SECONDS)
@Measurement(iterations = 30, time = 5, timeUnit = TimeUnit.SECONDS)
class EffectBenchmark
    extends BenchmarkFunctions
    with IoBenchmarkFunctions
    with ZioBenchmarkFunctions {
  import EffectBenchmark._

  //... (eitherTF, feitherNoSyntax and feitherSyntax, as above)

  @Benchmark
  @Fork(1)
  def eitherTIo(benchmarkState: BenchmarkState) = {
    val baseTokens = benchmarkState.baseTimeTokens
    val timeFactor = benchmarkState.timeFactor
    val failureThreshold = benchmarkState.failureThreshold
    val io = eitherTF[IO](benchmarkState)(
      fetchDataIo(baseTokens, timeFactor),
      outsideWorldEitherIo(failureThreshold, baseTokens, timeFactor),
      doIoWithFailure(baseTokens, timeFactor),
      doIoWithOutput(baseTokens, timeFactor)
    )
    io.unsafeRunSync()
  }

  @Benchmark
  @Fork(1)
  def eitherIoNoSyntax(benchmarkState: BenchmarkState) = {
    val baseTokens = benchmarkState.baseTimeTokens
    val timeFactor = benchmarkState.timeFactor
    val failureThreshold = benchmarkState.failureThreshold
    val io = feitherNoSyntax[IO](benchmarkState)(
      fetchDataIo(baseTokens, timeFactor),
      outsideWorldEitherIo(failureThreshold, baseTokens, timeFactor),
      doIoWithFailure(baseTokens, timeFactor),
      doIoWithOutput(baseTokens, timeFactor)
    )
    io.unsafeRunSync()
  }

  @Benchmark
  @Fork(1)
  def eitherIoSyntax(benchmarkState: BenchmarkState) = {
    val baseTokens = benchmarkState.baseTimeTokens
    val timeFactor = benchmarkState.timeFactor
    val failureThreshold = benchmarkState.failureThreshold
    val io = feitherSyntax[IO](benchmarkState)(
      fetchDataIo(baseTokens, timeFactor),
      outsideWorldEitherIo(failureThreshold, baseTokens, timeFactor),
      doIoWithFailure(baseTokens, timeFactor),
      doIoWithOutput(baseTokens, timeFactor)
    )
    io.unsafeRunSync()
  }
}

Quirks

To make the comparison fairer, I tried to eliminate the effect of various compiler/JIT tricks that could not possibly be performed if the code were part of a larger system.

The EffectBenchmark object extends AllInstances, which puts a lot of Monad instances in scope, possibly defeating monomorphization tricks. Additionally, the methods are marked @noinline to prevent the Scala inliner from doing its job.
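The setup can be illustrated with a stdlib-only sketch (the `Combine` type class, its instances and `twice` are hypothetical stand-ins for `Monad` and the benchmarked functions). `@noinline` keeps scalac’s optimizer from copying `twice` into each caller, so one shared body contains one interface call, `C.combine`, which then sees three different implementation classes. HotSpot’s C2 can usually inline call sites that have seen one or two receiver classes; beyond that it typically falls back to megamorphic dispatch through vtable or itable stubs:

```scala
object CallSiteSketch {
  // Hypothetical one-method type class standing in for Monad.
  trait Combine[A] { def combine(x: A, y: A): A }

  implicit val intCombine: Combine[Int] = new Combine[Int] {
    def combine(x: Int, y: Int): Int = x + y
  }
  implicit val stringCombine: Combine[String] = new Combine[String] {
    def combine(x: String, y: String): String = x + y
  }
  implicit val listCombine: Combine[List[Int]] = new Combine[List[Int]] {
    def combine(x: List[Int], y: List[Int]): List[Int] = x ++ y
  }

  // @noinline tells the Scala optimizer not to inline `twice` into its
  // callers, so all uses share one body with a single C.combine call site.
  // It does not stop the JVM's JIT compiler from inlining.
  @noinline def twice[A](a: A)(implicit C: Combine[A]): A = C.combine(a, a)

  // Driving the shared body with three instances means the interface
  // call C.combine observes three receiver classes.
  def exerciseAll(): (Int, String, List[Int]) =
    (twice(21), twice("ab"), twice(List(1, 2)))
}
```

The sketch only shows the shape of such a call site; whether the JIT actually treats it as megamorphic depends on the observed type profile at runtime.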

Results

Time relative to the first row of each table (lower is better):

| Method | tf = 2 | tf = 5 | tf = 100 | tf = 200 |
|---|---|---|---|---|
| Effect | 1 | 1 | 1 | 1 |
| F[Either[..]] – no syntax | 1 | 1.01 | 1 | 1 |
| F[Either[..]] – syntax | 1 | 1 | 1 | 1 |

| Method | tf = 2 | tf = 5 | tf = 100 | tf = 200 |
|---|---|---|---|---|
| EitherT[Effect] | 1 | 1 | 1 | 1 |
| EitherT[F[..]] | 0.99 | 1 | 0.99 | 1 |

Observations:

- Do not be afraid of syntax extensions. This use case (a short-lived object with no state) is well optimized by the JIT.

- I did not find the tagless final style to be slower, so do not avoid it if it suits you.

Analysis

Insights:

- When looking for signs of performance degradation caused by F[..] on the flamegraph, I decided to look for itable stubs, and I noticed that they are responsible for only 0.6% of all samples, which seems small.

- I tried various tricks to observe the effects of megamorphic dispatch (like importing AllInstances) but did not notice any significant discrepancies.

Final conclusions

- Unless you’re building a library, compile with the inliner enabled (“-opt:l:inline”, “-opt-inline-from:**”).

- If your workload mainly comprises calling DBs, REST services, or, generally, long computations, avoid Future and use more efficient and optimized effect systems like IO or ZIO. Also, use the most readable FP-ish methods for error handling. In my case, that would be EitherT[IO] or ZIO’s bifunctor. Obviously, you have to think about context shifts to control blocking and fairness, but at least you control them fully. Future does not give you a choice, and it suffers when combined with EitherT.

- If you really have to live with Future, optimize for thread-pool utilization. Generally, that means you can’t rely on generic mechanisms like EitherT, as they’re not written with thread pools in mind.

- Forget about exceptions. They do not seem to have any performance advantages (but they can have disadvantages if you throw them a lot), and you lose composability. I’d reserve exceptions for system failures (good thing that all the effect systems catch them) and use Either for logical errors.

- Do not trust my benchmarks. Make your own. And if they’re interesting, I will post them here. 🙂

- If you see any stupid things, please leave a comment.

- If you have some extra insights, please comment as well. 🙂

- If you’re interested in more benchmarks (e.g., measuring long-running effects), please let us know.
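For the first recommendation, an sbt build could pass the inliner flags through `scalacOptions`. This is a sketch assuming sbt and a Scala 2.12/2.13 compiler, using exactly the flags quoted above; newer compiler releases may spell the optimizer options differently, so check the documentation for your version:

```scala
// build.sbt: enable the Scala 2 optimizer's inliner for the whole project.
// "-opt-inline-from:**" allows inlining code from any class, including
// dependencies, which bakes their implementation into your bytecode.
// That is why it suits applications but not published libraries.
scalacOptions ++= Seq("-opt:l:inline", "-opt-inline-from:**")
```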

13 Comments

Great article!!

But after reading everything, I got confused about @noinline. Shouldn’t inlining always improve performance? Why didn’t it happen in this case?

Best!

Hey, thanks a lot! I used @noinline because I wanted to measure the effects of the tagless-final style more precisely. Letting the inliner do its job could lead to a method being inlined at the call site where the exact effect type is known and not polymorphic, whereas I wanted exactly the opposite. So this is the only reason I used noinline. Hope it makes sense.

Got it!! Makes a lot of sense now. You didn’t want the compiler to inject any bias into the benchmark.

Besides, it’s great to see how methods called on monad (map, flatMap, etc) were completely erased from execution.

Again, great article dude!! Keep on! 🙂

Thanks so much for your kind words!

The ZIO Benchmark is invalid because it doesn’t disable ZIO Stacktraces. They’re turned on by default due to being extremely useful (as you might imagine), but result in a performance degradation of about 2.4x at worst. The runtime should be created with `.withTracing(Tracing.disabled)` to disable them:

```scala
val runtime = new DefaultRuntime {
  override val Platform = PlatformLive.Default.withReportFailure(const(())).withTracing(Tracing.disabled)
}
```

I think tracing is not present in the version used in the benchmarks. Someone on Reddit told me that they introduced this feature in RC5 and I’m on RC4. Can you confirm it?

Yes, I stand corrected!

Awesome article!

Thanks for your work!

It would be interesting to see benchmarks with bigger monad stack like ReaderT[StateT[EitherT[Option[…]]]] vs popular effect monads.

Regards!

Thanks a lot! I’m going to prepare benchmarks for long-running effects where you’ll find deeper MT stack – good to know people are interested! To be honest, JDG himself suggested that 🙂

Hi,

I like this article a lot. It’s so cool! Could you please share your code?

Yeah, sure, it’s at https://github.com/theiterators/error-benchmarks

Nice work, Marcin! It would be interesting to see the improved Scala 2.13 Future performance tested.

I am interested to see the benchmark which compares Either vs Exception with 0% failures, 0% invalid. So that we can see the overhead of Either. The use case for this is for a microservice where external service is available 99.999% of the time and returns the correct result.