Big data is huge.

We are generating 2.5 quintillion bytes of it daily and that number is only getting bigger.

So, why is everybody talking about the big data hype being over?

Because there are only a handful of companies that deal with the really BIG data.

But you don’t have to be Facebook or Google to take advantage of big data technologies.

As a matter of fact, big data technologies are still one of the best tools you can use to optimize your business, even if your data load is medium rather than big.

So, how do you do that?

With tons of available tools, choosing the right thing can be quite a challenge.

To give you an idea of what you should be looking for, we’ve put together a clear and comprehensive rundown of the most essential big data technologies for 2024.

In this article you’ll find information on:

- How to get started with big data technologies.

- How to apply them to your business needs.

- Which big data technologies are the most promising.

Already know you need a big data solution for your business? We can help! At Iterators, we design, build, and maintain custom software solutions for your business.

Schedule a free consultation with Iterators today! We’d be happy to help you decide which big data solution is right for you so you don’t have to worry about it.

Big Data Technologies – How to Get Started

So, you’re looking for a big data solution and you’re not sure where to start?

No wonder! The industry goes pretty deep.

There are many big data technologies that power countless tools and products. New innovations are constantly discovered and the edge is moving quickly.

Fortunately, there are a couple of “battle-tested” tips for those who seek to expand their businesses using big data technologies.

Here are some tips on how to get started.

1. Make sure your decision is driven by your business needs.

When implementing big data technologies, you should make a business-driven decision, not an IT one.

Start by analyzing and understanding your business needs and look for solutions that fulfill them. Most successful big data implementations are a result of building an infrastructure that serves a specific purpose, rather than being a technological novelty.

At this stage, you’ll want to look at the main benefits of big data technologies and tools:

- Growth Potential Identification

- Real-time Business Monitoring

- Fraud Prevention

- Operation Optimization

- Resource Management Improvement

- Effective Management of Large Data Sets

- Handling Varied Data Sources

- Data-based Business Insights

If you find any one of those things to be important for your business, then you’ve got a good case for a big data solution.

Here’s an example:

PROBLEM

I need to grow my business.

BIG DATA TECHNOLOGY RESULTS

Grow your business by selling more items that are similar.

FINAL SOLUTION

Big Data Technology + Recommender System
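To make the "selling more items that are similar" idea concrete, here is a minimal sketch of one very simple approach: counting which items are bought together. The order data and the function names (`build_co_occurrence`, `recommend`) are made up for illustration, and a production recommender would normally use far more data and a more robust similarity measure.

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical purchase history: one set of item names per order.
ORDERS = [
    {"tent", "sleeping bag", "camp stove"},
    {"tent", "sleeping bag"},
    {"camp stove", "gas canister"},
    {"tent", "headlamp"},
    {"sleeping bag", "headlamp", "tent"},
]


def build_co_occurrence(orders):
    """Count how often each pair of items appears in the same order."""
    counts = defaultdict(lambda: defaultdict(int))
    for order in orders:
        for a, b in combinations(sorted(order), 2):
            counts[a][b] += 1
            counts[b][a] += 1
    return counts


def recommend(item, counts, top_n=2):
    """Return the items most often bought together with `item`."""
    neighbours = counts.get(item, {})
    # Sort by descending count, then by name so ties are deterministic.
    ranked = sorted(neighbours.items(), key=lambda kv: (-kv[1], kv[0]))
    return [name for name, _ in ranked[:top_n]]


counts = build_co_occurrence(ORDERS)
print(recommend("tent", counts))
```

With this toy data, customers who buy a tent most often also buy a sleeping bag, so that is the first suggestion the sketch returns.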

Another way to figure out if you need to leverage big data is to look at use cases. There are many applications for big data technologies across the board.

Want to know more about recommender systems? We’ve got just the right thing for you! Check out An Introduction to Recommender System (+9 Easy Examples)

2. Assess your data requirements.

To choose the right big data solution for your business, you need to have a clear view of your current situation.

What does your data flow look like?

What type of data are you processing?

How much data are you processing?

These are all important questions that you need to answer before you go shopping for big data technologies.

So, let’s take a closer look at the things you’ll need to assess.

Where is all of your data coming from?

It’s important to have a clear picture of what your data flow looks like. You want to know where your data is coming from and where it’s going because it might influence the type of solution you’ll need.

For example:

If you’re building autonomous vehicles or IoT devices, you’re going to be dealing with a lot of sensor data. In that case, a solution like edge computing makes sense because it allows for the preprocessing of important data near the place of origin.

And where does it originate?

Well, pretty much everywhere.

Since the world has become so digitized, most of our actions leave some kind of data trail.

That said, let’s take a look at the biggest data sources:

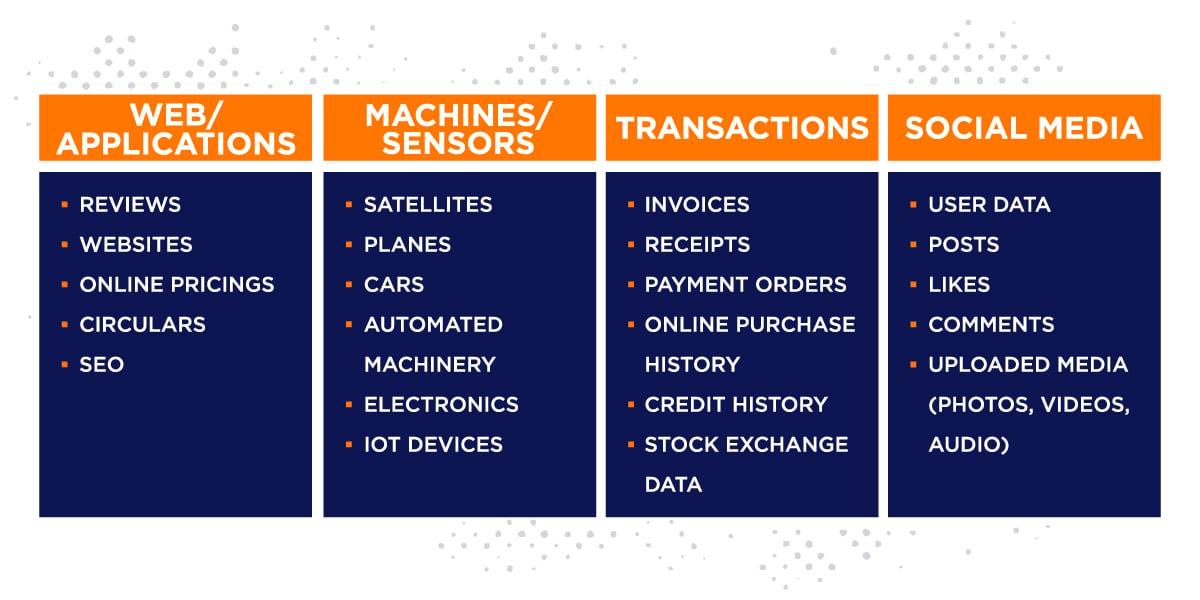

Web/Applications – The Internet is a whole universe of data waiting to be scraped, mined, and processed with big data technologies. Things like online reviews, website content, pricing lists, and SEO results are all examples of valuable data that you can use.

Machines/Sensors – A big chunk of the datasphere is operational data coming from sensors built into IoT devices, satellites, automated machinery, and electronics.

Transactions – Another huge big data source is the trillions of daily transactions people make. That includes stock exchange data, invoices, receipts, payments, orders, online purchase history, and credit history.

Social Media – With 3 billion users worldwide, it’s no wonder that a huge share of the data generated today comes from social media. That includes all the user data, posts, likes, comments, and uploaded media like photos, videos, and audio.

How much data is coming in?

So, how do you know you’re processing enough data to invest in big data technology?

Well, it’s tricky. There is no rule that says if you process more than “x” amount of data, you’re eligible for a big data solution.

As a matter of fact, most companies aren’t processing extreme amounts of data like Google, Facebook, and Amazon.

Fortunately, that doesn’t mean you can’t still benefit from using certain big data technologies. That’s why medium data has been the new hot topic in recent years.

But how do you know if your data is even “medium?”

The general rule of thumb here would be:

If your data volume is too big or moves too fast for traditional databases and software techniques to handle it, you’re due for a big data upgrade.

When assessing your data requirements, the concept of the 3 V’s can also be helpful in determining the characteristics of your data flow.

What are the 3 V’s of big data?

The three V’s are Volume, Variety, and Velocity. And they describe the three main properties of big data:

- Volume – Volume is the key property defining big data. You can have “big data” or “medium data,” and there’s no hard threshold between them. As a rough guide, though, if you’re not processing more than hundreds of terabytes of information – you’re probably closer to “medium.”

- Variety – Big data comes in many shapes and forms. Large data sets include multiple formats such as images, audio, video, text, or spreadsheets. Even if you’re only mining, let’s say, online reviews as text documents – your data will change its original form as it’s being processed.

- Velocity – Data velocity is the speed at which data is created, gathered, and shared. That includes the capacity for collecting information at varying speeds in real time. (Your data might be coming in batches or in a continuous stream.)

Here’s an example:

Facebook’s user profile database contains records of an astounding 2.4+ billion user profiles. That includes a variety of data such as photos, videos, comments, posts, likes, and telephone numbers. It is also in a constant state of expansion, as thousands of new users register every day.

Side note: You might also encounter definitions describing the five V’s of big data. The extra V’s are Veracity and Validity, which relate to the quality and credibility of the data.
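If you want a quick feel for your own three V’s, a back-of-the-envelope calculation is often enough. The sketch below is only an illustration: the event rate, the average event size, and the list of formats are made-up inputs, and `describe_flow` is a hypothetical helper, not part of any tool.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def daily_volume_gb(events_per_second, avg_event_bytes):
    """Estimate raw data generated per day, in gigabytes (10**9 bytes)."""
    return events_per_second * avg_event_bytes * SECONDS_PER_DAY / 1e9


def describe_flow(events_per_second, avg_event_bytes, formats):
    """Summarize a data flow in terms of volume, velocity, and variety."""
    return {
        "volume_gb_per_day": round(daily_volume_gb(events_per_second, avg_event_bytes), 1),
        "velocity_events_per_second": events_per_second,
        "variety_format_count": len(set(formats)),
    }


# Assumed inputs: 5,000 events per second, about 2 KB each, in three formats.
profile = describe_flow(5_000, 2_000, ["json", "csv", "jpeg", "json"])
print(profile)
```

Under these assumptions the flow comes to 864 GB per day, which is roughly 315 TB per year. That is the kind of number you can compare against what your current database setup handles comfortably.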

What types of data are you handling?

The answer – all of it.

That is, if you intend to use big data technologies for data analytics.

During the processing stages, your data will likely transform back and forth, even if only for the sake of data visualization.

So, what are the types of big data?

We can distinguish 3 main types based on the level of processing they went through.

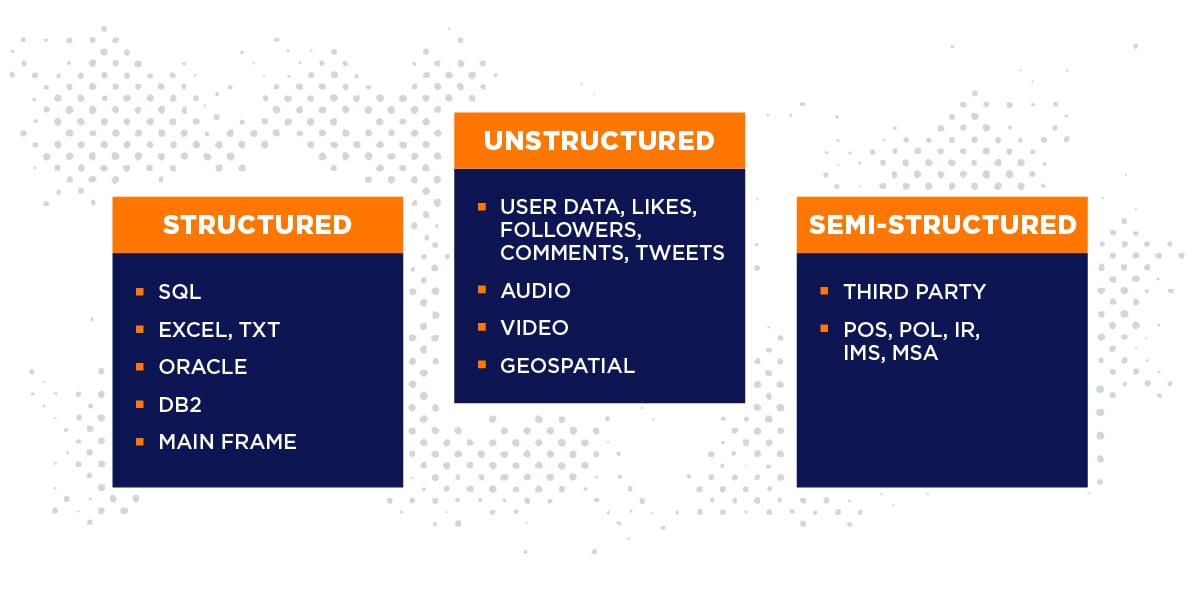

- Structured – Organized and processed data stored in tables or text. Accessible and ready for operational use.

- Unstructured – Raw, unprocessed data. Referring to data sets that contain information that isn’t organized or processed. You’ll have difficulty obtaining insights from it.

- Semi-structured – Data that carries some organizing markers, such as tags, but doesn’t follow a fixed schema (JSON and XML files are typical examples). Some of the information is tagged and partially segregated, but the data is not fully operational and organized.
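The difference between the three types is easiest to see when you try to get an answer out of each one. Here is a small sketch using only Python’s standard library. All of the sample data is invented for illustration.

```python
import csv
import io
import json

# Structured: fixed columns, every row has the same shape.
structured = io.StringIO("order_id,amount\n1001,59.90\n1002,12.50\n")
rows = list(csv.DictReader(structured))
total = sum(float(row["amount"]) for row in rows)

# Semi-structured: fields are tagged, but records may differ in shape.
semi_structured = '[{"user": "ann", "tags": ["vip"]}, {"user": "bob"}]'
records = json.loads(semi_structured)
users_with_tags = [r["user"] for r in records if r.get("tags")]

# Unstructured: free text; any structure has to be extracted first.
unstructured = "Great tent, but the zipper broke after two trips."
mentions_problem = any(word in unstructured.lower() for word in ("broke", "refund"))

print(total, users_with_tags, mentions_problem)
```

Notice how the effort grows: the structured data can be summed right away, the semi-structured data needs a check for missing fields, and the unstructured text needs some kind of interpretation (here, a crude keyword match) before it tells you anything.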

Establishing what type of data you’re going to be dealing with can influence your choice of big data technologies and tools, but it shouldn’t be the only factor you take into consideration.

Here’s an example:

You’re handling a lot of structured data in the form of Excel and text files. Your data load is starting to exceed your storage capabilities.

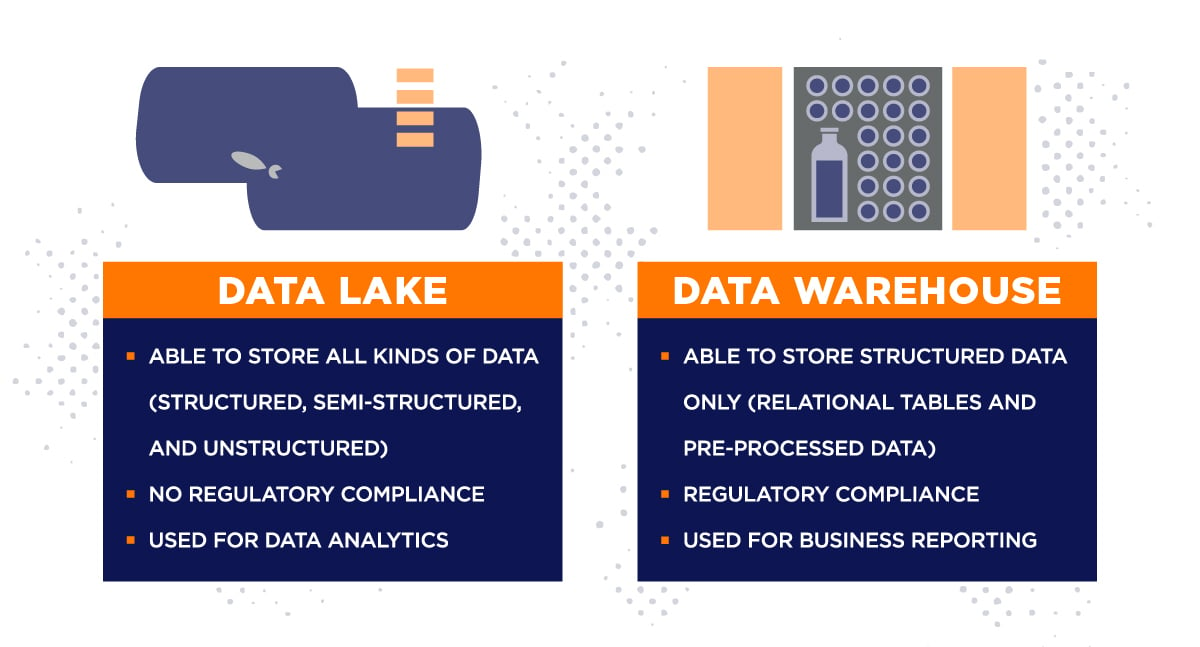

Big data technologies like data lakes and data warehouses are a solution, but their functionality differs slightly.

In that particular case, you might want to look closer at data warehousing, as it serves better as a storage solution for highly structured data.

3. Hire the right people for the job.

This might sound like a truism, but let’s stick with it for a moment.

Big data technologies are at the very forefront of technological innovation. These solutions are often layers of sophisticated technologies working as an ecosystem. As such, big data projects can get very complex and demanding. Not to mention – expensive.

That’s why you need to carefully think through the execution process.

And it can go one of two ways.

You either hire your own team. Or you outsource.

Of course, each has its pros and cons. And you should definitely consider them before choosing the right solution for your business.

Outsourcing can often seem like the best option. And for a lot of smaller and mid-range businesses – it is. But you should know that it’s not free from drawbacks, especially if you don’t do your due diligence beforehand.

PROS

+ Cost effective. Quite obvious, but also very important. You should know that employing a team of data scientists is expensive. Outsourcing your project will save you from having to pay many extra expenses like payroll taxes.

+ Expertise. Hiring a software development company means immediately hiring experience and talent. And that means the implementation of big data technologies may go smoother and faster, with fewer mistakes.

+ No recruitment process. The hiring process is limited to choosing the right software development company. That means fewer meetings, less negotiation, and extra time for you to focus on other things.

+ Less need for oversight. Obviously, you still need to keep tabs on your project with outsourcing. But with far fewer people to manage, it’s a lot easier.

CONS

– Legal issues. The last thing you want is a legal battle over a loosely defined contract. Make sure all the key aspects of the deal are addressed and the timeline for execution is clear.

– Data security. You’re going to need to trust an outside company with your data. So, you should be very careful about how it’s handled. Take legal precautions and make sure your contract is set up right in case of a data leak.

– Communication. The poor exchange of information between a client and a company is a recipe for disaster. Stay engaged and on top of your project. Make sure you track progress and that you’re up to date with development.

Assemble your own team. It’s a challenge to find the right group of dedicated experts and create a collaborative environment. That’s why hiring your own team is recommended for bigger businesses that need complex, long-term big data solutions. It also works for those who know exactly who and what they want.

PROS

+ Control. Hiring your own team gives you complete control over all the aspects of your project. You have unlimited and instant access during all stages of development. You also have the final say-so.

+ Security. Hiring your own team can be a safer option if your data is sensitive. After all, outsourcing involves the risk of a data breach that you can’t control.

+ Independence. Having your own team gives you more independence. You can decide who does what. You set your own timeline. And you’re not dependent on the functioning of another company, which can mean fewer delays and management errors.

CONS

– Expensive. The cost of hiring a whole team of data analysts and engineers is a big one. Glassdoor estimates an average salary of a data engineer in the US to be $102,864 a year.

– Recruitment. Big data technologies are booming, and the demand for experts is high. Finding a team of solid specialists can prove to be a lengthy and demanding process, even with a solid HR department at your disposal.

– Time-consuming. Managing the implementation of big data technologies is a full-time job. Unless you have a competent and experienced project manager, you can count on staying busy supervising a big data project.

Note: Remember that hiring for IT requires technical proficiency. If you’re not a technical person – make sure you have one on your team. You can also hire a technical recruiter or an agency that specializes in hiring IT professionals to help you with the whole process.

Here’s a list of potential hires you should consider if you decide to assemble your own team of big data experts.

As you can see there are many steps you should take before you can decide on a perfect big data solution. That said, we can finally move on to the main part – analyzing the most essential big data technologies and tools you can use today.

Want to know more about making technical hires? We’ve got you covered! Check out our guide: How to Hire a Programmer for a Startup in 6 Easy Steps

7 Essential Big Data Technologies of 2024

Ok, so you’ve figured out the business side of things and you’ve analyzed your data flow. Now, it’s time to take a closer look at big data technologies and tools.

“With AI driving the future of data collection, RAG models and embedding-based databases are becoming essential. These technologies enable efficient search and summarization, turning massive datasets into actionable insights for AI-driven business optimization.”

Jacek Głodek, Founder, Iterators

What is Big Data Technology?

Big Data Technology is a type of software framework created to extract, analyze, and manage information coming from very large data sets. It is used whenever traditional data-processing software can’t handle the size and complexity of the provided data.

You can categorize big data technologies based on their purpose and their functionality. As such, we can distinguish four fundamental categories:

- Data Storage

- Data Mining

- Data Analysis

- Data Visualization

That said, we can move to the big data technologies list, which features the most notable solutions on the market as of 2024.

So without further ado, here they are:

1. Data Warehouse vs Data Lake

One of the problems with data management is the fact that data lives all over the place. Huge enterprises tend to store their data in many disparate locations. That can make data analysis and reporting pretty hard and time-consuming.

Fortunately, there are big data technologies that can help.

Two major big data technologies that are widely used for that purpose are the data lake and the data warehouse. It’s important to note that these terms are not interchangeable. Even though they’re both used to store data, they’re not quite the same thing.

So, what are they? And how are they different from each other?

It’s actually pretty simple.

A data lake is a large repository of raw data that is not structured or organized in any way. It is frequently a single store of all enterprise data. There are many “fish” in that lake, which is to say, it is a storage for all kinds of data, no matter what shape or form.

A data warehouse is more like a cold room where “fish” go after they’re segregated. The data is then ready for access and can be used reliably for statistical or data processing purposes. In other words, this storage solution is highly structured and functional.

Applications of Data Lakes and Data Warehouses

Obviously, you can use both big data technologies for storage. But there’s a little more to it.

Depending on the size of your enterprise, the amount of data you’re processing, and the profile of your business, you might want to choose one over another.

Here’s some information that should help you with that decision:

- Data lakes are a good fit for multi-access data analytics purposes, but they are not particularly effective if you need to get consistent results. That’s why this big data technology is used by data scientists rather than business professionals.

- Data warehouses, on the other hand, work great as reliable storage for data that was organized with specific processing purposes in mind. Here, consistency is key, and that’s why business professionals use data warehouses for reporting and business monitoring.
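To see the lake-versus-warehouse split in miniature, consider the sketch below. It is a toy model under clear assumptions: a temporary folder of JSON files stands in for the lake, an in-memory SQLite table stands in for the warehouse, and the events are invented. Real data lakes and warehouses run on dedicated platforms, not on a local folder and SQLite.

```python
import json
import sqlite3
import tempfile
from pathlib import Path

raw_events = [
    {"type": "order", "order_id": 1, "amount": 40.0},
    {"type": "review", "text": "Fast delivery!"},
    {"type": "order", "order_id": 2, "amount": 15.5},
]

with tempfile.TemporaryDirectory(prefix="lake_") as tmp:
    # "Lake": keep every event exactly as it arrived, whatever its shape.
    lake_dir = Path(tmp)
    for i, event in enumerate(raw_events):
        (lake_dir / f"event_{i}.json").write_text(json.dumps(event))
    lake_file_count = len(list(lake_dir.glob("*.json")))

    # "Warehouse": a fixed schema that only accepts cleaned order data.
    warehouse = sqlite3.connect(":memory:")
    warehouse.execute(
        "CREATE TABLE orders (order_id INTEGER PRIMARY KEY, amount REAL NOT NULL)"
    )
    for path in sorted(lake_dir.glob("*.json")):
        event = json.loads(path.read_text())
        if event["type"] == "order":
            warehouse.execute(
                "INSERT INTO orders VALUES (?, ?)",
                (event["order_id"], event["amount"]),
            )
    revenue = warehouse.execute("SELECT SUM(amount) FROM orders").fetchone()[0]

print(lake_file_count, revenue)
```

The lake happily holds the review next to the orders, which is handy for exploration. The warehouse only holds what fits its schema, which is exactly why a revenue report run against it gives the same answer every time.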

Tools Enabling Data Lakes and Data Warehouses

As far as implementation goes, there are software frameworks that provide the installation means for either one of these big data technologies.

For data lakes check out:

For data warehouses consider:

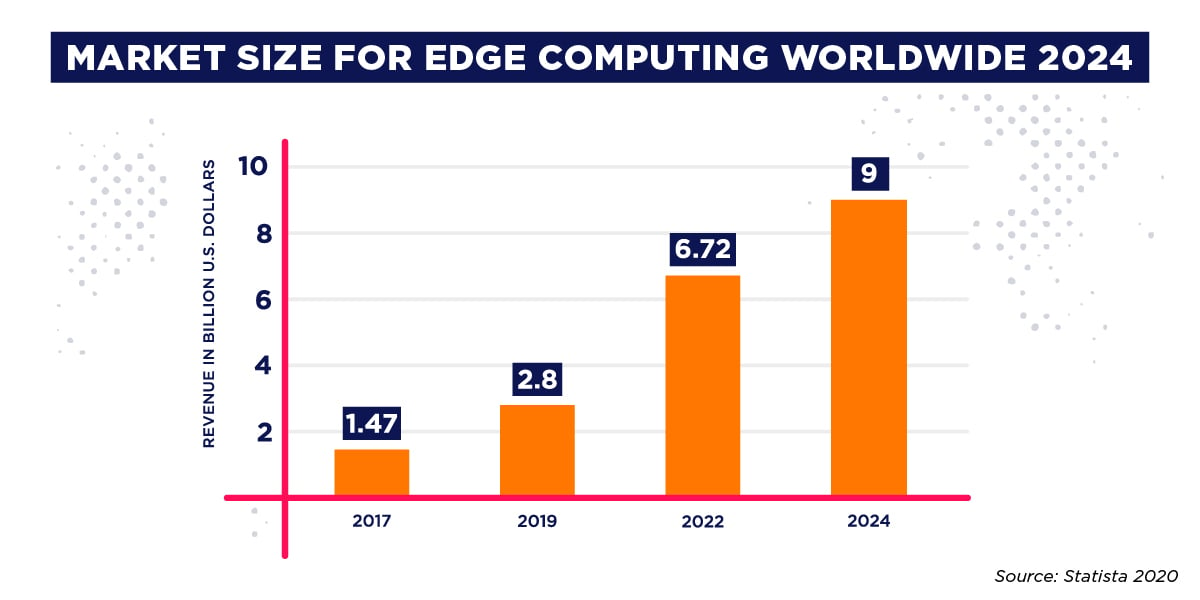

2. Edge Computing

The main objective of big data technologies is to enable fast and accurate data processing. And there are many ways to do that. But let’s look at one – edge computing.

What is edge computing?

Edge computing is a technology that involves processing data in close proximity to its source. This concept was developed to solve the latency and bandwidth issues related to managing sensor data coming from smart homes, autonomous cars, or IoT devices.

Edge computing is about processing as much data as you can locally, close to the network’s edge, instead of in the cloud. Reducing long-distance communication between the client and the server helps to decrease latency. It also brings a series of other benefits.
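A minimal sketch of that idea: instead of shipping every raw reading to the cloud, the edge device boils a batch down to a small summary and only forwards what matters. The temperature readings, the alert threshold, and the `summarize_on_edge` function are all assumptions made up for this example.

```python
from statistics import mean

TEMPERATURE_LIMIT = 80.0  # hypothetical alert threshold


def summarize_on_edge(readings, limit=TEMPERATURE_LIMIT):
    """Reduce a batch of raw sensor readings to a small summary message."""
    return {
        "count": len(readings),
        "mean": round(mean(readings), 2),
        "max": max(readings),
        # Only readings above the limit are forwarded individually.
        "alerts": [r for r in readings if r > limit],
    }


raw_batch = [71.2, 70.8, 72.1, 85.4, 71.0, 70.9]
message = summarize_on_edge(raw_batch)
print(message)
```

Here six readings shrink to one message with a single alert. On a real device the batch could be thousands of readings per second, which is where the bandwidth and latency savings come from.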

Benefits of Edge Computing

Out of all the big data technologies, edge computing is the one that facilitates sensor data processing the most.

How?

Let’s take a look:

- Better Performance: Limiting data analysis to the edge where the information originates significantly reduces latency. That means faster response times, plus it prevents your data from losing relevance.

- Secure: Edge computing involves distributing data across various devices, including sensors and cameras, and storing it in a local data center. Cloud computing, on the other hand, is centralized, which can make it a more attractive single target for cyberattacks.

- Cost Effective: Pre-analyzing data locally and filtering out the expendable parts helps reduce the costs of transporting and processing that data in the cloud.

- Reliable: Smart devices with built-in micro data centers are designed to operate in any environment. Storing the necessary data locally means fewer problems with cloud connectivity, which means better reliability.

- Scalable: Edge computing solutions are fairly easy to expand. By adding IoT devices and data centers, you don’t really have to worry about cloud storage.

Applications of Edge Computing

Compared to other big data technologies, edge computing is particularly useful in the IoT and smart devices sector. But is that all it’s good for? Let’s take a closer look.

- Autonomous Cars

Edge computing is extremely important for the development of fully automated vehicles. Since the safety of the passenger depends on the reliability of real time data processing, it has to be done flawlessly and locally.

- Smart Cities

A smart city is an urban area that relies heavily on many types of IoT devices that collect and process incoming data. As such, those devices use edge computing to manage resources and services efficiently. A good example is smart traffic lights.

- Gaming

Another use for edge computing is cloud gaming. With cloud gaming, some aspects of the game run in the cloud while the rendered video is transferred to lightweight clients such as mobile devices, VR glasses, etc. That type of streaming is also known as pixel streaming.

- Smart Homes

Like smart cities, smart homes also rely on big data technologies and IoT architecture. That includes devices like automated lights, kitchen appliances, surveillance cameras, video doors, music systems, and voice assistants. Edge computing provides the means for the seamless processing of that data without the risk of latency.

Tools Enabling Edge Computing

Edge computing involves working with a lot of sensor data. Processing it as close to its source as possible is essential. As such, these solutions tend to marry hardware and software.

So, edge computing solutions typically comprise:

- Edge Devices, Servers, and Clouds

- Communication Platforms

- Model Deployments and CDN

3. Predictive Analytics

Data analytics is one of the most fundamental aspects of big data technologies. And there are four main types of analytics that differ based on their level of sophistication: descriptive, diagnostic, predictive, and prescriptive.

Let’s focus on the last two, as they are the most significant and promising, as far as big data technologies are concerned.

What is predictive analytics?

Predictive Analytics is a big data technology that aims to make predictions about future events by analyzing patterns in existing data. Predictive analytics was created by combining achievements in various fields.

These fields include:

- Data mining

- Statistics

- Predictive Modelling

- Machine Learning

- Artificial Intelligence

By identifying potential relationships between information hidden in large data sets, predictive analytics provides the means for data-based business insights and enables a number of associated benefits.
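At its simplest, "analyzing patterns in existing data to predict future events" can be as small as fitting a trend line. The sketch below uses ordinary least squares on invented monthly sales figures. It is only meant to show the principle, since real predictive analytics typically relies on much richer models and far more data.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    return slope, mean_y - slope * mean_x


# Hypothetical history: units sold in months 1 through 6.
months = [1, 2, 3, 4, 5, 6]
units_sold = [110, 125, 139, 151, 168, 180]

slope, intercept = fit_line(months, units_sold)
forecast_month_7 = slope * 7 + intercept
print(round(forecast_month_7, 1))
```

The fitted line grows by about 14 units a month, so the sketch forecasts roughly 195 units for month 7.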

Benefits of Predictive Analytics

- Quick Business Insights: Predictive analytics is perfect for identifying patterns and correlations in large data sets. You can use those findings to base business decisions and solutions on factual data.

- Process and Performance Optimization: Predictive analytics is useful for the manufacturing and production industries. It allows for predictive maintenance, a technique that estimates the wear-and-tear on machinery and when repair might be needed.

- Improved Customer Experience: Predictive analytics is among those big data technologies that can give you a clearer picture of your customers. Want to know about their habits, needs, and expectations? Predictive analytics allows you to adjust your business accordingly and improve their overall experience.

- Risk Mitigation: Because predictive analytics is based on analyzing large historical data sets, it allows you to estimate the probability of an action failing or succeeding. That aspect of big data technology is great for the financial sector, particularly for credit scoring.

- Fraud Detection: Because predictive analytics is so good at monitoring and analyzing patterns of behavior, it’s good for fraud detection. You can immediately spot any irregularities or odd patterns and take action, preventing potential threats.

Applications of Predictive Analytics

Predictive analysis is among those big data technologies and tools that can work wonders if you provide enough data for analysis.

Let’s take a look at some of its most notable applications:

- Predictive Customer Analytics

This type of predictive analytics application helps you get new customers, grow your client base, and increase retention. Here’s a taste of what it allows you to do:

- Understand your customers better.

- Find out the best way to connect with them.

- Learn what actions to take to maximize their engagement.

- Predictive Operational Analytics

This type of analytics is mostly used in the production and manufacturing industries. It concentrates on increasing your operational performance and helping you manage your assets and operations better. Operational analytics allows you to:

- Reduce maintenance costs.

- Reduce operational costs.

- Optimize the distribution of your resources.

- Improve the efficiency of your processes.

- Predictive Threat and Fraud Analytics

Predictive analytics is also great if you’re looking for a solution that helps you protect your business from fraudulent activity. A predictive analytics big data solution can:

- Detect an information leak.

- Identify fraud – both company and customer fraud.

- Take actions to prevent threats.
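To give a flavor of how fraud analytics spots "odd patterns," here is a deliberately simple sketch that flags payments far outside a customer’s usual range. The payment amounts, the three-standard-deviation threshold, and the `flag_unusual` function are illustrative assumptions. Real fraud systems combine many more signals than a single amount.

```python
from statistics import mean, stdev


def flag_unusual(history, new_amounts, threshold=3.0):
    """Flag amounts more than `threshold` standard deviations from the historical mean."""
    mu = mean(history)
    sigma = stdev(history)
    return [a for a in new_amounts if abs(a - mu) > threshold * sigma]


# Hypothetical data: a customer's past payments and a batch of new ones.
past_payments = [42.0, 38.5, 45.0, 40.0, 41.5, 39.0, 44.0, 43.0]
incoming = [41.0, 39.5, 950.0, 46.0]

suspicious = flag_unusual(past_payments, incoming)
print(suspicious)
```

Only the 950.0 payment is flagged. The slightly higher 46.0 payment stays within the customer’s normal variation, so it passes.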

Tools Enabling Predictive Analytics

If you’re interested in implementing big data technologies like predictive analytics, there are a couple of things you should know.

- There are quite a few software frameworks that enable predictive analytics. Most of them are based on other technological innovations like AI and machine learning. If you want to get into specifics make sure you check out:

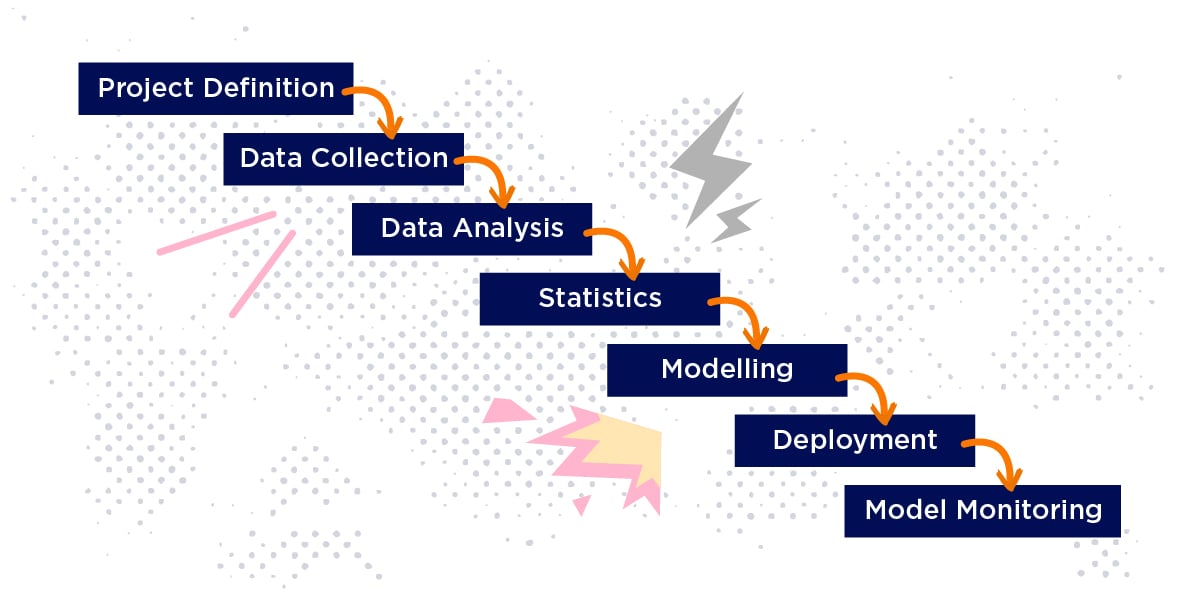

- There is a model of predictive analytics development you can follow. It includes 7 steps and looks like this:

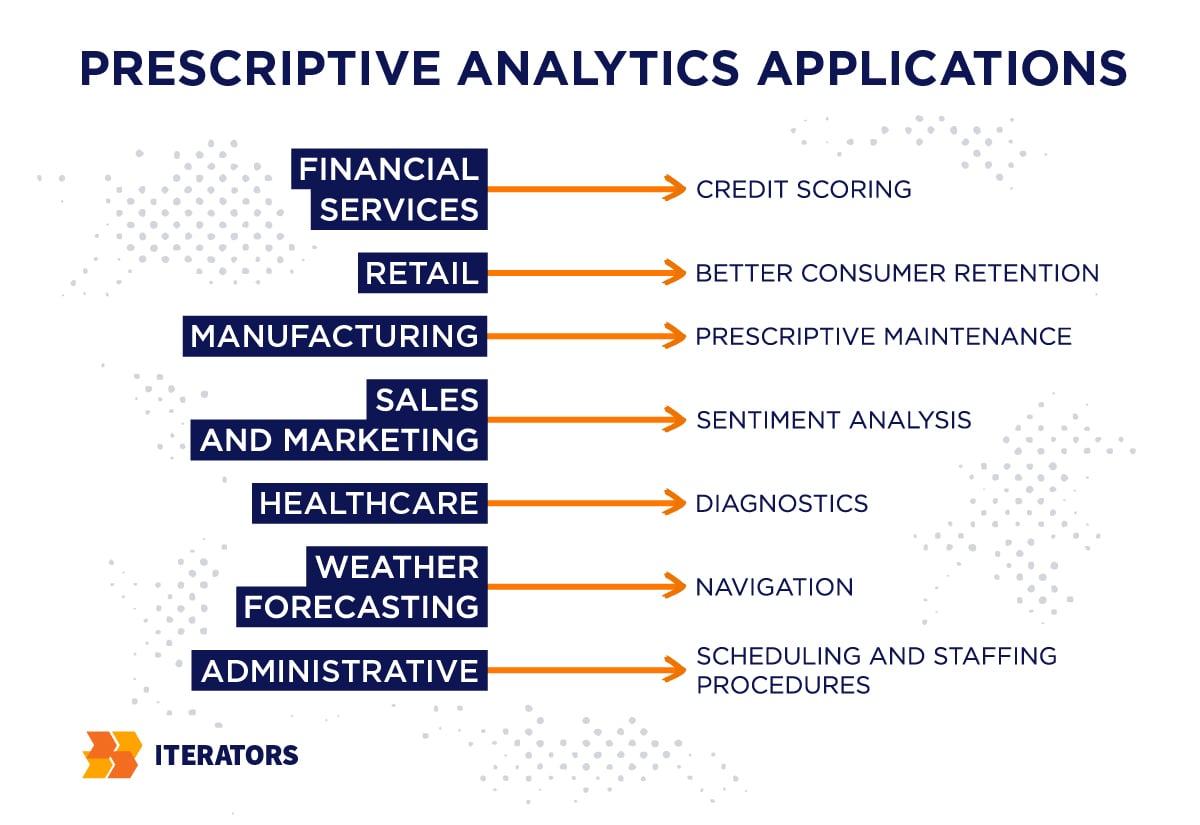

4. Prescriptive Analytics

Few people ever seem to wonder about solutions to problems that have yet to occur.

But those who developed prescriptive analytics sure did.

And they brought one of the most sophisticated big data technologies to life.

What is prescriptive analysis?

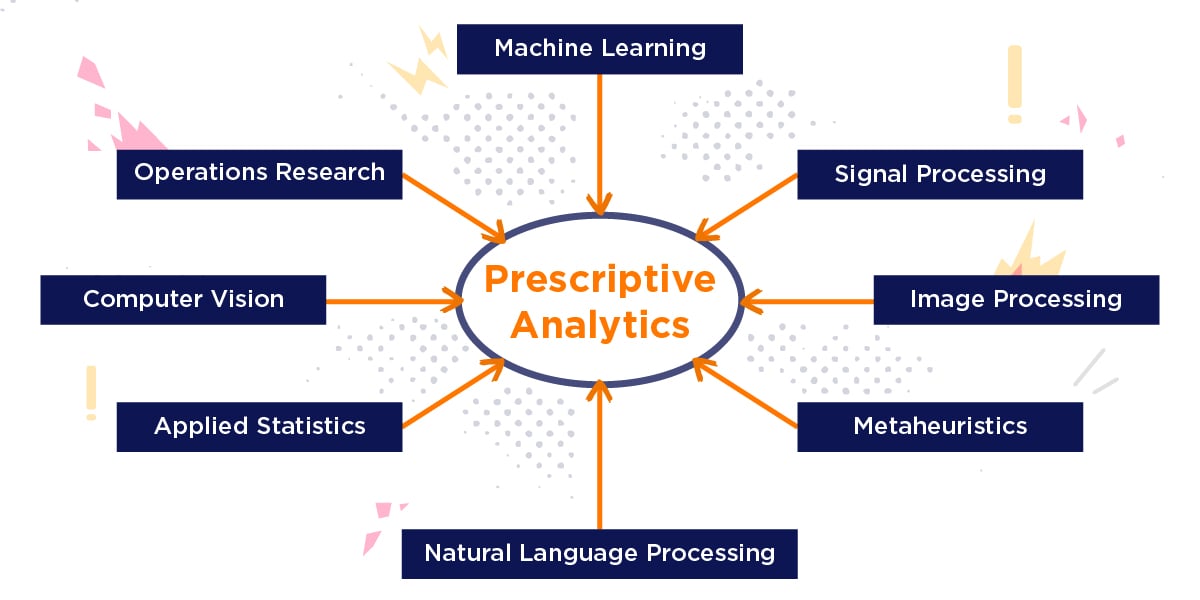

Prescriptive analysis is a big data technology that goes beyond predicting future outcomes. It provides information on when and why something is going to happen. Prescriptive analysis can also suggest potential solutions and strategies for dealing with a problem. That’s all based on historic data provided for analysis. The more detailed it is, the more accurate the “prescription.”
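The step from predicting to prescribing can be sketched in a few lines: take a forecast, evaluate each possible action against it, and recommend the best one. Everything in the example below is assumed for illustration, including the demand forecast, the unit cost and price, and the `prescribe` function.

```python
# Hypothetical forecast: possible demand levels and their probabilities.
DEMAND_FORECAST = {80: 0.2, 100: 0.5, 120: 0.3}
UNIT_COST = 6.0
UNIT_PRICE = 10.0


def expected_profit(order_qty, forecast=DEMAND_FORECAST):
    """Average profit over all demand scenarios; unsold stock is a pure cost."""
    total = 0.0
    for demand, probability in forecast.items():
        sold = min(order_qty, demand)
        total += probability * (sold * UNIT_PRICE - order_qty * UNIT_COST)
    return total


def prescribe(candidate_quantities):
    """Recommend the candidate action with the highest expected profit."""
    return max(candidate_quantities, key=expected_profit)


best_qty = prescribe([80, 100, 120])
print(best_qty, round(expected_profit(best_qty), 2))
```

With these numbers the sketch recommends ordering 100 units: ordering 80 leaves likely sales on the table, while ordering 120 ties up too much money in stock that may not sell.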

Prescriptive analytics wouldn’t be a thing, though, if it weren’t for a series of other innovations like machine learning or signal processing.

To give you an idea of how complex of a solution it is, let’s take a look at all the technologies that empower prescriptive analytics:

Benefits of Prescriptive Analytics

Both predictive and prescriptive analysis are relatively new big data technologies that offer similar benefits. What you need to remember, however, is that prescriptive analytics can take things a step further.

That said, let’s consider the advantages of using prescriptive analytics:

- Cost Reduction: There are a few ways prescriptive analysis can save you money. Most have to do with optimizing processes and performance. As such, the technology helps to identify unnecessary expenditures, improve resource management, and suggest the most optimal allocation of assets.

- Improved Customer Retention Rate: Prescriptive analytics is a great source of insight about your customers. By analyzing their habits, this technology learns to identify and anticipate behavior patterns, which, if acted upon, can lead to higher customer satisfaction and a better retention rate.

- Risk Mitigation: You will see “risk mitigation” listed a lot as a benefit of big data technologies, and it’s true. Just as it can anticipate human behavior, prescriptive analytics can anticipate threats and suggest solutions right away.

- Increased Profit Margins: Prescriptive analytics can tell you where to save money. But it can also tell you where to make more money. When applied to sales and marketing, prescriptive analysis becomes a powerful tool for maximizing efficiency and profit.

Tools Enabling Prescriptive Analysis

Prescriptive analytics is a fairly new innovation as far as big data technologies go. Therefore, businesses often implement it as a custom solution, tailor-made for a specific use case.

Effectively, that means using coding languages:

As far as ready-made solutions are concerned, IBM Decision Optimization offers quite an extensive package of products based on prescriptive analysis.

5. Stream Processing

The International Data Corporation estimates that by 2025, up to 30% of the data generated worldwide will be real time in nature.

No wonder. The digitized world is a place where things happen quickly.

And when things happen quickly, immediate actions are needed. So, if you want to give yourself a competitive edge and be ready to manage all that real time data, you should definitely look into stream processing.

What is stream processing?

Stream processing is a big data technology that focuses on managing real time data flow. Its primary use is monitoring and identifying threats or crucial business information passing through your data stream.

If you ever hear about big data technologies called:

- Event Stream Processing (ESP)

- Streaming Analytics

- Real Time Analytics

- Complex Event Processing (CEP)

They all refer to broadly the same thing – stream processing.

To understand how stream processing works, let’s consider an example:

Imagine you’re working a door at a club and Mr. Boss comes over and asks you:

“How many people wearing red hoodies did you let in today?”

That’s not a detail you were prepared to remember. So, to answer, you need to enter the club and count the people wearing red hoodies.

That’s an obvious uphill battle since the information you’re looking for (red hoodie) loses relevance very quickly – e.g., people can take hoodies off or new people can enter/leave.

So, what’s the solution?

And what does this have to do with big data technologies?

Well, for this particular example, a streaming analytics solution would manifest as a system of security cameras set to monitor all red hoodies coming and going. By looking at the data, our doorman knows exactly how many red hoodies are inside.

That’s the essence of streaming analytics: being able to continuously register and analyze a stream of incoming data in real time as it flows.
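The red hoodie example translates almost directly into code. In this minimal sketch, every door event updates a running count the moment it happens, so the answer is always ready. The event list and the `red_hoodie_counter` function are invented for the example, and a real stream would be unbounded rather than a short list.

```python
def red_hoodie_counter(events):
    """Yield the running number of red hoodies inside after each door event."""
    inside = 0
    for direction, hoodie_color in events:
        if hoodie_color == "red":
            inside += 1 if direction == "enter" else -1
        yield inside


# Hypothetical door events: (direction, hoodie color).
door_events = [
    ("enter", "red"),
    ("enter", "blue"),
    ("enter", "red"),
    ("leave", "red"),
    ("enter", "red"),
    ("leave", "blue"),
]

running_counts = list(red_hoodie_counter(door_events))
print(running_counts[-1])
```

The key design choice is that the counter never stores or re-reads the full history. It processes each event once, as it arrives, which is what lets stream processing keep up with data that never stops coming.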

Failure to extract the valuable insights in time can cause a range of undesirable outcomes – from lost business opportunities to undetected fraud. And nobody wants that.

Here’s how streaming analytics looks in practice:

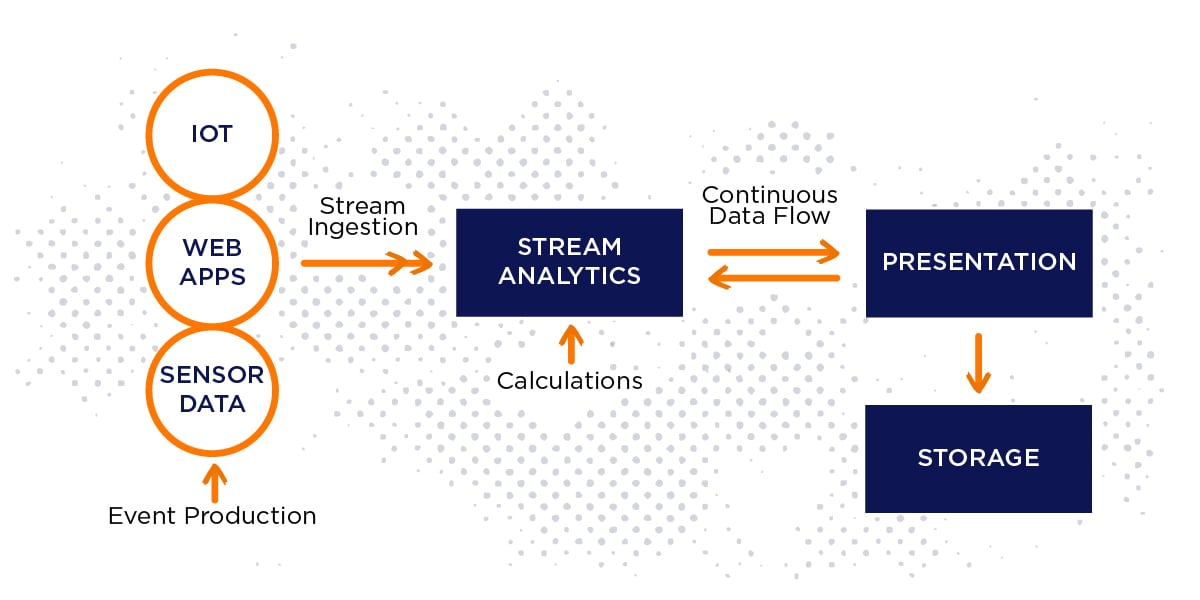

- Event Production: The process of streaming analytics starts with the event or occurrence that generates the data flow. It can be anything from a social media post or online transaction to a signal from a heavy machine sensor.

- Event Queuing & Stream Ingestion: At this stage, the information from all the sources is categorized before being ingested into the analytical layer.

- Stream Analytics: This is the processing stage where all the necessary calculations are done. The data is then sent to the presentation layer.

- Storage & Presentation: The last stage of the process. Here you can see the data in a clear, concise way. You can also find some historically valuable information in the data stream. Plus, it’s the final stage where you decide to store the data.
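The four stages above can be sketched as a tiny pipeline. This is a simplified, single-process illustration: the events are a short hard-coded list, the "queue" is a Python `deque`, and the analytics step counts events per 10-second window. Production systems would use a message broker and a stream processing engine instead.

```python
from collections import Counter, deque


def produce():
    """Event production: (timestamp_seconds, event_type) tuples."""
    yield from [(0, "click"), (3, "purchase"), (7, "click"),
                (11, "click"), (14, "purchase"), (21, "click")]


def analyze(queue, window_seconds=10):
    """Stream analytics: count event types per fixed-size time window."""
    results = {}
    while queue:
        timestamp, event_type = queue.popleft()
        window_start = timestamp // window_seconds * window_seconds
        results.setdefault(window_start, Counter())[event_type] += 1
    return results


queue = deque(produce())   # event queuing and stream ingestion
store = analyze(queue)     # stream analytics; results kept for storage
for window_start, counts in sorted(store.items()):   # presentation
    print(f"{window_start:>3}s:", dict(counts))
```

Grouping events into fixed windows like this is a common way to turn an endless stream into numbers you can chart, such as clicks and purchases per 10 seconds.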

Benefits of Stream Processing

So what would be the reason for implementing big data technologies that deal with the constant stream of data in real time?

Let’s take a look:

- Real-time Data Monitoring

- Better Fraud Detection

- Quick and Actionable Business Insights

- Maximized Efficiency

- Better Risk Management

Applications of Stream Processing

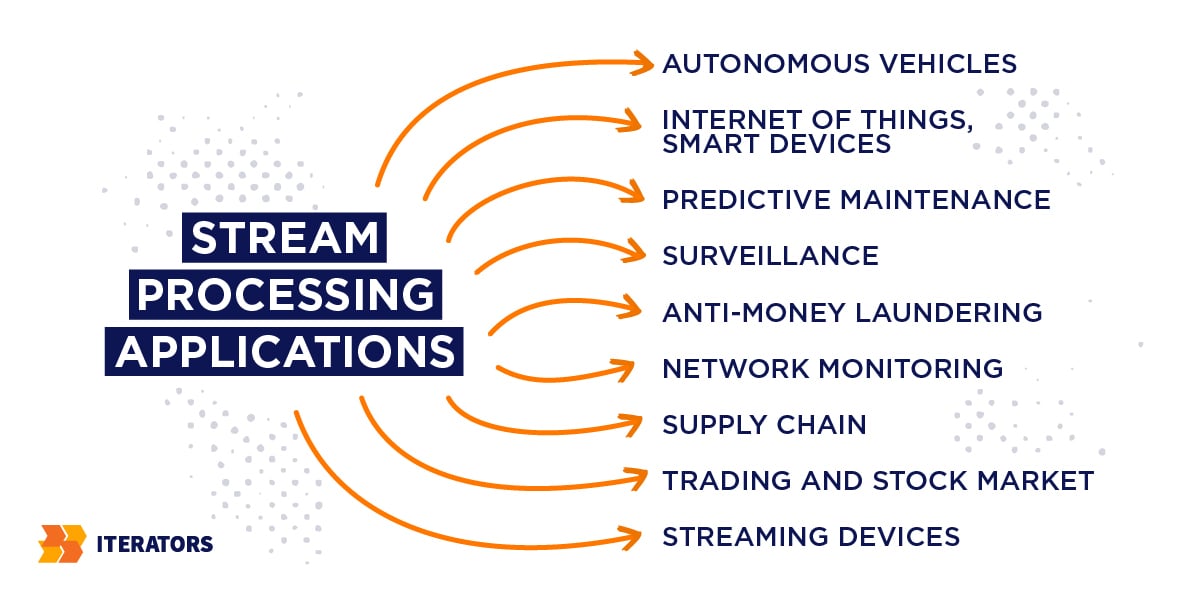

Whenever you need to process a large amount of data in real time, you’ll have a use for streaming analytics. Here’s a list of fields where stream processing already exists:

Tools Enabling Stream Processing

If you’re interested in tools that facilitate stream processing make sure you check out:

- Apache Kafka

- Apache Spark

- Apache Flink

- Lightbend Fast Data Platform

- Amazon Kinesis

- Azure Stream Analytics

- Google Cloud Stream Analytics

6. In-memory Computing

As you surely know by now, big data technologies are very heavy on computing resources.

So heavy that people eventually started to look for ways to speed up processing. And that’s where in-memory computing comes into the picture.

What is in-memory computing?

In-memory computing is a technique that involves processing data entirely within a computer’s memory – e.g., in RAM or Flash. You can boost your processing power by eliminating all slow data access points and using a cluster of computers that work in parallel and share RAM.

In-memory computing is often carried out through in-memory data grids or in-memory databases. These allow users to perform complex computations on large-scale data sets while maintaining high processing speeds.
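As a small-scale illustration of the in-memory database idea, the sketch below uses SQLite’s in-memory mode from Python’s standard library. The `orders` table and its values are made up for the example, and a single-process SQLite database is of course far simpler than the distributed in-memory systems described above.

```python
import sqlite3

# ":memory:" tells SQLite to keep the whole database in RAM, with no file on disk.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [("EU", 120.0), ("US", 80.0), ("EU", 30.0)],  # hypothetical sample data
)

# The aggregation runs entirely against data held in memory.
totals = dict(conn.execute("SELECT region, SUM(amount) FROM orders GROUP BY region"))
print(totals)

conn.close()  # the data is gone once the connection is closed
```

The trade-off is visible in the last line: data held only in memory disappears when the process ends, which is why in-memory systems usually pair RAM with some form of persistence or replication.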

Benefits of In-memory Computing

In-memory computing is among those big data technologies that facilitate data analysis by optimizing the performance of processing tools.

So what exactly does that mean?

Let’s find out:

- Lightning Fast Performance: When compared to traditional processing methods that involve reading and writing data on hard drives, in-memory computing provides much better performance.

- Easy to Scale: One of the coolest things about in-memory computing is how easy it is to scale. Whenever you need more processing power, you can scale horizontally by adding more machines, and with them more RAM, to the cluster. And with RAM prices dropping in recent years, this option is now economically viable.

- Enables Real-Time Insights: Thanks to significantly faster processing, in-memory computing allows for (near) real-time data analysis, which is one of the most sought-after aspects of data analytics at the moment.
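The scaling point above can be sketched in a few lines of Python. This is a toy model, not how any particular product works: the `InMemoryGrid` class is hypothetical, each “node” is just a dictionary in the same process, and adding a node triggers a naive full redistribution of keys. Real data grids generally use smarter partitioning schemes to move as little data as possible.

```python
import hashlib


class InMemoryGrid:
    """Toy in-memory data grid: keys are hash-partitioned across nodes."""

    def __init__(self, node_count):
        # Each dict stands in for the RAM of one machine in the cluster.
        self.nodes = [dict() for _ in range(node_count)]

    def _node_for(self, key):
        # Stable hash, so a key always maps to the same node for a given cluster size.
        digest = hashlib.sha256(key.encode("utf-8")).digest()
        return int.from_bytes(digest[:8], "big") % len(self.nodes)

    def put(self, key, value):
        self.nodes[self._node_for(key)][key] = value

    def get(self, key):
        return self.nodes[self._node_for(key)].get(key)

    def add_node(self):
        """Scale out by one node and redistribute all entries (naive rebalance)."""
        entries = [item for node in self.nodes for item in node.items()]
        self.nodes = [dict() for _ in range(len(self.nodes) + 1)]
        for key, value in entries:
            self.put(key, value)


grid = InMemoryGrid(node_count=2)
for i in range(100):
    grid.put(f"user:{i}", i)

grid.add_node()  # more capacity, same data
print(len(grid.nodes), grid.get("user:7"))
```

After `add_node()`, every key is still reachable through the same `get` call. That is the property that makes horizontal scaling transparent to the application.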

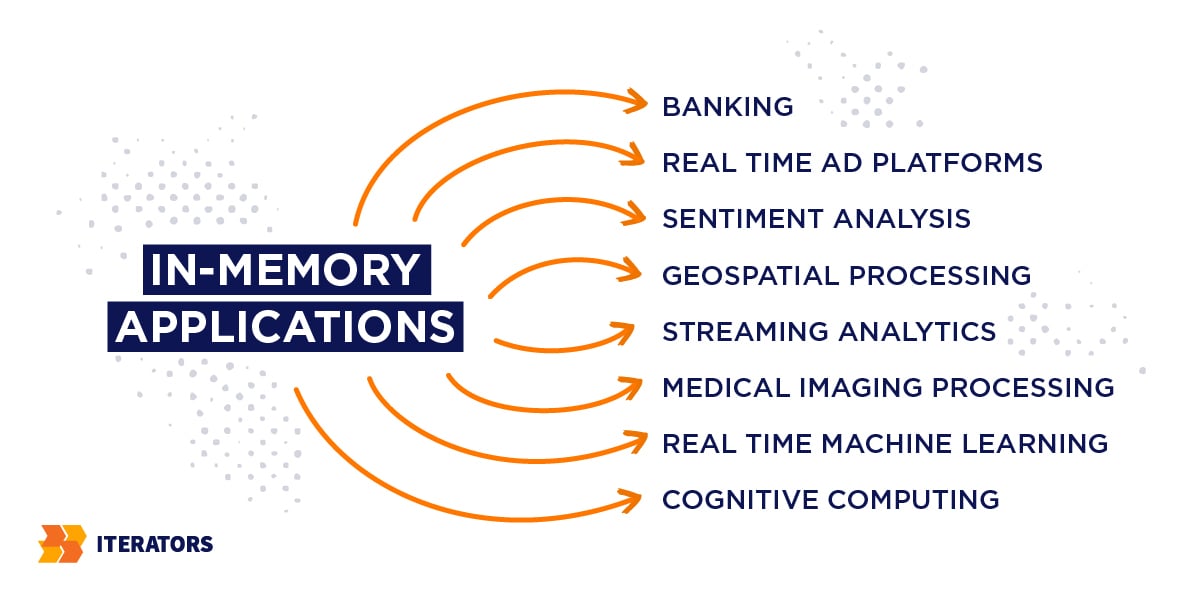

Applications of In-memory Computing

In recent years, in-memory computing has become one of the most sought-after big data technologies. Thanks to the benefits that it brings, many industries find useful applications for it. Let’s take a closer look at where you can apply in-memory computing:

Tools Enabling In-memory Computing

An effective in-memory computing implementation is usually a combination of software and hardware solutions.

As far as software is concerned, you can use tools like:

When it comes to hardware, you need to consider your processing needs. Needless to say, the more processing power you need, the more RAM and CPU you have to provision. Check out the Amazon EC2 High Memory instances for RAM solutions created specifically for in-memory databases.

7. NoSQL Databases

Even though NoSQL databases came into common use in the mid-2000s, they are still very much relevant. And that’s simply because there aren’t many other big data technologies that are as effective when it comes to handling enormous data sets.

What is a NoSQL Database?

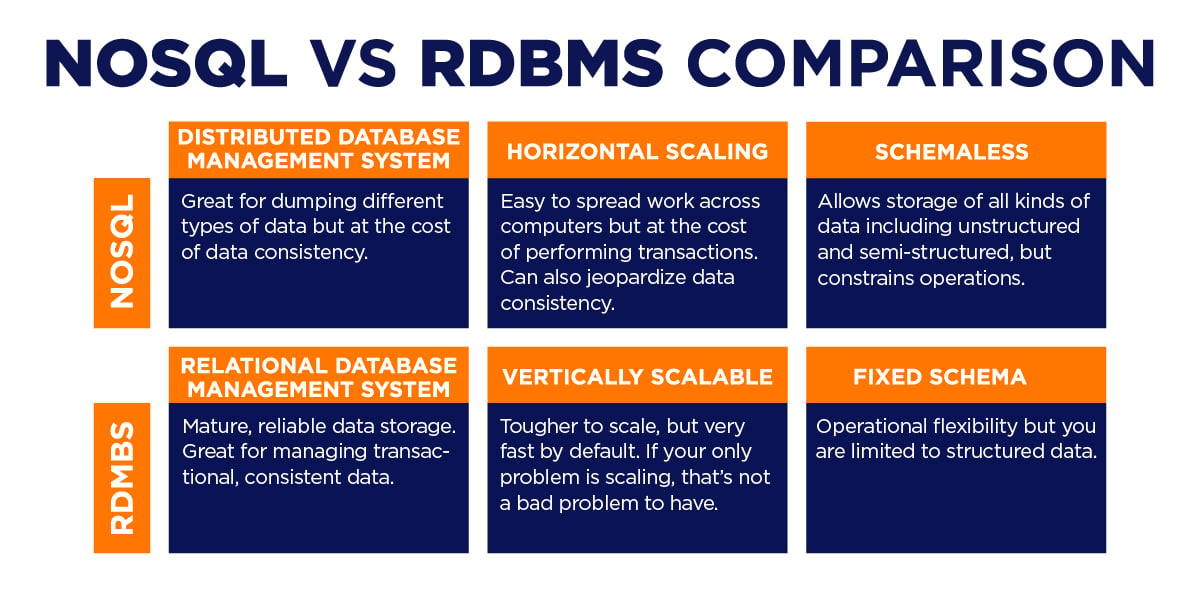

A NoSQL database is a type of non-relational database management system, usually distributed. The technology was specifically designed to deal with large quantities of varied data in real time. The name stands for “non SQL” or “not only SQL.” It refers to the fact that these databases don’t rely on the tabular, relational model that Structured Query Language (SQL) was built for.
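To show what “non-relational” and “varied data” can look like in practice, here’s a minimal sketch of a document-style store in plain Python. The `DocumentCollection` class and the sample records are hypothetical and only mimic the general idea. The sketch is not the API of any real NoSQL product, and it leaves out distribution entirely.

```python
class DocumentCollection:
    """Toy document store: records are free-form dicts with no fixed schema."""

    def __init__(self):
        self._docs = {}
        self._next_id = 1

    def insert(self, doc):
        doc_id = self._next_id
        self._docs[doc_id] = dict(doc)  # store a copy of the document
        self._next_id += 1
        return doc_id

    def find(self, **criteria):
        # Return every document whose fields match all given criteria.
        return [
            doc for doc in self._docs.values()
            if all(doc.get(field) == value for field, value in criteria.items())
        ]


users = DocumentCollection()
# Each document can carry different fields; no table definition is needed.
users.insert({"name": "Ada", "city": "London"})
users.insert({"name": "Linus", "city": "Helsinki", "languages": ["C", "Bash"]})
users.insert({"name": "Grace", "city": "London", "rank": "Rear Admiral"})

londoners = [doc["name"] for doc in users.find(city="London")]
print(londoners)
```

In a relational database, adding the `languages` or `rank` field would mean changing the table schema first. Here, each record simply carries the fields it has.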

In the mid 2000s, giant tech companies like Facebook, Amazon, and Google started to look for a more efficient way to process enormous data sets. That triggered a turn from relational databases, which were slow, hard to scale, and ineffective past a certain point. NoSQL databases came along as a much needed solution and became the new industry standard.

Let’s take a look at their properties and advantages in comparison with traditional, relational databases:

Applications of NoSQL Databases

As mentioned, NoSQL databases are an effective solution for storing large, varied data sets. NoSQL is a standard big data technology used by huge companies and in large-scale products like:

- Gmail

Tools Enabling NoSQL Databases

There is an abundance of software frameworks that enable NoSQL implementation. Some of the most popular ones include:

Conclusion

Big data technologies are a valuable addition to any business. They drive innovation and boost bottom lines in many different ways. Once you’ve found the solution that suits you best and started your project, there is no telling what extra benefits you might see.

One thing that’s certainly promising about big data is that it absorbs other innovations and makes great use of them. Technologies like machine learning and AI play a major part in big data development, and we can only see those getting more and more sophisticated.

With the constant innovation happening in the industry, it’s hard to predict where its rapid development will take us. But one thing is certain – the future holds a lot of data.
