Welcome to the world of deep learning, where algorithms learn to discern patterns and make decisions akin to human cognition. In recent years, deep learning has emerged as a transformative force across various industries, revolutionizing the way we approach complex problems and unlocking new possibilities previously unimaginable.

In this article, we explore the myriad applications of deep learning, delving into its fundamental concepts, real-world examples, and the profound impact it has had across diverse sectors. From healthcare to finance, autonomous vehicles to natural language processing, deep learning’s reach knows no bounds.

Join us as we unravel this powerful technology, uncovering its potential to reshape industries, solve puzzling challenges, and pave the way for a future driven by innovation and intelligence. Whether you’re a seasoned expert or a curious newcomer, prepare to be inspired by the boundless opportunities that deep learning brings to the table.

Need help implementing deep learning in your project? At Iterators, we can help design, build, and maintain custom software solutions for both startups and enterprise businesses.

Schedule a free consultation with Iterators today. We’d be happy to help you find the right software solution for your company.

Definition and Fundamentals

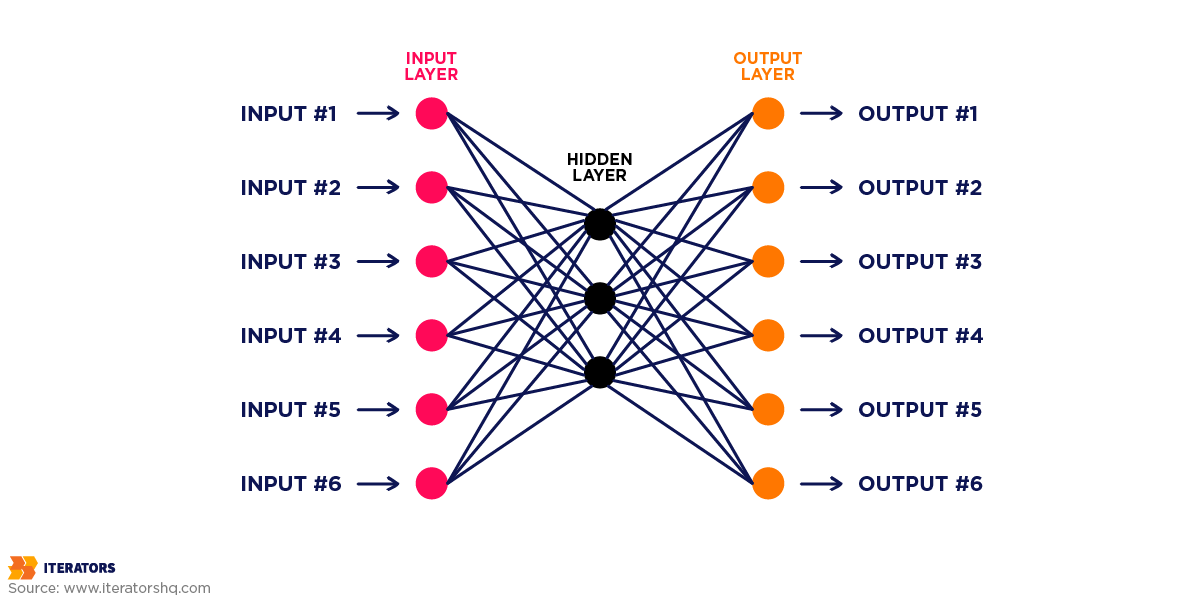

Central to deep learning is the concept of neural networks, hierarchical structures composed of interconnected nodes, or neurons, organized into layers. These networks learn from data through a process known as training, where they adjust their internal parameters to minimize errors and improve performance on specific tasks.

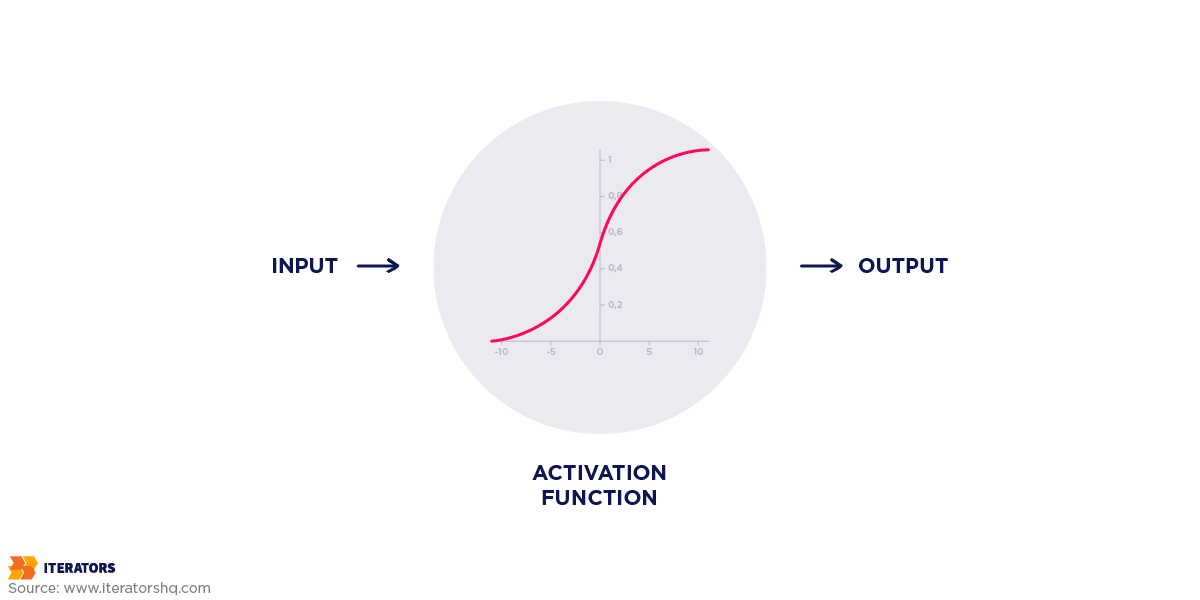

Key to the success of deep learning models are activation functions, which introduce non-linearity into the network, enabling it to learn complex relationships within the data. Additionally, input data preprocessing techniques, such as normalization and data augmentation, play a crucial role in ensuring optimal model performance.

Deep Learning vs. Traditional Machine Learning

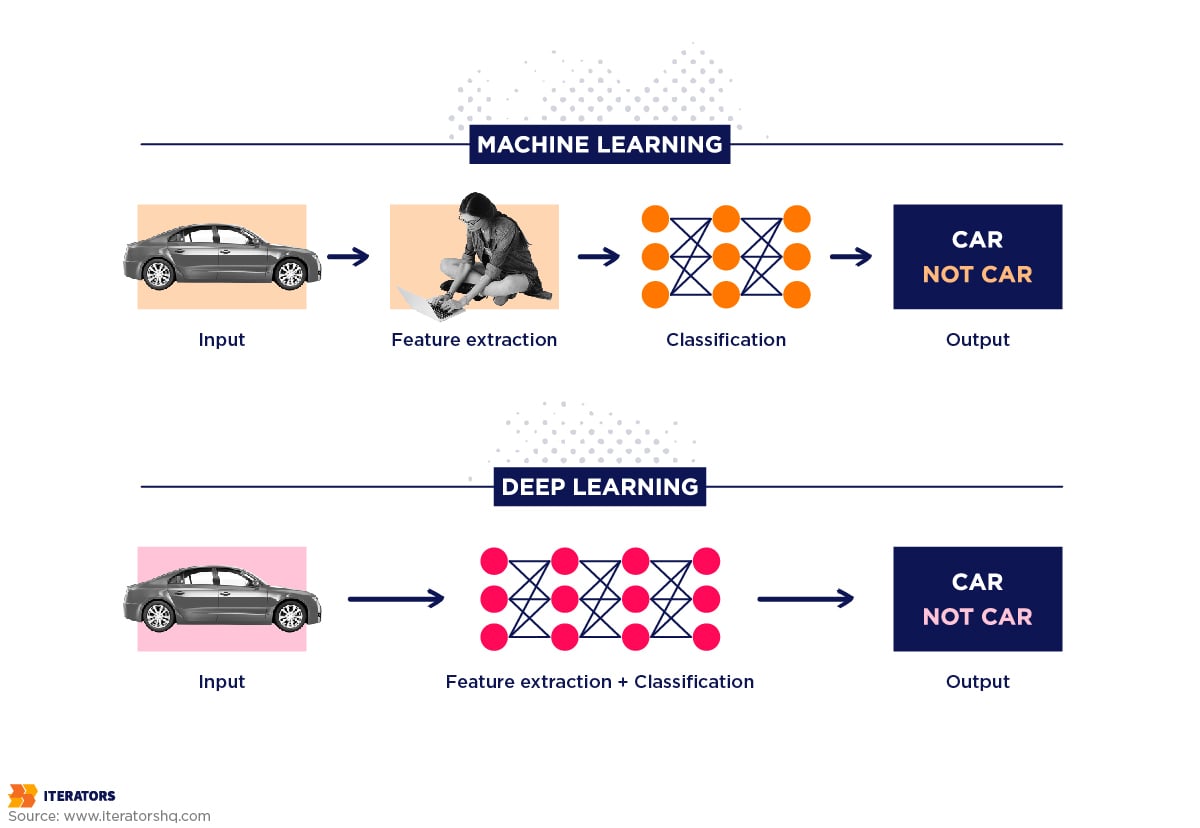

Deep learning represents a paradigm shift from traditional machine learning approaches, offering strong capabilities in handling large-scale, unstructured data and extracting intricate patterns. While both methodologies aim to train algorithms to make predictions or decisions based on data, they differ significantly in their underlying techniques and applications.

Traditional machine learning algorithms often rely on feature engineering, where domain experts manually extract relevant features from raw data to feed into the model. These algorithms typically perform well on structured datasets with well-defined features but struggle with unstructured data types, such as images, text, and audio.

In contrast, deep learning algorithms bypass the need for feature engineering by automatically learning hierarchical representations of data through layers of interconnected neurons. This enables them to extract complex features directly from raw data, making them particularly effective for tasks involving unstructured data, such as image and speech recognition, natural language processing, and time series analysis.

While traditional machine learning algorithms excel in scenarios where interpretability and transparency are paramount, deep learning models often trade off interpretability for performance, making them more suitable for tasks where accuracy and predictive power are of utmost importance.

By understanding the distinctions between deep learning and traditional machine learning, we gain insights into when and how to leverage each approach effectively, depending on the nature of the data and the requirements of the task at hand.

| | Deep Learning | Traditional Machine Learning |
| --- | --- | --- |
| Feature Engineering | Automated feature extraction | Requires manual feature engineering |
| Data Type | Works great with unstructured data | Suitable for structured data |
| Model Complexity | Complex, hierarchical structures | Simpler architectures |
| Interpretability | Performance is prioritized over interpretability | Usually more interpretable |
| Applications | Image recognition, Natural Language Processing (NLP) | Finance, Healthcare |

Key Components of Deep Learning Systems

To understand the inner workings of deep learning systems, it’s essential to delve into their key components, each playing a critical role in the model’s performance and functionality. From neural network architectures to activation functions and input data preprocessing techniques, these components form the backbone of deep learning algorithms.

Deep learning models learn from data, extract meaningful patterns, and make accurate predictions. We will now explore the fundamental building blocks of deep learning systems.

Neural Network Architectures

Neural network architectures serve as the foundation of deep learning models, defining their structure and determining how data flows through the network. One of the most common architectures is the feedforward neural network, consisting of layers of interconnected neurons where information moves in one direction, from input to output. These networks are adept at tasks like classification and regression.
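To make the one-directional flow concrete, here is a minimal sketch of a forward pass through a tiny feedforward network, written in plain Python so it has no dependencies. The layer sizes and the hand-picked weights are hypothetical and chosen only to keep the arithmetic easy to follow; a real model would learn them during training.

```python
def relu(values):
    """Zero out negative values, keep positive ones."""
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    """One fully connected layer; `weights` holds one row per output neuron."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def forward(inputs, layers):
    """Pass data through each layer in order; ReLU on hidden layers only."""
    activations = inputs
    for index, (weights, biases) in enumerate(layers):
        activations = dense(activations, weights, biases)
        if index < len(layers) - 1:
            activations = relu(activations)
    return activations


# Hypothetical network: 2 inputs -> 2 hidden neurons -> 1 output.
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, -1.0]),
    ([[2.0, 1.0]], [0.5]),
]
output = forward([3.0, 1.0], layers)
```

Information only ever moves from `inputs` toward `output`; nothing is fed back into an earlier layer, which is what distinguishes this design from the recurrent networks discussed in this section.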

- Convolutional Neural Networks (CNNs) are specialized architectures designed for processing grid-like data, such as images. They employ convolutional layers to extract features hierarchically, capturing spatial patterns with remarkable efficiency. CNNs have revolutionized image recognition tasks, achieving state-of-the-art performance in tasks like object detection and facial recognition.

- Recurrent Neural Networks (RNNs) are tailored for sequential data processing, making them ideal for tasks like natural language processing and time series analysis. Unlike feedforward networks, RNNs possess feedback connections, allowing them to maintain memory of past inputs. This enables them to capture temporal dependencies and context, facilitating tasks like language translation and speech recognition.

More recently, attention mechanisms have gained prominence in architectures like Transformers, enabling models to focus on relevant parts of input sequences. Transformers have demonstrated exceptional performance in tasks requiring long-range dependencies, such as language modeling and machine translation.
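As a rough illustration of how attention lets a model "focus", the sketch below implements scaled dot-product attention in plain Python. It is a simplified version under stated assumptions: real Transformers apply learned projections to produce queries, keys, and values and use several attention heads, while here the same toy token vectors are reused for all three roles.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    scale = math.sqrt(len(keys[0]))
    outputs, all_weights = [], []
    for query in queries:
        scores = [
            sum(q * k for q, k in zip(query, key)) / scale for key in keys
        ]
        weights = softmax(scores)
        # Each output is a weighted average of the value vectors.
        mixed = [
            sum(w * value[d] for w, value in zip(weights, values))
            for d in range(len(values[0]))
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical 3-token sequence with 2-dimensional embeddings.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)
```

Each row of `weights` shows how much one token attends to every other token, regardless of how far apart they are in the sequence. That is the property that helps with long-range dependencies.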

Activation Functions and Role

Activation functions are critical components of neural networks, introducing non-linearity into the network’s output and enabling it to learn complex relationships within the data. One of the most commonly used activation functions is the Rectified Linear Unit (ReLU), which sets negative values to zero and leaves positive values unchanged. ReLU is widely favored due to its simplicity and effectiveness in mitigating the vanishing gradient problem, which can impede training in deep networks.

Another popular activation function is the Sigmoid function, which squashes input values to a range between 0 and 1, making it suitable for binary classification tasks. However, Sigmoid functions suffer from the vanishing gradient problem, particularly in deep networks.

The Hyperbolic Tangent (tanh) function, similar to the Sigmoid function, squashes input values to a range between -1 and 1. Tanh addresses some of the limitations of the Sigmoid function but still exhibits vanishing gradient issues in deep networks.

Recent advancements have introduced novel activation functions like the Exponential Linear Unit (ELU) and the Parametric Rectified Linear Unit (PReLU), which aim to improve upon the shortcomings of traditional functions by addressing issues such as vanishing gradients and dead neurons.

The choice of activation function depends on factors such as the nature of the problem, the architecture of the neural network, and the desired properties of the model.
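The functions described in this section are short enough to write out directly. The sketch below uses only Python’s standard library; the ELU `alpha` and the PReLU `slope` defaults are illustrative assumptions (in PReLU the slope is normally a learned parameter rather than a constant).

```python
import math


def relu(x):
    """Negative inputs become 0, positive inputs pass through."""
    return max(0.0, x)


def sigmoid(x):
    """Squash the input into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def tanh(x):
    """Squash the input into the range (-1, 1)."""
    return math.tanh(x)


def elu(x, alpha=1.0):
    """Like ReLU for positive inputs, smooth and negative below zero."""
    return x if x > 0 else alpha * (math.exp(x) - 1.0)


def prelu(x, slope=0.25):
    """ReLU with a small slope for negative inputs (slope fixed here)."""
    return x if x > 0 else slope * x


def sigmoid_grad(x):
    """Derivative of sigmoid; it shrinks toward 0 for large |x|."""
    s = sigmoid(x)
    return s * (1.0 - s)
```

Comparing `sigmoid_grad(0)` with `sigmoid_grad(10)` shows the vanishing gradient issue in miniature: the slope is 0.25 at the origin and nearly zero once the input is large, so very little learning signal flows back through a saturated sigmoid.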

Input Data Preprocessing Techniques

Input data preprocessing techniques play a crucial role in the effectiveness and efficiency of deep learning models by ensuring that the data is in a suitable format for training and inference. One key preprocessing step is data normalization, which scales the input features to a standard range, typically between 0 and 1 or -1 and 1. Normalization helps prevent features with large scales from dominating the training process and ensures that the model converges faster.
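A minimal sketch of min-max normalization in plain Python follows. The function name and the handling of constant features are assumptions made for illustration; libraries offer equivalent, more complete tools.

```python
def min_max_scale(values, low=0.0, high=1.0):
    """Linearly rescale `values` so they span the range [low, high]."""
    smallest, largest = min(values), max(values)
    span = largest - smallest
    if span == 0:
        # A constant feature carries no scale information; map it to `low`.
        return [low for _ in values]
    return [low + (v - smallest) * (high - low) / span for v in values]


# Hypothetical feature measured on a large scale.
incomes = [10.0, 20.0, 30.0]
unit_range = min_max_scale(incomes)             # scaled to [0, 1]
symmetric = min_max_scale(incomes, -1.0, 1.0)   # scaled to [-1, 1]
```

In practice the minimum and maximum would be computed on the training set only and then reused for validation and test data, so that no information leaks from held-out examples.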

Another essential preprocessing technique is data augmentation, particularly common in image and audio processing tasks. Data augmentation involves applying transformations such as rotation, flipping, and cropping to increase the diversity of the training data. By augmenting the dataset, the model becomes more robust to variations and reduces the risk of overfitting.
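The transformations mentioned above can be sketched on a tiny image stored as a list of pixel rows. This is a dependency-free illustration; real pipelines typically rely on an image library and apply the transformations randomly at training time.

```python
def flip_horizontal(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]


def rotate_90_clockwise(image):
    """Rotate the image by 90 degrees clockwise."""
    return [list(column) for column in zip(*image[::-1])]


def crop(image, top, left, height, width):
    """Cut out a height x width window starting at (top, left)."""
    return [row[left:left + width] for row in image[top:top + height]]


# Hypothetical 2x3 grayscale image.
image = [
    [1, 2, 3],
    [4, 5, 6],
]
augmented = [
    flip_horizontal(image),
    rotate_90_clockwise(image),
    crop(image, 0, 1, 2, 2),
]
```

Each entry in `augmented` is a new training example derived from the same source image, which is how augmentation increases diversity without collecting more data.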

Techniques like feature scaling and dimensionality reduction can enhance the efficiency of deep learning models by reducing the computational burden and improving training convergence. Feature scaling ensures that all features contribute equally to the model’s learning process, while dimensionality reduction methods like Principal Component Analysis (PCA) extract the most informative features, reducing redundancy and noise in the data.

Preventing Overfitting with Regularization

Regularization methods are indispensable tools in the deep learning expert’s arsenal for combating overfitting, a common problem where the model learns to memorize the training data rather than generalize to unseen data. Overfitting occurs when the model becomes overly complex, capturing noise and irrelevant patterns in the training data.

One popular regularization technique is L2 regularization, also known as weight decay, which penalizes large weights in the model’s parameters during training. By adding a regularization term to the loss function, L2 regularization encourages the model to learn simpler, smoother decision boundaries, reducing the risk of overfitting.
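Here is a small sketch of how the L2 term enters training, assuming a penalty of the form `strength * sum(w^2)` added to the loss. The function names and the plain gradient descent update are illustrative choices, not a specific framework’s API.

```python
def l2_penalty(weights, strength):
    """Regularization term added to the loss: strength * sum of w squared."""
    return strength * sum(w * w for w in weights)


def sgd_step(weights, data_grads, learning_rate, strength):
    """One gradient step on (data loss + L2 penalty).

    The penalty contributes 2 * strength * w to each weight's gradient,
    which pulls every weight toward zero on every update.
    """
    return [
        w - learning_rate * (g + 2.0 * strength * w)
        for w, g in zip(weights, data_grads)
    ]


# With a zero data gradient, the weight still shrinks: that is the "decay".
decayed = sgd_step([1.0], [0.0], learning_rate=0.1, strength=0.5)
```

Because large weights are penalized more heavily than small ones, the model is nudged toward solutions that spread influence across many modest weights rather than relying on a few extreme ones.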

Another effective regularization method is dropout, where randomly selected neurons are temporarily “dropped out” of the network during training. This forces the model to learn redundant representations, making it more robust to noise and variations in the data.
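A minimal sketch of dropout, using the common "inverted" variant in which surviving activations are scaled up during training so that nothing needs to change at inference time. The function signature is an assumption for illustration.

```python
import random


def dropout(activations, rate, rng, training=True):
    """Randomly zero a fraction `rate` of activations during training.

    Survivors are divided by the keep probability so the expected value
    of each activation stays the same.
    """
    if not training or rate == 0.0:
        return list(activations)
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


rng = random.Random(0)  # seeded only to make the example repeatable
hidden = [1.0] * 1000
dropped = dropout(hidden, rate=0.5, rng=rng)
```

With a rate of 0.5, roughly half of the entries in `dropped` are zero and the rest are 2.0. At inference time, calling the function with `training=False` returns the activations unchanged.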

Techniques like early stopping and model ensembling can also help reduce overfitting. Early stopping involves monitoring the model’s performance on a validation set and stopping training when that performance stops improving, thus preventing overfitting to the training data. Ensembling combines the predictions of several models so that their individual errors partly cancel out.
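Early stopping is mostly bookkeeping, as the sketch below suggests. It assumes one validation loss is recorded per epoch and uses a hypothetical `patience` parameter: the number of consecutive epochs without improvement that are tolerated before training halts.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return the index of the best epoch seen before training stops."""
    best_loss = float("inf")
    best_epoch = -1
    waited = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch, waited = loss, epoch, 0
        else:
            waited += 1
            if waited >= patience:
                break  # no improvement for `patience` epochs in a row
    return best_epoch


# Hypothetical validation losses, one per epoch.
history = [0.9, 0.7, 0.6, 0.65, 0.7, 0.5]
stop_at = early_stopping_epoch(history, patience=2)
```

With a patience of 2, training stops after the fifth epoch and keeps the weights from the third (index 2), never reaching the later improvement. A larger patience would have found it, which is the trade-off this setting controls.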

Industries Revolutionized by Deep Learning

Deep learning has ushered in a new era of innovation, transforming industries across the globe with its unparalleled capabilities. From healthcare to finance, transportation to entertainment, deep learning’s impact is felt far and wide, revolutionizing traditional practices and unlocking new possibilities.

Harnessing the power of neural networks and advanced algorithms enables organizations to reimagine processes, optimize operations, and deliver groundbreaking solutions to complex challenges.

We’ll now explore the profound influence of deep learning across various sectors, highlighting key advancements, transformative applications, and the potential for future growth. Find out what industries are being revolutionized by the extraordinary capabilities of deep learning technology.

1. Healthcare Sector

The healthcare sector stands at the forefront of deep learning innovation, leveraging advanced algorithms to revolutionize patient care, diagnosis, and treatment. Deep learning models have demonstrated remarkable efficacy in medical imaging analysis, enabling early detection and accurate diagnosis of various conditions, including cancer, cardiovascular diseases, and neurological disorders.

One of the most significant advancements in medical imaging has been the application of convolutional neural networks (CNNs) to tasks such as MRI and CT image interpretation. These models can detect subtle abnormalities with high accuracy, assisting radiologists in providing timely and precise diagnoses.

Deep learning models are also being employed in pathology analysis, where they can analyze histopathological images to identify cancerous cells and tissue structures. This technology has the potential to improve cancer diagnosis rates and treatment outcomes by enabling faster and more accurate assessments.

In addition to diagnostic imaging, deep learning is transforming healthcare through predictive analytics and personalized medicine. By analyzing electronic health records and genetic data, deep learning models can predict patient outcomes, identify at-risk individuals, and tailor treatment plans to individual patient needs.

Deep learning algorithms are facilitating drug discovery and development by analyzing molecular structures, predicting drug interactions, and identifying potential therapeutic targets. This has the potential to accelerate the drug development process and bring new treatments to market more quickly.

The integration of deep learning technology into the healthcare sector holds immense promise for improving patient outcomes, reducing healthcare costs, and advancing medical research. As these technologies continue to evolve and mature, we can expect to see even greater advancements in disease prevention, diagnosis, and treatment, ultimately leading to a healthier and more prosperous society.

2. Finance and Investment Industry

Deep learning is revolutionizing the finance and investment industry by offering advanced analytical tools and predictive capabilities that enable more informed decision-making, risk management, and investment strategies. One significant application of deep learning in finance is in algorithmic trading, where complex neural networks analyze vast amounts of market data to identify patterns and trends, execute trades, and optimize portfolio performance in real-time.

These algorithms can process diverse data sources, including market prices, news sentiment, and macroeconomic indicators, to identify profitable trading opportunities and mitigate risks. Additionally, deep learning models can learn from historical market data to develop predictive models for asset price movements, helping traders anticipate market fluctuations and make data-driven investment decisions.

Deep learning is also transforming other areas of the finance industry, such as fraud detection and credit risk assessment. Deep learning models can analyze transaction data to detect anomalous patterns indicative of fraudulent activity, enabling financial institutions to prevent fraudulent transactions and protect customers’ assets.

Deep learning algorithms are being used to assess credit risk by analyzing borrowers’ financial data, credit history, and other relevant factors. These models can provide more accurate assessments of creditworthiness, enabling lenders to make better-informed lending decisions and reduce the risk of default.

Deep learning is facilitating the development of robo-advisors, automated investment platforms that use advanced algorithms to provide personalized investment advice and portfolio management services to investors. These platforms can analyze investors’ financial goals, risk tolerance, and market conditions to recommend suitable investment strategies and optimize portfolio performance.

These technologies are reshaping the finance and investment industry by enhancing efficiency, improving decision-making processes, and unlocking new opportunities for innovation and growth. As they continue to evolve, we can expect to see further advancements that drive greater efficiency, transparency, and accessibility in financial markets.

3. Autonomous Vehicles Development

Deep learning is the foundational technology driving the development of autonomous vehicles, revolutionizing the way these vehicles perceive, navigate, and interact with the world around them. Deep learning algorithms enable autonomous vehicles to interpret sensor data, make real-time decisions, and navigate complex environments with unparalleled accuracy and efficiency.

One of the key applications of deep learning in autonomous vehicles is in perception systems, where convolutional neural networks (CNNs) analyze sensor data from cameras, LiDAR, and radar to detect and classify objects in the vehicle’s surroundings.

LiDAR stands for Light Detection and Ranging. It’s a remote sensing technology that uses laser pulses to measure distances to objects or surfaces. LiDAR systems emit laser beams towards a target, and the time it takes for the laser pulses to reflect back to the sensor is used to calculate the distance to the object. By measuring the time delay and the angle of the reflected light, LiDAR systems can generate highly accurate 3D maps of the surrounding environment. LiDAR is commonly used in various applications, including topographic mapping, forestry, urban planning, archaeology, and most notably, in autonomous vehicles for precise object detection and mapping of the vehicle’s surroundings.
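The time-of-flight calculation described above comes down to one formula: distance equals the speed of light multiplied by the round-trip time, divided by two. The sketch below adds a conversion from range and beam angles to a 3D point; the axis convention (x forward, y left, z up) is an assumption for illustration and differs between sensor vendors.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def lidar_distance_m(round_trip_seconds):
    """Distance to the target; halved because the pulse travels out and back."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


def lidar_point(round_trip_seconds, azimuth_rad, elevation_rad):
    """Convert one return (time delay plus beam angles) to x, y, z in meters."""
    distance = lidar_distance_m(round_trip_seconds)
    horizontal = distance * math.cos(elevation_rad)
    return (
        horizontal * math.cos(azimuth_rad),
        horizontal * math.sin(azimuth_rad),
        distance * math.sin(elevation_rad),
    )


# A pulse that returns after 200 nanoseconds hit something about 30 m away.
distance = lidar_distance_m(200e-9)
```

Repeating `lidar_point` for every pulse in a sweep produces the point cloud from which the 3D map of the surroundings is built.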

These models can identify pedestrians, vehicles, road signs, and other obstacles, enabling the vehicle to make informed decisions about its path and speed.

Deep learning models are also used for semantic segmentation, which assigns a class label to every pixel in the scene and thereby divides it into meaningful regions. This allows autonomous vehicles to understand the layout of the environment and differentiate between various objects and road elements, enhancing their ability to navigate safely and efficiently.
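The final step of a segmentation model can be sketched in a few lines: given a score per class for each pixel, pick the highest-scoring class. The class names and the scores below are hypothetical; in a real system the scores would come from a trained network.

```python
CLASS_NAMES = ["road", "vehicle", "pedestrian"]  # hypothetical label set


def segment(class_scores):
    """Map each pixel's list of class scores to the index of the best class."""
    return [
        [pixel.index(max(pixel)) for pixel in row]
        for row in class_scores
    ]


# Hypothetical 2x2 image: each pixel holds one score per class.
scores = [
    [[0.90, 0.05, 0.05], [0.2, 0.7, 0.1]],
    [[0.80, 0.10, 0.10], [0.1, 0.2, 0.7]],
]
label_map = segment(scores)
named = [[CLASS_NAMES[index] for index in row] for row in label_map]
```

The result is a label map with the same height and width as the input image, which downstream planning components can use to tell drivable road apart from obstacles.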

Deep learning also plays a crucial role in decision-making and planning for autonomous vehicles. Reinforcement learning algorithms enable vehicles to learn optimal driving policies through trial and error, allowing them to navigate complex traffic scenarios and adapt to changing road conditions in real-time.
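To give a flavor of learning by trial and error, here is the classic tabular Q-learning update in plain Python. It is a deliberately simplified stand-in: production driving systems use deep networks rather than a lookup table, and the states, actions, and rewards below are hypothetical.

```python
def q_update(q_table, state, action, reward, next_state,
             alpha=0.1, gamma=0.9):
    """Move Q(state, action) toward reward + discounted best future value."""
    best_next = max(q_table[next_state].values())
    target = reward + gamma * best_next
    q_table[state][action] += alpha * (target - q_table[state][action])
    return q_table[state][action]


# Hypothetical two-state driving scenario.
q_table = {
    "approaching_light": {"keep_speed": 0.0, "brake": 0.0},
    "clear_road": {"keep_speed": 1.0, "brake": 0.0},
}
updated = q_update(
    q_table, "approaching_light", "keep_speed",
    reward=0.5, next_state="clear_road",
)
```

Repeated over many simulated episodes, updates like this one gradually raise the estimated value of actions that lead to good outcomes and lower the value of those that do not.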

In localization and mapping systems, deep learning models fuse sensor data to estimate the vehicle’s position and build high-definition maps of the environment. These maps provide crucial information for route planning and navigation, enabling the vehicle to follow predefined paths and avoid collisions with obstacles.

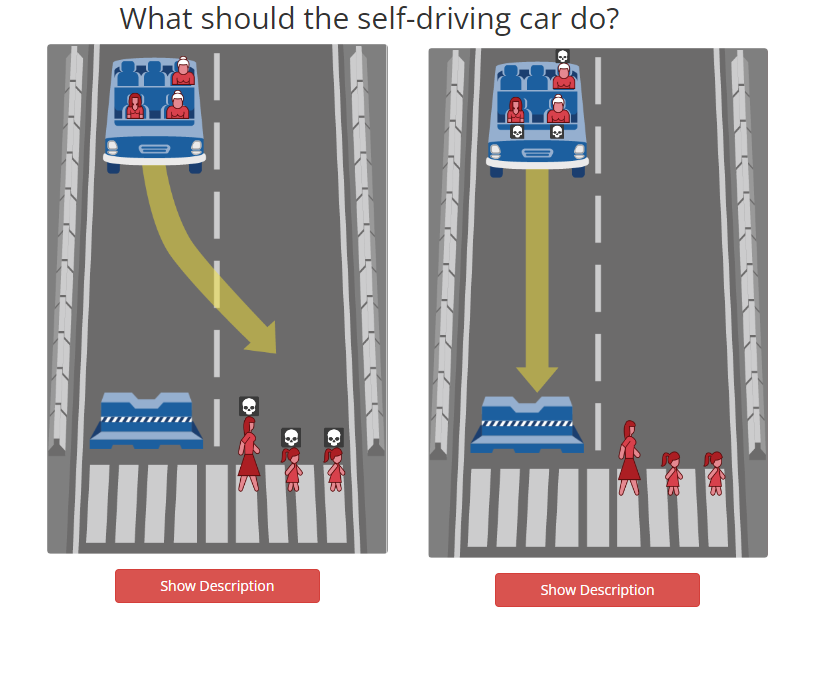

Deep learning technology is accelerating the development of autonomous vehicles by providing advanced perception, decision-making, and navigation capabilities. Platforms like Moralmachine.net use simulations to engage users in exploring the ethical dilemmas faced by autonomous vehicles, sparking valuable discussions about AI ethics and decision-making. As technologies continue to evolve, we can expect to see further advancements in autonomous vehicle technology, ultimately leading to safer, more efficient, and more accessible transportation systems for people around the world.

Challenges and Limitations in Deep Learning Applications

Despite its transformative potential, deep learning encounters challenges and limitations in real-world applications. From training complexities to ethical considerations, navigating these hurdles is vital for harnessing the full power of deep learning. This section explores key challenges and offers insights into overcoming them effectively for successful deployment.

Training Challenges and Solutions

Training deep learning models presents several challenges, including the need for large amounts of labeled data, computational resources, and time-consuming optimization processes. One common challenge is the scarcity of labeled data, particularly in domains where manual annotation is expensive or impractical. This can hinder model performance and generalization capabilities, leading to overfitting or poor accuracy on unseen data.

To address this challenge, techniques such as transfer learning and data augmentation can be employed. Transfer learning allows models to leverage knowledge learned from pre-trained models on large datasets and fine-tune them for specific tasks with limited labeled data. Data augmentation involves generating synthetic data samples by applying transformations such as rotation, scaling, and cropping to existing data, thereby increasing the diversity of the training dataset.

Another challenge is the computational resources required for training deep learning models, particularly for large-scale architectures and datasets. Training deep neural networks often demands high-performance computing resources, including GPUs or TPUs, and can be prohibitively expensive or time-consuming for some organizations.

To mitigate this challenge, techniques such as model parallelism, distributed training, and cloud-based solutions can be employed to distribute the computational workload across multiple devices or servers, enabling faster training times and scalability.

Computational Challenges in Large-Scale Projects

Large-scale deep learning projects often encounter significant computational challenges due to the complexity and scale of the models, as well as the size of the datasets involved. One major challenge is the sheer computational cost of training deep neural networks, particularly for architectures with millions or even billions of parameters. Training such models requires extensive computational resources, including high-performance GPUs or TPUs, as well as large amounts of memory and storage capacity.

Scalability also becomes a concern as projects grow to process increasingly larger datasets and train more complex models. Distributed training techniques, such as data parallelism and model parallelism, can help distribute the computational workload across multiple devices or servers, enabling faster training times and improved scalability.
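The idea behind data parallelism can be shown without any cluster. In the sketch below, each simulated "worker" computes a gradient on its own shard of the batch and the results are averaged. The one-parameter linear model and the requirement that the batch splits evenly are simplifying assumptions.

```python
def mse_gradient(weight, batch):
    """Gradient of mean squared error for the model y = weight * x."""
    return sum(2.0 * (weight * x - y) * x for x, y in batch) / len(batch)


def data_parallel_gradient(weight, batch, num_workers):
    """Split the batch into equal shards, then average per-shard gradients."""
    if len(batch) % num_workers != 0:
        raise ValueError("this sketch assumes equally sized shards")
    shard_size = len(batch) // num_workers
    shards = [
        batch[i * shard_size:(i + 1) * shard_size]
        for i in range(num_workers)
    ]
    # In a real system each shard would be processed on a separate device.
    worker_grads = [mse_gradient(weight, shard) for shard in shards]
    return sum(worker_grads) / num_workers


# Hypothetical data generated by y = 2x.
batch = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]
single_device = mse_gradient(1.0, batch)
two_workers = data_parallel_gradient(1.0, batch, num_workers=2)
```

With equal shards, the averaged gradient matches the single-device gradient, so the model takes the same step while each worker handles only part of the data. Model parallelism, by contrast, splits the model itself across devices.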

Managing and processing large-scale datasets poses additional computational challenges, particularly in terms of data storage, preprocessing, and feature extraction. Handling massive volumes of data efficiently requires robust data management systems and scalable infrastructure capable of processing and analyzing data in parallel.

To address these challenges, organizations must invest in scalable computing infrastructure, distributed computing frameworks, and efficient data processing pipelines. Cloud-based solutions, such as AWS, Google Cloud, and Microsoft Azure, offer scalable computing resources and data storage solutions tailored to the needs of large-scale deep learning projects, enabling organizations to overcome computational barriers and unlock the full potential of deep learning technology.

Ethical Considerations in Development

As deep learning technology continues to advance and permeate various aspects of society, it is imperative to address the ethical implications and considerations associated with its development and deployment. One significant ethical concern is the potential for algorithmic bias, where models learn and perpetuate biases present in the training data, leading to unfair or discriminatory outcomes. This can have serious consequences, particularly in high-stakes applications such as healthcare, finance, and criminal justice, where decisions impact individuals’ lives.

Algorithmic bias can be exemplified by cases like Amazon’s Rekognition, where facial recognition software showed higher error rates for darker-skinned faces, a result attributed to biased training data. Similar concerns were raised about Apple Card’s algorithm, which allegedly discriminated against women by offering them lower credit limits than men with similar financial profiles. Apple denied any intentional bias but later reviewed and adjusted the algorithm.

Another case involved Wells Fargo, where an investigation in 2020 revealed that its auto loan pricing algorithm charged Black and Latino borrowers higher interest rates than white borrowers with similar creditworthiness. The bank attributed the issue to flaws in the model and agreed to a settlement.

The lack of transparency and interpretability in deep learning models poses challenges for accountability and trustworthiness. Complex neural networks are often regarded as “black boxes,” making it difficult to understand how they arrive at their decisions and evaluate their fairness and reliability.

Privacy concerns arise as deep learning models increasingly rely on vast amounts of personal data to make predictions and recommendations. Ensuring the privacy and security of sensitive data is crucial to maintaining trust and safeguarding individuals’ rights.

Take COMPAS, a risk assessment tool used in some US courts to predict a defendant’s likelihood of re-offending. COMPAS is a proprietary scoring tool and is not generally described as deep learning, but it illustrates the same problem. In 2016, ProPublica’s investigation found racial bias in the algorithm’s predictions, wrongly classifying Black defendants as high-risk at a higher rate than white defendants. The lack of transparency in the algorithm made it difficult to challenge these biased outcomes.

Similarly, in 2018, a major data privacy scandal erupted when it was revealed that Cambridge Analytica, a political consulting firm, improperly obtained Facebook data from millions of users without their knowledge. This data was allegedly used to build targeted political advertising profiles, raising concerns about user privacy and the misuse of personal information by data-driven algorithms.

To mitigate these issues, proactive measures are essential. Developers must rigorously evaluate training data for biases and employ techniques like bias detection algorithms and fairness-aware training methods. Enhancing model interpretability through introspection and feature analysis fosters trust and accountability, crucial for ethical deep learning deployment.
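One simple form of bias detection is to compare error rates across groups. The sketch below computes the false positive rate per group, the kind of disparity highlighted in the COMPAS discussion. The data is synthetic and the function names are illustrative; a real audit would use far more data and several complementary metrics.

```python
def false_positive_rate(predictions, labels):
    """Share of truly negative cases (label 0) that were flagged as 1."""
    flagged = [p for p, y in zip(predictions, labels) if y == 0]
    return sum(flagged) / len(flagged) if flagged else 0.0


def fpr_by_group(predictions, labels, groups):
    """False positive rate computed separately for each group."""
    rates = {}
    for group in sorted(set(groups)):
        members = [i for i, g in enumerate(groups) if g == group]
        rates[group] = false_positive_rate(
            [predictions[i] for i in members],
            [labels[i] for i in members],
        )
    return rates


# Synthetic example: 1 = predicted or actual high risk, 0 = low risk.
predictions = [1, 0, 1, 0, 0, 0, 1, 0]
labels = [0, 0, 1, 0, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
rates = fpr_by_group(predictions, labels, groups)
gap = max(rates.values()) - min(rates.values())
```

Here group A has a false positive rate of one in three while group B has none, even though both groups contain the same number of truly high-risk cases. A gap like this is a signal to revisit the training data and the model before deployment.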

Recent Advancements and Emerging Trends in Deep Learning

Recent advancements in deep learning have propelled the field to new heights, driving innovation and unlocking unprecedented capabilities across diverse applications. From breakthroughs in natural language processing to the rise of generative adversarial networks (GANs) and the widespread adoption of transfer learning, the landscape of deep learning is evolving rapidly. In this section, we explore the latest trends and emerging technologies shaping the future of deep learning.

1. Natural Language Processing

Recent advancements in natural language processing (NLP) have transformed the way computers understand and generate human language, opening up new possibilities for communication, information retrieval, and language-based tasks. One notable advancement is the development of transformer-based models, such as BERT (Bidirectional Encoder Representations from Transformers) and GPT (Generative Pre-trained Transformer), which have achieved remarkable performance on a wide range of NLP tasks.

These models leverage self-attention mechanisms to capture long-range dependencies and contextual information within text data, enabling them to generate more coherent and contextually relevant responses. Additionally, transfer learning approaches have enabled researchers to pre-train large language models on vast amounts of text data and fine-tune them for specific tasks with minimal additional training data, significantly reducing the need for task-specific labeled datasets.

Advancements in NLP have led to the development of state-of-the-art language understanding and generation systems, including question answering systems, chatbots, language translation tools, and sentiment analysis models. These systems have applications across various domains, including customer service, healthcare, finance, and education, enhancing human-computer interaction and enabling more natural and intuitive communication experiences.

The breakthroughs in NLP represent a significant milestone in artificial intelligence research, paving the way for more sophisticated and human-like language processing capabilities that have the potential to revolutionize how we interact with technology and each other.

2. Generative Adversarial Networks (GANs)

Generative Adversarial Networks (GANs) have emerged as powerful tools for generating realistic and high-quality synthetic data across various domains. One prominent application of GANs is in computer vision, where they are used to generate photorealistic images, enhance low-resolution images, and perform image-to-image translation tasks. For example, in the field of art and design, GANs can be used to generate novel artworks, create realistic textures, and aid in the creative process.
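The "adversarial" part of a GAN comes from two networks with opposing objectives: a discriminator that tries to tell real samples from generated ones, and a generator that tries to fool it. The sketch below shows only the two loss functions, assuming the discriminator outputs a probability that a sample is real. The generator loss uses the widely used non-saturating form, and the networks themselves are omitted.

```python
import math


def discriminator_loss(real_scores, fake_scores):
    """Low when real samples score near 1 and generated samples near 0."""
    real_term = -sum(math.log(s) for s in real_scores) / len(real_scores)
    fake_term = -sum(math.log(1.0 - s) for s in fake_scores) / len(fake_scores)
    return real_term + fake_term


def generator_loss(fake_scores):
    """Low when the discriminator scores generated samples near 1."""
    return -sum(math.log(s) for s in fake_scores) / len(fake_scores)


# Hypothetical discriminator outputs (probability that a sample is real).
confident = discriminator_loss(real_scores=[0.9, 0.8], fake_scores=[0.1, 0.2])
fooled = discriminator_loss(real_scores=[0.5, 0.5], fake_scores=[0.5, 0.5])
```

When the discriminator can no longer do better than guessing (every score is 0.5), its loss settles at about 1.386, which is 2 times ln 2. Reaching that balance is the sign that generated samples have become hard to distinguish from real ones.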

In addition to computer vision, GANs find applications in natural language processing (NLP) tasks such as text generation, language translation, and style transfer. GANs can generate coherent and contextually relevant text samples, enabling applications such as chatbots, story generation, and dialogue systems. They can also be used to translate text between different languages or alter the style and tone of written content.

Moreover, GANs have applications in healthcare, where they can generate synthetic medical images for training diagnostic models, simulate biological processes for drug discovery, and generate patient data for privacy-preserving research. GANs also find applications in entertainment, virtual reality, and advertising, where they can generate immersive experiences, create lifelike characters, and generate personalized content for users.

GANs are versatile and powerful tools with diverse applications across numerous domains, enabling creativity, innovation, and advancement in various fields. As GAN technology continues to evolve, we can expect to see even more exciting and impactful applications in the future.

3. Transfer Learning

Transfer learning plays a crucial role in accelerating the development of deep learning applications by leveraging knowledge learned from pre-trained models and transferring it to new tasks or domains with limited labeled data. This approach allows practitioners to overcome the challenges of data scarcity and computational resources, enabling faster model development and deployment.

One key advantage of transfer learning is its ability to leverage large-scale pre-trained models, such as BERT or ResNet, that have been trained on vast amounts of data and can then be fine-tuned for specific tasks. By starting with a pre-trained model, practitioners can benefit from the learned representations and feature extraction capabilities, reducing the need for extensive training on task-specific datasets.
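The core pattern of transfer learning, keeping a pre-trained feature extractor frozen and training only a small task-specific "head", can be sketched without any framework. In the example below, `frozen_features` is a hypothetical stand-in for a pre-trained backbone such as ResNet, and the four labeled examples represent the limited data available for the new task.

```python
import math


def frozen_features(x):
    """Stand-in for a pre-trained backbone; it is never updated here."""
    return [x[0] + x[1], x[0] - x[1]]


def predict(head, x):
    """Probability of the positive class from the trainable head."""
    features = frozen_features(x)
    z = sum(w * f for w, f in zip(head["weights"], features)) + head["bias"]
    return 1.0 / (1.0 + math.exp(-z))


def fine_tune_head(data, epochs=200, learning_rate=0.5):
    """Train only the head with gradient descent on the log loss."""
    head = {"weights": [0.0, 0.0], "bias": 0.0}
    for _ in range(epochs):
        for x, y in data:
            error = predict(head, x) - y  # gradient of log loss w.r.t. z
            features = frozen_features(x)
            head["weights"] = [
                w - learning_rate * error * f
                for w, f in zip(head["weights"], features)
            ]
            head["bias"] -= learning_rate * error
    return head


# Hypothetical small labeled dataset for the new task.
data = [([1.0, 1.0], 1), ([2.0, 0.0], 1), ([-1.0, -1.0], 0), ([0.0, -2.0], 0)]
head = fine_tune_head(data)
```

Only three numbers are learned here (two weights and a bias), which is why a handful of examples is enough. The heavy lifting is assumed to have been done when the backbone was originally trained.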

Furthermore, transfer learning facilitates domain adaptation, where models trained on one domain can be adapted to perform well in a different but related domain. This is particularly useful in scenarios where labeled data in the target domain is limited or costly to obtain, as it allows practitioners to transfer knowledge learned from a source domain to improve performance in the target domain.

Additionally, transfer learning enables rapid prototyping and experimentation, as practitioners can quickly adapt pre-trained models to new tasks or domains with minimal additional training. This accelerates the development cycle and allows for more efficient exploration of different model architectures, hyperparameters, and training strategies.

Transfer learning is a powerful technique for accelerating the development of deep learning applications, enabling practitioners to leverage existing knowledge and resources to tackle new challenges effectively and efficiently. By harnessing the power of transfer learning, practitioners can accelerate innovation, reduce development time, and unlock new possibilities in deep learning research and applications.

Future Prospects of Deep Learning

The future prospects and implications of deep learning are both vast and profound. As this transformative technology continues to evolve, its impact on society, industry, and research will shape the way we live, work, and interact with the world around us. Let’s now explore the exciting possibilities ahead.

1. Quantum Computing

Quantum computing holds the potential to revolutionize deep learning and usher in a new era of computational power and efficiency. Unlike classical computers, which process information using bits (binary digits) that can be either 0 or 1, quantum computers use quantum bits, or qubits. Thanks to superposition, a qubit can exist in a combination of 0 and 1 at the same time, and thanks to entanglement, the states of multiple qubits can be linked together.
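For readers who prefer code to prose, the following is a small, classical simulation of a single qubit in plain Python. It is only a sketch: the tuple representation and the function names are assumptions chosen for illustration, and no real quantum hardware or quantum library is involved. It shows how a qubit that starts in the state 0 can be put into an equal superposition, where measuring it would give 0 or 1 with roughly equal probability.

```python
import math


def hadamard(state):
    """Apply the Hadamard gate to a single-qubit state (amp0, amp1)."""
    amp0, amp1 = state
    scale = 1 / math.sqrt(2)
    return (scale * (amp0 + amp1), scale * (amp0 - amp1))


def measurement_probabilities(state):
    """Born rule: each outcome's probability is its squared amplitude magnitude."""
    return tuple(abs(amp) ** 2 for amp in state)


zero = (1.0, 0.0)        # the qubit is definitely 0, like a classical bit
plus = hadamard(zero)    # equal superposition of 0 and 1
probs = measurement_probabilities(plus)
print(probs)             # both entries are approximately 0.5
```

Simulating qubits this way becomes expensive quickly, since the number of amplitudes doubles with every qubit added, which hints at why dedicated quantum hardware is of interest.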

These properties could allow quantum computers to speed up certain classes of calculations, which may in turn accelerate deep learning tasks such as training large-scale neural networks and solving complex optimization problems. Quantum computers have the potential to tackle computational challenges that are currently intractable for classical computers, unlocking new frontiers in artificial intelligence research and applications.

Quantum computing could enhance the capabilities of existing deep learning algorithms and architectures, enabling more efficient training processes, faster convergence rates, and improved model performance. Approaches such as quantum annealing, along with quantum-inspired optimization algorithms that run on classical hardware, have already shown promise on combinatorial optimization problems, which also arise in deep learning applications.

The potential impact of quantum computing on deep learning is profound, promising to revolutionize the field and enable breakthroughs in AI research, technology, and innovation. As quantum computing continues to advance, we can expect to see transformative changes that will shape the future of deep learning and drive unprecedented progress in artificial intelligence.

2. Ethical and Regulatory Frameworks

As deep learning technology continues to advance and permeate various aspects of society, ethical and regulatory frameworks are evolving to address the unique challenges and implications posed by AI-driven systems. Initially, ethical considerations focused on principles such as fairness, transparency, accountability, and privacy, aiming to ensure that AI systems uphold fundamental human values and rights.

However, as AI technologies become more sophisticated and pervasive, there is growing recognition of the need for comprehensive regulatory frameworks to govern their development, deployment, and use. Governments, industry organizations, and international bodies are increasingly enacting regulations and guidelines to address concerns related to data privacy, algorithmic bias, safety, security, and accountability.

For example, the European Union’s General Data Protection Regulation (GDPR) establishes strict rules for the collection, processing, and storage of personal data, with provisions for transparency, consent, and data protection rights. Similarly, initiatives such as the Montréal Declaration for a Responsible Development of Artificial Intelligence and the IEEE’s Ethically Aligned Design provide ethical guidelines and principles for the responsible development and deployment of AI technologies.

Moving forward, the evolution of ethical and regulatory frameworks will play a crucial role in shaping the responsible and ethical use of deep learning and AI technologies. By promoting transparency, accountability, and human-centric design principles, these frameworks aim to foster trust, mitigate risks, and ensure that AI-driven systems contribute to positive societal outcomes while minimizing harm.

3. Research and Development Phase

Numerous exciting applications are currently in the research and development phase, poised to transform industries and address pressing societal challenges using deep learning technology. One such area is healthcare, where researchers are exploring the potential of deep learning for personalized medicine, disease prediction, and drug discovery. Deep learning models are being developed to analyze medical imaging data, genomic sequences, and electronic health records, enabling more accurate diagnosis and treatment planning.

In the field of climate science, deep learning techniques are being applied to analyze vast amounts of climate data, improve weather forecasting models, and predict extreme weather events with greater precision. These advancements have the potential to enhance our understanding of climate dynamics, mitigate the impacts of climate change, and inform policy decisions.

In robotics and autonomous systems, researchers are leveraging deep learning to develop intelligent robots capable of navigating complex environments, interacting with humans, and performing a wide range of tasks autonomously. From household chores to industrial automation, these robotic systems have the potential to revolutionize various industries and improve efficiency, safety, and productivity.

In the field of materials science, deep learning is being used to accelerate the discovery and development of novel materials with desirable properties for applications such as energy storage, electronics, and healthcare. By analyzing chemical structures, synthesis processes, and existing measurements, deep learning models can predict material properties, optimize experimental designs, and speed up the pace of materials innovation.

In summary, these promising applications represent just a glimpse of the transformative potential of deep learning technology in research and development. As these projects progress from the lab to real-world applications, they could revolutionize industries, address societal challenges, and improve quality of life for people around the world.

Final Thoughts

As deep learning continues to evolve, there are endless opportunities for exploration and engagement in this exciting field. Whether you’re a researcher, developer, or industry professional, dive deeper into the world of deep learning to unlock new possibilities and contribute to advancements in AI technology.

Ready to dive into deep learning to enable your organization to build more impactful products? At Iterators, our expert team offers comprehensive resources and services to accelerate your journey. Our consulting services and cutting-edge tools support you at every step of your deep learning endeavors. Get started today and join the revolution in AI innovation!