What do you do when your Scala code compiles?

I’m the type of person who thinks about the darkest questions humanity has ever faced, such as Are humans born good or bad? or What will I have for my lunch? But there was one day when I thought: “What does scalac (Scala compiler) do during compiling time?” So, I asked one of my friends, Google, and I found out that my code goes through more than 25 phases during that time! I gotta say, I was intrigued.

Then, there was a strange bug with slickless. I hope you’re familiar with Slick — it’s one of the most popular libraries for databases in Scala, but it has one constraint. A case class that represents a table in the database cannot have more than 22 fields. Slickless provides the necessary implicits that enable us to use HList instead of case class representation. However, if a list of fields in HList is in the wrong order, or is incomplete, compiling time rapidly increases (more than 15 minutes). That was when I knew I had to know how it all works.

Scalac is difficult. And that’s especially true for me, as I’ve just finished physics, and started learning compiler techniques. Just imagine — you write some fancy functional Scala code that gets translated into JVM-not-so-cute bytecode (as shown below) and then it just works!

public final class Main {

public static void main(java.lang.String[]);

Code:

0: getstatic #16 // Field Main$.MODULE$:LMain$;

3: aload_0

4: invokevirtual #18 // Method Main$.main:([Ljava/lang/String;)

7: return

public static void delayedInit(scala.Function0<scala.runtime.BoxedUnit>);

Code:

0: getstatic #16 // Field Main$.MODULE$:LMain$;

3: aload_0

4: invokevirtual #22 // Method Main$.delayedInit:(Lscala/Function0;)V

7: return

public static java.lang.String[] args();

Code:

0: getstatic #16 // Field Main$.MODULE$:LMain$;

3: invokevirtual #26 // Method Main$.args:()[Ljava/lang/String;

6: areturn

public static void scala$App$_setter_$executionStart_$eq(long);

Code:

0: getstatic #16 // Field Main$.MODULE$:LMain$;

3: lload_0

4: invokevirtual #30 // Method Main$.scala$App$_setter_$executionStart_$eq:(J)V

7: return

public static long executionStart();

Code:

0: getstatic #16 // Field Main$.MODULE$:LMain$;

3: invokevirtual #34 // Method Main$.executionStart:()J

6: lreturn

public static void delayedEndpoint$Main$1();

Code:

0: getstatic #16 // Field Main$.MODULE$:LMain$;

3: invokevirtual #38 // Method Main$.delayedEndpoint$Main$1:()V

6: return

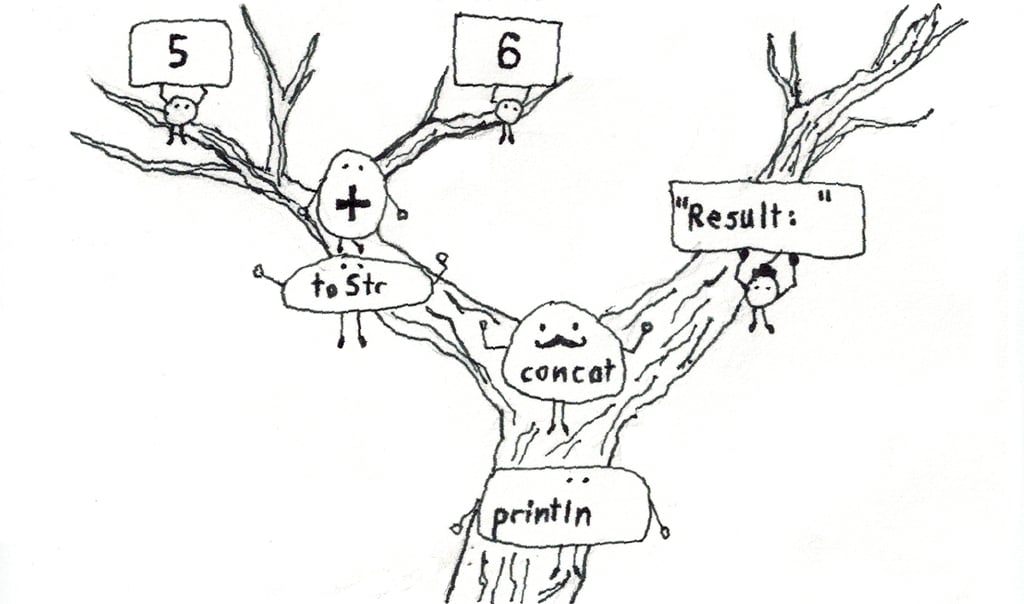

}First, I want to explain some of the abstractions used in the process.

val a = 5

val b = 6

println("Result: " + (a + b))An abstract syntax tree — also known as AST — is a representation of how your code is interpreted by the compiler. It is created by parser and lexer — some common compiler components. AST preserves all operations and values in your project. The easiest way to explain it is to show you examples:

And in Scala:

Expr(

Block(

List(

ValDef(Modifiers(), TermName("a"), TypeTree(), Literal(Constant(5))),

ValDef(Modifiers(), TermName("b"), TypeTree(), Literal(Constant(6)))

),

Apply(

Select(Ident(scala.Predef), TermName("println")),

List(

Apply(

Select(

Literal(Constant("Result: ")),

TermName("$plus")

),

List(

Apply(

Select(Ident(TermName("a")), TermName("$plus")),

List(Ident(TermName("b")))

)

)

)

)

)

)

)

Still not sure about AST? Click here for some more examples.

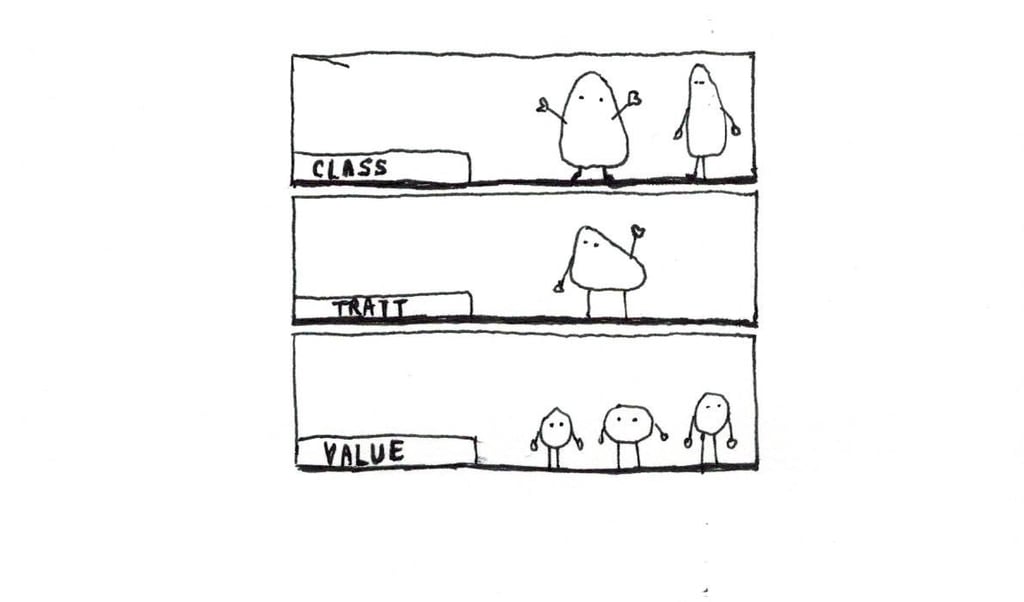

A symbols table is a data structure that has all values, classes, objects, and traits that you used in your project with additional info about their kind and owners. It enables the compiler to reach for them any time. In Scala, it can be seen by adding -Yshow-syms flag with the compiler. I will show you an example later.

JVM is a magic machine that translates .class files into a working program. Many convenient features are a part of JVM — for example JIT (special compiler used for optimizations) or garbage collector (memory manager).

How it actually works

Usually, compiler work is sectioned into three parts — front end, middle end, and back end. The front end creates an AST tree and modifies it on some basic level. The middle end does some platform-independent optimizations (tail calls), and the back end does optimizations for certain CPUs and generates assembly files. Scala compiler is organized somewhat differently, for instance CPU specific optimizations are delegated to JVM.

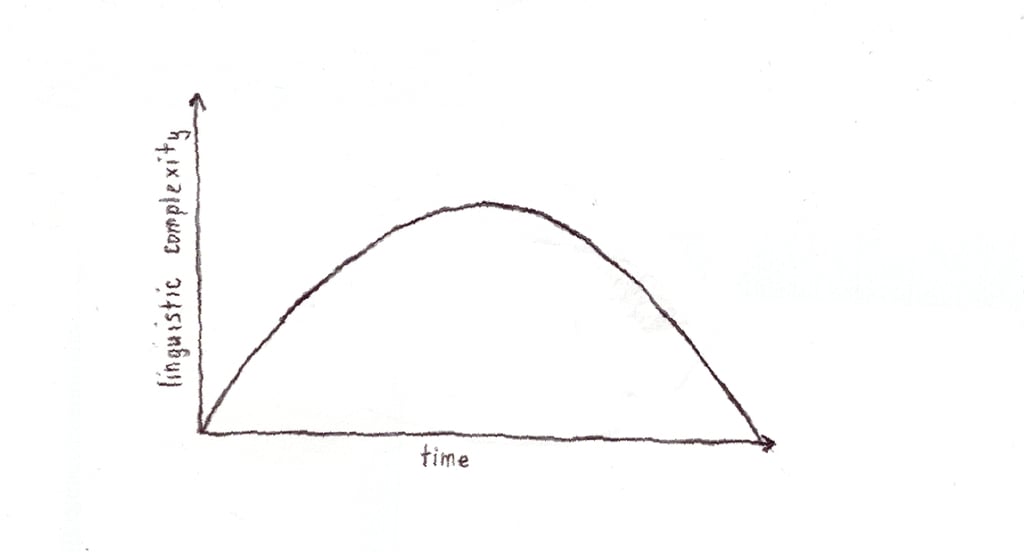

All 25 phases are connected in a pipeline. Each phase performs transforms your code. Linguistic complexity can be described by this graph:

In the beginning, compilation intermediate results become more complex as it is created and typed. After, it simplifies as the compiler removes advanced language features.

In the next part, I want to present some of the compiler phases in Scala — I will be using a prettier representation of AST trees provided by Scala to show you differences between each part.

Phases

Parser

The first phase creates non-typed AST using parser and scanner. This is the moment when syntax errors are thrown. Moreover, XMLs are translated and our precious functional code is desugared into simpler structures. For example:

for {

a2 <- a

b2 <- b } yield a2 + b2 val a: Int => Int = _ + 1

if(a) println("XD")

1 to 10

Is translated into:

a.flatMap(((a2) => b.map(((b2) => a2.$plus(b2)))));

val a: _root_.scala.Function1[Int, Int] = ((x$1) => x$1.$plus(1));

if (a) println("XD") else ();

1.to(10);

Namer, Typer, Package Objects

The three phases form one object in the compiler code as they have many mutual dependencies. At first, the namer creates a symbols table. In the next examples, there are two symbols tables: one from namer and the other from typer. The latter phase adds more information to the table about values inside objects.

object Add extends App {

val a = 5

def addSix(n: Int): Int = n + 6

val b = addSix(a)

println(s"Result: $b")

}

object Add@3#MOD

package @2#PK (final )

* class Object@32#CLS

* constructor Object@131

object Add@3#MOD

* object Add@3#MODC

* constructor Add@8240

* method addSix@8240#METH

* value n@8362#VAL

* value @8240#VAL

* value a@8240#GET ( )

* value a@8240#VAL (private )

* value b@8240#GET ( )

* value b@8240#VAL (private )

package @2#PK (final )

* package scala@2#PK (final)

* package scala@2#PKC (final)

* class Int@23#CLS (final abstract)

* method +@1110#METH

* method apply@746#METH (case )

* method println@1751#METH

* method s@744#METH

* object Predef@23#MOD

* object StringContext@23#MOD

* trait App@23#TRT (abstract)

The package objects phase deals with unwrapping package objects and putting them into ASTs, as shown in the picture.

As the official Scala Docs say, it is meat and potatoes. Mostly, it takes care of Scala’s rich type system, for example it:

- infers types,

- checks whether types match,

- searches for implicit arguments and adds them to trees,

- does implicit conversions,

- checks whether all type operations are allowed (for example type cannot be a subtype of itself),

- resolves overloading,

- type-checks parent references,

- checks type violations,

- searches for implicits ,

- expands macros,

- and creates additional methods for case classes (like apply or copy).

Pattern matching

This phase desugars pattern matching into a sequence of ifs and elses. For example:

k match {

case a :: Nil if a == 45 => "a"

case a if a.size < 10 => "b"

case _ => "c"

}

Is modified to:

case val x1: Seq = Main.this.k();

case7(){

if (x1.$isInstanceOf[scala.collection.immutable.::]())

{

val x2: scala.collection.immutable.:: = (x1.$asInstanceOf[scala.collection.immutable.::](): scala.collection.immutable.::);

{

val a: Nothing = x2.head().$asInstanceOf[Nothing]();

val p3: List = x2.tl$1();

if (immutable.this.Nil.==(p3))

if (a.==(scala.Int.box(45)))

matchEnd6("a")

else

case8()

else

case8()

}

}

else

case8()

};

case8(){

if (x1.size().<(10))

matchEnd6("b")

else

case9()

};

case9(){

matchEnd6("c")

};

matchEnd6(x: String){

x

}

Pickler

The pickler phase performs the serialization of classes into attributes that are used later during the creation of the bytecode.

Uncurry

Here currying and some of the functional syntactic sugar is removed:

def f(a: Int)(b: Int): Int = a + b

def f2(seq: Int*): Int = seq.sum

def f3(a: => Int): Int = a + 5

def f4: Int = 5

And is parsed onto:

def f(a: Int, b: Int): Int = a.+(b);

def f2(seq: Seq[Int]): Int = seq.sum[Int](math.this.Numeric.IntIsIntegral);

def f3(a: () => Int): Int = a.apply().+(5);

def f4(): Int = 5;

Tail calls

The tail calls phase optimizes tail recursion: in bytecode it is replaced with jump calls, so in bytecode it looks like a normal for loop in action.

Specialize

JVM has a very special way of dealing with generics. When you use List[A], in the end, you always get a List[Object]. In Java, it is forbidden to put a primitive type into Array — it has to be a class type. In Scala, it is very similar, even if an Int in Scala is an object. The main disadvantage of this solution is that objects take more memory than primitive types, so operations on lists are slower. To make them faster, it is possible to use specialize annotation — it forces compiler to use primitives and avoid type erasure. This phase deals with this case — for a more detailed description please visit this site.

Erasure and posterasure

When you use generics or value classes in your code, they are erased during this phase (type erasure is a JVM feature that was created with generics with Java 5 to enable backwards compatibility). The posterasure phase cleans up unnecessary code, does some optimizations, and unboxes value classes:

x.asInstanceOf[X] ==> x

(new B(v)).unbox ==> v

new B(v1) == new B(v2) ==> v1 == v2

Lambda lift, constructors, flatten

Until the final stage, there are some other phases, like lambda lift.

During this phase, a lambda expression is converted into a class, as seen in the example below.

def a(b: Int) = () => b + 21

def a(b: Int): Function0 = (new <$anon: Function0>(b): Function0);

@SerialVersionUID(value = 0) final class $anonfun$a$1 extends scala.runtime.AbstractFunction0$mcI$sp with Serializable {

def (b$1: Int): <$anon: Function0> = {

$anonfun$a$1.super.();

()

};

final def apply(): Int = $anonfun$a$1.this.apply$mcI$sp();

def apply$mcI$sp(): Int = $anonfun$a$1.this.b$1.+(21);

final def apply(): Object = scala.Int.box($anonfun$a$1.this.apply());

private[this] val b$1: Int = _

}

The Constructors phase is responsible for translating Scala constructors into JVM-friendly ones. It creates field definitions in a constructor. Later, flatten lifts inner classes to the top level.

JVM

In the Scala 2.12 compiler code, there is only one phase that performs back end actions, surprisingly, called JVM. In the beginning, it creates an array of primitive types that is demultiplexed by providing a mapping from their symbols to integers. Later on, ClassBTypes are created from symbols and a few steps later the bytecode is generated. At the end, there is some post-processing, like closure optimizations or eliminating ureachable code. To perform a transformation into the bytecode, Scala uses ASM library.

From one file containing the following:

object Main extends App

Three files with .class extension are created. The first represents the class, the second is a companion object, and the last one — delayed init. The first two files with corresponding names are a representation of the code; the last one enables our program to delay its init and read parameters for the main function.

The created bytecode is quite complicated. To fully see its possibilities, I encourage you to experiment on your own. All you have to do is compile your code and run the javap command with different flags.

Why so long?

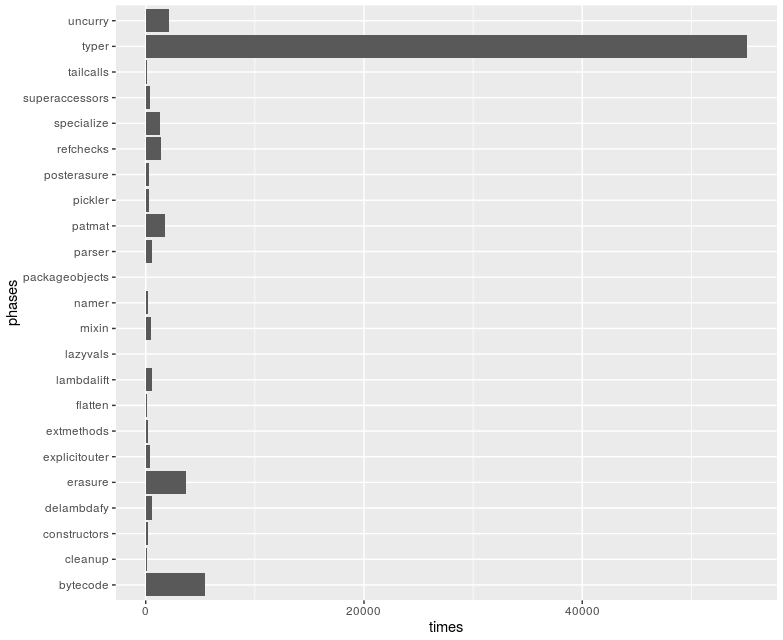

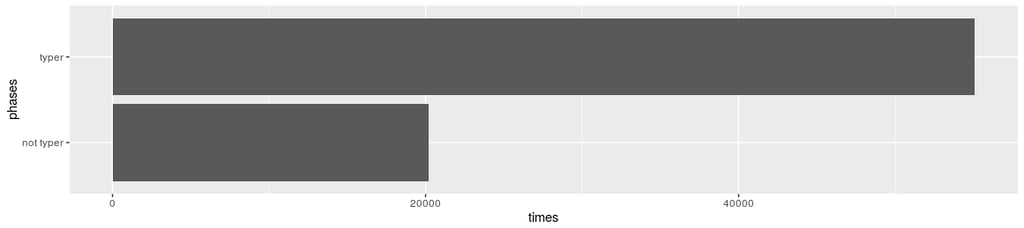

Now, you’re probably wondering why it actually takes so long to compile Scala code — well, the longest phase (not surprisingly) is typer. As I mentioned before, Scala has a rich, complicated type system that needs a lot of time to process. Furthermore, such libraries as Shapeless are based on implicits and macros that are also time consuming.

I compiled one of my projects with the –Xprint:all flag to present time differences between each phase:

Scalac’s bottlenecks seem to be implicit search and macro expansions. There are some things that can be done to minimize the problem. However, now I know that when I’m waiting impatiently for the results of compilation, compiler is probably stuck on the typer phase. Type system, in my opinion, is Scala’s best quality, and I think it is understandable that the phase takes so long.

Yesterday, I found out that the slickless bug was fixed, now at worst compiling time takes 3 minutes. They stopped using existential types.

9 Comments

Thank you for reading. I hope you enjoyed the article. Let’s start the discussion!

1. Which Scala Compiler Phase surprised you the most?

2. Has anyone experienced similar problems with other programming languages? If so, do you know what causes it?

We’d love to hear from you!

Hi. Nice write up. Just a quick note on the tail calls section:

“The tail calls phase optimizes tail recursion marked with tailrec annotation”

Actually, that optimization does not require the @tailrec annotation at all. The annotation purpose is for the compiler to let the developer know when a recursive method is not tail recursive. The optimization is done by the compiler regardless of the presence of the annotation.

Ah yes, you’re right, although there is no such guarantee in either SLS or the docs :-/ Good point!

> Scalac’s bottlenecks seem to be implicit search and macro expansions. There are some things that can be done to minimize the problem

A few months ago I wrote a blog post about how to profile and understand these bottlenecks, as well as finding ways to mitigate them without any changes to the compiler. If you haven’t read it yet, I recommend having a look at it 🙂

https://www.scala-lang.org/blog/2018/06/04/scalac-profiling.html

Terrific work. Thanks!

Very good work! The illustrations are funny! It allowed me to understand some aspects of the problematic scalac compilation times (in a very general sense).

Thank you!

Pretty nice post. I just stumbled upon your weblog and wanted to say

that I’ve truly loved surfing around your blog posts. After

all I’ll be subscribing to your feed and I am hoping you write once more very soon!

An outstanding share! I have just forwarded this onto a colleague who was doing a little research

on this. And he in fact bought me breakfast simply because I stumbled upon it for him…

lol. So allow me to reword this…. Thank YOU for

the meal!! But yeah, thanks for spending some time to talk about this

topic here on your site.

Very nice post, thanks 🙂